Data Center Networking

Build data center spine-and-leaf networks with solutions providing industry-leading throughput and scalability, an extensive routing stack, the open programmability of the Junos OS, and a broad set of EVPN-VXLAN and IP fabric capabilities.

Rethink data center operations and fabric management with Apstra Data Center Director. Automate the entire network lifecycle to simplify design and deployment and provide closed-loop validation. With Data Center Director, customers have achieved 90% faster time to delivery, 70% faster time to resolution, and 80% OpEx reduction.

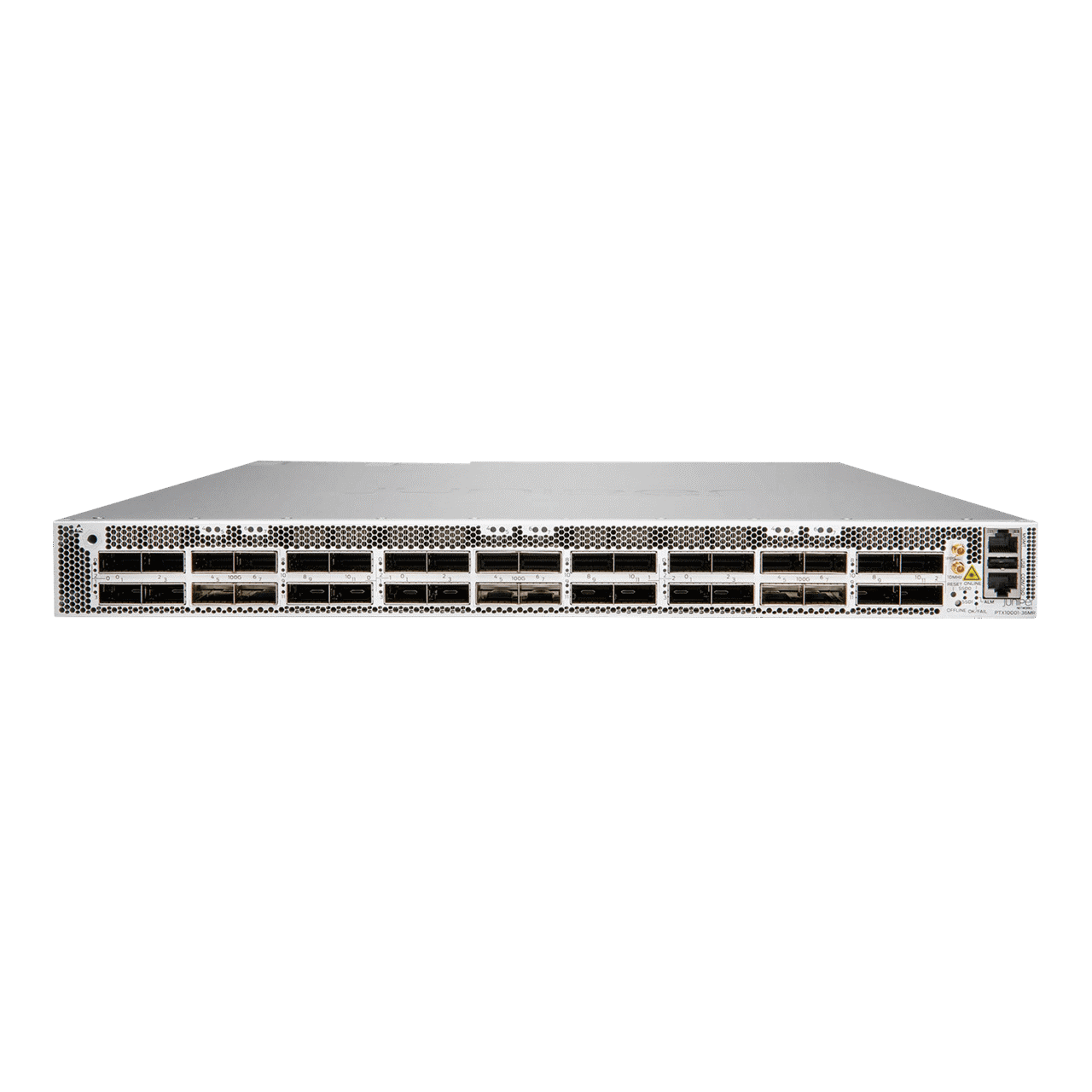

QFX5240

The QFX5240 line offers up to 800GbE interfaces to support AI Data Center Networking deployments with AI/ML workloads and other high-speed, high-density, spine-and-leaf IP fabrics where scalability, performance, and low latency are critical.

Use Cases: AI data center leaf/spine, data center fabric leaf/spine/super spine

Port Density:

Throughput: Up to 102.4 Tbps (bidirectional)

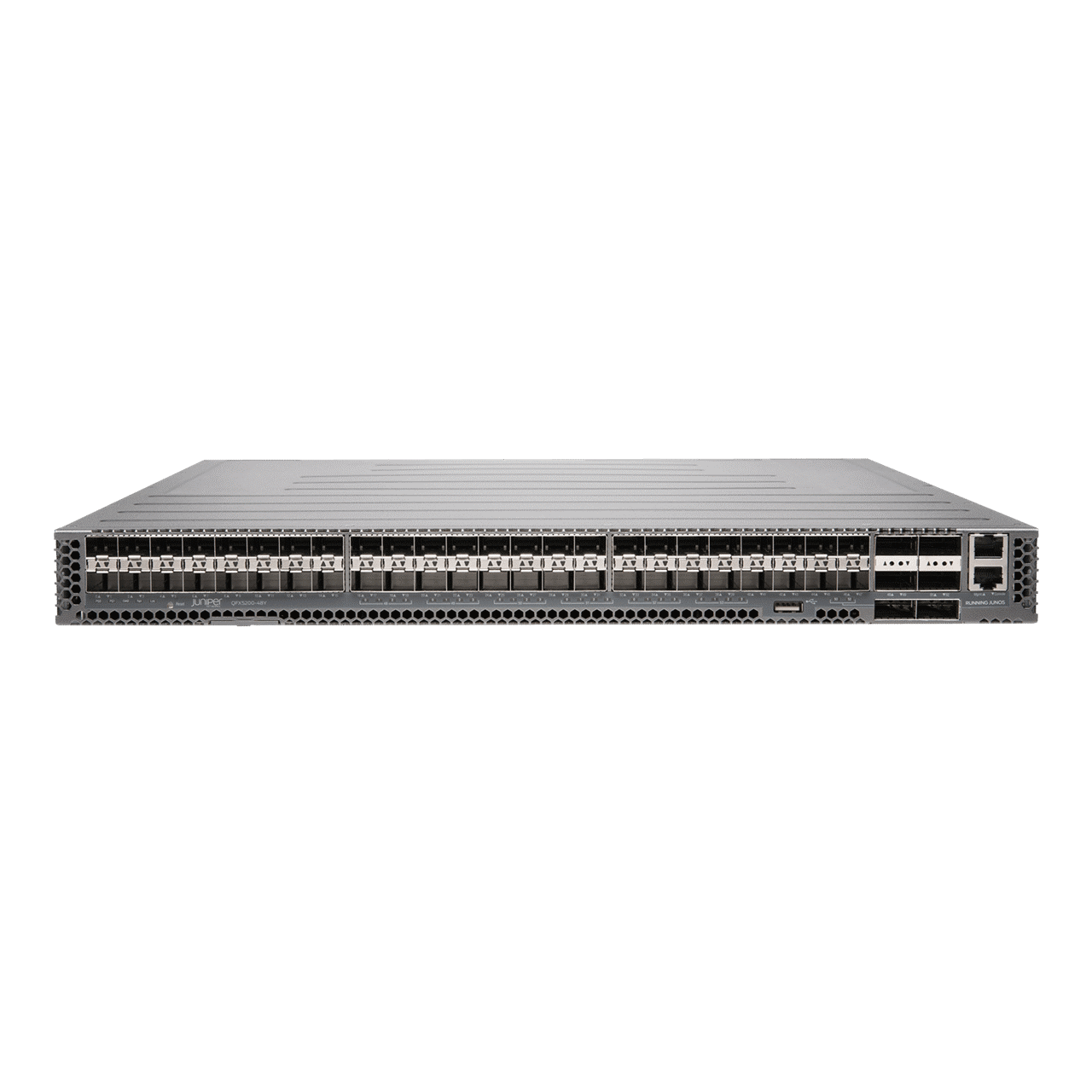

QFX5700

The QFX5700 line supports very large, dense, and fast 400GbE IP fabrics based on proven Internet-scale technology. With 10/25/40/50/100/200/400GbE interface options, the QFX5700 is an optimal choice for spine-and-leaf deployments in enterprise, high-performance computing, service provider, and cloud provider data centers.

Use Cases: Data Center Fabric Spine, EVPN-VXLAN Fabric, Data Center Interconnect (DCI) Border, Secure DCI, Multitier Campus, Campus Fabric

Port Density:

Throughput: Up to 25.6 Tbps (bidirectional)

QFX5230

The QFX5230 Switch offers up to 400GbE interfaces to support high-speed, high-density, spine-and-leaf IP fabrics. Prime use cases include AI data center networks with AI/ML workloads where scalability, performance, and low latency are critical.

Use Cases: Data center fabric spine, super spine (including AI data center networking), IP storage networking, and edge/data center interconnect (DCI)

Port Density:

Throughput: Up to 51.2 Tbps (bidirectional)

QFX5130

The QFX5130 line offers high-density, cost-optimized, 1-U, 400GbE and 100GbE fixed-configuration switches based on the Broadcom Trident 4 processor. It’s ideal for environments where cloud services are being added. With 10/25/40/100/400GbE interface options, the QFX5130 is an optimal choice for spine-and-leaf deployments in enterprise, service provider, and cloud provider environments.

Use Cases: Data Center Fabric Spine

Port Density:

ACX7100

The ACX7100, part of the ACX7000 family, delivers high density and performance in a compact, 1 U footprint. Ideal for service provider, large enterprise, wholesale, and data center applications, it helps operators deliver premium customer and user experiences.

| Specification | Details |
| --- | --- |
| Form factor | Compact 1 U, with 59.49-cm depth |
| Throughput | Up to 4.8 Tbps |
| Port density | ACX7100-48L: 48 x 10/25/50GbE, 6 x 400GbE; ACX7100-32C: 32 x 40/100GbE, 4 x 400GbE |
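
As a rough cross-check of the figures above, the listed port densities can be summed at their highest supported speeds. The short Python sketch below does that arithmetic; the helper name `aggregate_tbps` and the choice to count every port at its top speed are illustrative assumptions, not a statement of how the published throughput figure is derived.

```python
# Port groups copied from the ACX7100 port-density row.
# Each tuple is (port_count, max_speed_gbps). Counting every port at its
# highest listed speed is an assumption made for this illustration.
ACX7100_PORTS = {
    "ACX7100-48L": [(48, 50), (6, 400)],
    "ACX7100-32C": [(32, 100), (4, 400)],
}


def aggregate_tbps(port_groups):
    """Sum port_count * speed over all groups and convert Gbps to Tbps."""
    total_gbps = sum(count * speed for count, speed in port_groups)
    return total_gbps / 1000


for model, groups in ACX7100_PORTS.items():
    print(f"{model}: {aggregate_tbps(groups):.1f} Tbps")
```

Under these assumptions, both models sum to 4.8 Tbps, which lines up with the "Up to 4.8 Tbps" throughput entry.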

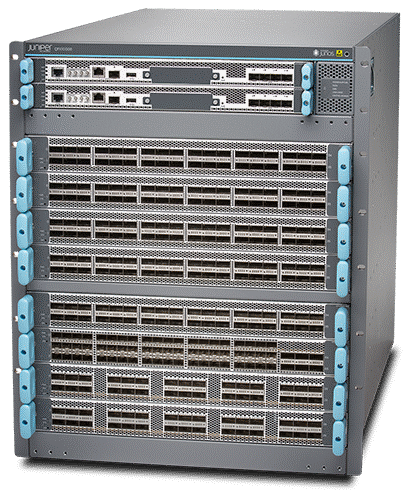

PTX10004, PTX10008, PTX10016

The modular PTX10004, PTX10008, and PTX10016 Packet Transport Routers directly address the massive bandwidth demands placed on networks today and in the foreseeable future. They bring ultra-high port density, native 400GE and 800GE inline MACsec, and latest-generation ASIC investment to the most demanding WAN and data center architectures.


PTX10003

The PTX10003 Packet Transport Router offers on-demand scalability for critical core and peering functions. With high-density 100GbE, 200GbE, and 400GbE ports, operators can meet high-volume demands with efficiency, programmability, and performance at scale.


QFX5120

The QFX5120 line offers 1/10/25/40/100GbE switches designed for data center, data center edge, data center interconnect, and campus deployments with requirements for low-latency Layer 2/Layer 3 features and advanced EVPN-VXLAN capabilities.

Use Case: Data Center Fabric Leaf/Spine, Campus Distribution/Core, applications requiring MACsec

Port Density:

Throughput: Up to 2.16/4/6.4 Tbps (bidirectional)

MACsec: AES-256 encryption on all ports (QFX5120-48YM)

QFX5110

QFX5110 10/40GbE switches offer flexible deployment options and rich automation features for data center and campus deployments that require low-latency Layer 2/Layer 3 features and advanced EVPN-VXLAN capabilities. The QFX5110 provides universal building blocks for industry-standard architectures such as spine-and-leaf fabrics.

Use Case: Data Center Fabric Leaf

Port Density:

Throughput: Up to 1.76/2.56 Tbps

PTX10001-36MR

The PTX10001-36MR is a high-capacity, space- and power-optimized routing and switching platform. It delivers 9.6 Tbps of throughput and 10.8 Tbps of I/O capacity in a 1 U, fixed form factor. Based on the Juniper Express 4 ASIC, the platform provides dense 100GbE and 400GbE connectivity for highly scalable routing and switching in cloud, service provider, and enterprise networks and data centers.


QFX5220

The QFX5220 line offers up to 400GbE interfaces for very large, dense, and fast standards-based fabrics based on proven internet-scale technology. QFX5220 switches are an optimal choice for spine-and-leaf data center fabric deployments as well as metro use cases.

Use Case: Data Center Fabric Spine

Port Density:

Throughput: Up to 25.6 Tbps (bidirectional)

QFX5210

The QFX5210 line offers line-rate, low-latency 10/25/40/100GbE switches for building large, standards-based fabrics. QFX5210 Switches are an optimal choice for spine-and-leaf data center fabric deployments as well as metro use cases.

Use Case: DC fabric leaf/spine

Port Density:

Throughput: Up to 12.8 Tbps (bidirectional)

QFX5200

The QFX5200 line offers line-rate, low-latency 10/25/40/50/100GbE switches for building large, standards-based fabrics. QFX5200 Switches are an optimal choice for spine-and-leaf fabric deployments in the data center as well as metro use cases.

Use Case: Data Center Fabric Leaf/Spine

Port Density:

Throughput: Up to 3.6/6.4 Tbps (bidirectional)

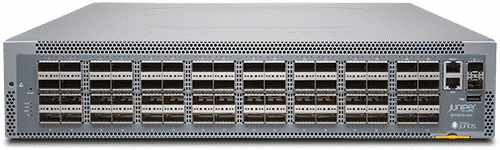

QFX10008 and QFX10016

QFX10008 and QFX10016 Switches support the most demanding data center, campus, and routing environments. With our custom silicon Q5 ASICs, deep buffers, and up to 96 Tbps throughput, these switches deliver flexibility and capacity for long-term investment protection.

Use Case: Data Center Fabric Spine

Chassis Options:

Port Density:

Throughput: Up to 96 Tbps (bidirectional)

CASE STUDY

Data Consolidation and Modernization Bolster T‑Systems’ IT Service Delivery

T‑Systems offers a wide range of digital services in 20 countries. The provider ran 45 global data centers and decided to consolidate and modernize them to simplify operations and meet new performance, availability, and scalability requirements.