Collapsed Spine Fabric Design and Implementation

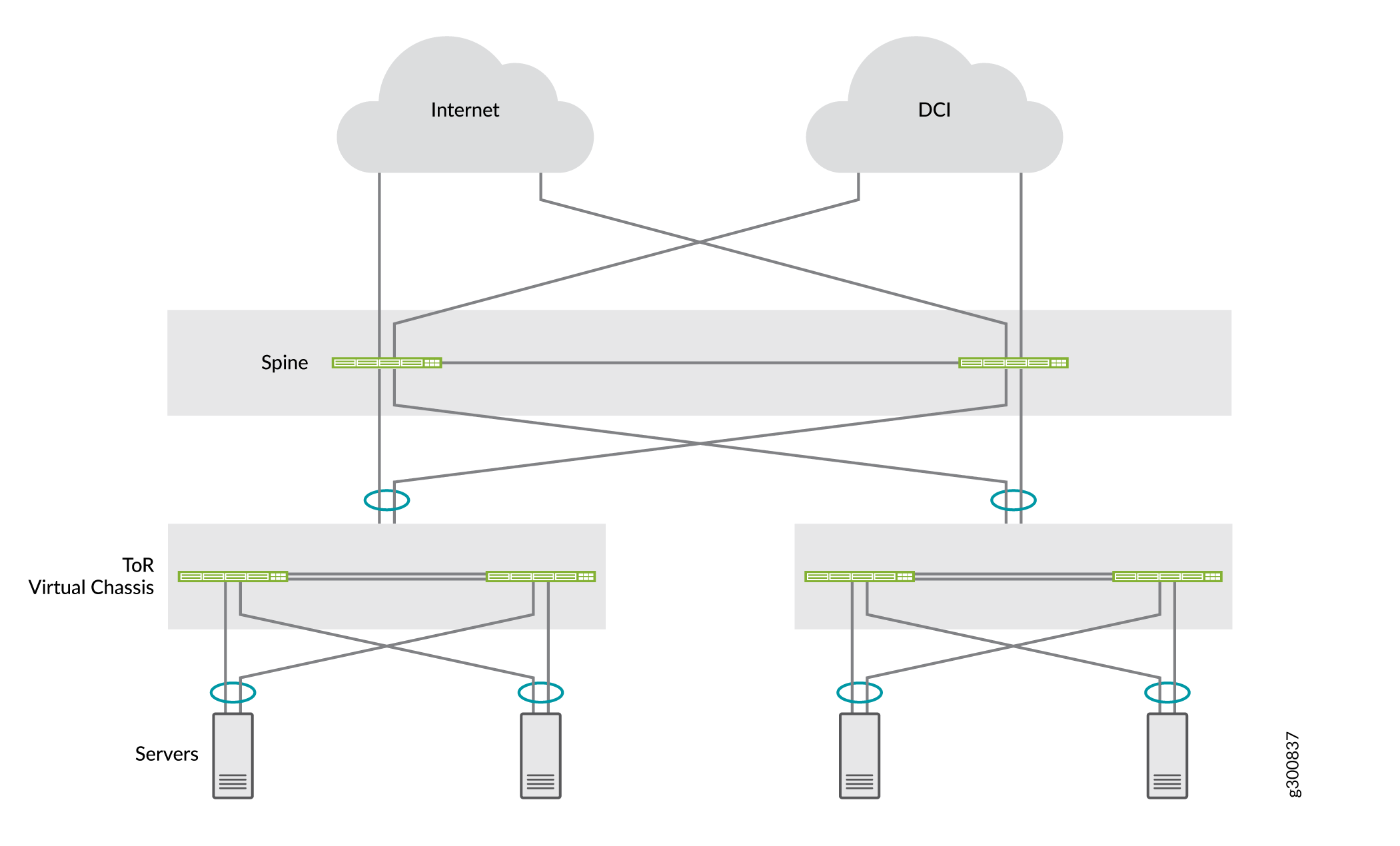

In collapsed spine fabrics, the core EVPN-VXLAN overlay functions are collapsed onto the spine layer only. There is no leaf layer; the spine devices can connect directly to existing top-of-rack (ToR) switches in the access layer, which might not support EVPN-VXLAN.

ToR switches can be multihomed to more than one spine device for access layer resiliency. The spine devices manage this multihoming using EVPN multihoming (also called ESI-LAG), the same way the leaf devices do in other EVPN-VXLAN reference architectures. (See Multihoming an Ethernet-Connected End System Design and Implementation for details.)

The spine devices also assume any border device roles for connectivity outside the data center.

Some common elements in collapsed spine architecture use cases include:

Collapsed spine fabric with spine devices connected back-to-back:

In this model, the spine devices are connected with point-to-point links. The spine devices establish BGP peering in the underlay and overlay over those links using their loopback addresses. See Figure 1.

Alternatively, you can integrate the collapsed spine core devices with a route reflector cluster in a super spine layer. Our reference architecture uses this model, which is explained later.

Data center locations connected with Data Center Interconnect (DCI):

The spine devices can perform border gateway functions to establish EVPN peering between data centers, including Layer 2 stretch and Layer 3 connectivity, as Figure 1 shows.

Standalone switches or Virtual Chassis in the access layer:

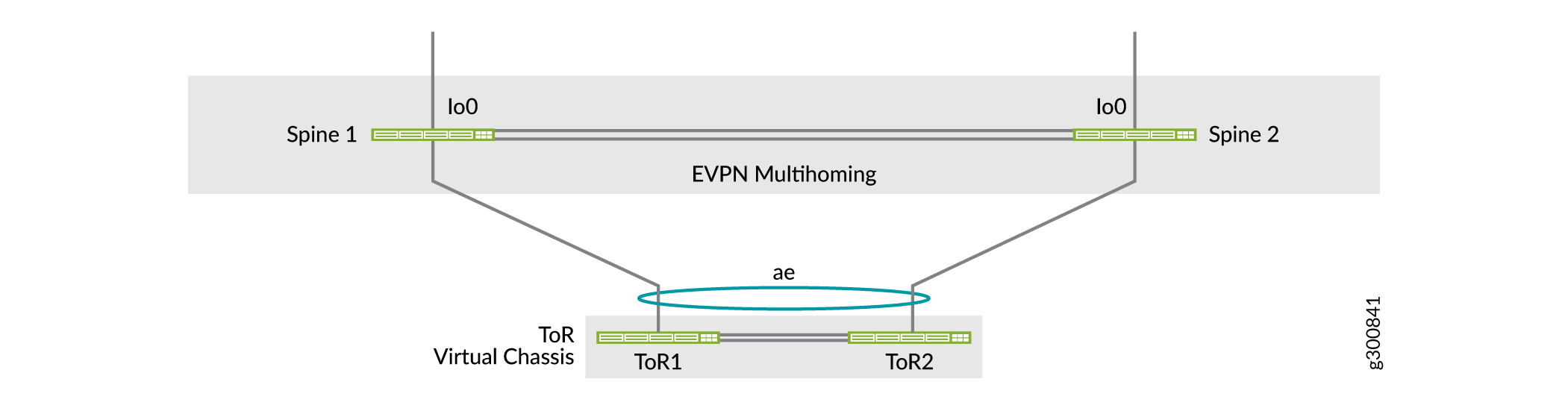

The ToR layer can contain standalone switches or Virtual Chassis multihomed to the collapsed spine devices. With Virtual Chassis, you can establish redundant links in the ESI-LAGs between the spine devices and different Virtual Chassis member switches to increase resiliency. See Figure 2.

Figure 1 shows a logical view of a collapsed spine data center with border connectivity, DCI between data centers, and Virtual Chassis in the ToR layer multihomed to the spine devices.

Figure 2 illustrates Virtual Chassis in the ToR layer multihomed to a back-to-back collapsed spine layer, where the spine devices link to different Virtual Chassis member switches to improve ESI-LAG resiliency.

Refer to Collapsed Spine with EVPN Multihoming, a network configuration example that describes a common collapsed spine use case with back-to-back spine devices. In that example, the ToR devices are Virtual Chassis that are multihomed to the collapsed spine devices. The example also shows how to configure additional security services using an SRX chassis cluster to protect inter-tenant traffic, and how to route inter-data center traffic through the SRX cluster as a DCI solution.

Another collapsed spine fabric model interconnects the spine devices through an IP transit layer: a route reflector cluster that you integrate with the collapsed spine core underlay and overlay networks. Our reference architecture uses this model, which the following sections describe.

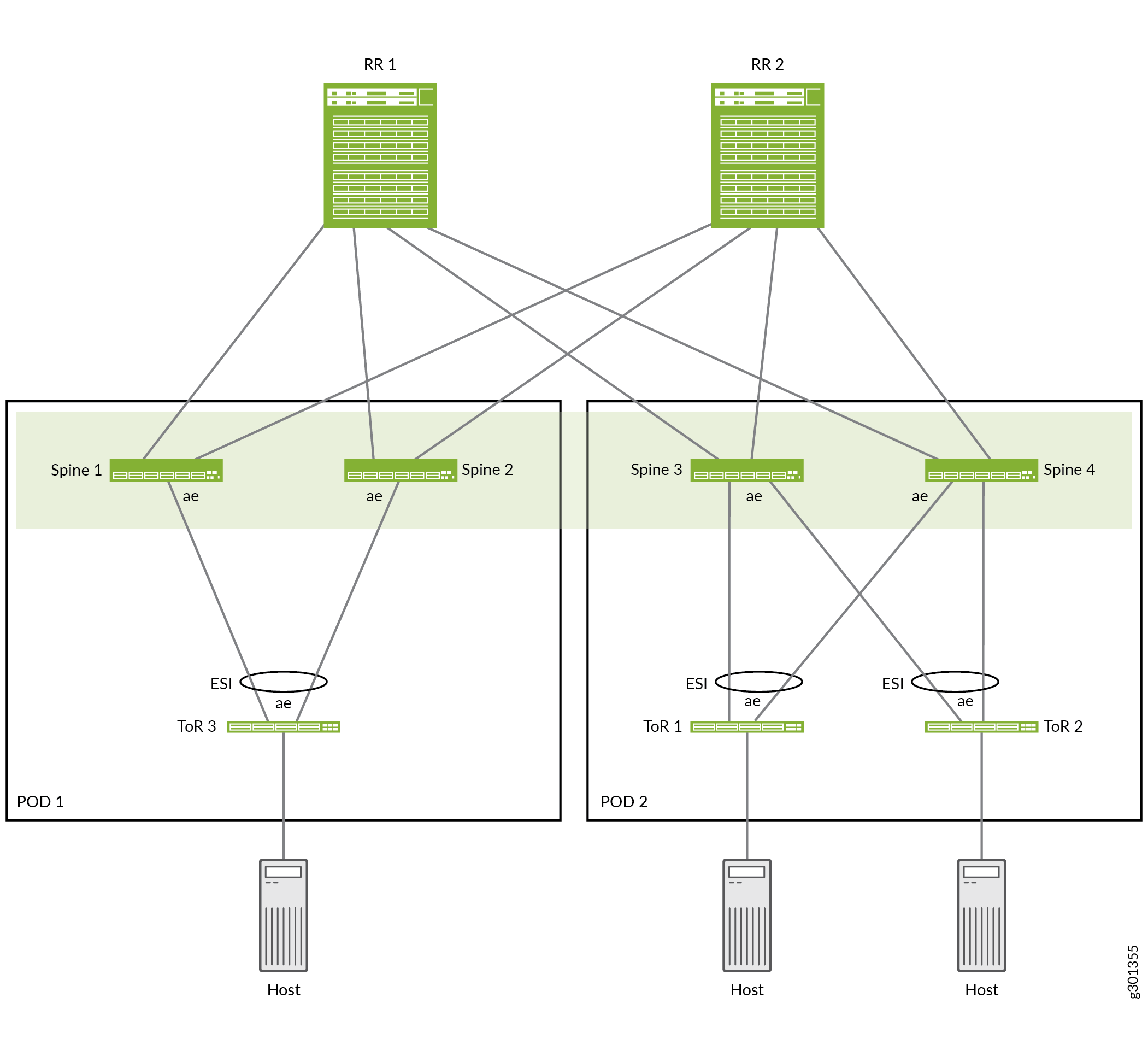

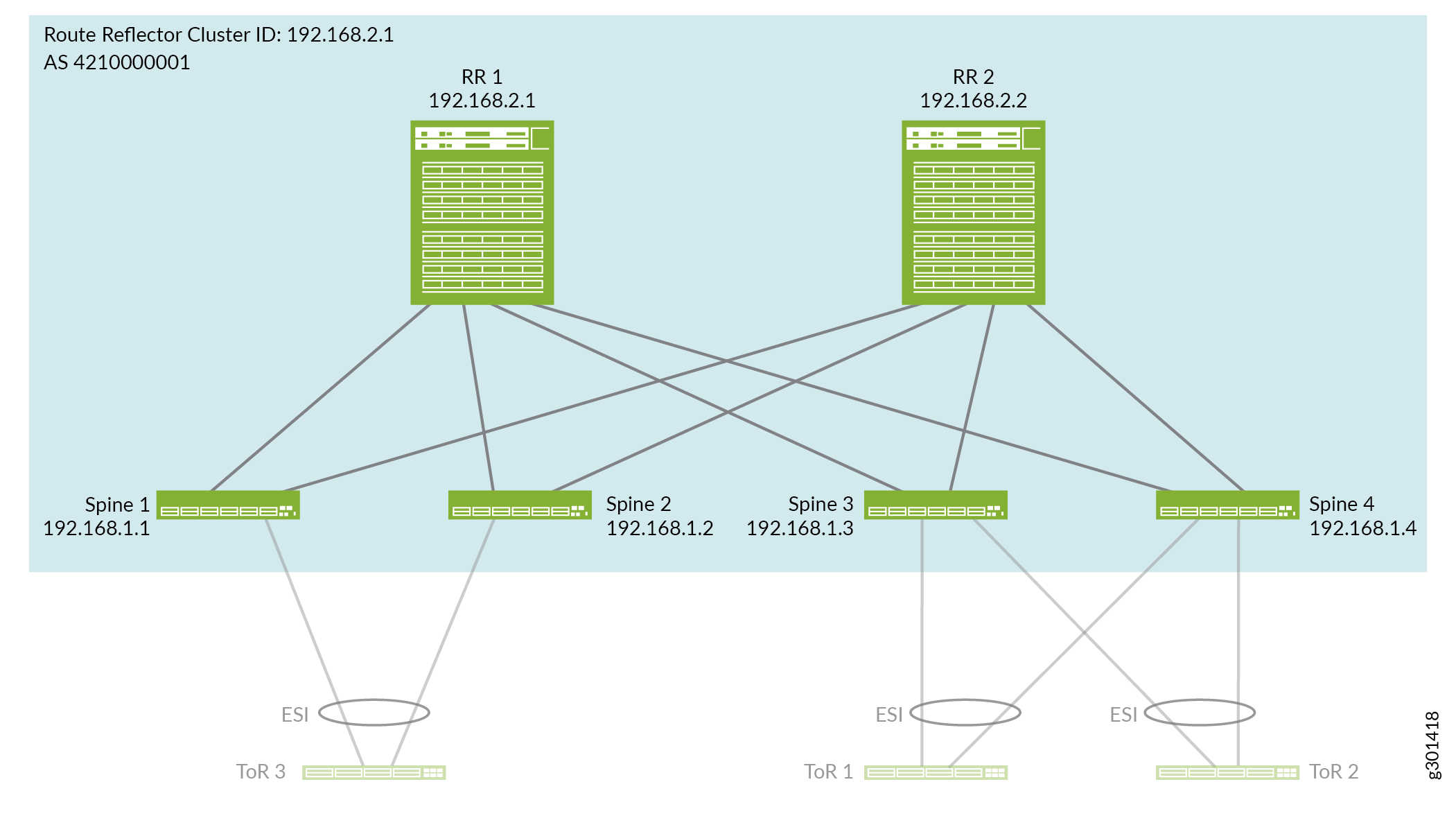

Overview of Collapsed Spine Reference Architecture

Our reference architecture presents a use case for a collapsed spine data center fabric comprising two interconnected point of delivery (POD) modules. A super spine IP transit layer configured as a route reflector cluster interconnects the PODs and the collapsed spine devices in them. See Figure 3. This architecture is similar to a five-stage IP fabric design (see Five-Stage IP Fabric Design and Implementation), but has only the super spine, spine, and access layers. You configure the collapsed spine fabric to integrate the route reflector cluster devices into the IP fabric underlay and EVPN overlay in a similar way.

Figure 3 shows an example of the collapsed spine reference design, which includes the following elements:

POD 1: ToR 3 multihomed to Spine 1 and Spine 2

POD 2: ToR 1 and ToR 2 multihomed to Spine 3 and Spine 4

Route reflector cluster: RR 1 and RR 2 interconnecting Spine devices 1 through 4

The four spine devices make up the collapsed spine EVPN fabric core, with Layer 2 stretch and Layer 3 routing between the spine devices in the two PODs. The spine devices in each POD use ESI-LAGs to the multihomed ToR switches in the same POD.
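The element list above can be captured as data for scripting or sanity checks. The following Python sketch is a minimal model, not part of the reference design: the device names come from Figure 3, and it assumes (the text does not state this) that every spine device has a link to both route reflector devices. It derives which spine pair terminates each ToR's ESI-LAG and lists the spine-to-route-reflector transit links.

```python
"""Minimal model of the collapsed spine reference topology (names from Figure 3)."""

PODS = {
    "POD 1": {"spines": ("Spine 1", "Spine 2"), "tors": ("ToR 3",)},
    "POD 2": {"spines": ("Spine 3", "Spine 4"), "tors": ("ToR 1", "ToR 2")},
}
RR_CLUSTER = ("RR 1", "RR 2")


def esi_lag_spines() -> dict[str, tuple[str, ...]]:
    """Map each ToR to the spine devices that share its ESI-LAG (same POD only)."""
    return {tor: pod["spines"] for pod in PODS.values() for tor in pod["tors"]}


def transit_links() -> list[tuple[str, str]]:
    """Spine-to-RR links, ASSUMING every spine connects to every RR."""
    spines = [spine for pod in PODS.values() for spine in pod["spines"]]
    return [(spine, rr) for spine in spines for rr in RR_CLUSTER]


if __name__ == "__main__":
    for tor, spines in esi_lag_spines().items():
        print(f"{tor}: ESI-LAG to {' and '.join(spines)}")
    print(f"{len(transit_links())} spine-to-RR links")
```

Keeping the POD membership in one table means the ESI-LAG pairs can never span PODs, which matches the design: the spine devices in each POD use ESI-LAGs only to the ToR switches in the same POD.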

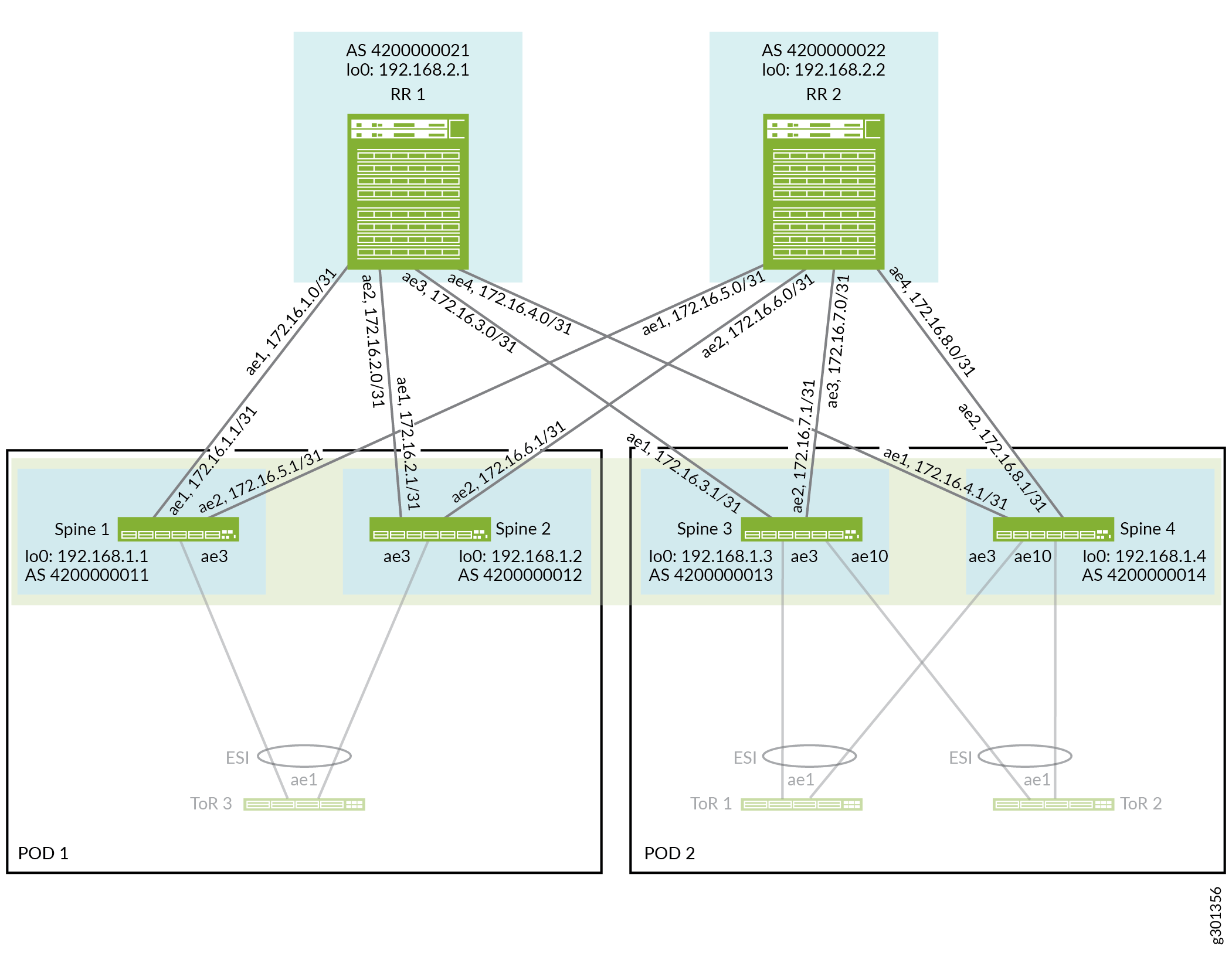

Configure the Collapsed Spine IP Fabric Underlay Integrated With the Route Reflector Layer

This section describes how to configure the interconnecting links and the IP fabric underlay on the spine and route reflector devices.

Figure 4 shows the collapsed spine and route reflector devices connected by aggregated Ethernet interface links.

To configure the underlay:
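As a rough illustration of the kind of statements the underlay involves, the following Python sketch renders Junos OS `set` statements for one spine device's aggregated Ethernet links to the route reflectors. It assumes an EBGP underlay with a unique AS number per device; that choice, and every interface name, address, AS number, and the `UNDERLAY` group and `EXPORT-LO0` policy names, are placeholders rather than values from the reference design. Take the real values from Figure 4.

```python
"""Hypothetical sketch: render Junos OS 'set' statements for a spine's underlay.

Assumes an EBGP underlay with a unique AS per device. All names, addresses,
and AS numbers are placeholders, not values from the reference design.
"""
from dataclasses import dataclass


@dataclass
class UplinkAE:
    ae: str             # aggregated Ethernet bundle toward one RR, e.g. "ae1"
    members: list[str]  # physical member interfaces of the bundle
    local_ip: str       # local address with prefix length, e.g. "172.16.1.1/31"
    peer_ip: str        # RR address on the same link
    peer_as: int        # RR underlay AS (assumed EBGP)


def underlay_config(loopback: str, local_as: int, uplinks: list[UplinkAE],
                    ae_device_count: int = 10) -> list[str]:
    group = "set protocols bgp group UNDERLAY"
    policy = "set policy-options policy-statement EXPORT-LO0 term 1"
    cmds = [
        # Must cover every ae interface on the device, including ESI-LAGs to ToRs.
        f"set chassis aggregated-devices ethernet device-count {ae_device_count}",
        f"set interfaces lo0 unit 0 family inet address {loopback}/32",
        f"set routing-options router-id {loopback}",
    ]
    for link in uplinks:
        cmds += [f"set interfaces {m} ether-options 802.3ad {link.ae}" for m in link.members]
        cmds += [
            f"set interfaces {link.ae} aggregated-ether-options lacp active",
            f"set interfaces {link.ae} unit 0 family inet address {link.local_ip}",
            f"{group} neighbor {link.peer_ip} peer-as {link.peer_as}",
        ]
    # Export the local loopback (a direct route) so overlay sessions can reach it.
    cmds += [
        f"{policy} from protocol direct",
        f"{policy} from route-filter {loopback}/32 exact",
        f"{policy} then accept",
        f"{group} type external",
        f"{group} local-as {local_as}",
        f"{group} export EXPORT-LO0",
        f"{group} multipath multiple-as",
    ]
    return cmds


if __name__ == "__main__":
    uplinks = [
        UplinkAE("ae1", ["et-0/0/48"], "172.16.1.1/31", "172.16.1.0", 4200000101),
        UplinkAE("ae2", ["et-0/0/49"], "172.16.2.1/31", "172.16.2.0", 4200000102),
    ]
    print("\n".join(underlay_config("192.168.1.3", 4200000003, uplinks)))
```

Generating the statements from one list of links keeps the LACP bundle, the /31 address, and the BGP neighbor for each route reflector in step; `multipath multiple-as` lets the spine load-balance across both route reflectors even though they sit in different underlay AS numbers.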

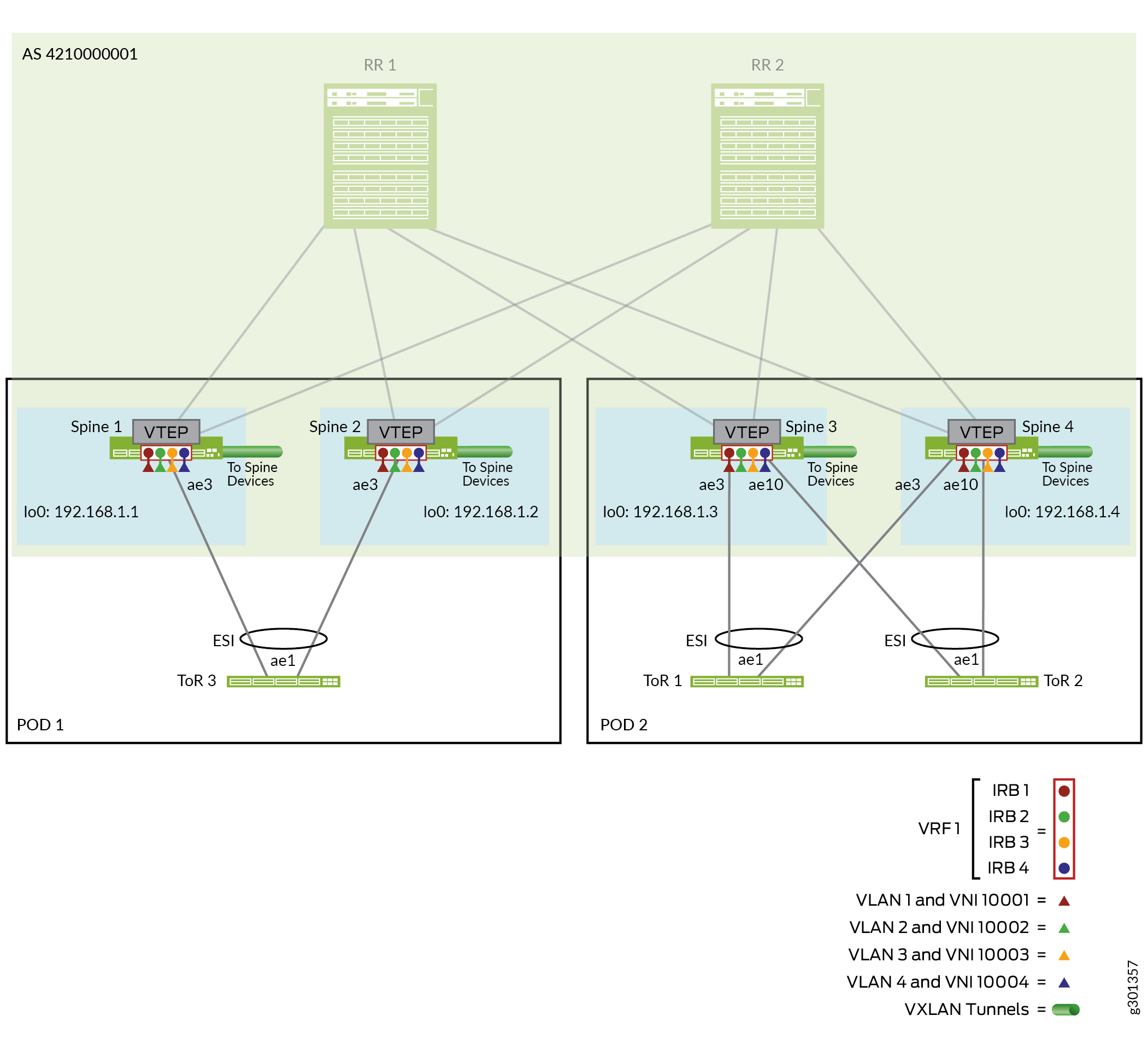

Configure the Collapsed Spine EVPN-VXLAN Overlay Integrated With the Route Reflector Layer

In this design, the overlay is similar to other EVPN-VXLAN data center spine and leaf reference architectures, but doesn’t include a leaf layer. Only the spine devices (integrated with the route reflector cluster) perform intra-VLAN and inter-VLAN routing in the fabric. We configure multiprotocol IBGP (MP-IBGP) with a single autonomous system (AS) number on the spine devices to establish a signaling path between them by way of the route reflector cluster devices, as follows:

The route reflector cluster devices peer with the spine devices in both PODs for IP transit.

The spine devices peer with the route reflector devices.

See Figure 5, which illustrates the spine and route reflector cluster devices and BGP neighbor IP addresses we configure in the EVPN overlay network.

The overlay configuration is the same on both of the route reflector devices except for the device’s local address (the loopback address). The route reflector devices peer with all of the spine devices.

The overlay configuration is the same on each of the spine devices except for the device’s local address (the loopback address). All of the spine devices peer with the route reflector cluster devices.

We configure EVPN with VXLAN encapsulation and virtual tunnel endpoint (VTEP) interfaces only on the spine devices in the collapsed spine fabric.

To configure the overlay:
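The peering rules above can be generated from a single table of loopback addresses. The following Python sketch is a hypothetical illustration, not the reference design's configuration: the loopback addresses, AS number, cluster ID, route target, and `OVERLAY` group name are placeholders (use the values in Figure 5), and it assumes both route reflectors share one cluster ID. Route reflectors get a `cluster` statement and peer with every spine device; spine devices peer with both route reflectors and also get the EVPN-VXLAN and VTEP statements, because only the spine devices act as VTEPs.

```python
"""Hypothetical sketch: render Junos OS overlay 'set' statements per device role."""

RR_LOOPBACKS = {"RR 1": "192.168.2.1", "RR 2": "192.168.2.2"}          # placeholders
SPINE_LOOPBACKS = {"Spine 1": "192.168.1.1", "Spine 2": "192.168.1.2",
                   "Spine 3": "192.168.1.3", "Spine 4": "192.168.1.4"}  # placeholders
OVERLAY_AS = 4210000001           # single overlay AS for all devices (placeholder)
CLUSTER_ID = "192.168.2.10"       # assumed shared by both RRs in the cluster
VRF_TARGET = "target:64512:1111"  # placeholder route target


def overlay_config(device: str) -> list[str]:
    group = "set protocols bgp group OVERLAY"
    is_rr = device in RR_LOOPBACKS
    local = RR_LOOPBACKS[device] if is_rr else SPINE_LOOPBACKS[device]
    # RRs peer with all spines; spines peer only with the RRs (no spine-to-spine sessions).
    peers = SPINE_LOOPBACKS if is_rr else RR_LOOPBACKS
    cmds = [
        f"set routing-options autonomous-system {OVERLAY_AS}",
        f"{group} type internal",
        f"{group} local-address {local}",
        f"{group} family evpn signaling",
    ]
    if is_rr:
        cmds.append(f"{group} cluster {CLUSTER_ID}")
    cmds += [f"{group} neighbor {addr}" for addr in peers.values()]
    if not is_rr:
        # EVPN with VXLAN encapsulation and the VTEP exist only on the spines.
        cmds += [
            "set protocols evpn encapsulation vxlan",
            "set protocols evpn extended-vni-list all",
            "set switch-options vtep-source-interface lo0.0",
            f"set switch-options route-distinguisher {local}:1",
            f"set switch-options vrf-target {VRF_TARGET}",
        ]
    return cmds


if __name__ == "__main__":
    print("\n".join(overlay_config("Spine 3")))
```

Because the only per-device input is the local loopback, this mirrors the statement that the overlay configuration is the same on every device of a given role except for the local address.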

Configure EVPN Multihoming and Virtual Networks on the Spine Devices for the ToR Switches

This collapsed spine reference design implements EVPN multihoming as described in Multihoming an Ethernet-Connected End System Design and Implementation, except that, because the leaf layer functions are collapsed into the spine layer, you configure the ESI-LAGs on the spine devices. You also configure VLANs and Layer 2 and Layer 3 gateway functions on the spine devices in a similar way as you would on the leaf devices in an edge-routed bridging (ERB) overlay design. The core collapsed spine configuration implements a Layer 2 stretch by setting the same VLANs (and VLAN-to-VNI mappings) on all of the spine devices in both PODs. EVPN Type 2 routes enable communication between endpoints within and across the PODs.
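Because the Layer 2 stretch depends on identical VLAN-to-VNI mappings on all four spine devices, a consistency check before committing can catch typos. The following Python sketch is a hypothetical helper, not a Junos OS feature; it assumes you have collected each spine device's mappings as a `{vlan_id: vni}` dictionary, for example from your configuration source. The VLAN IDs and VNIs shown are placeholders.

```python
"""Hypothetical pre-commit check: VLAN-to-VNI maps must match on every spine."""


def vni_mismatches(spine_maps: dict[str, dict[int, int]]) -> list[str]:
    """Return a description of each problem; an empty list means the maps agree."""
    problems = []
    all_vlans = set().union(*(m.keys() for m in spine_maps.values()))
    for vlan in sorted(all_vlans):
        # A VLAN missing on a spine shows up as None and counts as a mismatch.
        vnis = {spine: m.get(vlan) for spine, m in spine_maps.items()}
        if len(set(vnis.values())) != 1:
            problems.append(f"VLAN {vlan} differs: {vnis}")
    for spine, m in spine_maps.items():
        if len(set(m.values())) != len(m):
            problems.append(f"{spine}: same VNI mapped to more than one VLAN")
    return problems


if __name__ == "__main__":
    good = {"Spine 3": {101: 1101, 102: 1102}, "Spine 4": {101: 1101, 102: 1102}}
    bad = {"Spine 3": {101: 1101, 102: 1102}, "Spine 4": {101: 1101, 102: 1120}}
    print(vni_mismatches(good))  # []
    print(vni_mismatches(bad))
```

In practice you would pass the maps for all four spine devices (both PODs) in one call, since the stretch spans the PODs.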

Figure 6 shows the collapsed spine devices in each POD connected with aggregated Ethernet interface links to the multihomed ToR switches in the POD.

For brevity, this section illustrates one aggregated Ethernet link between each spine and each ToR device, with one interface configured on each aggregated Ethernet link from the spine devices to the ToR devices in the POD.

This section covers configuration details only for the spine and ToR devices in POD 2. You can apply a similar configuration with applicable device parameters and interfaces to the spine and ToR devices in POD 1.

Each ToR device includes two interfaces in its aggregated Ethernet link, one to each spine device in the POD; together these links form the ESI-LAG for multihoming.

The configuration includes steps to:

Configure the interfaces.

Set up the ESI-LAGs for EVPN multihoming.

Configure Layer 2 and Layer 3 gateway functions, including defining VLANs, the associated IRB interfaces for inter-VLAN routing, and corresponding VLAN-to-VNI mappings.
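The following Python sketch shows, under stated assumptions, the kind of Junos OS statements these steps produce on one POD 2 spine device for one ToR ESI-LAG. The interface names, ESI, LACP system ID, VLAN names, VNIs, IRB addresses, and the convention of numbering IRB units after the VLAN ID are all hypothetical. The ESI and LACP system ID must be identical on Spine 3 and Spine 4 for the same ToR so that the ToR sees a single LACP partner; the sketch only validates their format. It also leaves out the IRB gateway redundancy method (for example, an anycast IRB address with a shared MAC address, or a virtual gateway address), which depends on your design.

```python
"""Hypothetical sketch: ESI-LAG, VLAN, and IRB statements for one spine device."""
import re

ESI_RE = re.compile(r"([0-9a-f]{2}:){9}[0-9a-f]{2}")        # 10 octets
SYSTEM_ID_RE = re.compile(r"([0-9a-f]{2}:){5}[0-9a-f]{2}")  # 6 octets


def esi_lag_config(ae: str, member: str, esi: str, lacp_system_id: str,
                   vlans: dict[str, tuple[int, int, str]]) -> list[str]:
    """vlans maps VLAN name -> (VLAN ID, VNI, IRB address with prefix length)."""
    if not ESI_RE.fullmatch(esi.lower()):
        raise ValueError(f"bad ESI: {esi}")
    if not SYSTEM_ID_RE.fullmatch(lacp_system_id.lower()):
        raise ValueError(f"bad LACP system ID: {lacp_system_id}")
    cmds = [
        f"set interfaces {member} ether-options 802.3ad {ae}",
        # Use the same ESI and LACP system ID on both spines serving this ToR.
        f"set interfaces {ae} esi {esi}",
        f"set interfaces {ae} esi all-active",
        f"set interfaces {ae} aggregated-ether-options lacp active",
        f"set interfaces {ae} aggregated-ether-options lacp system-id {lacp_system_id}",
        f"set interfaces {ae} unit 0 family ethernet-switching interface-mode trunk",
    ]
    for name, (vlan_id, vni, irb_addr) in vlans.items():
        cmds.append(f"set interfaces {ae} unit 0 family ethernet-switching vlan members {name}")
        # VLAN and IRB statements are device-wide; repeating them for another
        # ESI-LAG is harmless because a repeated 'set' statement changes nothing.
        cmds += [
            f"set vlans {name} vlan-id {vlan_id}",
            f"set vlans {name} l3-interface irb.{vlan_id}",  # IRB unit = VLAN ID (assumed)
            f"set vlans {name} vxlan vni {vni}",
            f"set interfaces irb unit {vlan_id} family inet address {irb_addr}",
        ]
    return cmds


if __name__ == "__main__":
    vlans = {"VLAN-101": (101, 1101, "10.1.101.1/24"), "VLAN-102": (102, 1102, "10.1.102.1/24")}
    print("\n".join(esi_lag_config("ae11", "xe-0/0/11", "00:00:00:00:00:00:51:11:00:01",
                                   "00:00:51:11:00:01", vlans)))
```

Running the same function on Spine 3 and Spine 4 with identical ESI, system ID, and VLAN arguments (only the member interface may differ) keeps both ends of the ESI-LAG consistent.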

Verify Collapsed Spine Fabric Connectivity With Route Reflector Cluster and ToR Devices

This section shows CLI commands you can use to verify connectivity between the collapsed spine devices and the route reflector cluster, and between the collapsed spine devices and the ToR devices.

For brevity, this section shows the connectivity checks only on the spine devices in POD 2 (Spine 3 and Spine 4). You can use the same commands on the spine devices in POD 1 (Spine 1 and Spine 2).
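One way to make these checks repeatable is to compare what you observe against the cabling plan. The following Python sketch is a hypothetical helper: it assumes you have transcribed, for each spine device, the LLDP neighbor seen on each local interface (from `show lldp neighbors`) and the LACP mux state of each aggregated Ethernet member (from `show lacp interfaces`, where a fully working member reports `Collecting distributing`). The interface and device names are placeholders.

```python
"""Hypothetical helper: compare observed LLDP/LACP state with the cabling plan."""

HEALTHY_MUX = "Collecting distributing"


def link_problems(expected: dict[str, str], lldp: dict[str, str],
                  lacp_mux: dict[str, str]) -> list[str]:
    """expected and lldp map local interface -> neighbor device name;
    lacp_mux maps local member interface -> LACP mux state."""
    problems = []
    for ifname, peer in sorted(expected.items()):
        seen = lldp.get(ifname)
        if seen != peer:
            problems.append(f"{ifname}: expected neighbor {peer}, saw {seen}")
        state = lacp_mux.get(ifname)
        if state != HEALTHY_MUX:
            problems.append(f"{ifname}: LACP mux state is {state}")
    return problems


if __name__ == "__main__":
    spine3_plan = {"et-0/0/48": "RR 1", "et-0/0/49": "RR 2",
                   "xe-0/0/11": "ToR 1", "xe-0/0/12": "ToR 2"}
    lldp = dict(spine3_plan)
    lacp = {ifname: HEALTHY_MUX for ifname in spine3_plan}
    print(link_problems(spine3_plan, lldp, lacp))  # []
```

Checking both the LLDP neighbor and the LACP state per member catches miscabling (right state, wrong peer) as well as bundling problems (right peer, member not distributing).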

Verify Collapsed Spine Fabric BGP Underlay and EVPN-VXLAN Overlay Configuration

This section shows CLI commands you can use to verify that the underlay and overlay are working for the collapsed spine devices integrated with the route reflector cluster. Refer again to Figure 4 and Figure 5 for the configured underlay and overlay parameters.

For brevity, this section shows the underlay and overlay checks only on the spine devices in POD 2 (Spine 3 and Spine 4). You can use the same commands on the spine devices in POD 1 (Spine 1 and Spine 2).
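To check the BGP sessions against the plan, the following hypothetical Python sketch takes the neighbors a spine device should have (the route reflectors' link addresses for the underlay and their loopback addresses for the overlay; the addresses here are placeholders for the values in Figure 4 and Figure 5) and the State column text you read from `show bgp summary`. Rather than depend on the exact Junos OS output format, the helper flags any expected neighbor that is absent or whose state is one of the BGP finite state machine states that precede Established (Idle, Connect, Active, OpenSent, OpenConfirm, as defined in RFC 4271).

```python
"""Hypothetical helper: flag expected BGP neighbors that are not established."""

# BGP finite state machine states that precede Established (RFC 4271).
NOT_ESTABLISHED = {"Idle", "Connect", "Active", "OpenSent", "OpenConfirm"}


def bgp_problems(expected: dict[str, str], states: dict[str, str]) -> list[str]:
    """expected maps neighbor address -> role ('underlay' or 'overlay');
    states maps neighbor address -> State column text from 'show bgp summary'."""
    problems = []
    for peer, role in expected.items():
        state = states.get(peer)
        if state is None:
            problems.append(f"{role} neighbor {peer}: missing from output")
        elif not state.strip() or state.split()[0] in NOT_ESTABLISHED:
            problems.append(f"{role} neighbor {peer}: {state}")
    return problems


if __name__ == "__main__":
    spine3_expected = {
        "172.16.1.0": "underlay", "172.16.2.0": "underlay",  # RR link addresses
        "192.168.2.1": "overlay", "192.168.2.2": "overlay",   # RR loopbacks
    }
    observed = {"172.16.1.0": "Establ", "172.16.2.0": "Establ",
                "192.168.2.1": "Establ", "192.168.2.2": "Active"}
    for line in bgp_problems(spine3_expected, observed):
        print(line)  # prints: overlay neighbor 192.168.2.2: Active
```

Labeling each neighbor with its role makes it clear from the report whether a failure is in the underlay (link-level EBGP) or in the overlay (loopback-to-loopback IBGP toward the route reflectors), which narrows where to look next.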