Templates

Templates are abstractions of network designs that define the capabilities of a network, its structure, and its policy intent, without defining any vendor-specific information. They're defined with other elements of abstraction (rack types, logical devices), as well as other details, as described below. When you're ready to build your network, you'll use a template to create a blueprint. Templates can be rack-based, pod-based, or spineless (collapsed). See the sections below for details on each type of template.

Rack types are used in templates. Apstra provides many predefined templates, but if you need to create a custom template, you'll use one of the many predefined rack types or one that you've built yourself. When most racks in your data center use the same leaf hardware with the same link speeds to hosts, uplink speeds to spines, and so on, you can reuse a single rack type instead of designing and building every rack individually.

Rack-based Templates

Rack-based templates define the type and number of racks to connect as top-of-rack (ToR) switches (or pairs of ToR switches). Rack-based templates are divided into the following sections:

Template Parameters

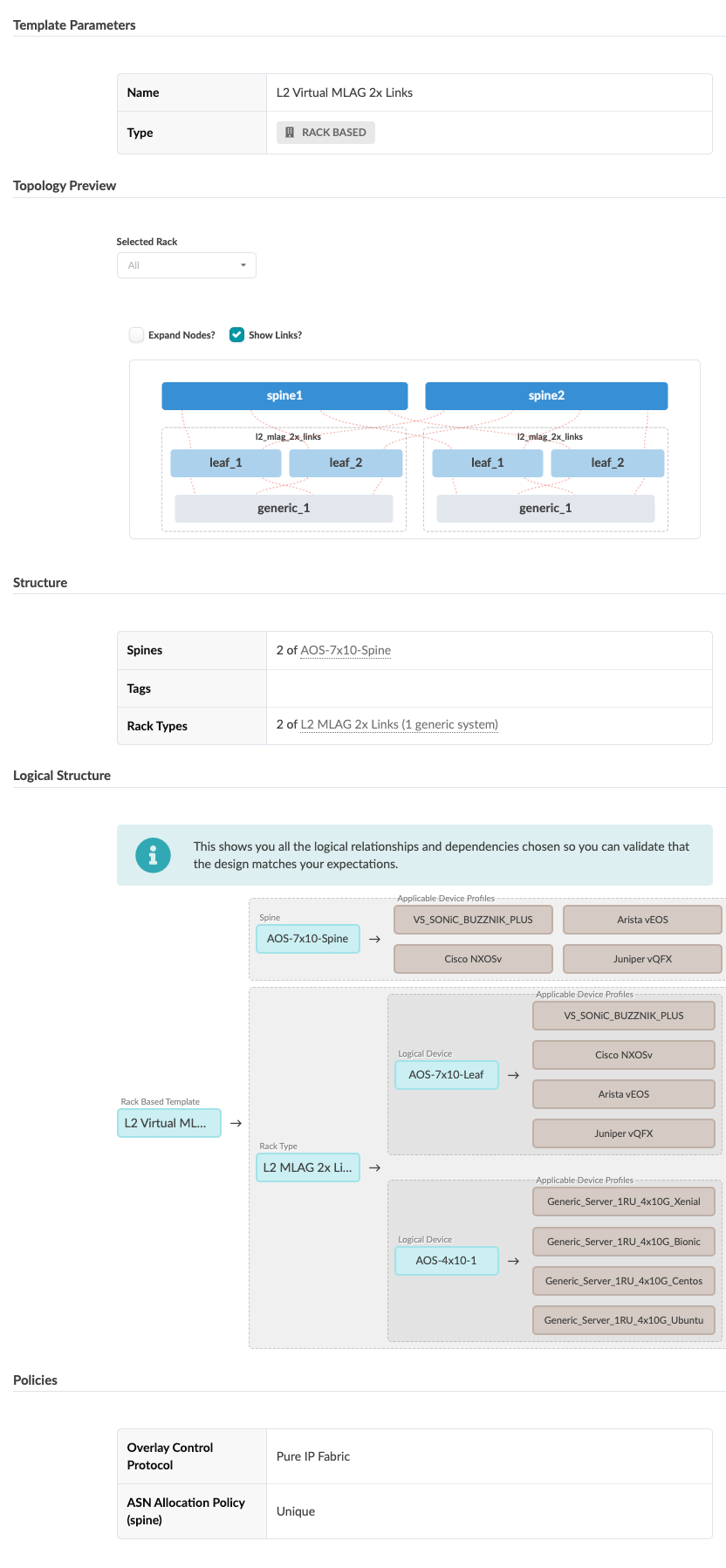

The Template Parameters section includes the template name and type:

- Name - A unique name to identify the template (17 characters or fewer)
- Type - Rack-based: based on the type and number of racks to connect

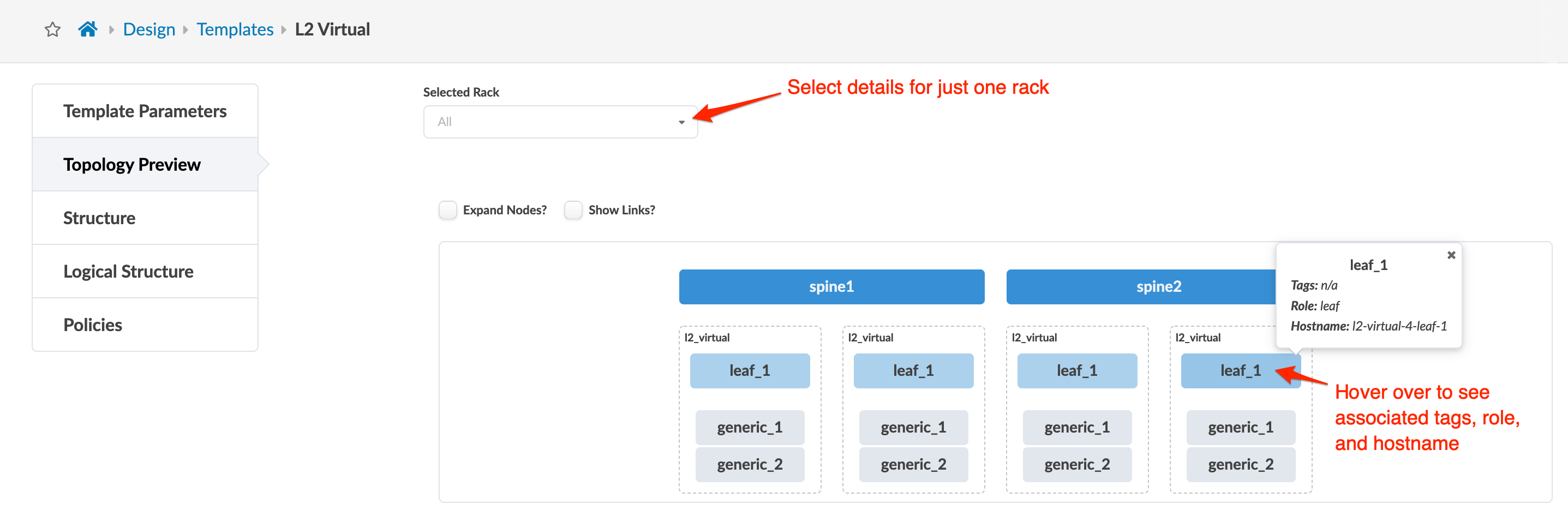

Topology Preview

The Topology Preview section shows a visual representation of the elements included in the template. The screenshot below is for the predefined rack-based template named L2 Virtual.

Structure

The Structure section includes details about the components that make up the template, as shown in the table below:

| Structure | Options |
|---|---|
| Spines | |
| Tags | User-specified. Select tags from the drop-down list generated from the global catalog, or create tags on-the-fly (which then become part of the global catalog). Useful for specifying external routers. Tags used in templates are embedded, so any subsequent changes to tags in the global catalog do not affect templates. |
| Rack Types | ESI-based rack types in rack-based templates without EVPN are invalid. |

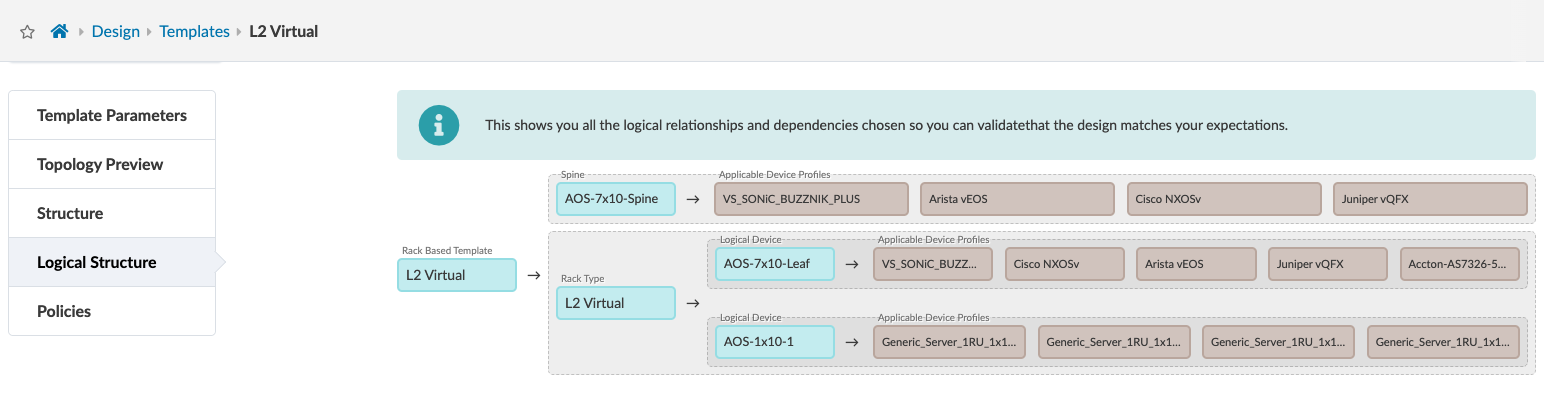

Logical Structure

The Logical Structure section consists of a visual representation of the logical elements in the template. It shows the logical relationships and dependencies so you can validate that the design matches your expectations. The screenshot below is for the predefined rack-based template named L2 Virtual. In the screenshot, you can see that for the AOS-7x10-Leaf logical device, you could use one of five different devices. These associations are the interface maps that use that leaf logical device. If you created a new interface map that included the AOS-7x10-Leaf logical device and a different device profile, it would also appear in this logical structure graphic.

Policies

The Policies section includes the policies that you can configure in rack-based templates, as shown in the table below:

| Policy | Options |
|---|---|
| ASN Allocation Scheme (spine) | |
| Overlay Control Protocol | |
| Spine to Leaf Links Underlay Type | |
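The rack-based fields and constraints described above can be summarized in a small data model. The following Python sketch is illustrative only: the class names, field names, and the `"static"` / `"evpn"` protocol labels are assumptions made for this example, not the Apstra schema or API. It encodes two rules stated in this section: the 17-character name limit, and the rule that ESI-based rack types are invalid without EVPN.

```python
from dataclasses import dataclass, field

MAX_NAME_LENGTH = 17  # "17 characters or fewer", per Template Parameters


@dataclass
class RackTypeRef:
    """Hypothetical reference to a rack type and how many racks use it."""
    name: str
    count: int
    esi_based: bool = False


@dataclass
class RackBasedTemplate:
    """Illustrative model of a rack-based template (not the Apstra schema)."""
    name: str
    rack_types: list[RackTypeRef]
    overlay_control_protocol: str = "static"  # assumed labels: "static" or "evpn"
    tags: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means no rule was violated."""
        errors = []
        if not self.name or len(self.name) > MAX_NAME_LENGTH:
            errors.append(f"name must be 1-{MAX_NAME_LENGTH} characters")
        if self.overlay_control_protocol != "evpn":
            for rack in self.rack_types:
                if rack.esi_based:
                    errors.append(f"ESI-based rack type '{rack.name}' requires EVPN")
        return errors


template = RackBasedTemplate(
    name="dc1-l2-esi",
    rack_types=[RackTypeRef(name="esi-rack", count=4, esi_based=True)],
)
print(template.validate())
```

Returning a list of messages rather than raising on the first problem makes it easy to report every violation at once, which is closer to how a design tool would surface validation errors.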

Pod-based Templates

Pod-based templates are used to create large, 5-stage Clos networks, essentially combining multiple rack-based templates using an additional layer of superspine devices. See 5-Stage Clos Architecture for more information.

Pod-based templates are divided into the following sections:

Template Parameters

The Template Parameters section includes the template name and the type of template:

- Name - A unique name to identify the template (17 characters or fewer)
- Type - Pod-based: based on the type and number of rack-based templates to connect

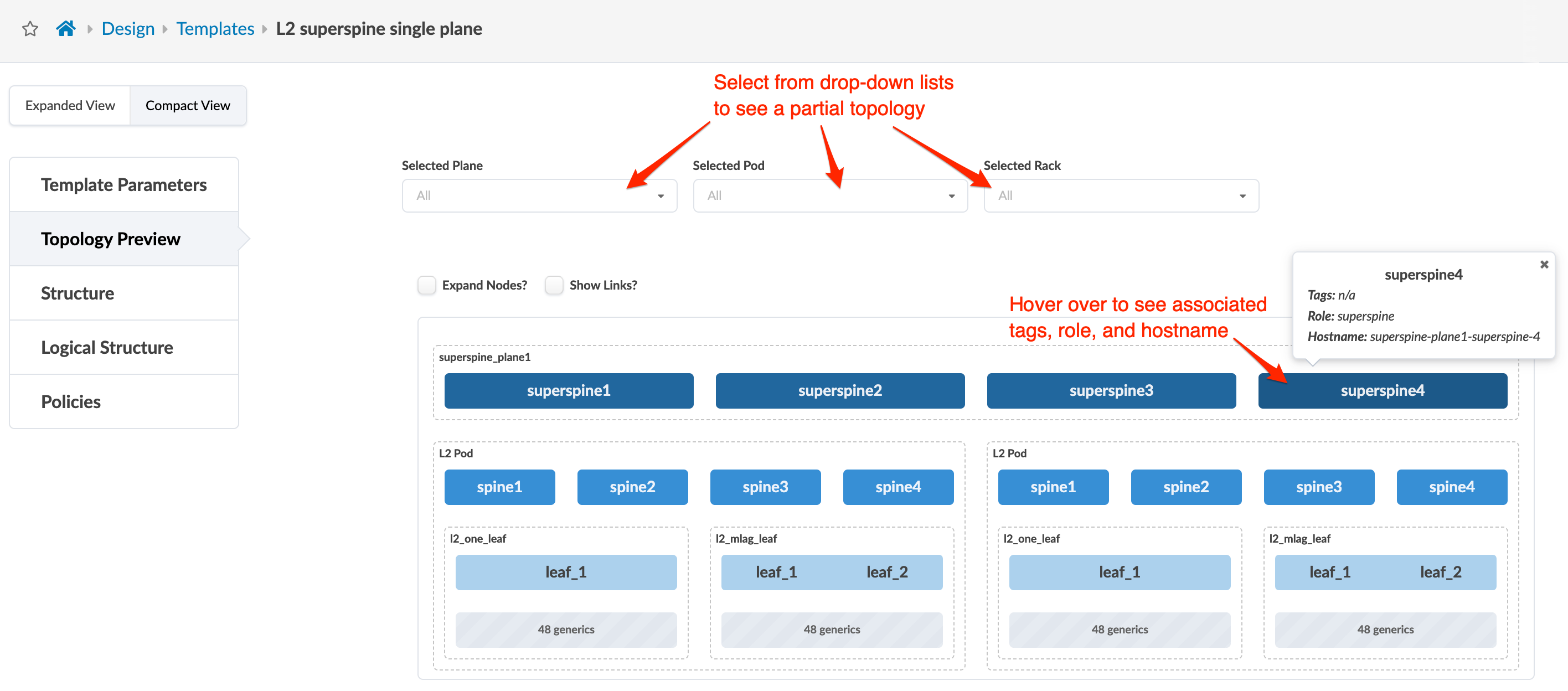

Topology Preview

The Topology Preview section shows a visual representation of the elements included in the template. The screenshot below is for the predefined pod-based template named L2 superspine single plane.

Structure

The Structure section includes details about the components that make up the template, as shown in the table below:

| Structure | Options |
|---|---|
| Superspines | |
| Tags | User-specified. Select tags from the drop-down list generated from the global catalog, or create tags on-the-fly (which then become part of the global catalog). Useful for specifying external routers. Tags used in templates are embedded, so any subsequent changes to tags in the global catalog do not affect templates. |
| Pods | Type of rack-based template and number of each selected template |

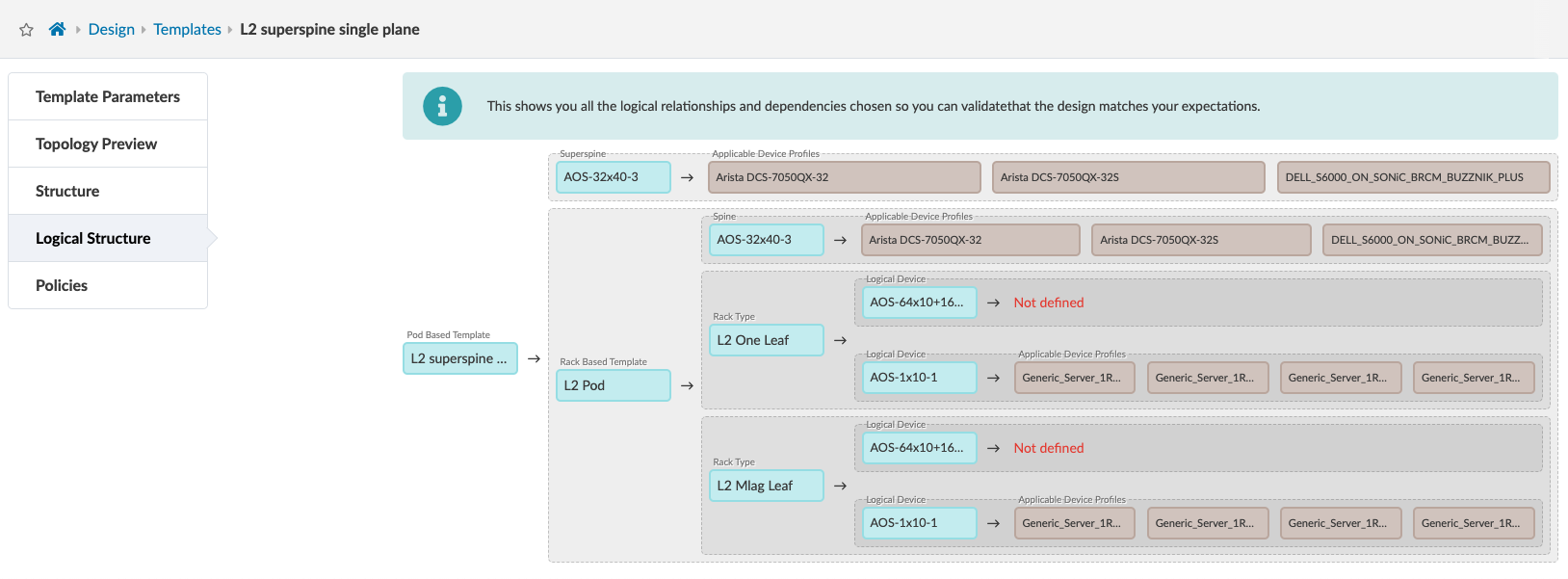

Logical Structure

The Logical Structure section consists of a visual representation of the logical elements in the template. It shows the logical relationships and dependencies so you can validate that the design matches your expectations. The screenshot below is for the predefined pod-based template named L2 superspine single plane.

Policies

The Policies section includes the policies that you can configure in pod-based templates, as shown in the table below:

| Policy | Options |
|---|---|
| Spine to Superspine Links | |
| Overlay Control Protocol | |
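The Pods row above describes a pod-based template as a selection of rack-based templates, each with a count. A minimal Python sketch of that selection follows. The class name, the `template_type` labels, and the rule that a count must be at least 1 are assumptions for illustration; superspine options are left out because they are not detailed in this section.

```python
from dataclasses import dataclass


@dataclass
class PodSelection:
    """Hypothetical entry in the Pods row: a template and how many pods use it."""
    template_name: str
    template_type: str  # assumed labels: "rack_based", "pod_based", "collapsed"
    count: int


def validate_pods(pods: list[PodSelection]) -> list[str]:
    """Return problems found; an empty list means the selection looks valid."""
    errors = []
    for pod in pods:
        # Pods are built from rack-based templates only.
        if pod.template_type != "rack_based":
            errors.append(f"'{pod.template_name}' is not a rack-based template")
        # Assumption for this sketch: each selected template is used at least once.
        if pod.count < 1:
            errors.append(f"'{pod.template_name}' needs a count of at least 1")
    return errors


def total_pods(pods: list[PodSelection]) -> int:
    """Total number of pods across all selected rack-based templates."""
    return sum(pod.count for pod in pods)


pods = [
    PodSelection("L2 Virtual", "rack_based", 2),
    PodSelection("small-collapsed", "collapsed", 1),
]
print(total_pods(pods))
print(validate_pods(pods))
```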

Collapsed Templates

Collapsed templates allow you to consolidate leaf, border leaf and spine functions into a single pair of devices. A full mesh topology is created at the leaf level instead of at leaf-spine connections. This spineless template uses L3 collapsed rack types. Collapsed templates have the following limitations:

- No support for upgrading collapsed L3 templates to L3 templates with spine devices. (To achieve the same result, you could move devices from the collapsed L3 blueprint to an L3 Clos blueprint.)
- Collapsed L3 templates can't be used as pods in 5-stage templates.
- You can't mix vendors inside redundant leaf devices; the two leaf devices must be from the same vendor and model.
- Leaf-to-leaf links can't be added, edited, or deleted.
- Inter-leaf connections are limited to full mesh.
- IPv6 is not supported.
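Several of the limitations above are simple enough to express as pre-flight checks. The sketch below is a hypothetical helper, not part of Apstra: the `Leaf` class, the function name, and its parameters are invented for illustration. It checks the same-vendor-and-model rule for redundant leaf pairs, the IPv6 restriction, and the rule that collapsed templates can't serve as pods.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Leaf:
    """Hypothetical leaf device description."""
    vendor: str
    model: str


def check_collapsed_design(
    redundant_pairs: list[tuple[Leaf, Leaf]],
    uses_ipv6: bool = False,
    used_as_pod: bool = False,
) -> list[str]:
    """Return the collapsed-template limitations that a planned design violates."""
    problems = []
    for first, second in redundant_pairs:
        # Both members of a redundant pair must share vendor and model.
        if (first.vendor, first.model) != (second.vendor, second.model):
            problems.append(
                f"redundant pair mixes {first.vendor} {first.model} "
                f"with {second.vendor} {second.model}"
            )
    if uses_ipv6:
        problems.append("IPv6 is not supported in collapsed templates")
    if used_as_pod:
        problems.append("collapsed templates can't be used as pods in 5-stage templates")
    return problems


pairs = [(Leaf("vendor-a", "model-x"), Leaf("vendor-a", "model-y"))]
for problem in check_collapsed_design(pairs, uses_ipv6=True):
    print(problem)
```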

Collapsed templates are divided into the following sections:

Template Parameters

The Template Parameters section includes the template name and the type of template:

- Name - A unique name to identify the template (17 characters or fewer)
- Type - Collapsed: a spineless template using L3 collapsed rack types

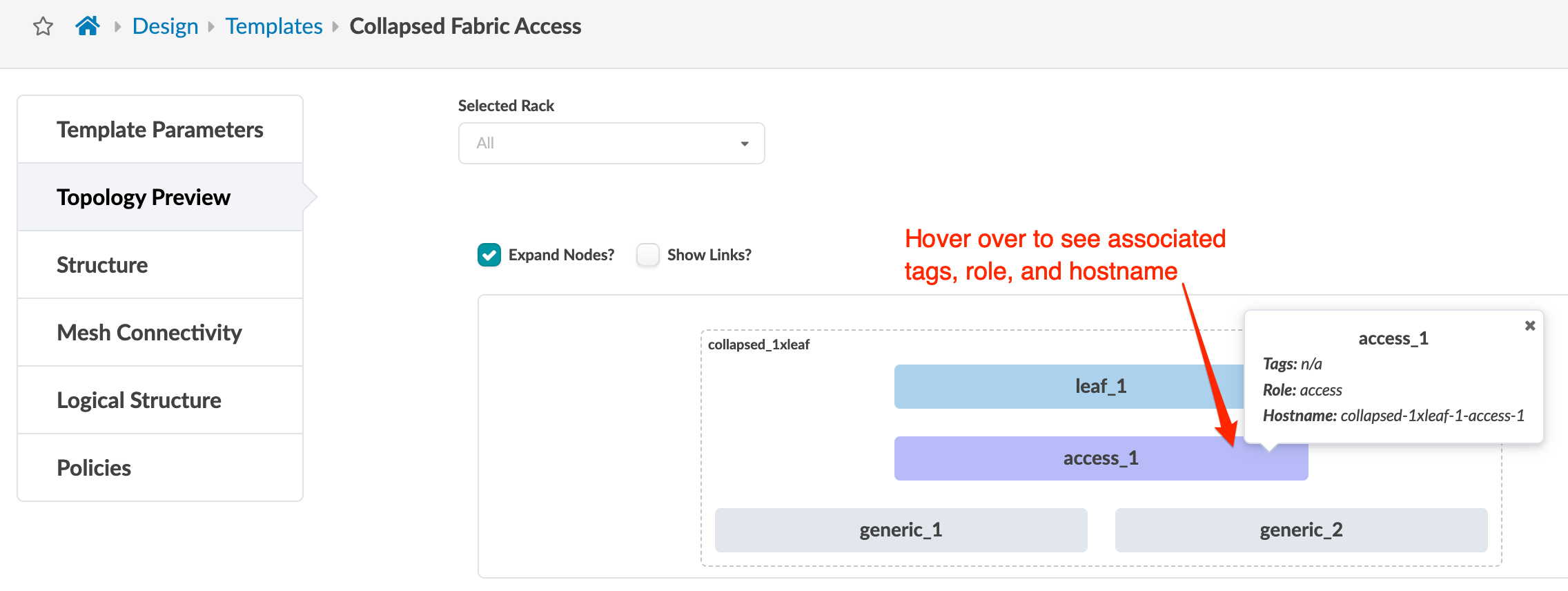

Topology Preview

The Topology Preview section shows a visual representation of the elements included in the template. The screenshot below is for the predefined collapsed template named Collapsed Fabric Access.

Structure

The Structure section includes details about the components that make up the template, as shown in the table below:

| Structure | Options |
|---|---|
| Rack Type | Type of L3 collapsed rack and number of each selected rack type |

Mesh Connectivity

The Mesh Connectivity section includes details about the number of mesh links and their speeds, as shown in the table below:

| Mesh Connectivity | Options |
|---|---|
| Mesh Links Count and Speed | Defines the link set created between every pair of physical devices, including devices in redundancy groups (MLAG / ESI). These links are always physical L3. No logical links are needed at the mesh level. |
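Because the link set is created between every pair of physical devices, the total number of mesh links grows with the number of device pairs. The following sketch enumerates the links for a list of device names. The function name and device names are hypothetical, and the sketch only counts links; it does not model speeds or Apstra's own link naming.

```python
from itertools import combinations


def mesh_link_set(devices: list[str], links_per_pair: int) -> list[tuple[str, str, int]]:
    """Enumerate full-mesh links as (device_a, device_b, link_index) tuples.

    Every unordered pair of physical devices gets `links_per_pair` links,
    including pairs that belong to the same redundancy group.
    """
    return [
        (a, b, index)
        for a, b in combinations(devices, 2)
        for index in range(1, links_per_pair + 1)
    ]


# Two redundant leaf pairs, that is, four physical devices.
leafs = ["leaf1a", "leaf1b", "leaf2a", "leaf2b"]
links = mesh_link_set(leafs, links_per_pair=2)
print(len(links))  # 6 device pairs x 2 links per pair = 12
```

For n physical devices and k links per pair, the total is k x n(n-1)/2, so the port budget on each device should account for k x (n-1) mesh ports.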

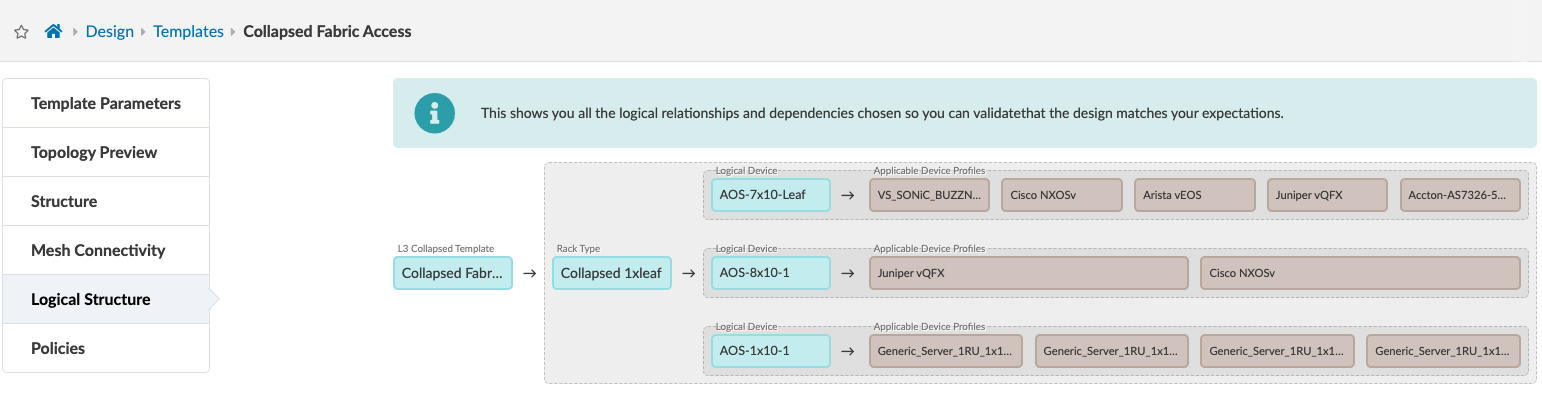

Logical Structure

The Logical Structure section consists of a visual representation of the logical elements in the template. It shows the logical relationships and dependencies so you can validate that the design matches your expectations. The screenshot below is for the predefined collapsed template named Collapsed Fabric Access.

Policies

The Policies section includes the policies that you can configure in collapsed templates, as shown in the table below:

| Policy | Options |
|---|---|
| Overlay Control Protocol | |

Rail Collapsed Templates

AI Cluster Templates

L3 Clos

Rail Collapsed Design

The Rail-Collapsed (rail-only) AI Fabric design enables you to optimize your network fabric investment for a small AI cluster. If your GPU servers can fit within a single stripe, you can simplify the architecture by removing the spine layer. In a rail-collapsed AI fabric, localized GPU-to-GPU communication is confined to a single rail using direct or local switch links, removing dependency on the spine layer.

This design approach eliminates the need for a spine layer, while optimizing traffic flow for small-scale AI clusters (see Table 9: Maximum number of GPUs supported per stripe). In Juniper Apstra 6.0, you can create and configure rail-collapsed fabric clusters with the Create AI Cluster Template tool. Apstra 6.0 supports all of the rail-optimized traffic visibility, resource monitoring, and automation tools for rail-collapsed fabrics.

This document explores the design and key aspects of rail-collapsed AI fabrics.

Intra-Rail Communication with Local Optimization

In a rail-collapsed AI network, Local Optimization must be enabled on the GPU servers. Local Optimization is a generic term for GPU technology that enables single-hop communication between GPUs across rails. Traffic that would otherwise cross rails is routed internally within the GPU server, eliminating the need for the spine layer. Network traffic stays intra-rail because Local Optimization allows the source GPU to reach the destination rail internally, through the server’s high-bandwidth interconnect switch (for example, NVIDIA NVSwitch). As a result, inter-rail traffic does not require a spine switch and is routed inside the GPU server itself. With Local Optimization, the absence of a spine layer does not limit the network’s ability to handle any traffic pattern in the cluster.

A key motivation for the rail-collapsed design is to optimize efficiency and cost-effectiveness of network fabric infrastructure for small-scale AI clusters. Traditional rail-optimized designs reserve 50% of leaf ports for spine uplinks to avoid oversubscribed bandwidth, which limits directly connected GPUs and increases network hops. For example, a 64-port switch (shown in the Fabric Scaling image here) would connect 64 GPU servers in a rail-collapsed design, compared to 32 servers if ports are reserved for spine uplinks. By eliminating the spine layer, every leaf port is dedicated to server connectivity, doubling port utilization and enabling direct, single-hop communication between GPUs on the same rail.
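The port arithmetic in the 64-port example can be written out as a short calculation. This is a sketch under the assumption stated above, that a non-oversubscribed leaf in a leaf-spine design reserves half of its ports for spine uplinks; the function name is hypothetical.

```python
def server_facing_ports(total_ports: int, spine_layer: bool) -> int:
    """Ports on one leaf that remain available for GPU server connections.

    With a spine layer, half of the ports are reserved for uplinks so that
    bandwidth is not oversubscribed. Without one, every port faces a server.
    """
    uplink_ports = total_ports // 2 if spine_layer else 0
    return total_ports - uplink_ports


print(server_facing_ports(64, spine_layer=True))   # 32
print(server_facing_ports(64, spine_layer=False))  # 64
```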

In leaf-spine networks, even when there is no oversubscription, congestion issues still arise as a result of non-uniform or bursty AI traffic patterns. In a rail-collapsed design, all GPU-to-GPU traffic within the rail is kept local, and irregular traffic patterns don’t traverse the spine layer. This approach lowers network latency and increases reliability because the number of network hops and potential points of failure are minimized.

For information about how Local Optimization is implemented in environments using NVIDIA hardware, see the following official documentation:

Scale Limitations of Rail-Collapsed Fabric Design

As mentioned earlier, rail-collapsed AI fabrics are suitable only for clusters whose traffic does not require a spine layer. Clusters with up to 1024 GPUs can be deployed without a spine layer. This type of cluster can support inference or testing workloads because most of the traffic remains within the rail. Medium and large clusters, potentially with thousands of GPUs and more than one stripe, need a traditional 3-stage Clos network to carry inter-stripe traffic.
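The sizing rule above can be expressed as a simple decision helper. This is a rough sketch, not a sizing tool: the function name and return labels are hypothetical, the 1024-GPU ceiling is the figure quoted in this section, and the actual per-stripe maximum depends on the switch model (see the referenced table of GPUs supported per stripe), so it is left as a parameter.

```python
MAX_GPUS_WITHOUT_SPINE = 1024  # upper bound quoted in this section


def suggest_design(gpu_count: int, max_gpus_per_stripe: int = MAX_GPUS_WITHOUT_SPINE) -> str:
    """Suggest a fabric design based on whether the cluster fits in one stripe.

    `max_gpus_per_stripe` is hardware-dependent; the default is only the
    ceiling quoted in the text, not a value for any specific switch.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be a positive integer")
    limit = min(max_gpus_per_stripe, MAX_GPUS_WITHOUT_SPINE)
    return "rail_collapsed" if gpu_count <= limit else "clos_with_spine"


print(suggest_design(512))
print(suggest_design(4096))
```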

For information about how to create a Rail-Collapsed AI Cluster Template, see Create AI Cluster Template.

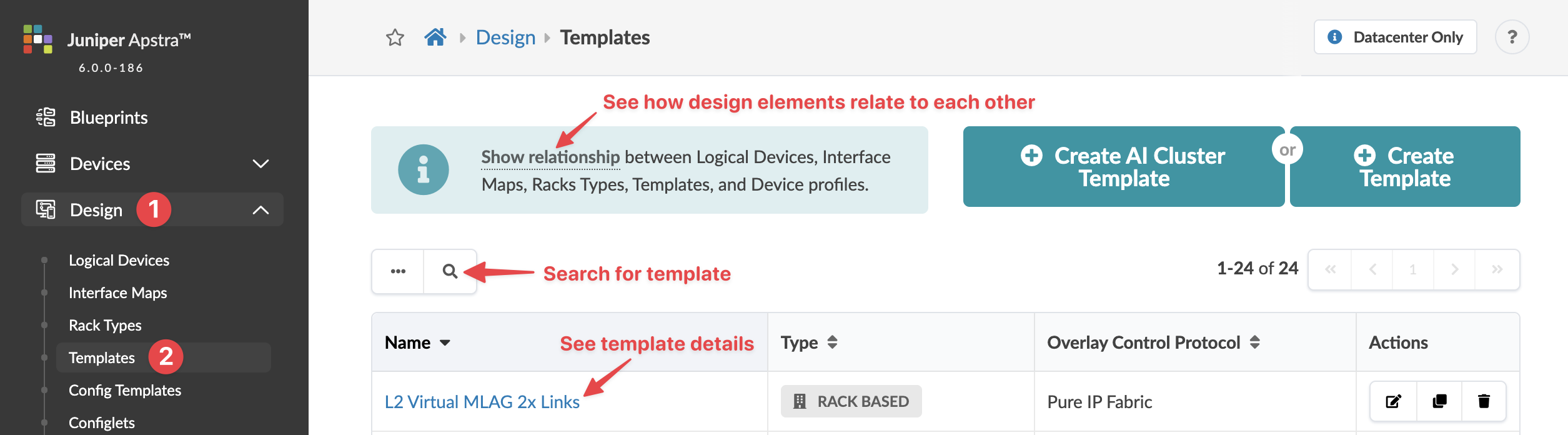

Templates in the Apstra GUI

From the left navigation menu, navigate to Design > Templates to go to the templates table in the Design (global) catalog.

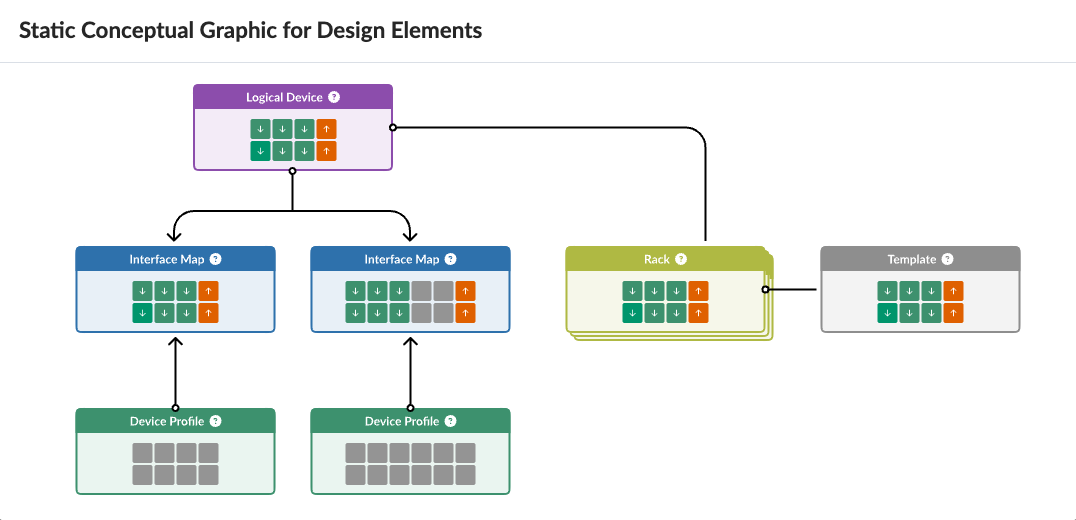

To see how design elements and device profiles are related to each other, click Show relationship. This is helpful if you're new to the Apstra environment.

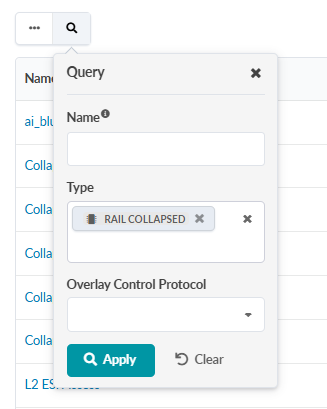

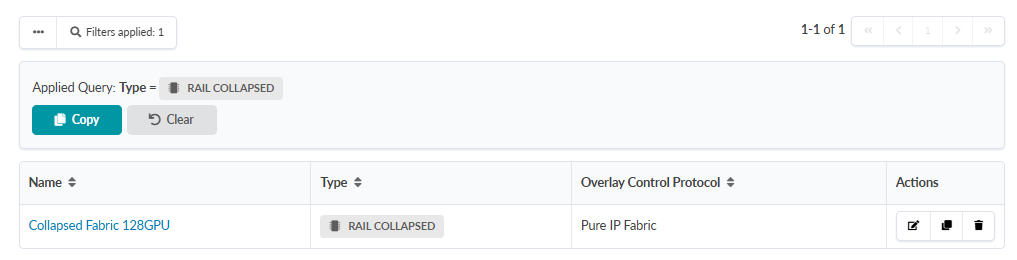

Many templates are predefined for you. To search for a template by its name, type of template and/or overlay control protocol, click the Search button (magnifying glass), enter your criteria and click Apply.

For example, to quickly find rail-collapsed templates, click the Search button at the top, select RAIL COLLAPSED from the Type drop-down list, then click Apply.

Click a template name to go to its details. The example below is for the L2 Virtual MLAG 2x Links template.

You can create, edit, and delete templates.