Preparing for Junos Node Slicing Setup

Topics in this section apply only to Junos node slicing set up using the external server model. For in-chassis Junos node slicing, proceed to Configuring MX Series Router to Operate in In-Chassis Mode.

Before setting up Junos node slicing (external server model), you need to perform a few preparatory steps, such as connecting the servers and the router, installing additional packages, configuring x86 server Linux GRUB, and setting up the BIOS of the x86 server CPUs.

Connecting the Servers and the Router

To set up Junos node slicing, you must directly connect a pair of external x86 servers to the MX Series router. Besides the management port for the Linux host, each server also requires two additional ports for providing management connectivity for the JDM and the GNF VMs, respectively, and two ports for connecting to the MX Series router.

Do not connect a loopback cable to the external CB port when Junos node slicing is enabled on the MX Series router. Also, ensure that the external CB port is not connected to the other CB's external port.

To protect the host server from SSH brute-force attacks, we recommend that you add iptables rules on the host server. The following is an example:

iptables -N SSH_CONNECTIONS_LIMIT
iptables -A INPUT -i jmgmt0 -p tcp -m tcp --dport 22 -m state --state NEW -j SSH_CONNECTIONS_LIMIT
iptables -A SSH_CONNECTIONS_LIMIT -m recent --set --name SSH --rsource
iptables -A SSH_CONNECTIONS_LIMIT -m recent --update --seconds 120 --hitcount 10 --name SSH --rsource -j DROP
iptables -A SSH_CONNECTIONS_LIMIT -j ACCEPT

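These rules rate-limit incoming SSH connections on the jmgmt0 interface. Each new SSH connection is recorded against its source IP address, and when a remote IP address reaches a given number of new connection attempts within a given time window, further attempts from that address are dropped. In this example, once a remote IP address has made 10 SSH connection attempts within 120 seconds, connections from that address are blocked until fewer than 10 of its attempts fall within the last 120 seconds; an address that keeps retrying therefore stays blocked.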
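If you manage several host servers, it can help to generate these rules from parameters instead of editing them by hand. The following is a minimal sketch, assuming a POSIX-compatible shell; ssh_rate_limit_rules is a hypothetical helper, not part of Junos node slicing. It only prints the same five iptables commands shown above, with the interface, time window, and hit count as arguments.

```bash
# Hypothetical helper: print the SSH rate-limiting iptables rules shown
# above. Arguments (all optional): interface, window in seconds, hit count.
ssh_rate_limit_rules() {
  iface="${1:-jmgmt0}"    # host interface to protect
  seconds="${2:-120}"     # time window tracked by the "recent" match
  hits="${3:-10}"         # recorded attempts in the window that trigger DROP
  chain="SSH_CONNECTIONS_LIMIT"
  echo "iptables -N $chain"
  echo "iptables -A INPUT -i $iface -p tcp -m tcp --dport 22 -m state --state NEW -j $chain"
  echo "iptables -A $chain -m recent --set --name SSH --rsource"
  echo "iptables -A $chain -m recent --update --seconds $seconds --hitcount $hits --name SSH --rsource -j DROP"
  echo "iptables -A $chain -j ACCEPT"
}
```

For example, ssh_rate_limit_rules jmgmt0 120 10 prints exactly the rules in the example. Review the printed commands, then run them as root on the host server.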

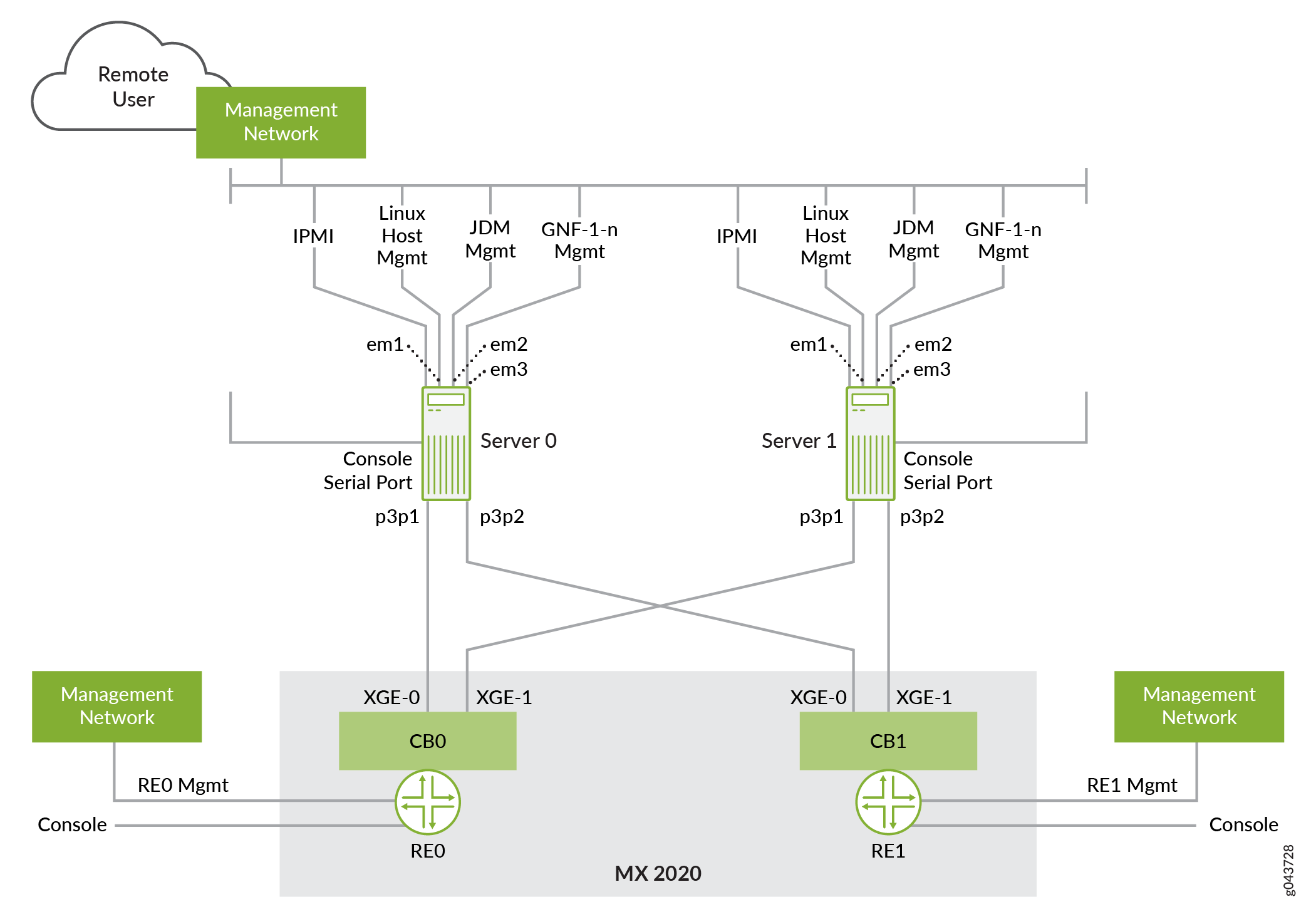

Figure 1 shows how an MX2020 router is connected to a pair of x86 external servers.

According to the example in Figure 1, em1, em2, and em3 on the x86 servers are the ports that are used for the management of the Linux host, the JDM, and the GNFs, respectively. The ports p3p1 and p3p2 on each server are the two 10-Gbps ports that are connected to the Control Boards of the MX Series router.

The names of interfaces on the server, such as em1 and p3p1, might vary according to the server hardware configuration.

For more information on the XGE ports of the MX Series router Control Board (CB) mentioned in Figure 1, see:

SCBE2-MX Description (for MX960 and MX480)

Note: The XGE port numbers are not labeled on the SCBE2. On a vertically oriented SCBE2, the upper port is XGE-0 and the lower port is XGE-1. On a horizontally oriented SCBE2, the left port is XGE-0 and the right port is XGE-1.

REMX2K-X8-64G and REMX2K-X8-64G-LT CB-RE Description (for MX2010 and MX2020)

Use the show chassis ethernet-switch command to view these XGE ports. In the command output on MX960, refer to port numbers 24 and 26 to view these ports on the SCBE2. In the command output on MX2010 and MX2020, refer to port numbers 26 and 27 to view these ports on the Control Board-Routing Engine (CB-RE).

x86 Server CPU BIOS Settings

For Junos node slicing, the BIOS of the x86 server CPUs should be set up such that:

Hyperthreading is disabled.

The CPU cores always run at their rated frequency.

The CPU cores are set to reduce jitter by limiting C-state use.

To find the rated frequency of the CPU cores on the server, run the Linux host command lscpu and check the value of the Model name field. See the following example:

Linux server0:~# lscpu

..

Model name: Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz

..
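To read the rated frequency without inspecting the output by eye, you can extract it from the Model name field. This is a minimal sketch, assuming that the model string ends in "@ <frequency>GHz" as in the Intel Xeon example above (not every CPU reports it this way); rated_mhz is a hypothetical helper name.

```bash
# Hypothetical helper: read lscpu output on stdin and print the rated
# frequency in MHz, taken from the "@ <n>GHz" suffix of the Model name field.
# Prints nothing if the Model name field has no "@" frequency.
rated_mhz() {
  awk -F'@' '/^Model name:/ {
    ghz = $2
    gsub(/[^0-9.]/, "", ghz)               # " 2.50GHz" -> "2.50"
    if (ghz != "") printf "%d\n", ghz * 1000 + 0.5
    exit
  }'
}
```

For example, LC_ALL=C lscpu | rated_mhz prints 2500 on the server shown above. Setting LC_ALL=C keeps the lscpu field names in English on localized systems.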

To find the frequency at which the CPU cores are currently running, run the Linux host command grep MHz /proc/cpuinfo and check the value for each CPU core.

On a server that has the BIOS set to operate the CPU cores at their rated frequency, the observed values for the CPU cores will all match the rated frequency (or be very close to it), as shown in the following example.

Linux server0:~# grep MHz /proc/cpuinfo

…

cpu MHz : 2499.902

cpu MHz : 2500.000

cpu MHz : 2500.000

cpu MHz : 2499.902

…

On a server that does not have the BIOS set to operate the CPU cores at their rated frequency, the observed values for the CPU cores do not match the rated frequency, and the values could also vary with time (you can check this by rerunning the command).

Linux server0:~# grep MHz /proc/cpuinfo

…

cpu MHz : 1200.562

cpu MHz : 1245.468

cpu MHz : 1217.625

cpu MHz : 1214.156
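Because the observed values fluctuate slightly even on a correctly configured server, a scripted check needs a tolerance. The following minimal sketch compares every cpu MHz value with the rated frequency; the helper name check_core_mhz and its default tolerance of 1 percent are illustrative assumptions, not values defined for Junos node slicing.

```bash
# Hypothetical helper: read /proc/cpuinfo-format text on stdin and check
# that every "cpu MHz" value is within a tolerance of the rated frequency.
# Arguments: rated frequency in MHz, optional tolerance in percent
# (default 1, an illustrative value). Prints each out-of-range value and
# returns nonzero if any value is out of range or none was found.
check_core_mhz() {
  rated="$1"
  tol="${2:-1}"
  awk -F: -v rated="$rated" -v tol="$tol" '
    /^cpu MHz/ {
      n++
      dev = ($2 - rated) / rated * 100      # deviation in percent
      if (dev < 0) dev = -dev
      if (dev > tol) { bad++; printf "cpu MHz %.3f is out of range\n", $2 }
    }
    END { exit (n == 0 || bad > 0) }'
}
```

For example, check_core_mhz 2500 < /proc/cpuinfo succeeds on the first server shown above and lists the out-of-range values on the second. Because the values can vary with time, rerun the check a few times.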

To set the x86 server BIOS system profile to operate the CPU cores at their rated frequency, reduce jitter, and disable hyperthreading, consult the server manufacturer, because these settings vary with server model and BIOS versions.

Typical BIOS system profile settings to achieve this include:

Logical processor: set to Disabled.

CPU power management: set to Maximum performance.

Memory frequency: set to Maximum performance.

Turbo boost: set to Disabled.

C-states and C1E state: set to Disabled.

Energy efficient policy: set to Performance.

Monitor/Mwait: set to Disabled.

A custom BIOS system profile might be required to set these values.
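After changing the BIOS profile, you can confirm from the Linux host that hyperthreading is off: lscpu reports one thread per core when the logical processor setting is disabled. A minimal sketch, with ht_disabled as a hypothetical helper name:

```bash
# Hypothetical helper: read lscpu output on stdin and succeed only if it
# reports one thread per core, that is, hyperthreading is off.
ht_disabled() {
  awk -F: '/^Thread\(s\) per core:/ {
    v = $2
    gsub(/[ \t]/, "", v)
    found = 1
    one = (v == "1")
  }
  END { exit !(found && one) }'
}
```

For example: LC_ALL=C lscpu | ht_disabled && echo "hyperthreading is disabled"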

x86 Server Linux GRUB Configuration

In Junos node slicing, each GNF VM is assigned dedicated CPU cores. This assignment is managed by Juniper Device Manager (JDM). On each x86 server, JDM requires that all CPU cores other than CPU cores 0 and 1 be reserved for Junos node slicing, which in effect means that these cores must be isolated from other applications. CPU cores 2 and 3 are dedicated to GNF virtual disk and network I/O. CPU cores 4 and above are available for assignment to GNF VMs. To reserve these CPU cores, you must set the isolcpus parameter in the Linux GRUB configuration as described in the following procedure:

For x86 servers running Red Hat Enterprise Linux (RHEL) 7.3 or RHEL 9.4, perform the following steps:

Determine the number of CPU cores on the x86 server. Ensure that hyperthreading has already been disabled, as described in x86 Server CPU BIOS Settings. You can use the Linux command lscpu to find the total number of CPU cores, as shown in the following example:

Linux server0:~# lscpu
…
Cores per socket: 12
Sockets: 2
…

Here, there are 24 cores (12 x 2). The CPU cores are numbered as core 0 to core 23.

As per this example, the isolcpus parameter must be set to 'isolcpus=4-23' (isolate all CPU cores other than cores 0, 1, 2, and 3 for use by the GNF VMs). The isolcpus parameter is set to 'isolcpus=4-23' because of the following:

On each x86 server, JDM requires that all CPU cores other than CPU cores 0 and 1 be reserved for Junos node slicing.

CPU cores 2 and 3 are dedicated for GNF virtual disk and network I/O.

Note: Previously, the isolcpus parameter 'isolcpus=2-23' was used. This has now been updated to 'isolcpus=4-23'. For more information, see KB35301.

To set the isolcpus parameter in the Linux GRUB configuration file, follow the procedures specific to your host OS version. For RHEL 7, the procedure is described in the section Isolating CPUs from the process scheduler in this Red Hat document. For RHEL 9, the information on modifying the kernel command line is described in this Red Hat document. A summary of the procedures is as follows:

Edit the Linux GRUB file /etc/default/grub to append the isolcpus parameter to the variable GRUB_CMDLINE_LINUX, as shown in the following example:

GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb quiet isolcpus=4-23"

Run the Linux shell command grub2-mkconfig to generate the updated GRUB file, as shown below.

If you are using legacy BIOS, issue the following command:

# grub2-mkconfig -o /boot/grub2/grub.cfg

If you are using UEFI, issue the following command:

# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

In RHEL 9.3 and later, issue the following command for either legacy BIOS or UEFI systems:

# grub2-mkconfig -o /boot/grub2/grub.cfg --update-bls-cmdline

Reboot the x86 server.

Verify that the isolcpus parameter has now been set by checking the output of the Linux command cat /proc/cmdline, as shown in the following example:

# cat /proc/cmdline
BOOT_IMAGE=/vmlinuz-3.10.0-327.36.3.el7.x86_64 … quiet isolcpus=4-23
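The core-count and GRUB-editing steps above can also be scripted. This is a minimal sketch, not a supported tool: isolcpus_range and add_isolcpus are hypothetical helper names, and the sketch assumes that hyperthreading is already disabled (so the core count is cores per socket multiplied by sockets), that the GRUB variable's value is on one line in double quotes, and that GNU sed is available for in-place editing.

```bash
# Hypothetical helper: read lscpu output on stdin and print the isolcpus
# range, leaving cores 0-3 unisolated (0 and 1 for the host, 2 and 3 for
# GNF virtual disk and network I/O).
isolcpus_range() {
  awk -F: '
    /^Core.* per socket:/ { per_socket = $2 + 0 }
    /^Socket/             { sockets = $2 + 0 }
    END {
      total = per_socket * sockets
      if (total <= 4) exit 1            # no cores left for GNF VMs
      printf "4-%d\n", total - 1        # cores are numbered from 0
    }'
}

# Hypothetical helper: set isolcpus=<range> in the GRUB variable <var> of
# <file>, replacing an existing isolcpus value or appending a new one.
add_isolcpus() {
  file="$1"; var="$2"; range="$3"
  if grep -q "^${var}=\".*isolcpus=" "$file"; then
    sed -i -E "/^${var}=/ s/isolcpus=[^ \"]*/isolcpus=${range}/" "$file"
  else
    sed -i -E "s/^(${var}=\".*)\"\$/\1 isolcpus=${range}\"/" "$file"
  fi
}
```

For example, on the 24-core server above, LC_ALL=C lscpu | isolcpus_range prints 4-23. After backing up /etc/default/grub, add_isolcpus /etc/default/grub GRUB_CMDLINE_LINUX 4-23 edits the file in place; then regenerate the GRUB configuration with grub2-mkconfig and reboot, as described above. On Ubuntu, pass GRUB_CMDLINE_LINUX_DEFAULT as the variable name instead.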

For x86 servers running Ubuntu 24.04, perform the following steps:

Determine the number of CPU cores on the x86 server. Ensure that hyperthreading has already been disabled, as described in x86 Server CPU BIOS Settings. You can use the Linux command lscpu to find the total number of CPU cores.

Edit the /etc/default/grub file to append the isolcpus parameter to the variable GRUB_CMDLINE_LINUX_DEFAULT, as shown in the following example:

GRUB_CMDLINE_LINUX_DEFAULT="intel_pstate=disable processor.ignore_ppc=1 isolcpus=4-23"

To apply the changes, run update-grub.

Reboot the server.

Verify that the isolcpus parameter has now been set by checking the output of the Linux command cat /proc/cmdline.
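On either host OS, this verification can be scripted. A minimal sketch, with check_isolcpus as a hypothetical helper name; the optional second argument exists only so that the function can be tried against a file other than /proc/cmdline.

```bash
# Hypothetical helper: print the isolcpus value from the kernel command line
# and succeed only if it equals the expected range.
# Arguments: expected range (for example 4-23), optional cmdline file.
check_isolcpus() {
  expected="$1"
  cmdline_file="${2:-/proc/cmdline}"
  actual="$(tr ' ' '\n' < "$cmdline_file" | sed -n 's/^isolcpus=//p')"
  echo "isolcpus=${actual:-<not set>}"
  [ "$actual" = "$expected" ]
}
```

For example, after the reboot, check_isolcpus 4-23 prints isolcpus=4-23 and returns 0 on a server configured as in the example.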

Updating Intel X710 NIC Driver for x86 Servers

If you are using an Intel X710 NIC, ensure that the latest driver (2.4.10 or later) is installed on the x86 servers and that the X710 NIC firmware version is 18.5.17 or later.

First, identify the X710 NIC interface on the servers (for example, p3p1). You can then check the NIC driver and firmware versions by running the Linux command ethtool -i interface. See the following example:

root@Linux server0# ethtool -i p3p1

driver: i40e

version: 2.4.10

firmware-version: 5.05 0x80002899 18.5.17

...

Refer to the Intel support page for instructions on updating the driver.
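You can compare the reported versions against the minimums above with a short script. A minimal sketch, assuming GNU sort (for sort -V) and that the NVM version (18.5.17 in the example) is the last field of the firmware-version line; version_ge and x710_versions_ok are hypothetical helper names. On recent kernels, the in-tree i40e driver may report the kernel version in the version field instead of a driver version, so treat a pass from the in-tree driver with caution.

```bash
# Hypothetical helper: succeed if dotted version $1 is >= version $2.
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: read "ethtool -i <interface>" output on stdin, print
# the driver and firmware (NVM) versions, and succeed only if both meet the
# minimums stated above (driver 2.4.10, firmware 18.5.17).
x710_versions_ok() {
  info="$(cat)"
  drv="$(printf '%s\n' "$info" | awk -F': ' '$1 == "version" { print $2 }')"
  fw="$(printf '%s\n' "$info" | awk '$1 == "firmware-version:" { print $NF }')"
  echo "driver=${drv} firmware=${fw}"
  version_ge "$drv" 2.4.10 && version_ge "$fw" 18.5.17
}
```

For example: ethtool -i p3p1 | x710_versions_ok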

Updating the host OS may replace the Intel X710 NIC driver. Therefore, ensure that the host OS is up to date prior to updating the Intel X710 NIC driver.

You need the following packages for building the driver:

For Red Hat:

kernel-devel

Development Tools

For Ubuntu:

make

gcc

If you are using Red Hat, run the following commands to install the packages:

root@Linux server0# yum install kernel-devel
root@Linux server0# yum group install "Development Tools"

If you are using Ubuntu, run the following commands to install the packages:

root@Linux server0# apt-get install make
root@Linux server0# apt-get install gcc
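If you prepare servers running both host OSs, a small helper can pick the right commands from /etc/os-release. A minimal sketch: driver_build_prereqs is a hypothetical helper name, it relies on the ID field of os-release (rhel or ubuntu), and it only prints the commands listed above so that you can review them before running them as root.

```bash
# Hypothetical helper: print the driver build prerequisite install commands
# for the host OS named by the ID field of an os-release file.
# Argument: optional absolute path to the os-release file.
driver_build_prereqs() {
  osrel="${1:-/etc/os-release}"
  id="$(. "$osrel" && echo "$ID")"   # source in a subshell; read ID only
  case "$id" in
    rhel)
      echo 'yum install kernel-devel'
      echo 'yum group install "Development Tools"'
      ;;
    ubuntu)
      echo 'apt-get install make'
      echo 'apt-get install gcc'
      ;;
    *)
      echo "unsupported host OS: ${id:-unknown}" >&2
      return 1
      ;;
  esac
}
```

For example, running driver_build_prereqs on an Ubuntu 24.04 server prints the two apt-get commands.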

After updating the Intel X710 NIC driver, you might notice the following message in the host OS log:

"i40e: module verification failed: signature and/or required key missing - tainting kernel"

Ignore this message. It appears because the updated NIC driver module has superseded the base version of the driver that was packaged with the host OS.


Installing Additional Packages for JDM

The x86 servers must have Red Hat Enterprise Linux (RHEL) 7.3, RHEL 9.4, or Ubuntu 24.04 LTS installed.

The x86 servers must have the virtualization packages installed.

For RHEL 9.4, ensure that podman and containernetworking-plugins are installed. If not, use the DNF utility to install the following additional packages, which can be downloaded from the Red Hat Customer Portal.

- podman
- containernetworking-plugins

For RHEL 7.3, install the following additional packages, which can be downloaded from the Red Hat Customer Portal.

- python-psutil-1.2.1-1.el7.x86_64.rpm
- net-snmp-5.7.2-24.el7.x86_64.rpm
- net-snmp-libs-5.7.2-24.el7.x86_64.rpm
- libvirt-snmp-0.0.3-5.el7.x86_64.rpm

Only for Junos OS Releases 17.4R1 and earlier, and for 18.1R1, if you are running RHEL 7.3, also install the following additional package:

- libstdc++-4.8.5-11.el7.i686.rpm

Note:
- The package version numbers shown are the minimum versions. Newer versions might be available in the latest RHEL 7.3 and RHEL 9.4 patches.
- The libstdc++ package extension .i686 indicates that it is a 32-bit package.
- For RHEL, we recommend that you install the packages using the yum or dnf command.

For Ubuntu 24.04, install the following packages:

- python-psutil

Only for Junos OS Releases 17.4R1 and earlier, and for 18.1R1, if you are running Ubuntu, also install the following additional package:

- libstdc++6:i386

For Ubuntu, you can use the apt-get command to install the latest version of these packages. For example, use:

- the command apt-get install python-psutil to install the latest version of the python-psutil package.
- the command apt-get install libstdc++6:i386 to install the latest version of the libstdc++6 package (the extension :i386 indicates that the package being installed is a 32-bit version).
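The package lists above depend on both the host OS and the Junos OS release. The following minimal sketch encodes those lists so that you can see what applies to a given server; jdm_packages and needs_libstdcxx are hypothetical helper names, Junos OS releases are assumed to be written as <major>.<minor>R<number> (for example, 18.1R1), and the sketch prints package names only, not the minimum versions listed above. It does not check what is already installed.

```bash
# Hypothetical helper: succeed for Junos OS releases that need the 32-bit
# libstdc++ package (17.4R1 and earlier, and 18.1R1).
needs_libstdcxx() {
  echo "$1" | awk -F'[.R]' '{
    maj = $1 + 0; min = $2 + 0; rel = $3 + 0
    old = (maj < 17) || (maj == 17 && min < 4) || (maj == 17 && min == 4 && rel <= 1)
    exit !(old || (maj == 18 && min == 1 && rel == 1))
  }'
}

# Hypothetical helper: print the additional JDM packages for a host OS
# (os-release ID and VERSION_ID) and a Junos OS release.
jdm_packages() {
  os="$1"; ver="$2"; junos="$3"
  case "$os:$ver" in
    rhel:7.3)
      echo "python-psutil net-snmp net-snmp-libs libvirt-snmp"
      if needs_libstdcxx "$junos"; then echo "libstdc++.i686"; fi
      ;;
    rhel:9.4)
      echo "podman containernetworking-plugins"
      ;;
    ubuntu:24.04)
      echo "python-psutil"
      if needs_libstdcxx "$junos"; then echo "libstdc++6:i386"; fi
      ;;
    *)
      echo "unsupported host OS: $os $ver" >&2
      return 1
      ;;
  esac
}
```

For example, jdm_packages ubuntu 24.04 18.1R1 prints python-psutil and libstdc++6:i386 on separate lines.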

Completing the Connection Between the Servers and the Router

Complete the following steps before you start installing the JDM:

Ensure that the MX Series router is connected to the x86 servers as described in Connecting the Servers and the Router.

Power on the two x86 servers and both the Routing Engines on the MX Series router.

Identify the Linux host management port on both x86 servers, for example, em1.

Identify the ports to be assigned for the JDM and the GNF management ports, for example, em2 and em3.

Identify the two 10-Gbps ports that are connected to the Control Boards on the MX Series router, for example, p3p1 and p3p2.
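Before installing JDM, you can confirm that the interfaces you identified exist on each server. A minimal sketch: check_ifaces is a hypothetical helper name, the interface names in the example are the ones used in this section (yours might differ), and the SYSFS_NET variable exists only so that the function can be tried against a directory other than /sys/class/net.

```bash
# Hypothetical helper: report whether each named interface exists and, if
# so, its operational state as reported under sysfs.
check_ifaces() {
  base="${SYSFS_NET:-/sys/class/net}"
  missing=0
  for ifc in "$@"; do
    if [ -e "$base/$ifc" ]; then
      state="$(cat "$base/$ifc/operstate" 2>/dev/null || echo unknown)"
      echo "found:   $ifc (operstate: $state)"
    else
      echo "missing: $ifc"
      missing=1
    fi
  done
  return "$missing"
}
```

For example: check_ifaces em1 em2 em3 p3p1 p3p2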