Cloud-Native Router Operator Service Module: Host-Based Routing

The Cloud-Native Router Operator Service Module is an operator framework that we use to develop cRPD applications and solutions. This section describes how to use the Service Module to implement a host-based routing solution for your Kubernetes cluster.

Overview

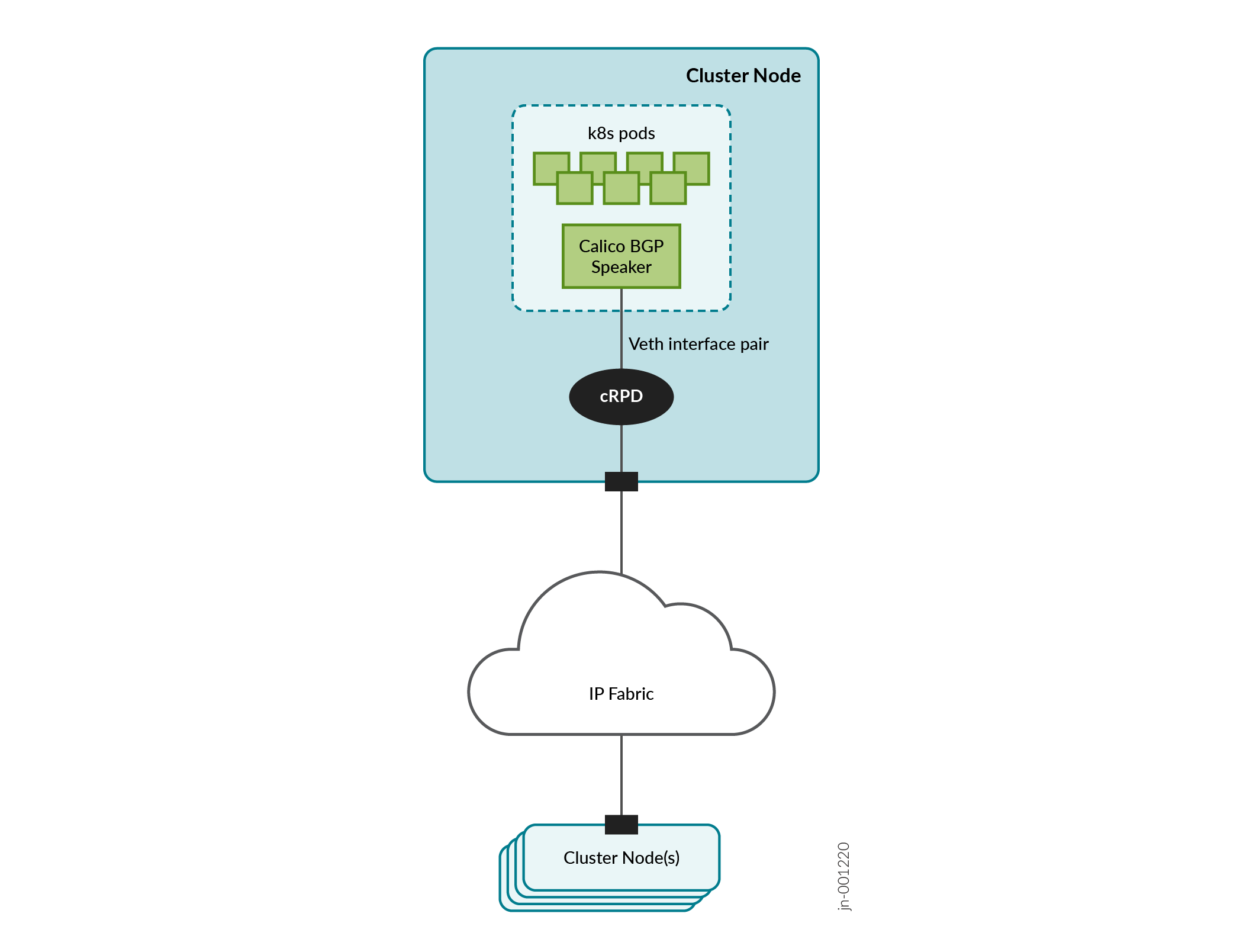

We provide the Cloud-Native Router Operator Service Module to implement a cRPD host-based routing solution for your Kubernetes cluster. Host-based routing, also known as host-based networking, refers to using the host's network namespace instead of the Kubernetes default namespace for the pod network. In the context of Cloud-Native Router, this means that pod-to-pod traffic traverses an external (to the cluster) network provided by cRPD.

The Kubernetes CNI exposes container network endpoints through BGP to a co-located (but independent) cRPD instance acting as a BGP peer. Packets between pods are routed by the Kubernetes CNI to this cRPD instance. This cRPD instance, in turn, routes the packets to the cRPD instance on the destination node for hand-off to the destination Kubernetes CNI for delivery to the destination pod.
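To make the hop sequence concrete, here is a toy Python model of the path a packet takes between pods on two different nodes. It only returns labels. The node names (`node1`, `node4`) and the `forwarding_path` function are hypothetical, and same-node traffic is deliberately excluded because the description above covers only the inter-node case.

```python
# Toy model of the pod-to-pod path described above. Hop labels and node
# names are illustrative only; nothing here touches a real network.
from typing import List


def forwarding_path(src_node: str, dst_node: str) -> List[str]:
    """Return the ordered hops for a packet between pods on two different nodes."""
    if src_node == dst_node:
        # Same-node traffic is outside the scope of this sketch.
        raise ValueError("this sketch models only inter-node traffic")
    return [
        f"{src_node}/pod",
        f"{src_node}/cni",   # Kubernetes CNI (for example, Calico) on the source node
        f"{src_node}/crpd",  # co-located cRPD instance, the CNI's BGP peer
        f"{dst_node}/crpd",  # cRPD instance on the destination node
        f"{dst_node}/cni",   # hand-off to the destination CNI
        f"{dst_node}/pod",
    ]


if __name__ == "__main__":
    print(" -> ".join(forwarding_path("node1", "node4")))
```

Running it prints `node1/pod -> node1/cni -> node1/crpd -> node4/crpd -> node4/cni -> node4/pod`.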

By taking this approach to Kubernetes host networking, we leverage Cloud-Native Router to provide a more complete pod networking implementation that supports common data center protocols such as EVPN-VXLAN and MPLS over UDP.

Figure 1 shows a Kubernetes cluster leveraging cRPD for host-based routing. The Calico BGP speaker in the cluster connects through a virtual Ethernet (veth) interface to a co-located cRPD instance attached to the IP fabric interconnecting cluster nodes.
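On the Calico side, the peering shown in Figure 1 could be expressed as a Calico `BGPPeer` resource (API version `projectcalico.org/v3`). The sketch below builds one as a Python dictionary and prints it as JSON. The node name `node1`, the resource name, and AS number 64512 (a private ASN) are hypothetical placeholders; 10.1.1.2 is Node 1's veth-crpd address from Table 1. This is not necessarily how the provided Helm chart configures the peering.

```python
# Sketch: build a Calico BGPPeer resource that points one node's Calico
# BGP speaker at its co-located cRPD instance over the veth pair.
# The node name and AS number are hypothetical placeholders.
import json


def calico_bgp_peer(node_name: str, crpd_veth_ip: str, crpd_asn: int) -> dict:
    """Return a projectcalico.org/v3 BGPPeer scoped to a single node."""
    return {
        "apiVersion": "projectcalico.org/v3",
        "kind": "BGPPeer",
        "metadata": {"name": f"{node_name}-crpd"},
        "spec": {
            "node": node_name,       # apply this peering only on this node
            "peerIP": crpd_veth_ip,  # cRPD end of the veth pair (veth-crpd)
            "asNumber": crpd_asn,    # cRPD's AS number (hypothetical)
        },
    }


if __name__ == "__main__":
    # Node 1: veth-crpd is 10.1.1.2 in Table 1; AS 64512 is a placeholder.
    print(json.dumps(calico_bgp_peer("node1", "10.1.1.2", 64512), indent=2))
```

Scoping each peer with `node` keeps every Calico speaker peering only with the cRPD instance on its own host, which matches the one-to-one pairing in the figure.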

To simplify installation, we provide a Helm chart for this host-based routing solution. This section also shows you how to configure and customize cRPD and the underlying network infrastructure for a 5-node cluster (3 control plane nodes and 2 worker nodes).

Install Host-Based Routing

Prepare the Nodes

Each node in the cluster needs two network interfaces:

- one interface for regular management access (for example, SSH)
- one interface for cRPD to connect to the IP fabric
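A quick pre-flight check can confirm that a node actually has both interfaces before you continue. This sketch uses Python's `socket.if_nameindex()`; the management interface name `ens33` is a hypothetical placeholder, and `ens41` is the fabric interface name used in Table 1, which may differ in your setup.

```python
# Pre-flight sketch: confirm a node has the two interfaces this setup expects.
# "ens41" matches the fabric interface in Table 1; "ens33" is a hypothetical
# management interface name. Adjust both for your hosts.
import socket
from typing import Iterable, List, Optional


def missing_interfaces(required: Iterable[str],
                       available: Optional[Iterable[str]] = None) -> List[str]:
    """Return the required interface names that are not present on this host."""
    if available is None:
        # socket.if_nameindex() returns (index, name) pairs for local interfaces.
        available = [name for _, name in socket.if_nameindex()]
    present = set(available)
    return [name for name in required if name not in present]


if __name__ == "__main__":
    missing = missing_interfaces(["ens33", "ens41"])
    print("missing:", missing or "none")
```

Passing `available` explicitly makes the function easy to test without depending on the host's real interfaces.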

Create Virtual Ethernet Interface (VETH) Pairs and Configure Static Routes

Before we bring up the Kubernetes cluster and cRPD, we'll create the veth interfaces that connect them and configure static routes that direct traffic to the cRPD instance.

On each node, we'll run the Kubernetes cluster (including the Calico BGP speaker) in the default network namespace, and we'll run cRPD in a namespace called crpd. A veth pair connects the two namespaces: veth-k8s sits in the default namespace and veth-crpd sits in the crpd namespace.
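The namespace and veth pair described above can be created with iproute2. The sketch below only prints the commands (run them as root on each node); it assumes the crpd namespace is a named network namespace managed with `ip netns`, which may differ from how your cRPD container's namespace is actually created. Addresses are left out here; see Table 1 for the per-node values.

```python
# Sketch: print the iproute2 commands that create the crpd network namespace
# and a veth pair linking it to the default namespace. This script changes
# nothing itself; run the printed commands as root.
from typing import List

NETNS = "crpd"
HOST_END = "veth-k8s"   # stays in the default network namespace
CRPD_END = "veth-crpd"  # moved into the crpd namespace


def veth_setup_commands() -> List[str]:
    return [
        f"ip netns add {NETNS}",                               # named netns for cRPD
        f"ip link add {HOST_END} type veth peer name {CRPD_END}",
        f"ip link set {CRPD_END} netns {NETNS}",               # move one end into crpd
        f"ip link set {HOST_END} up",
        f"ip netns exec {NETNS} ip link set {CRPD_END} up",
    ]


if __name__ == "__main__":
    print("\n".join(veth_setup_commands()))
```

The peer end must be moved into the namespace before it is brought up there, which is why the `netns` step precedes the `ip netns exec ... up` command.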

Table 1 shows this layout along with the IP addresses used in our example, including both IPv4 and IPv6 addresses for a dual-stack deployment. If you're running IPv4 only, ignore the IPv6 settings.

| Node | Namespace | Interface | IPv4 Address | IPv6 Address |
|---|---|---|---|---|
| Node 1 (control plane) | default | veth-k8s | 10.1.1.1/30 | 2001:db8:1::1/126 |
| | crpd | veth-crpd | 10.1.1.2/30 | 2001:db8:1::2/126 |
| | | ens41¹ | 192.168.1.101/24 | |
| Node 2 (control plane) | default | veth-k8s | 10.1.2.1/30 | 2001:db8:2::1/126 |
| | crpd | veth-crpd | 10.1.2.2/30 | 2001:db8:2::2/126 |
| | | ens41¹ | 192.168.1.102/24 | |
| Node 3 (control plane) | default | veth-k8s | 10.1.3.1/30 | 2001:db8:3::1/126 |
| | crpd | veth-crpd | 10.1.3.2/30 | 2001:db8:3::2/126 |
| | | ens41¹ | 192.168.1.103/24 | |
| Node 4 (worker) | default | veth-k8s | 10.1.4.1/30 | 2001:db8:4::1/126 |
| | crpd | veth-crpd | 10.1.4.2/30 | 2001:db8:4::2/126 |
| | | ens41¹ | 192.168.1.104/24 | |
| Node 5 (worker) | default | veth-k8s | 10.1.5.1/30 | 2001:db8:5::1/126 |
| | crpd | veth-crpd | 10.1.5.2/30 | 2001:db8:5::2/126 |
| | | ens41¹ | 192.168.1.105/24 | |

¹ This is the physical underlay interface connecting cRPD to the IP fabric. The interface name in your setup may differ.
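Because each veth pair is a point-to-point link, both of its ends must fall in the same /30 (IPv4) or /126 (IPv6) subnet. The sketch below encodes the veth rows of Table 1 and uses Python's `ipaddress` module to flag any pair whose ends land in different subnets. `VETH_PLAN` and `mismatched_pairs` are hypothetical names chosen for this example.

```python
# Sketch: encode the veth addressing from Table 1 and check that both ends of
# each veth pair sit in the same subnet (/30 for IPv4, /126 for IPv6).
import ipaddress
from typing import Dict, List, Tuple

# node number -> (veth-k8s addresses, veth-crpd addresses), from Table 1
VETH_PLAN: Dict[int, Tuple[List[str], List[str]]] = {
    1: (["10.1.1.1/30", "2001:db8:1::1/126"], ["10.1.1.2/30", "2001:db8:1::2/126"]),
    2: (["10.1.2.1/30", "2001:db8:2::1/126"], ["10.1.2.2/30", "2001:db8:2::2/126"]),
    3: (["10.1.3.1/30", "2001:db8:3::1/126"], ["10.1.3.2/30", "2001:db8:3::2/126"]),
    4: (["10.1.4.1/30", "2001:db8:4::1/126"], ["10.1.4.2/30", "2001:db8:4::2/126"]),
    5: (["10.1.5.1/30", "2001:db8:5::1/126"], ["10.1.5.2/30", "2001:db8:5::2/126"]),
}


def mismatched_pairs(plan: Dict[int, Tuple[List[str], List[str]]]) -> List[Tuple[int, str, str]]:
    """Return (node, k8s_addr, crpd_addr) for every pair not on a single subnet."""
    problems = []
    for node, (k8s_addrs, crpd_addrs) in plan.items():
        # Pair the IPv4 ends together and the IPv6 ends together.
        for k8s, crpd in zip(k8s_addrs, crpd_addrs):
            if ipaddress.ip_interface(k8s).network != ipaddress.ip_interface(crpd).network:
                problems.append((node, k8s, crpd))
    return problems


if __name__ == "__main__":
    print(mismatched_pairs(VETH_PLAN) or "all veth pairs share a subnet")
```

An empty list means the plan is consistent; a slip such as reusing another node's IPv6 prefix on one end shows up as a `(node, k8s_addr, crpd_addr)` tuple.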

Perform the following steps on all nodes in the cluster. Remember to set the IP addresses for the different nodes as shown in Table 1.
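The per-node values in Table 1 follow a simple pattern (node n uses 10.1.n.0/30 and 2001:db8:n::/126), so the address and static-route commands can be generated rather than typed by hand. This sketch assumes the veth pair and the crpd namespace already exist, and that crpd is a named namespace usable with `ip netns exec`. The route prefixes `10.1.0.0/16` and `2001:db8::/32` are hypothetical aggregates standing in for whatever destinations your deployment actually sends through cRPD. Omit the `-6` commands for an IPv4-only deployment.

```python
# Sketch: render per-node address and static-route commands, deriving each
# node's veth addresses from the Table 1 pattern. The route prefixes below
# are HYPOTHETICAL aggregates; replace them with your real destinations.
from typing import List

NETNS = "crpd"
ROUTE_V4 = "10.1.0.0/16"    # hypothetical: covers every node's veth /30
ROUTE_V6 = "2001:db8::/32"  # hypothetical: covers every node's veth /126


def node_commands(n: int) -> List[str]:
    """Return address and route commands for node n (1-5 in Table 1)."""
    k8s_v4, crpd_v4 = f"10.1.{n}.1/30", f"10.1.{n}.2/30"
    k8s_v6, crpd_v6 = f"2001:db8:{n}::1/126", f"2001:db8:{n}::2/126"
    return [
        f"ip addr add {k8s_v4} dev veth-k8s",
        f"ip -6 addr add {k8s_v6} dev veth-k8s",
        f"ip netns exec {NETNS} ip addr add {crpd_v4} dev veth-crpd",
        f"ip netns exec {NETNS} ip -6 addr add {crpd_v6} dev veth-crpd",
        # Static routes in the default namespace, pointing at cRPD's veth end.
        f"ip route add {ROUTE_V4} via 10.1.{n}.2 dev veth-k8s",
        f"ip -6 route add {ROUTE_V6} via 2001:db8:{n}::2 dev veth-k8s",
    ]


if __name__ == "__main__":
    print("\n".join(node_commands(1)))
```

Each node's own veth subnet is directly connected and more specific than the aggregate, so local traffic on the veth link is unaffected by these routes.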