vMX Overview

SUMMARY Read this topic for an overview of vMX virtual routers.

The vMX router is a virtual version of the MX Series 3D Universal Edge Router. Like the MX Series router, the vMX router runs the Junos operating system (Junos OS) and supports Junos OS packet handling and forwarding modeled after the Trio chipset. Configuration and management of vMX routers are the same as for physical MX Series routers, allowing you to add the vMX router to a network without having to update your operations support systems (OSS).

You install vMX software components on an industry-standard x86 server running a hypervisor, either the kernel-based virtual machine (KVM) hypervisor or the VMware ESXi hypervisor.

For servers running the KVM hypervisor, you also run the Linux operating system and applicable third-party software. vMX software components come in one software package that you install by running an orchestration script included with the package. The orchestration script uses a configuration file that you customize for your vMX deployment. You can install multiple vMX instances on one server.

For servers running the ESXi hypervisor, you run the applicable third-party software.

Some Junos OS software features require a license to activate the feature. To learn more about vMX licenses, see vMX Licenses for KVM and VMware. For general information about license management, see the Licensing Guide. For further details, refer to the product Data Sheets, or contact your Juniper Account Team or Juniper Partner.

Benefits and Uses of vMX Routers

You can use virtual devices to lower your capital expenditure and operating costs, sometimes through automating network operations. Even without automation, use of the vMX application on standard x86 servers enables you to:

Quickly introduce new services

More easily deliver customized and personalized services to customers

Scale operations to push IP services closer to customers or to manage network growth when growth forecasts are low or uncertain

Quickly expand service offerings into new sites

A well-designed automation strategy decreases costs and increases network efficiency. By automating network tasks with the vMX router, you can:

Simplify network operations

Quickly deploy new vMX instances

Efficiently install a default Junos OS configuration on all or selected vMX instances

Quickly reconfigure existing vMX routers

You can deploy the vMX router to meet some specific network edge requirements, such as:

Network simulation

Broadband subscriber termination with a virtual broadband network gateway (vBNG)

Temporary deployment until a physical MX Series router is available

Automation for vMX Routers

Automating network tasks simplifies network configuration, provisioning, and maintenance. Because the vMX software uses the same Junos OS software as MX Series routers and other Juniper Networks routing devices, vMX supports the same automation tools as Junos OS. In addition, you can use standard automation tools to deploy the vMX, as you do other virtualized software.

Architecture of a vMX Instance

The vMX architecture is organized in layers:

The vMX router at the top layer

Third-party software and the hypervisor in the middle layer

In Junos OS Release 15.1F3 and earlier releases, the middle layer consists of Linux, third-party software, and the KVM hypervisor

The x86 server in the physical layer at the bottom

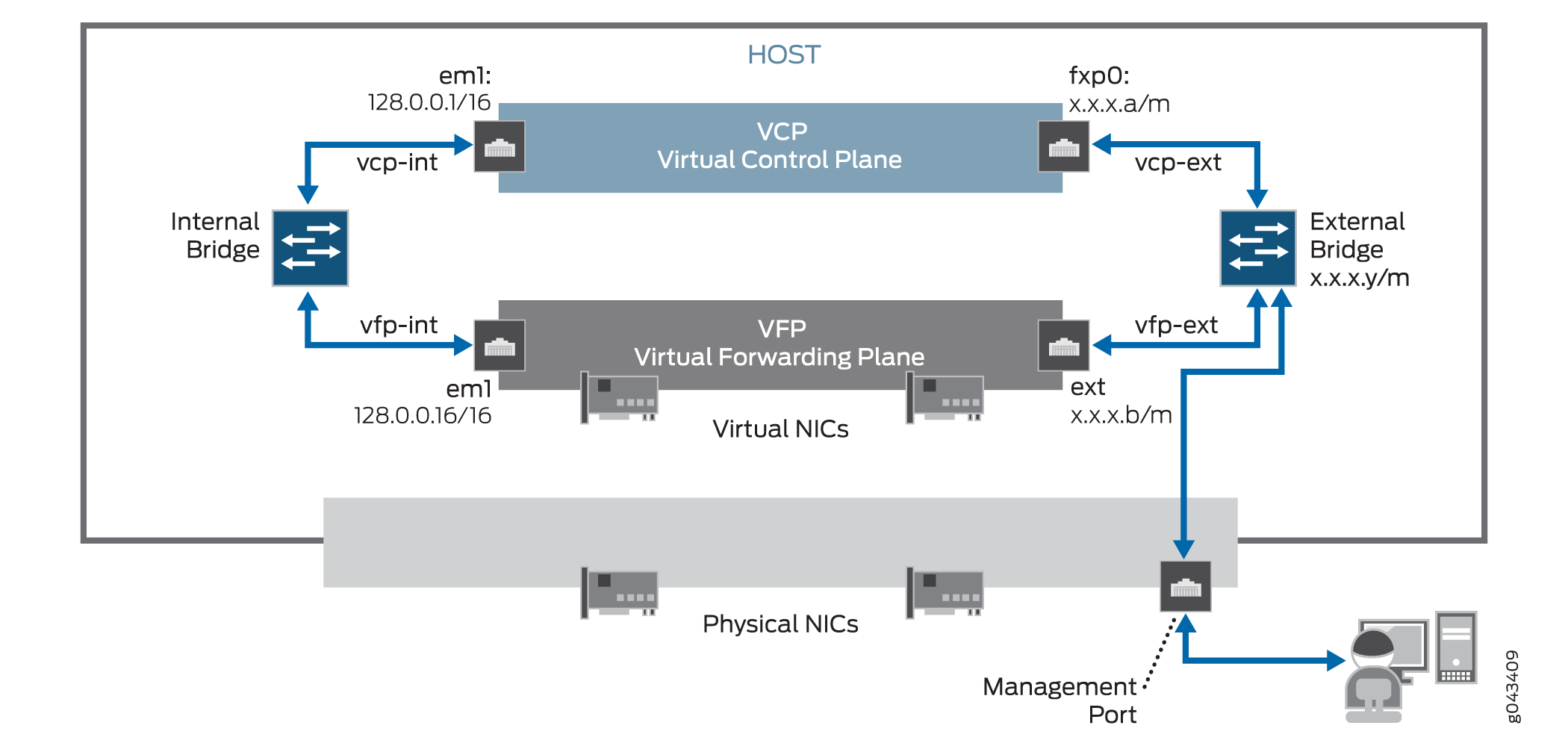

Figure 1 illustrates the architecture of a single vMX instance inside a server. Understanding this architecture can help you plan your vMX configuration.

The physical layer of the server contains the physical NICs, CPUs, memory, and Ethernet management port. The host contains applicable third-party software and the hypervisor.

In Junos OS Release 15.1F3 and earlier releases, the host also contains the Linux operating system.

The vMX instance contains two separate virtual machines (VMs), one for the virtual forwarding plane (VFP) and one for the virtual control plane (VCP). The VFP VM runs the virtual Trio forwarding plane software and the VCP VM runs Junos OS.

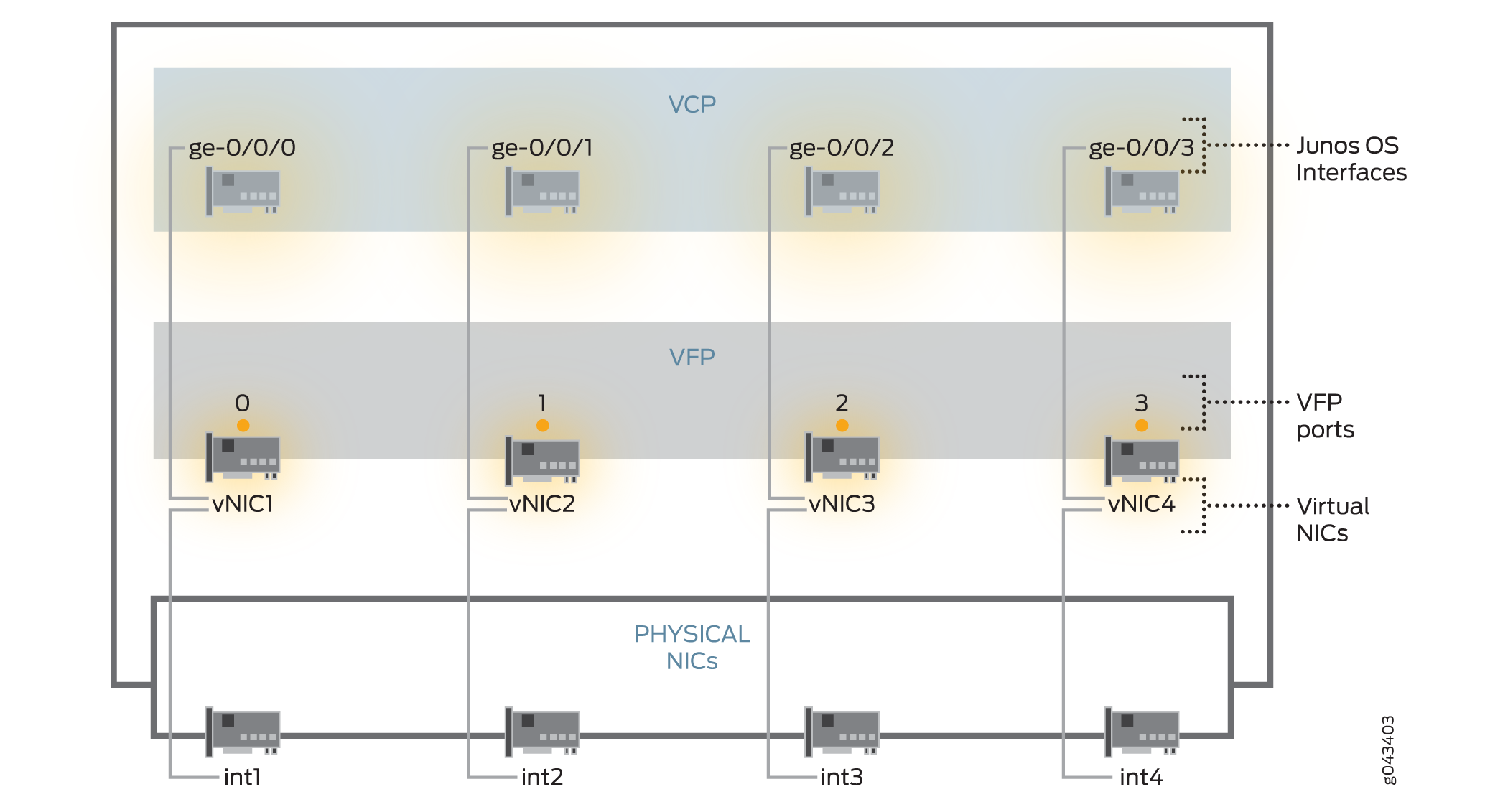

The hypervisor presents the physical NIC to the VFP VM as a virtual NIC. Each virtual NIC maps to a vMX interface. Figure 2 illustrates the mapping.
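The NIC-to-interface relationship can be pictured as a small mapping table. The following is a minimal sketch, assuming a one-to-one mapping between virtual NICs and vMX interfaces; the `NicMapping` record, the `build_interface_map` helper, and the NIC names `eth1`/`vnic0` are hypothetical illustrations, not part of the vMX software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NicMapping:
    """Hypothetical row: physical NIC -> virtual NIC seen by the VFP -> vMX interface."""
    physical_nic: str   # host-side NIC name (illustrative)
    virtual_nic: str    # NIC as presented to the VFP VM by the hypervisor (illustrative)
    vmx_interface: str  # Junos OS interface name configured in the VCP


def build_interface_map(rows):
    """Return {virtual_nic: vmx_interface}, rejecting a NIC or interface used twice."""
    mapping = {}
    used_ifaces = set()
    for row in rows:
        if row.virtual_nic in mapping:
            raise ValueError(f"virtual NIC {row.virtual_nic} mapped twice")
        if row.vmx_interface in used_ifaces:
            raise ValueError(f"vMX interface {row.vmx_interface} mapped twice")
        mapping[row.virtual_nic] = row.vmx_interface
        used_ifaces.add(row.vmx_interface)
    return mapping


rows = [
    NicMapping("eth1", "vnic0", "ge-0/0/0"),
    NicMapping("eth2", "vnic1", "ge-0/0/1"),
]
print(build_interface_map(rows))  # {'vnic0': 'ge-0/0/0', 'vnic1': 'ge-0/0/1'}
```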

The orchestration script maps each virtual NIC to a vMX interface that you specify in the configuration file (supported in Junos OS Release 15.1F3 or earlier releases).

After the vMX instance is created, you use the Junos OS CLI to configure these vMX interfaces in the VCP. The vMX router supports the following types of interface names:

Gigabit Ethernet (ge)

10-Gigabit Ethernet (xe)

100-Gigabit Ethernet (et)

vMX interfaces configured with the Junos OS CLI and the underlying physical NIC on the server are independent of each other in terms of interface type (for example, ge-0/0/0 can be mapped to a 10-Gigabit NIC).
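To make the independence between interface name and NIC speed concrete, the sketch below parses a Junos-style `type-fpc/pic/port` name using only the three prefixes listed above, then pairs it with a NIC speed held in a separate record. The `parse_interface` helper and the `binding` record are hypothetical; they only illustrate that the name's prefix says nothing about the backing NIC.

```python
import re

# Prefixes supported by the vMX router, per the list above.
INTERFACE_TYPES = {
    "ge": "Gigabit Ethernet",
    "xe": "10-Gigabit Ethernet",
    "et": "100-Gigabit Ethernet",
}

# Junos-style name: <type>-<fpc>/<pic>/<port>, for example ge-0/0/0
NAME_RE = re.compile(r"^(ge|xe|et)-(\d+)/(\d+)/(\d+)$")


def parse_interface(name):
    """Split a vMX interface name into (type description, fpc, pic, port)."""
    m = NAME_RE.match(name)
    if m is None:
        raise ValueError(f"unsupported interface name: {name}")
    prefix, fpc, pic, port = m.groups()
    return INTERFACE_TYPES[prefix], int(fpc), int(pic), int(port)


# Hypothetical binding: the name implies Gigabit Ethernet, the NIC is 10-Gigabit.
binding = {"ge-0/0/0": {"nic_speed_gbps": 10}}
kind, fpc, pic, port = parse_interface("ge-0/0/0")
print(kind, fpc, pic, port, binding["ge-0/0/0"]["nic_speed_gbps"])
# Gigabit Ethernet 0 0 0 10
```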

The VCP VM and VFP VM require Layer 2 connectivity to communicate with each other. An internal bridge that is local to the server for each vMX instance enables this communication.

The VCP VM and VFP VM also require Layer 2 connectivity to communicate with the Ethernet management port on the server. You must specify virtual Ethernet interfaces with unique IP addresses and MAC addresses for both the VFP and VCP to set up an external bridge for a vMX instance. Ethernet management traffic for all vMX instances enters the server through the Ethernet management port.
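Because management traffic for all vMX instances shares the server's Ethernet management port, a pre-deployment sanity check on the VCP and VFP management addresses can catch collisions early. This is a minimal sketch, assuming addresses must be unique across every instance on the server; the `check_management_addresses` helper and its input layout are hypothetical, and the addresses use documentation ranges.

```python
import ipaddress
import re

MAC_RE = re.compile(r"^([0-9a-f]{2}:){5}[0-9a-f]{2}$")


def check_management_addresses(instances):
    """Raise ValueError if any management IP or MAC address is malformed or reused.

    instances: {instance_name: {"vcp": (ip, mac), "vfp": (ip, mac)}}
    """
    seen_ips, seen_macs = {}, {}
    for inst, vms in instances.items():
        for vm, (ip, mac) in vms.items():
            owner = f"{inst}/{vm}"
            ip_obj = ipaddress.ip_address(ip)  # raises ValueError on bad syntax
            mac_norm = mac.lower()
            if not MAC_RE.match(mac_norm):
                raise ValueError(f"malformed MAC {mac} on {owner}")
            if ip_obj in seen_ips:
                raise ValueError(f"IP {ip} used by {seen_ips[ip_obj]} and {owner}")
            if mac_norm in seen_macs:
                raise ValueError(f"MAC {mac} used by {seen_macs[mac_norm]} and {owner}")
            seen_ips[ip_obj] = owner
            seen_macs[mac_norm] = owner


instances = {
    "vmx1": {"vcp": ("192.0.2.10", "02:00:00:00:00:01"),
             "vfp": ("192.0.2.11", "02:00:00:00:00:02")},
    "vmx2": {"vcp": ("192.0.2.20", "02:00:00:00:00:03"),
             "vfp": ("192.0.2.21", "02:00:00:00:00:04")},
}
check_management_addresses(instances)  # passes: every address is unique
```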

The way network traffic passes from the physical NIC to the virtual NIC depends on the virtualization technique that you configure.

vMX can be configured to run in two modes depending on the use case:

Lite mode—Needs fewer resources in terms of CPU and memory to run at lower bandwidth.

Performance mode—Needs higher resources in terms of CPU and memory to run at higher bandwidth.

Note: Performance mode is the default mode.

Traffic Flow in a vMX Router

The x86 server architecture consists of multiple sockets and multiple cores within a socket. Each socket also has memory that is used to store packets during I/O transfers from the NIC to the host. To efficiently read packets from memory, guest applications and associated peripherals (such as the NIC) should reside within a single socket. A penalty is associated with spanning CPU sockets for memory accesses, which might result in non-deterministic performance.
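The single-socket recommendation can be expressed as a simple locality check: every core reserved for the VFP should sit on the same socket as the NIC it serves. The sketch below uses a hypothetical two-socket topology passed in as plain data; on many Linux hosts similar data can be read from sysfs (for example `physical_package_id` under each CPU's `topology` directory), but that collection step is left out here.

```python
def cross_socket_cores(vfp_cores, core_socket, nic_socket):
    """Return the VFP cores that sit on a different socket than the NIC, sorted."""
    return sorted(c for c in vfp_cores if core_socket[c] != nic_socket)


# Hypothetical two-socket host: cores 0-7 on socket 0, cores 8-15 on socket 1.
core_socket = {c: 0 if c < 8 else 1 for c in range(16)}
nic_socket = 0  # the NIC is attached to socket 0 in this example

print(cross_socket_cores([2, 3, 4, 9], core_socket, nic_socket))  # [9]
```

Any core reported here would pay the cross-socket memory penalty described above.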

The VFP consists of the following functional components:

Receive thread (RX): RX moves packets from the NIC to the VFP. It performs preclassification to ensure host-bound packets receive priority.

Worker thread: The Worker performs lookup and tasks associated with packet manipulation and processing. It is the equivalent of the lookup ASIC on the physical MX Series router.

Transmit thread (TX): TX moves packets from the Worker to the physical NIC.
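The three stages can be sketched as a toy pipeline in which RX preclassifies host-bound packets ahead of transit traffic, the Worker performs a lookup, and TX groups forwarded packets per egress interface. This is an illustration only, not the VFP implementation: the exact-match `fib` dictionary stands in for a real longest-prefix-match lookup, and the packet fields are hypothetical.

```python
def rx(nic_packets):
    """RX: pull packets off the NIC; preclassify so host-bound packets go first."""
    host_bound = [p for p in nic_packets if p["host_bound"]]
    transit = [p for p in nic_packets if not p["host_bound"]]
    return host_bound + transit


def worker(packets, fib):
    """Worker: look up each packet and send it toward the VCP or an egress port."""
    to_host, to_nic = [], []
    for p in packets:
        if p["host_bound"]:
            to_host.append(p["id"])  # handed to the VCP, not to TX
        elif p["dst"] in fib:
            to_nic.append((fib[p["dst"]], p["id"]))
        # packets with no matching entry are dropped in this sketch
    return to_host, to_nic


def tx(to_nic):
    """TX: group forwarded packets by egress interface, in arrival order."""
    per_port = {}
    for port, pid in to_nic:
        per_port.setdefault(port, []).append(pid)
    return per_port


packets = [
    {"id": 1, "dst": "10.0.0.1", "host_bound": False},
    {"id": 2, "dst": "192.0.2.1", "host_bound": True},
    {"id": 3, "dst": "10.0.1.1", "host_bound": False},
    {"id": 4, "dst": "203.0.113.9", "host_bound": False},
]
fib = {"10.0.0.1": "ge-0/0/0", "10.0.1.1": "ge-0/0/1"}

to_host, to_nic = worker(rx(packets), fib)
print(to_host, tx(to_nic))  # [2] {'ge-0/0/0': [1], 'ge-0/0/1': [3]}
```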

The RX and TX components are assigned to the same core (I/O core). If there are enough cores available for the VFP, the QoS scheduler can be allocated separate cores. If there are not enough cores available, the QoS scheduler shares the TX core.
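The placement rule above (RX and TX share one I/O core, and the QoS scheduler gets its own core only when one is left over) can be written as a small planning function. This is a hedged sketch: `plan_vfp_cores` and its inputs are hypothetical, and it assumes a single I/O core plus one dedicated core per Worker, as described later in this topic.

```python
def plan_vfp_cores(cores, num_workers):
    """Return {role: core_id} for a VFP with one shared RX/TX I/O core.

    cores: core IDs reserved for the VFP (hypothetical input).
    """
    if len(cores) < 1 + num_workers:
        raise ValueError("need one I/O core plus one core per Worker")
    io_core = cores[0]
    plan = {"rx": io_core, "tx": io_core}  # RX and TX share the I/O core
    for i in range(num_workers):
        plan[f"worker{i}"] = cores[1 + i]  # each Worker gets a dedicated core
    spare = cores[1 + num_workers:]
    # QoS scheduler: its own core if one is spare, otherwise it shares the TX core.
    plan["qos"] = spare[0] if spare else io_core
    return plan


print(plan_vfp_cores([4, 5, 6], 2))     # QoS shares core 4 with TX
print(plan_vfp_cores([4, 5, 6, 7], 2))  # QoS gets core 7
```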

TX has a QoS scheduler that can prioritize packets across several queues before they are sent to the NIC (supported in Junos OS Release 16.2).
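To illustrate what "prioritizing packets across several queues" means, the sketch below uses a plain strict-priority scheduler: it always serves the highest-priority non-empty queue. Strict priority is a stand-in chosen for clarity; the actual vMX QoS scheduler and its queue model are not described here, and the class name is hypothetical.

```python
from collections import deque


class StrictPriorityScheduler:
    """Serve the highest-priority non-empty queue first (queue 0 = highest)."""

    def __init__(self, num_queues):
        self.queues = [deque() for _ in range(num_queues)]

    def enqueue(self, packet, queue):
        self.queues[queue].append(packet)

    def dequeue(self):
        for q in self.queues:
            if q:
                return q.popleft()
        return None  # all queues are empty


sched = StrictPriorityScheduler(3)
sched.enqueue("bulk-1", 2)
sched.enqueue("voice-1", 0)
sched.enqueue("bulk-2", 2)
sched.enqueue("video-1", 1)

order = []
while (pkt := sched.dequeue()) is not None:
    order.append(pkt)
print(order)  # ['voice-1', 'video-1', 'bulk-1', 'bulk-2']
```

A pure strict-priority design can starve low-priority queues under sustained load, which is why real schedulers usually add rate limits or weighted service.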

The RX and TX components can be dedicated to a single core for each 1G or 10G port for the most efficient packet processing. High-bandwidth applications must use SR-IOV. The Worker component utilizes a scale-out distributed architecture that enables multiple Workers to process packets based on packets-per-second processing needs. Each Worker requires a dedicated core (supported in Junos OS Release 16.2).
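The scale-out rule lends itself to a back-of-the-envelope core budget: one I/O core per port when RX and TX are dedicated per port, plus one dedicated core per Worker, with the Worker count driven by the required packet rate. In this sketch, `pps_per_worker` is a hypothetical capacity figure you would have to measure for your own hardware; it is not a Juniper-published number.

```python
import math


def vfp_core_budget(num_ports, required_pps, pps_per_worker, dedicated_io_per_port=True):
    """Estimate VFP cores: I/O cores for RX/TX plus one dedicated core per Worker."""
    workers = max(1, math.ceil(required_pps / pps_per_worker))
    io_cores = num_ports if dedicated_io_per_port else 1
    return {"io": io_cores, "workers": workers, "total": io_cores + workers}


# Two ports, 5 Mpps required, and an assumed 2 Mpps per Worker -> 3 Workers.
print(vfp_core_budget(2, 5_000_000, 2_000_000))
# {'io': 2, 'workers': 3, 'total': 5}
```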