Solution Architecture

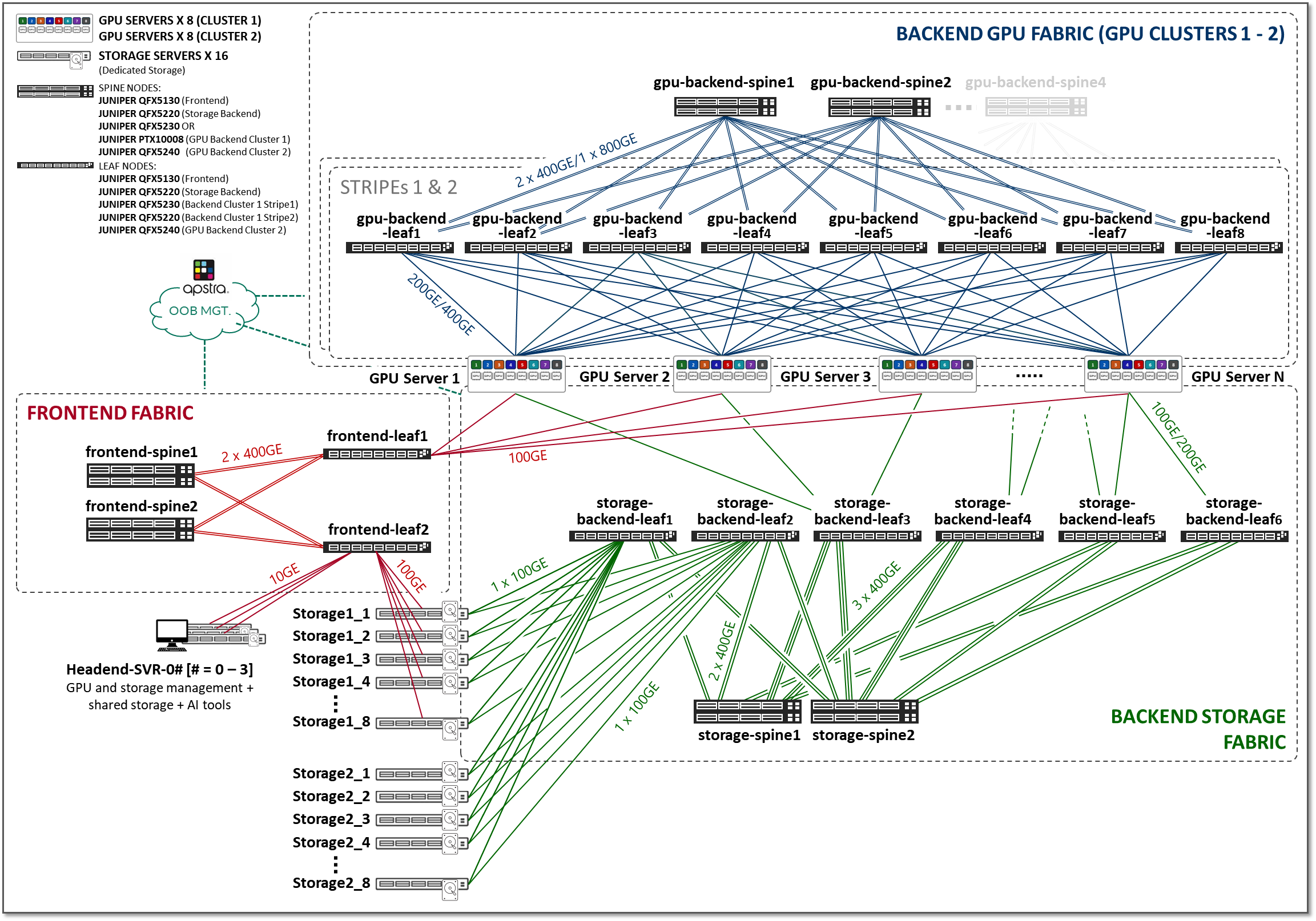

The three fabrics described in the previous section (Frontend, GPU Backend, and Storage Backend) are interconnected in the overall AI JVD solution architecture, as shown in Figure 2.

Figure 2: AI JVD Solution Architecture

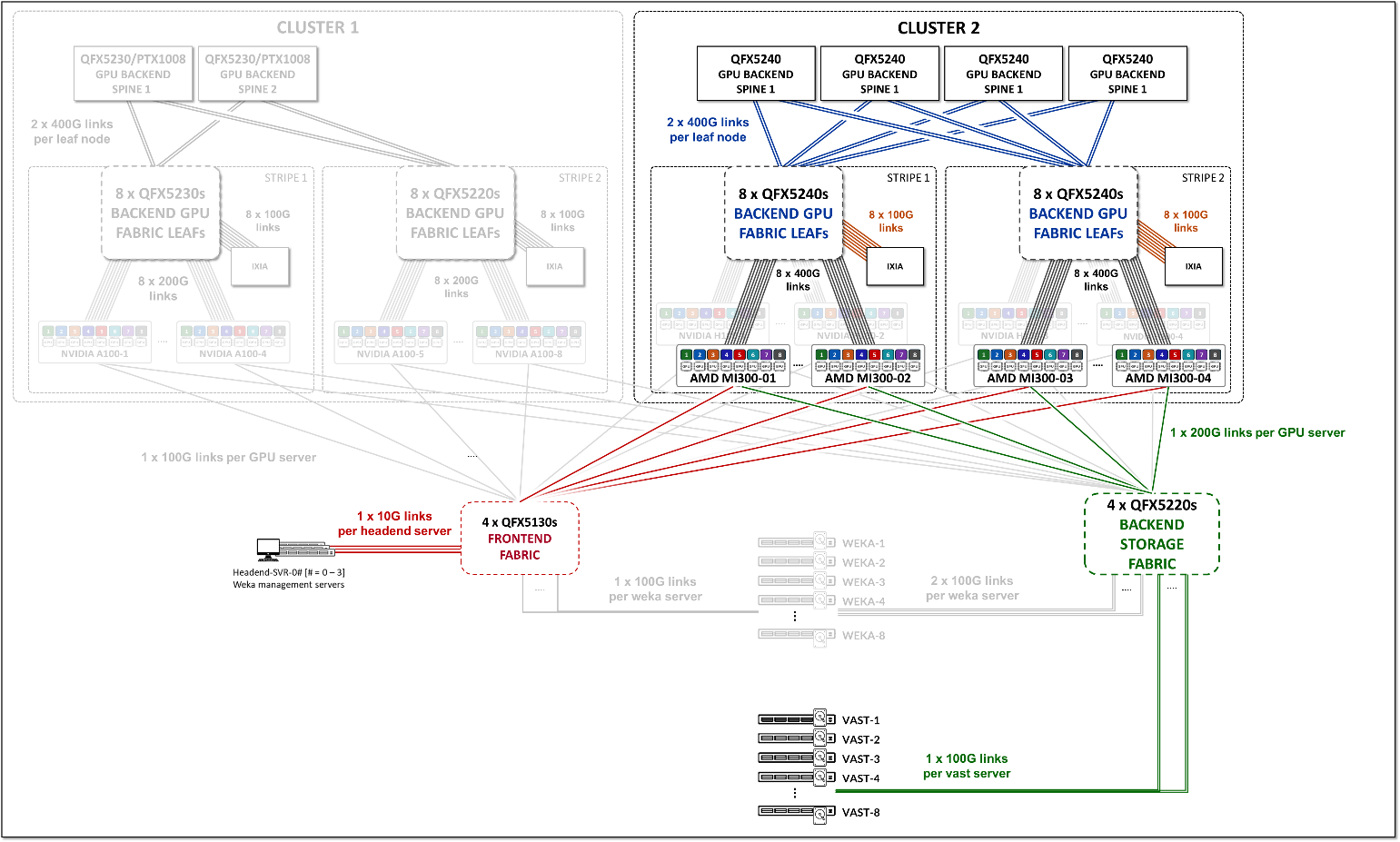

We have built two different clusters, as shown in Figure 3, which share the Frontend fabric and Storage Backend fabric but have separate GPU Backend fabrics. Each cluster is made of two stripes following the Rail Optimized Stripe Architecture, but the clusters use different switch models as leaf and spine nodes, as well as different GPU server models.

Figure 3: AI JVD Lab Clusters

The GPU Backend in Cluster 1 consists of Juniper QFX5220 and QFX5230 switches as leaf nodes and either QFX5230 switches or PTX10008 routers acting as spine nodes. The QFX5230 and PTX10008 have been validated as spine nodes separately, while keeping the leaf nodes the same. Apstra blueprints are used to switch between the setup with QFX5230 switches acting as spine nodes and the one with PTX10008 routers acting as spine nodes.

The GPU Backend in Cluster 2 consists of Juniper QFX5240 switches acting as both leaf nodes and spine nodes and includes AMD MI300X GPU servers and Nvidia H100 GPU servers.

Details about Cluster 1, and the Nvidia GPU servers in Cluster 2 are included in the AI Data Center Network with Juniper Apstra, NVIDIA GPUs, and WEKA Storage—Juniper Validated Design (JVD).

The rest of this document focuses on the AMD MI300X GPU servers and VAST storage, and includes server and storage configurations specific to these systems.

Note that the type and number of switches acting as leaf and spine nodes, as well as the number and speed of the links between them, are determined by the type of fabric (Frontend, GPU Backend, or Storage Backend), because each fabric has different requirements. More details are included in the respective fabric description sections.

In the case of the GPU Backend fabric, the number of GPU servers, as well as the number of GPUs per server, are also factors determining the number and switch type of the leaf and spine nodes.

Frontend Fabric

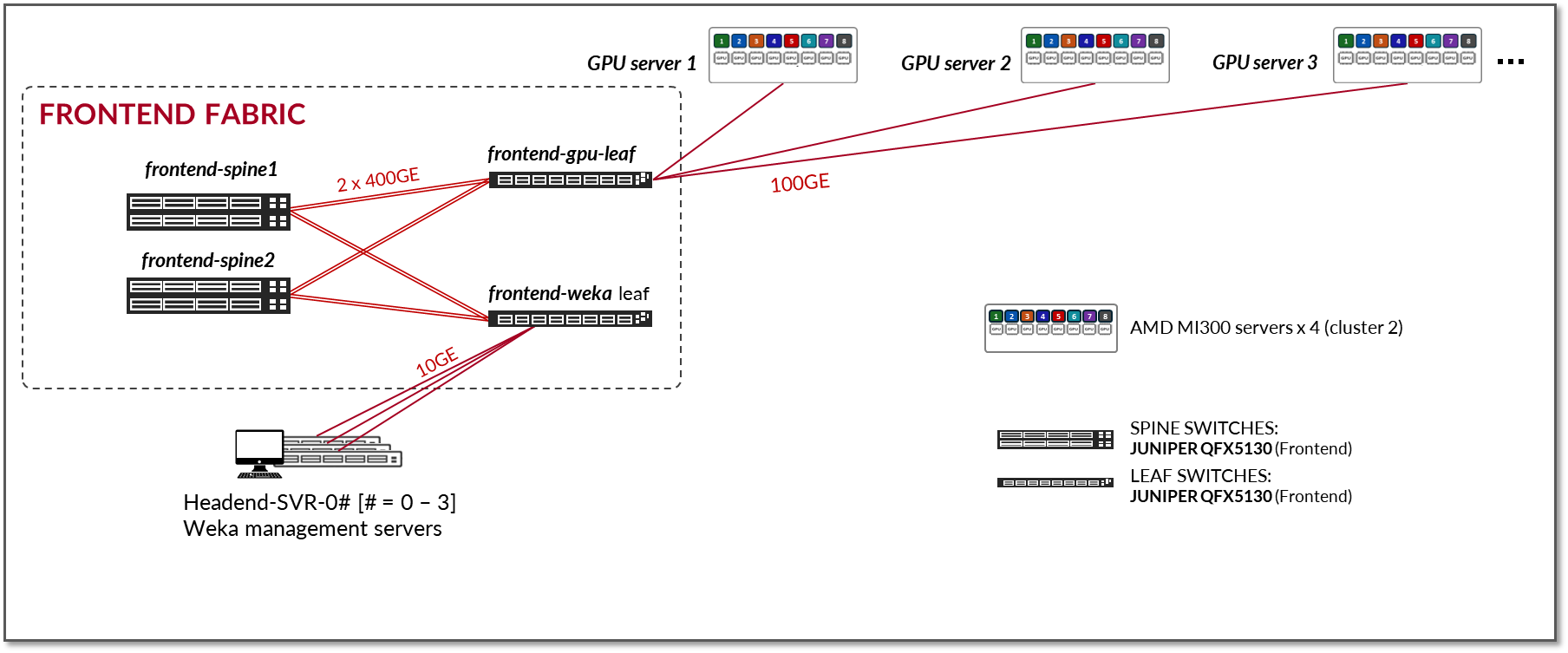

The Frontend Fabric provides the infrastructure for users to interact with the AI systems and orchestrate training and inference workflows using tools such as SLURM. These interactions do not generate heavy data flows or have rigorous requirements regarding latency or packet drops; thus, they do not impose rigorous demands on the fabric.

The Frontend Fabric design described in this JVD follows a traditional 3-stage IP Fabric architecture without HA, as shown in Figure 4. This architecture provides a simple and effective solution for the connectivity required in the Frontend. However, any fabric architecture, including EVPN/VXLAN, could be used. If an HA-capable Frontend Fabric is required, we recommend following the 3-Stage with Juniper Apstra JVD. An EVPN/VXLAN JVD specifically for AI will be developed in the future.

Figure 4: Frontend Fabric Architecture

The devices included in the Frontend fabric, and the connections between them, are summarized in the following tables:

Table 1: Frontend devices

| AMD GPU Servers | Headend Servers | Frontend Leaf Nodes switch model | Frontend Spine Nodes switch model |
|---|---|---|---|
| MI300X x 4 (MI300X-01 to MI300X-04) | Headend-SVR x 3 (Headend-SVR-01 to Headend-SVR-03) | QFX5130-32CD x 2 (frontend-leaf#; #=1-2) | QFX5130-32CD x 2 (frontend-spine#; #=1-2) |

Table 2: Connections between servers, leaf and spine nodes per cluster and stripe in the Frontend

| GPU Servers <=> Frontend Leaf Nodes | Headend Servers <=> Frontend Leaf Nodes | Frontend Leaf Nodes <=> Frontend Spine Nodes |
|---|---|---|
| 1 x 100GE link per GPU server to leaf connection. Total number of 100GE links between GPU servers and frontend leaf nodes = 4 (4 servers x 1 link per server) | 1 x 10GE link per headend server to leaf connection. Total number of 10GE links between headend servers and frontend leaf nodes = 3 (3 servers x 1 link per server) | 2 x 400GE links per leaf node to spine node connection. Total number of 400GE links between frontend leaf nodes and spine nodes = 8 (2 leaf nodes x 2 spine nodes x 2 links per leaf to spine connection) |
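The link totals in Table 2 all follow the same multiplication, which can be sketched as below. The helper name `total_links` and its parameter names are illustrative and are not part of the JVD.

```python
def total_links(endpoints: int, peers_per_endpoint: int, links_per_connection: int) -> int:
    """Total links = endpoints x peers each endpoint connects to x links per connection."""
    return endpoints * peers_per_endpoint * links_per_connection


# Values taken from Table 2 (Frontend fabric).
gpu_server_links = total_links(endpoints=4, peers_per_endpoint=1, links_per_connection=1)  # 100GE
headend_links = total_links(endpoints=3, peers_per_endpoint=1, links_per_connection=1)     # 10GE
leaf_spine_links = total_links(endpoints=2, peers_per_endpoint=2, links_per_connection=2)  # 400GE

print(gpu_server_links, headend_links, leaf_spine_links)  # 4 3 8
```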

This fabric is an L3 IP fabric using EBGP for route advertisement. The IP addressing and EBGP configuration details are described in the networking section of this document.

GPU Backend Fabric

The GPU Backend fabric provides the infrastructure for GPUs to communicate with each other within a cluster, using RDMA over Converged Ethernet (RoCEv2). RoCEv2 enhances data center efficiency, reduces complexity, and optimizes data delivery across high-speed Ethernet networks.

Packet loss can significantly impact job completion times and should therefore be avoided. When designing the compute network infrastructure to support RoCEv2 for an AI cluster, one of the key objectives is to provide a near-lossless fabric, while also achieving maximum throughput, minimal latency, and minimal network interference for the AI traffic flows. RoCEv2 is more efficient over lossless networks, resulting in optimum job completion times.

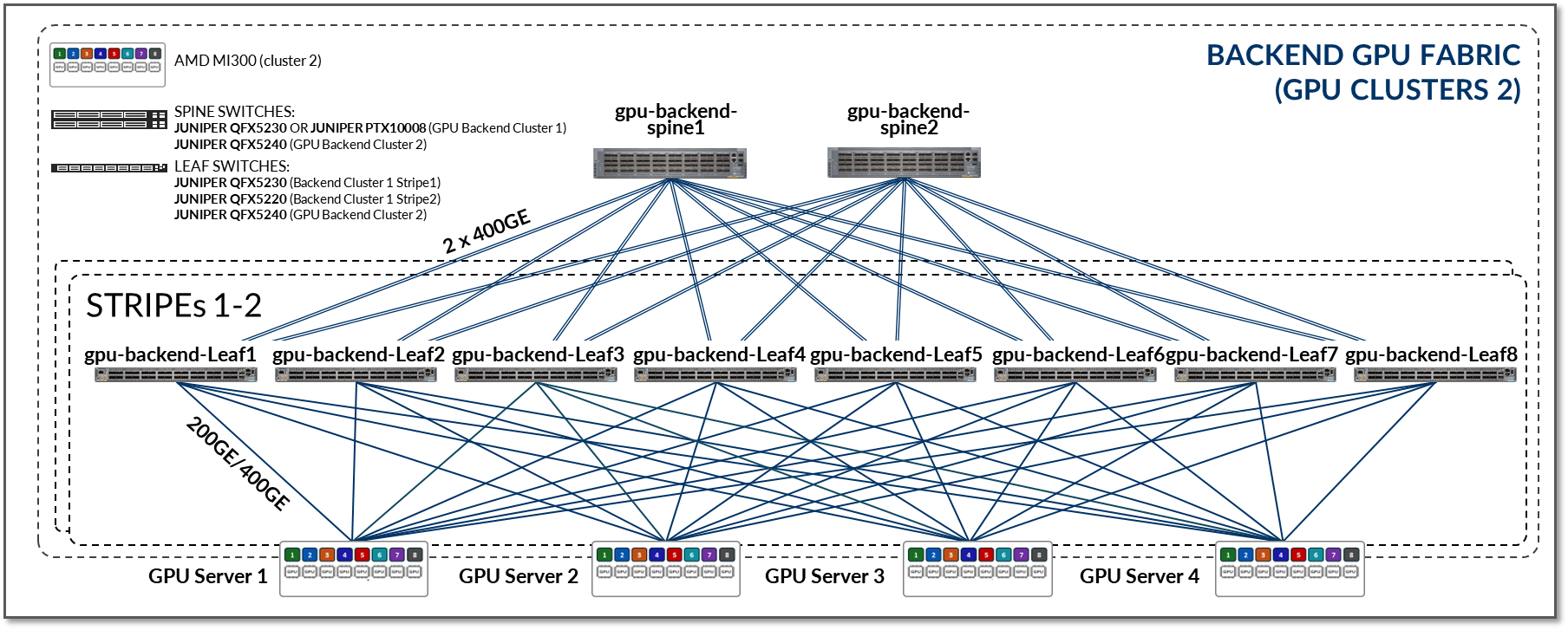

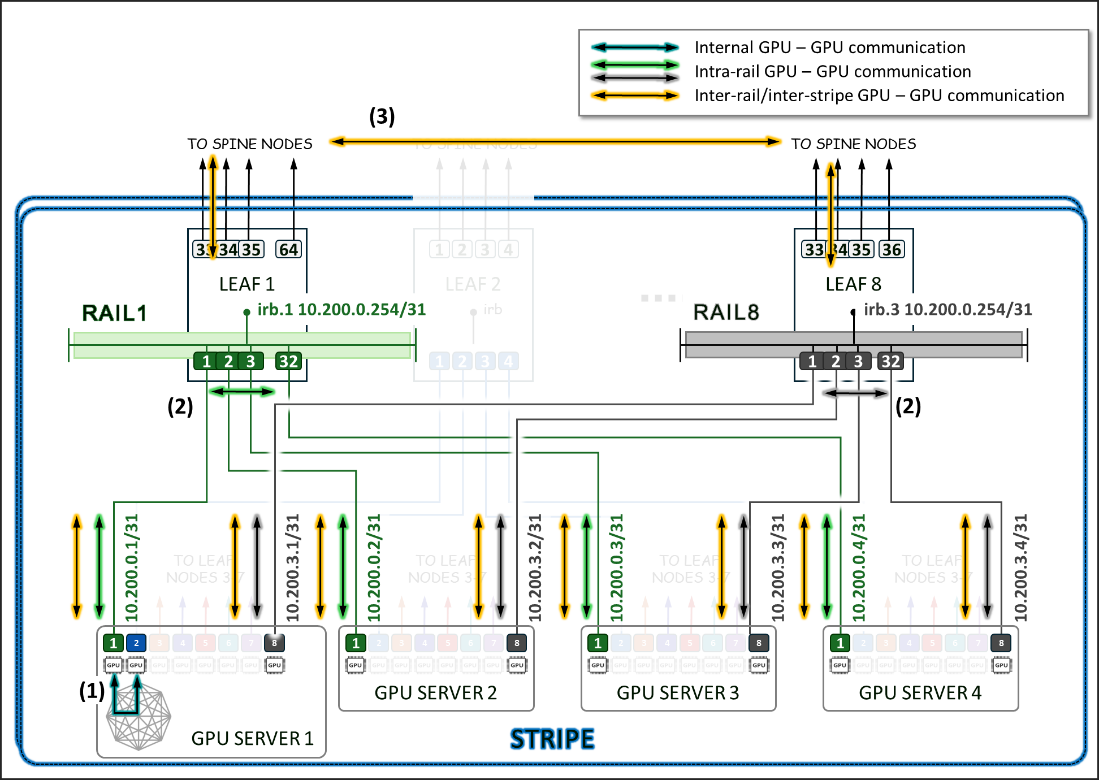

The GPU Backend fabric in this JVD was designed with these goals in mind. The design follows a 3-stage IP Clos and Rail Optimized Stripe architecture, as shown in Figure 5.

Figure 5: GPU Backend Fabric Architecture

The devices that are part of the GPU Backend fabric, and the connections between them, are summarized in the following tables:

Table 3: GPU Backend devices per cluster and stripe

| Stripe | GPU Servers | GPU Backend Leaf nodes switch model | GPU Backend Spine nodes switch model |
|---|---|---|---|
| 1 | MI300X x 2 (MI300X-01 & MI300X-02) | QFX5240-64OD x 8 (gpu-backend-001_leaf#; #=1-8) | QFX5240-64OD x 4 (gpu-backend-spine#; #=1-4) |
| 2 | MI300X x 2 (MI300X-03 & MI300X-04) | QFX5240-64OD x 8 (gpu-backend-002_leaf#; #=1-8) | (shared with stripe 1) |

Table 4: GPU Backend connections between servers, leaf nodes and spine nodes.

| Stripe | GPU Servers <=> GPU Backend Leaf Nodes | GPU Backend Leaf Nodes <=> GPU Backend Spine Nodes |
|---|---|---|
| 1 | 8 x 400GE links per MI300X server to leaf connections (one per GPU). Total number of 400GE links between servers and leaf nodes = 16 (2 servers x 8 links per server) | 2 x 400GE links per leaf node to spine node connection. Total number of 400GE links between GPU backend leaf nodes and spine nodes = 64 (8 leaf nodes x 4 spine nodes x 2 links per leaf to spine connection) |
| 2 | 8 x 400GE links per MI300X server to leaf connections (one per GPU). Total number of 400GE links between servers and leaf nodes = 16 (2 servers x 8 links per server) | 2 x 400GE links per leaf node to spine node connection. Total number of 400GE links between GPU backend leaf nodes and spine nodes = 64 (8 leaf nodes x 4 spine nodes x 2 links per leaf to spine connection) |

- All the AMD MI300X GPU servers are connected to the GPU backend fabric using 400GE interfaces.

- This fabric is an L3 IP fabric that uses EBGP for route advertisement (described in the networking section).

- Connectivity between the servers and the leaf nodes is L2, untagged and VLAN-based, with IRB interfaces on each leaf node acting as the default gateway for the servers (described in the networking section).

The speed and number of links between the GPU servers and leaf nodes, and between the leaf and spine nodes, determine the oversubscription factor. As an example, consider the number of GPU servers available in the lab, and how they are connected to the GPU backend fabric as described above.

The bandwidth between the servers and the leaf nodes is 12.8 Tbps (Table 5), while the bandwidth available between the leaf and spine nodes is 25.6 Tbps (Table 6). This means that the fabric has enough capacity to process all traffic between the GPUs even when this traffic is 100% inter-stripe, and has extra capacity to accommodate 4 more servers. With 4 additional servers, the subscription factor would be 1:1 (no oversubscription).

Table 5: Per stripe Server to Leaf Bandwidth

| Stripe | Number of servers per stripe | Number of 400 Gbps server <=> leaf links per server (same as number of leaf nodes and number of GPUs per server) | Server <=> Leaf Link Bandwidth [Gbps] | Total Servers <=> Leaf Links Bandwidth per stripe [Tbps] |
|---|---|---|---|---|
| 1 | 2 | 8 | 400 Gbps | 2 x 8 x 400 Gbps = 6.4 Tbps |
| 2 | 2 | 8 | 400 Gbps | 2 x 8 x 400 Gbps = 6.4 Tbps |
| Total Server <=> Leaf Bandwidth | | | | 12.8 Tbps |

Table 6: Per stripe Leaf to Spine Bandwidth

| Stripe | Number of leaf nodes | Number of spine nodes | Number of 400 Gbps leaf <=> spine links per leaf node | Leaf <=> Spine Link Bandwidth [Gbps] | Bandwidth Leaf <=> Spine per stripe [Tbps] |
|---|---|---|---|---|---|
| 1 | 8 | 4 | 2 | 400 | 8 x 2 x 2 x 400 Gbps = 12.8 Tbps |
| 2 | 8 | 4 | 2 | 400 | 8 x 2 x 2 x 400 Gbps = 12.8 Tbps |
| Total Leaf <=> Spine Bandwidth | | | | | 25.6 Tbps |
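The subscription arithmetic behind these two tables can be sketched as follows. This is a minimal illustration, not part of the validated design: it takes the 25.6 Tbps leaf-to-spine total as stated in the text, derives the server-side bandwidth from the lab's server count, and uses constant and function names chosen only for this example.

```python
LINK_GBPS = 400              # speed of each server <=> leaf link
GPUS_PER_SERVER = 8          # one 400GE link per GPU
SERVERS_PER_STRIPE = 2
STRIPES = 2
LEAF_SPINE_GBPS = 25_600     # total leaf <=> spine bandwidth stated in the text (25.6 Tbps)


def server_leaf_gbps(servers: int) -> int:
    """Aggregate server <=> leaf bandwidth for a given number of servers."""
    return servers * GPUS_PER_SERVER * LINK_GBPS


servers = SERVERS_PER_STRIPE * STRIPES
offered = server_leaf_gbps(servers)                 # 12,800 Gbps = 12.8 Tbps
ratio = offered / LEAF_SPINE_GBPS                   # below 1.0 means spare fabric capacity
extra_servers = (LEAF_SPINE_GBPS - offered) // (GPUS_PER_SERVER * LINK_GBPS)

print(f"server-side: {offered / 1000} Tbps, subscription {ratio}:1, room for {extra_servers} more servers")
```

With the four additional servers, `server_leaf_gbps(8)` equals the leaf-to-spine total, which corresponds to the 1:1 case described above.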

Optimization in rail-optimized topologies refers to how GPU communication is managed to minimize congestion and latency while maximizing throughput. A key part of this optimization strategy is keeping traffic local whenever possible. By ensuring that GPU communication remains within the same rail or stripe, or even within the server, the need to traverse spines or external links is reduced, which lowers latency, minimizes congestion, and enhances overall efficiency.

While localizing traffic is prioritized, inter-stripe communication will be necessary in larger GPU clusters. Inter-stripe communication is optimized by means of proper routing and balancing techniques over the available links to avoid bottlenecks and packet loss.

The essence of optimization lies in leveraging the topology to direct traffic along the shortest and least-congested paths, ensuring consistent performance even as the network scales. Traffic between GPUs in the same server can be forwarded locally across the internal server fabric (vendor dependent), while traffic between GPUs in different servers crosses the external GPU backend infrastructure. Communication between GPUs in different servers can be intra-rail, or inter-rail/inter-stripe.

Intra-rail traffic is switched (processed at Layer 2) on the local leaf node. Following this design, data between GPUs on different servers (but in the same stripe) is always moved on the same rail and across one single switch. This guarantees GPUs are 1 hop away from each other and will create separate independent high-bandwidth channels, which minimize contention and maximize performance. On the other hand, inter-rail/inter-stripe traffic is routed across the IRB interfaces on the leaf nodes and the spine nodes connecting the leaf nodes (processed at Layer 3).

Using the example for calculating the number of servers per stripe provided in the previous section, we can see how:

- Communication between GPU 1 and GPU 2 in server 1 happens across the server’s internal fabric (1),

- Communication between GPUs 1 in servers 1-4, and between GPUs 8 in servers 1-4, happens across Leaf 1 and Leaf 8 respectively (2), and

- Communication between GPU 1 and GPU 8 (in servers 1-4) happens across Leaf 1, the spine nodes, and Leaf 8 (3).

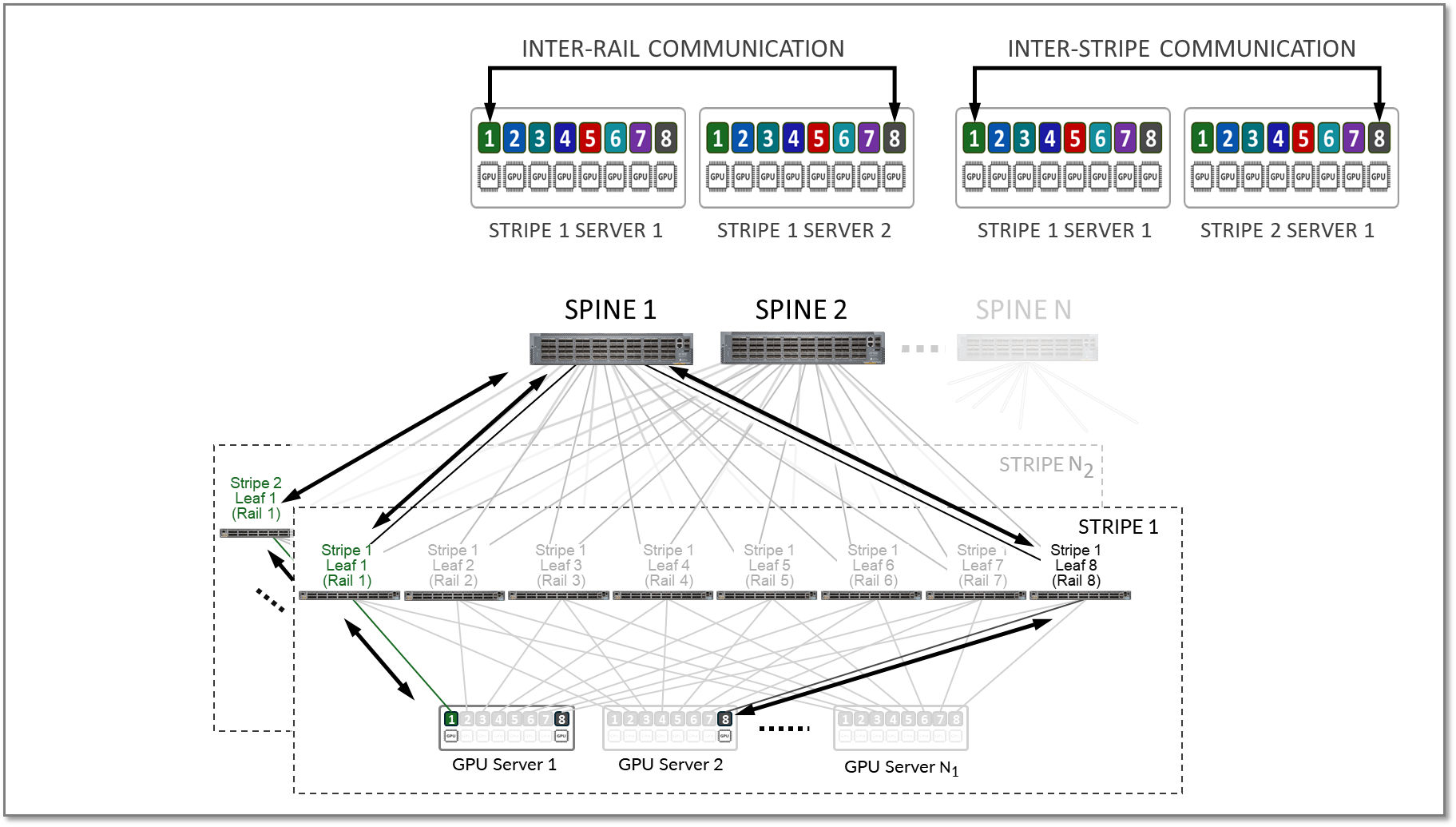

This is illustrated in Figure 12.

Figure 12: Inter-rail vs. Intra-rail GPU-GPU communication
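The three cases listed above can be expressed as a small classification function. This is an illustrative sketch only: the `Gpu` class, its field names, and the returned strings are hypothetical, and `server` is assumed to be the server's number within its stripe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    stripe: int
    server: int    # server number within the stripe
    position: int  # 1-8; also the rail (and leaf node) the GPU connects to


def gpu_path(src: Gpu, dst: Gpu) -> str:
    """Classify how traffic between two GPUs is forwarded."""
    if (src.stripe, src.server) == (dst.stripe, dst.server):
        return "internal server fabric"
    if src.stripe == dst.stripe and src.position == dst.position:
        # Same rail in the same stripe: switched on a single leaf node.
        return f"intra-rail via leaf {src.position} (Layer 2)"
    # Different rail or different stripe: routed through the spine nodes.
    return f"routed via leaf {src.position}, spine nodes, leaf {dst.position} (Layer 3)"


print(gpu_path(Gpu(1, 1, 1), Gpu(1, 1, 2)))  # case (1)
print(gpu_path(Gpu(1, 1, 1), Gpu(1, 4, 1)))  # case (2)
print(gpu_path(Gpu(1, 1, 1), Gpu(1, 2, 8)))  # case (3)
```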

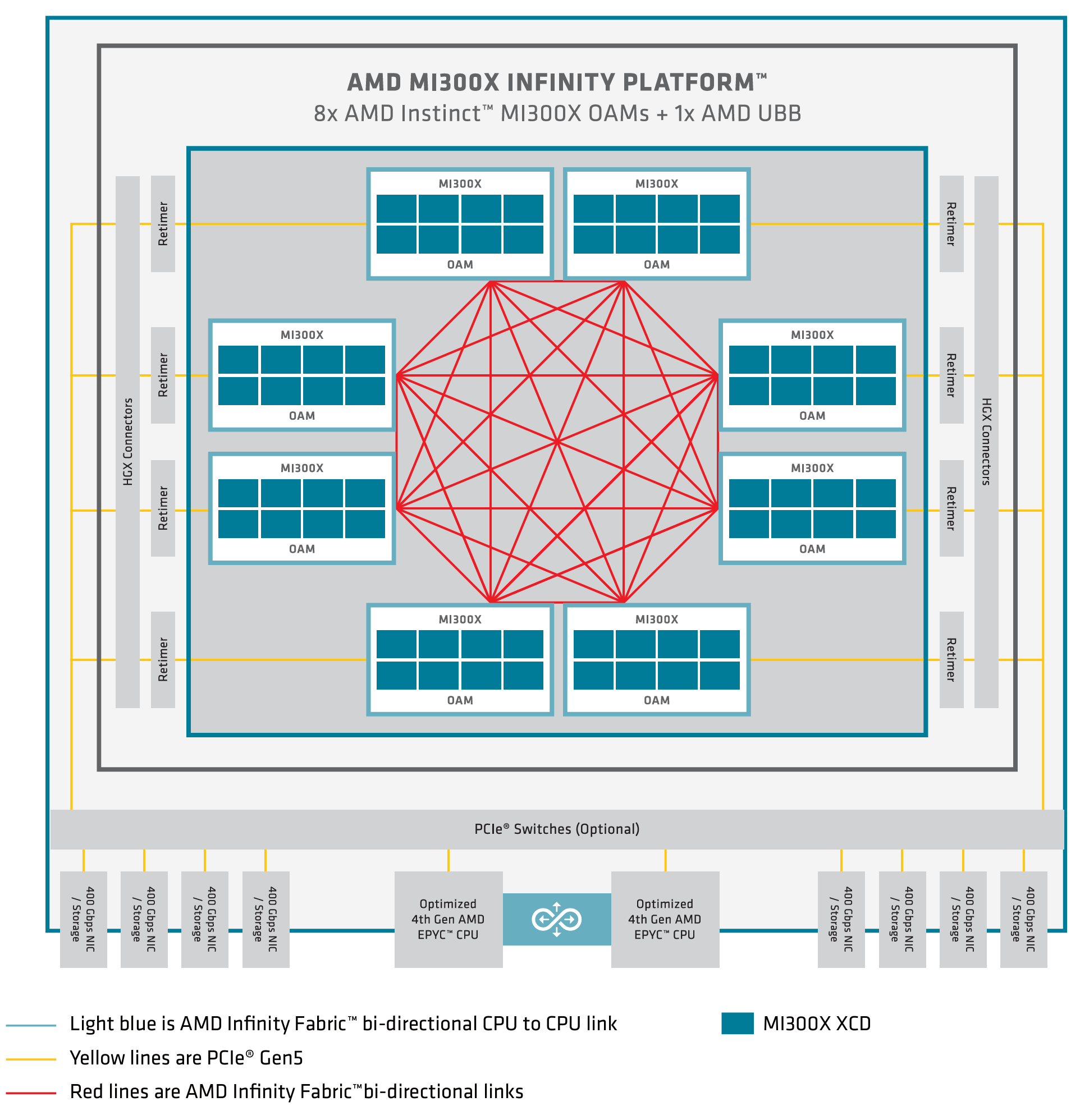

On the AMD GPU servers specifically, the GPUs are connected via the AMD Infinity fabric (which provides bidirectional 7x128GB/s per GPU). This fabric consists of seven high-bandwidth low-latency links that create an interconnected 8-GPU mesh as shown in Figure 13.

Figure 13: AMD MI300X architecture

AMD MI300X GPUs leverage Infinity Fabric to provide high-bandwidth, low-latency communication between GPUs, CPUs, and other components. This interconnect can dynamically manage traffic prioritization across links, providing an optimized path for communication within the node.
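As a quick check of the figures quoted above, the sketch below derives the per-GPU link count and aggregate bandwidth. It assumes a full mesh in which each Infinity Fabric link connects exactly one pair of GPUs; the variable names are illustrative.

```python
GPUS_PER_SERVER = 8
LINK_GBYTES_PER_S = 128      # bidirectional bandwidth per link, as quoted in the text

links_per_gpu = GPUS_PER_SERVER - 1                       # one link to every other GPU
per_gpu_bandwidth = links_per_gpu * LINK_GBYTES_PER_S     # aggregate GB/s per GPU
mesh_links = GPUS_PER_SERVER * links_per_gpu // 2         # each link is shared by two GPUs

print(links_per_gpu, per_gpu_bandwidth, mesh_links)  # 7 896 28
```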

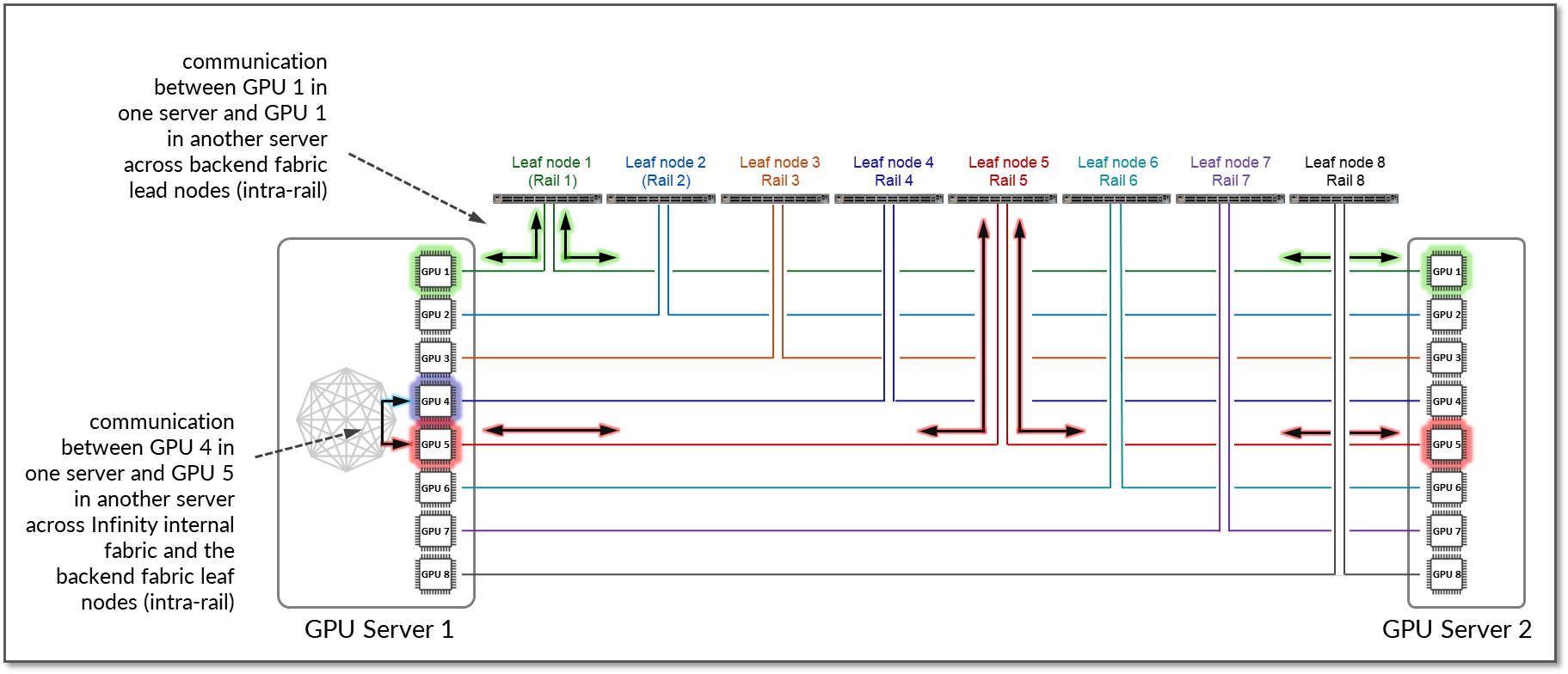

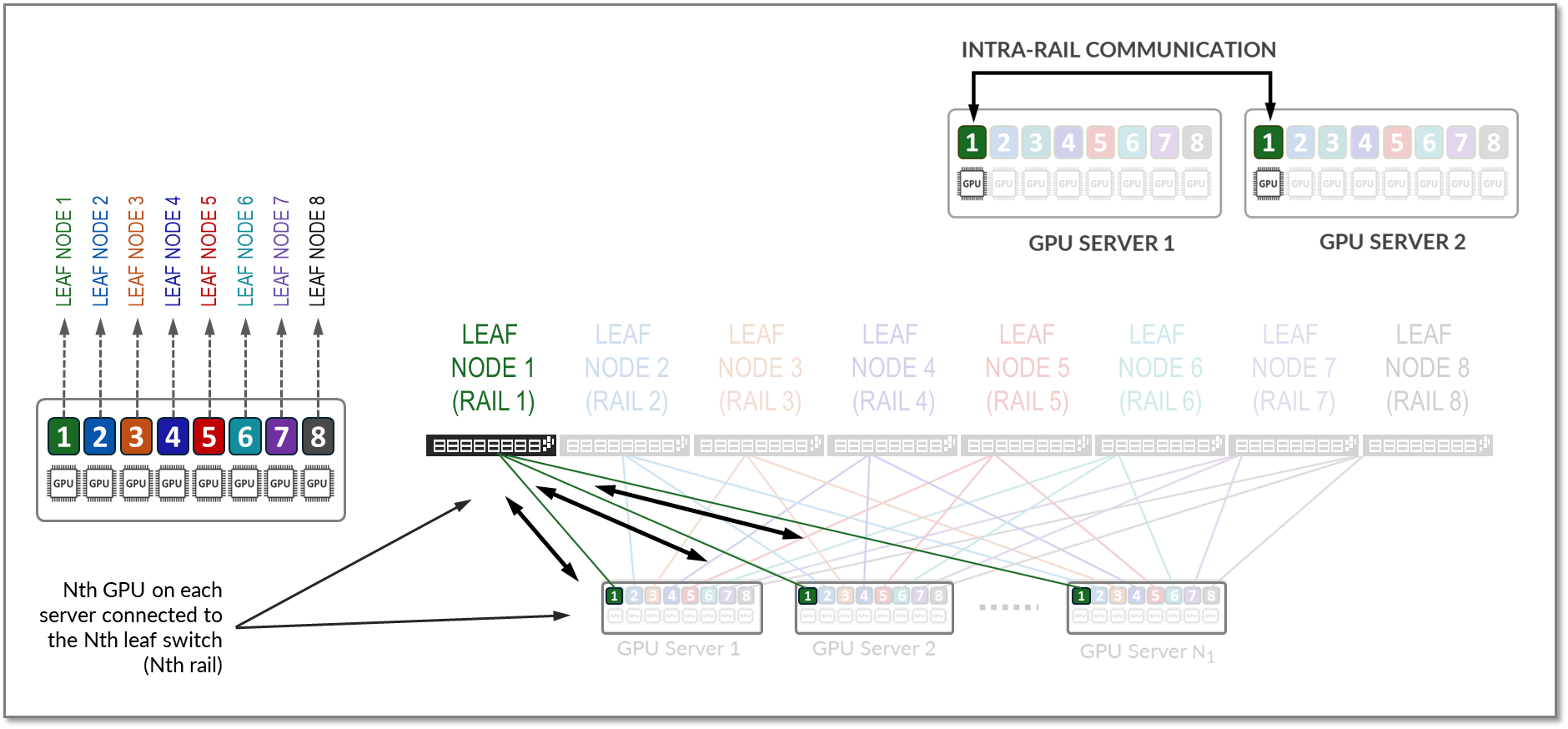

By default, AMD MI300X devices implement local optimization to minimize latency for GPU-to-GPU traffic. Traffic between GPUs of the same rank remains intra-stripe. Figure 14 shows an example where GPU1 in Server 1 communicates with GPU1 in Server 2. The traffic is forwarded by Leaf Node 1 and remains within Rail 1.

Additionally, if GPU4 in Server 1 wants to communicate with GPU5 in Server 2, and GPU5 in Server 1 is available as a local hop in AMD’s Infinity Fabric, the traffic naturally prefers this path to optimize performance and keep GPU-to-GPU communication intra-rail.

Figure 14: GPU to GPU inter-rail communication between two servers with local optimization.

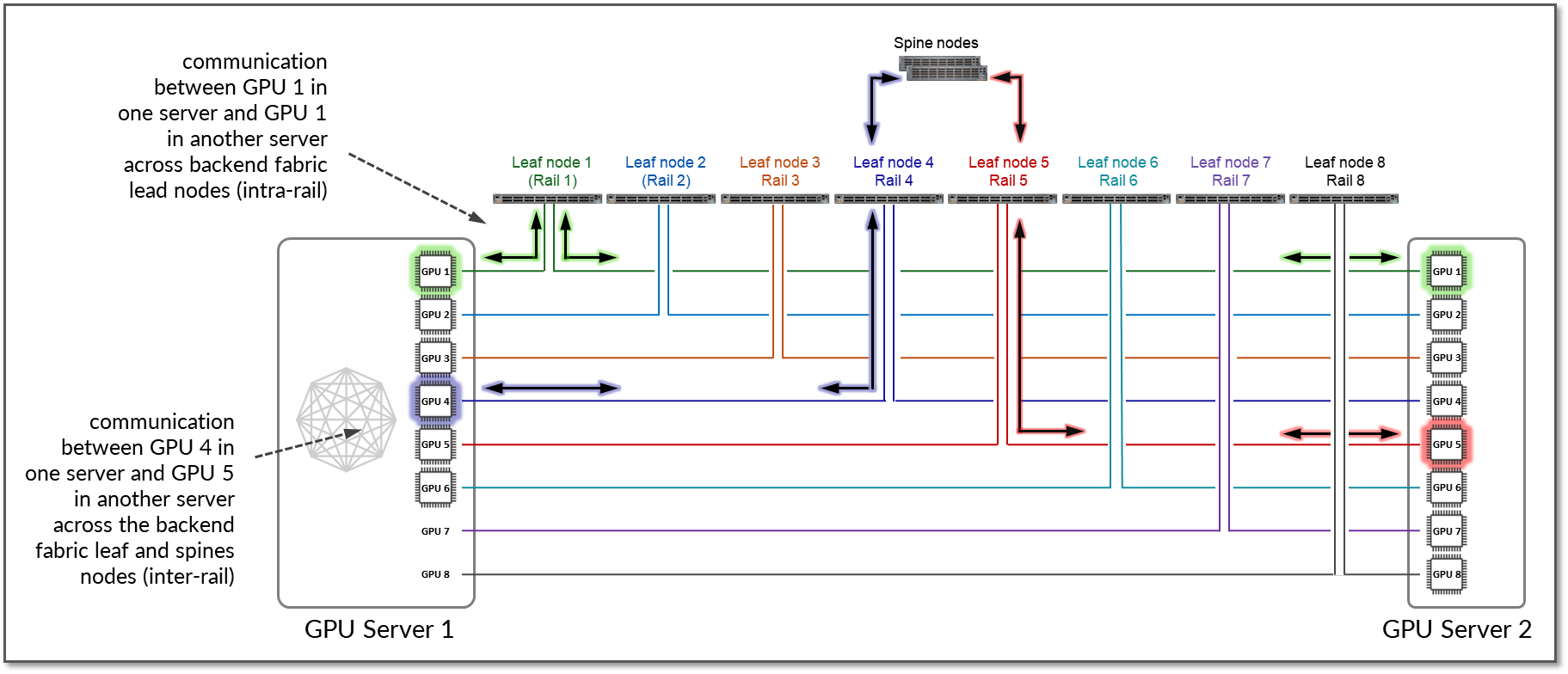

If local optimization is not feasible, for example because of workload constraints, the traffic must bypass local hops (internal fabric) and use RDMA (off-node NIC-based communication). In such a case, GPU4 in Server 1 communicates with GPU5 in Server 2 by sending data directly over the NIC using RDMA, which is then forwarded across the fabric, as shown in Figure 15.

Figure 15: GPU to GPU inter-rail communication between two servers without local optimization.

Backend GPU Rail Optimized Architecture

As previously described, a Rail Optimized Stripe Architecture provides efficient data transfer between GPUs, especially during computationally intensive tasks such as AI Large Language Model (LLM) training workloads, where seamless data transfer is necessary to complete the tasks within a reasonable timeframe. A Rail Optimized topology aims to maximize performance by providing minimal bandwidth contention, minimal latency, and minimal network interference, ensuring that data can be transmitted efficiently and reliably across the network.

In a Rail Optimized Architecture there are two important concepts: rail and stripe.

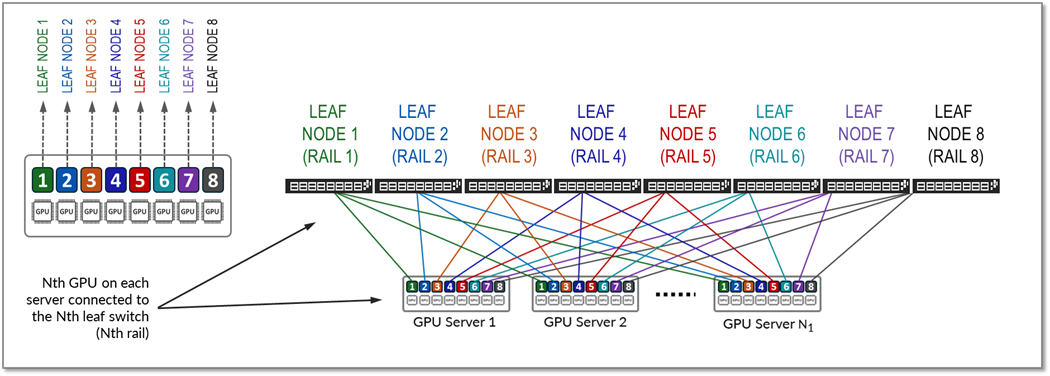

The GPUs on a server are numbered 1-8, where the number represents the GPU’s position in the server, as shown in Figure 6. This number is sometimes called rank or more specifically "local rank" in relationship to the GPUs in the server where the GPU sits, or "global rank" in relationship to all the GPUs (in multiple servers) assigned to a single job.

A rail connects GPUs of the same order across one of the leaf nodes in the fabric; that is, rail N connects all the GPUs in position N on all the servers to leaf node N, as shown in Figure 6.

Figure 6: Rails in a Rail Optimized Architecture
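The rail rule (the GPU in position N connects to leaf node N) can be sketched as a cabling map. The leaf names follow the `gpu-backend-00X_leaf#` pattern listed in Table 3; the function names are hypothetical and used only for illustration.

```python
def leaf_for_gpu(stripe: int, gpu_position: int, gpus_per_server: int = 8) -> str:
    """Return the leaf node that serves a given GPU position (rail) in a stripe."""
    if not 1 <= gpu_position <= gpus_per_server:
        raise ValueError(f"GPU position must be between 1 and {gpus_per_server}")
    return f"gpu-backend-{stripe:03d}_leaf{gpu_position}"


def server_cabling(stripe: int, gpus_per_server: int = 8) -> dict[int, str]:
    """Map every GPU position on a server to its leaf node: one link per rail."""
    return {n: leaf_for_gpu(stripe, n, gpus_per_server) for n in range(1, gpus_per_server + 1)}


for position, leaf in server_cabling(stripe=1).items():
    print(f"GPU {position} -> {leaf}")
```

Every server in the same stripe uses the same map, which is what places GPUs of the same position on a common leaf node.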

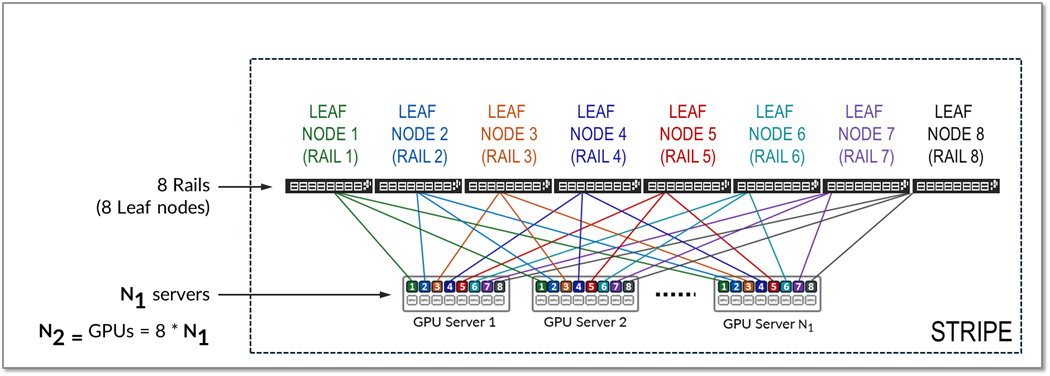

A stripe is a design module or building block that comprises multiple rails and includes a number of leaf nodes and GPU servers, as shown in Figure 7. It can be replicated to scale up the AI cluster.

Figure 7: Stripes in a Rail Optimized Architecture

All traffic between GPUs of the same rank (intra-rail traffic) is forwarded at the leaf node level as shown in Figure 11.

Figure 11: Intra-rail traffic example.

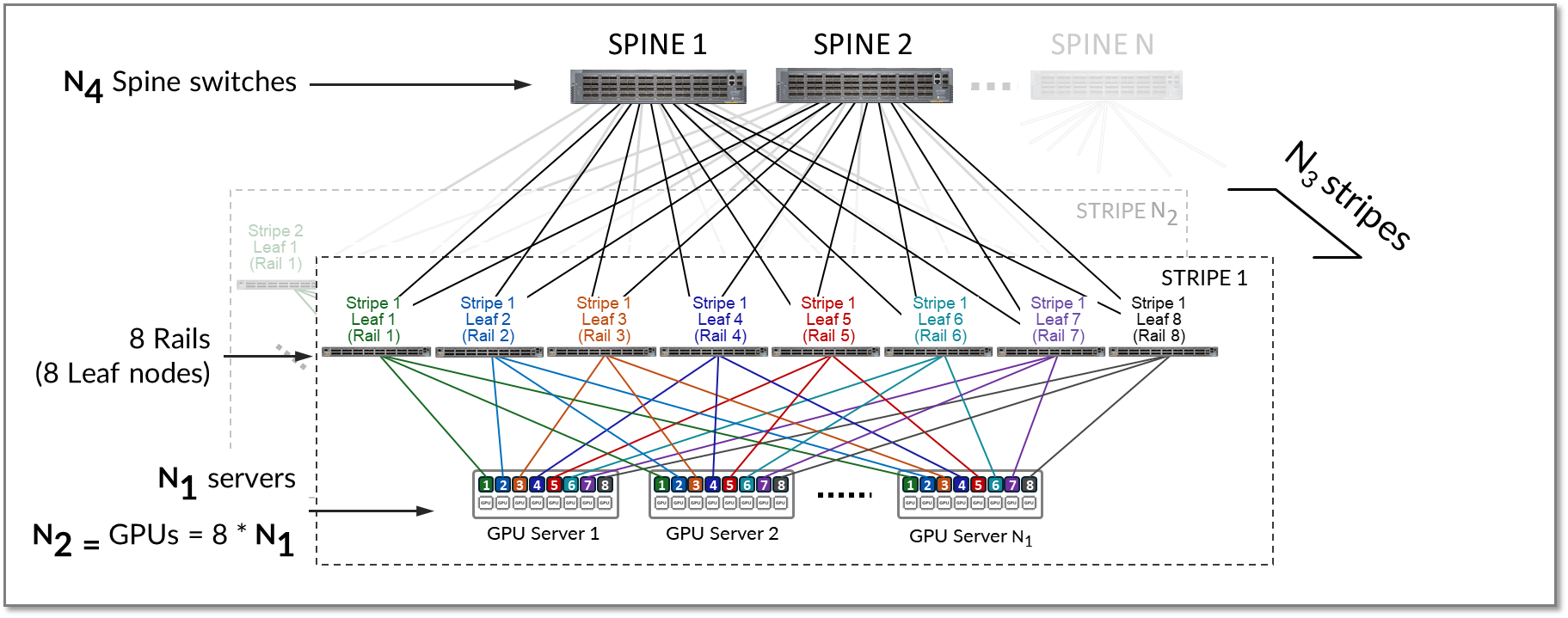

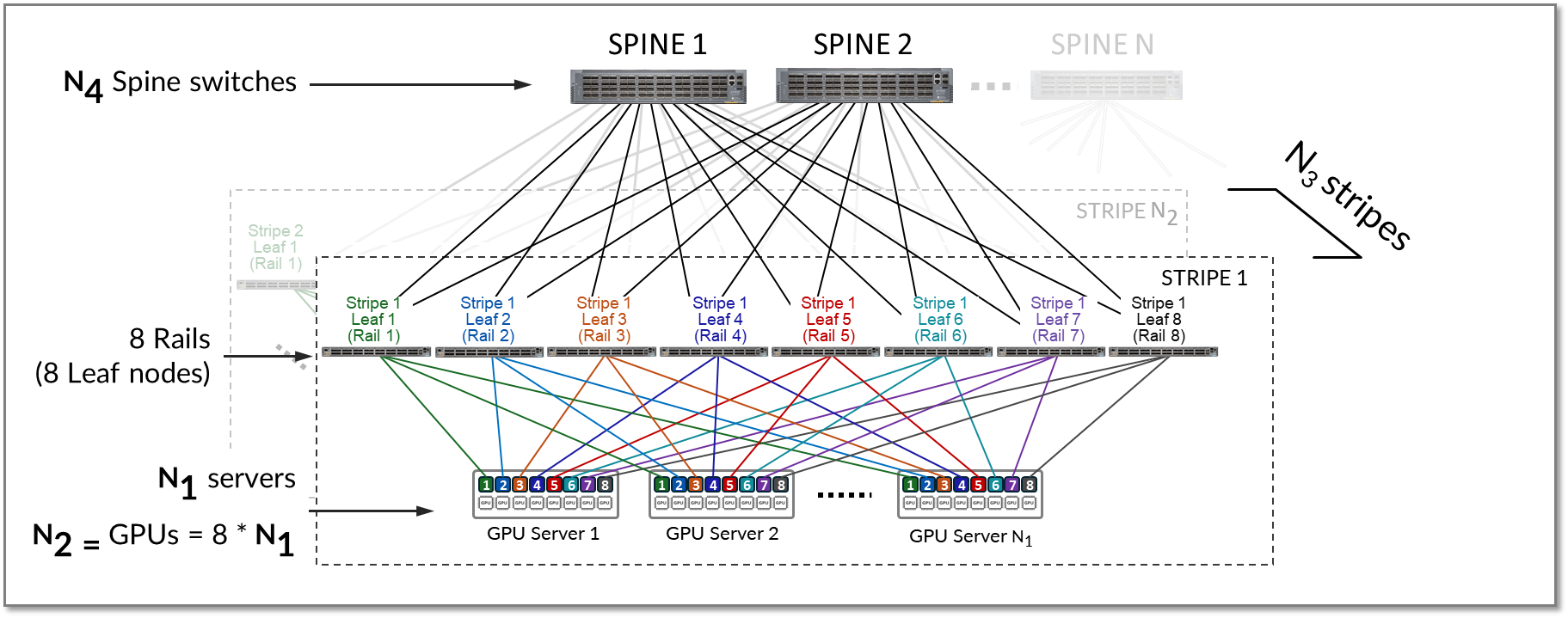

A stripe can be replicated to scale up the number of servers (N1) and GPUs (N2) in an AI cluster. Multiple stripes (N3) are then connected across Spine switches as shown in Figure 8.

Figure 8: Multiple stripes connected via Spine nodes

Both inter-rail and inter-stripe traffic is forwarded across the spine nodes, as shown in Figure 9.

Figure 9: Inter-rail and inter-stripe GPU to GPU traffic example

Calculating the Number of Leaf Nodes, Spine Nodes, Servers, and GPUs

The number of leaf nodes in a single stripe in a rail optimized architecture is defined by the number of GPUs per server (number of rails). Each AMD MI300X GPU server includes 8 AMD Instinct MI300X GPUs. Therefore, a single stripe includes 8 leaf nodes (8 rails).

Number of leaf nodes = number of GPUs per server = 8

The maximum number of servers supported in a single stripe (N1) is defined by the number of available ports on the leaf node, which depends on the switch model.

The total bandwidth between the GPU servers and leaf nodes must match the total bandwidth between leaf and spine nodes to maintain a 1:1 subscription ratio.

Assuming all the interfaces on the leaf node operate at the same speed, half of the interfaces will be used to connect to the GPU servers, and the other half to connect to the spines. Thus, the maximum number of servers in a stripe is calculated as half the number of available ports on each leaf node. Some examples are included in Table 7.

Table 7: Maximum number of GPUs supported per stripe

| Leaf Node QFX switch model | Number of available 400GE ports per switch | Maximum number of servers supported per stripe for 1:1 subscription (N1) | GPUs per server | Maximum number of GPUs supported per stripe (N2) |
|---|---|---|---|---|
| QFX5220-32CD | 32 | 32 ÷ 2 = 16 | 8 | 16 servers x 8 GPUs/server = 128 GPUs |
| QFX5230-64CD | 64 | 64 ÷ 2 = 32 | 8 | 32 servers x 8 GPUs/server = 256 GPUs |
| QFX5240-64OD | 128 | 128 ÷ 2 = 64 | 8 | 64 servers x 8 GPUs/server = 512 GPUs |

- QFX5220-32CD switches provide 32 x 400 GE ports => 16 will be used to connect to the servers and 16 will be used to connect to the spine nodes.

- QFX5230-64CD switches provide up to 64 x 400 GE ports => 32 will be used to connect to the servers and 32 will be used to connect to the spine nodes.

- QFX5240-64OD switches provide up to 128 x 400 GE ports => 64 will be used to connect to the servers and 64 will be used to connect to the spine nodes.
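The per-stripe limits in Table 7 follow directly from the half-down, half-up port split. A minimal sketch, using the port counts from the table and an illustrative function name:

```python
GPUS_PER_SERVER = 8

# 400GE port counts per leaf model, as listed in Table 7.
LEAF_PORTS_400GE = {
    "QFX5220-32CD": 32,
    "QFX5230-64CD": 64,
    "QFX5240-64OD": 128,
}


def stripe_capacity(ports: int, gpus_per_server: int = GPUS_PER_SERVER) -> tuple[int, int]:
    """Return (N1, N2): max servers and max GPUs per stripe at 1:1 subscription."""
    n1 = ports // 2              # half the ports face servers, half face spines
    n2 = n1 * gpus_per_server
    return n1, n2


for model, ports in LEAF_PORTS_400GE.items():
    n1, n2 = stripe_capacity(ports)
    print(f"{model}: N1 = {n1} servers, N2 = {n2} GPUs per stripe")
```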

To achieve larger scales, multiple stripes (N3) can be connected using a set of Spine nodes (N4), as shown in Figure 9.

The number of stripes required (N3) is calculated based on the required number of GPUs and the maximum number of GPUs per stripe (N2).

For example, assume that the required number of GPUs (GPUs) is 16,000 and the fabric is using QFX5240-64OD as leaf nodes.

The number of available 400G ports is 128 which means that:

- maximum number of servers per stripe (N1) = 64

- maximum number of GPUs per stripe (N2) = 512

The number of stripes (N3) required is calculated by dividing the number of GPUs required by the maximum number of GPUs per stripe, and rounding up to the next whole stripe:

N3 (number of stripes required) = GPUs ÷ N2 (maximum number of GPUs per stripe) = 16000 ÷ 512 = 31.25, rounded up to 32 stripes

- With 32 stripes and 512 GPUs per stripe, the cluster can provide 16,384 GPUs.

Knowing the number of stripes required (N3) and the number of uplink ports per leaf node (Y), you can calculate how many spine nodes are required.

Remember that X = Y = N1.

First, the total number of leaf nodes can be calculated by multiplying the number of stripes required by 8 (number of leaf nodes per stripe).

Total number of leaf nodes = N3 x 8 = 32 x 8 = 256

Then the total number of uplinks can be obtained by multiplying the number of uplinks per leaf node (N1) by the total number of leaf nodes.

Total number of uplinks = N1 x total number of leaf nodes = 64 x 256 = 16384

The number of spines required (N4) can then be determined by dividing the total number of uplinks by the number of available ports on each spine node, which, as for the leaf nodes, depends on the switch model used for the spine role.

Number of spines required (N4) = 16384 ÷ number of available ports on each spine node

For example, if the spine nodes are QFX5240, the number of available ports on each spine node is 128.

Table 8: Number of spine nodes required for 32 stripes

| Spine Node switch model | Maximum number of 400GE interfaces per switch | Number of spines required (N4) with 32 stripes |
|---|---|---|
| QFX5240-64OD | 128 | 16384 ÷ 128 = 128 |
| PTX10008 | 288 | 16384 ÷ 288 ≈ 56.9, rounded up to 57 |

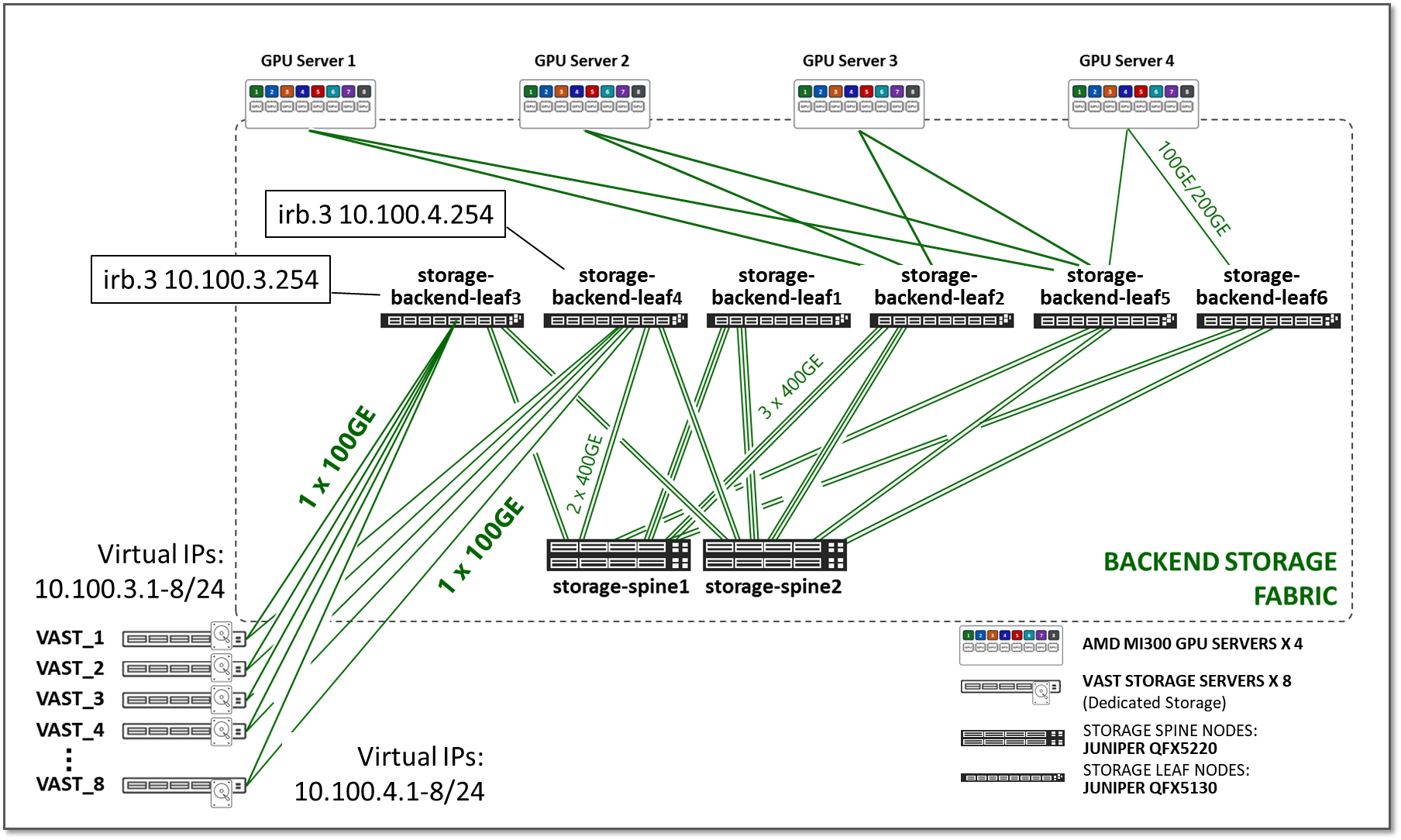

Storage Backend Fabric

In small clusters, it may be sufficient to use the local storage on each GPU server, or to aggregate this storage together using open-source or commercial software. In larger clusters with heavier workloads, an external dedicated storage system is required to provide dataset staging for ingest, and for cluster checkpointing during training.

Two leading platforms, WEKA and Vast Storage, provide cutting-edge solutions for shared storage in GPU environments. While we have tested both solutions in our lab, this JVD focuses on the Vast Storage Solution. Thus, the rest of this, as well as other sections in this document will cover details about Vast Storage devices and connectivity to the Storage Backend Fabric. Details about the WEKA storage are included in the AI Data Center Network with Juniper Apstra, NVIDIA GPUs, and WEKA Storage—Juniper Validated Design (JVD).

The Storage Backend Fabric provides the connectivity infrastructure for storage devices to be accessible from the GPU servers. The performance of this fabric significantly impacts the efficiency of AI workflows. A storage system that provides quick access to data can significantly reduce the amount of time for training AI models. Similarly, a storage system that supports efficient data querying and indexing can minimize the completion time of preprocessing and feature extraction in an AI workflow.

The Storage Backend Fabric design in the JVD also follows a 3-stage IP Clos architecture, as shown in Figure 16. There is no concept of rail optimization in a storage cluster. Each GPU server has a single connection to each of its leaf nodes, instead of one connection per GPU.

Figure 16: Storage Backend Fabric Architecture

The Storage Backend devices included in this fabric, and the connections between them, are summarized in the following tables:

Table 9: Storage Backend devices

| GPU Servers | Storage Servers | Storage Backend Leaf nodes switch model | Storage Backend Spine nodes switch model |
|---|---|---|---|
| AMD MI300X x 4 (MI300X-#; #=1-4) | Vast storage server x 8 (Vast#; #=1-8) | QFX5130-32CD x 6 (storage-leaf#; #=1-6): 4 connecting the GPU servers, and 2 connecting the storage devices | QFX5130-32CD x 2 (storage-spine#; #=1-2) |

QFX5230 and QFX5240 were also validated for the Storage Backend Leaf and Spine roles.

Table 10: Connections between servers, leaf and spine nodes in the Storage Backend

| GPU Servers <=> Storage Backend Leaf Nodes | Storage Servers <=> Storage Backend Leaf Nodes | Storage Backend Leaf Nodes <=> Storage Backend Spine Nodes |
|---|---|---|
| 1 x 200GE link per GPU server to leaf node connection. Total number of 200GE links between GPU servers and storage leaf nodes = 8 (4 servers x 2 leaf nodes x 1 link per server to leaf connection) (1) | 1 x 100GE link per Vast C-node to leaf node connection. Total number of 100GE links between Vast C-nodes and storage leaf nodes = 16 (8 C-nodes x 2 leaf nodes x 1 link per node to leaf connection) (2) | 2 x 400GE or 3 x 400GE links per leaf node to spine node connection. Total number of 400GE links between storage backend leaf nodes and spine nodes = 28 ((4 leaf nodes x 2 spine nodes x 2 links per leaf to spine connection) + (2 leaf nodes x 2 spine nodes x 3 links per leaf to spine connection)) |

(1) AMD MI300X servers are dual homed

(2) Vast storage C-nodes are dual homed

Scaling

The size of an AI cluster varies significantly depending on the specific requirements of the workload. The number of nodes in an AI cluster is influenced by factors such as the complexity of the machine learning models, the size of the datasets, the desired training speed, and the available budget. The number varies from a small cluster with fewer than 100 nodes to a data center-wide cluster comprising tens of thousands of compute, storage, and networking nodes. A minimum of 4 spines must always be deployed for path diversity and reduction of PFC failure paths.

Table 11: Fabric Scaling- Devices and Positioning

| Fabric Scaling | ||

|---|---|---|

| Small | Medium | Large |

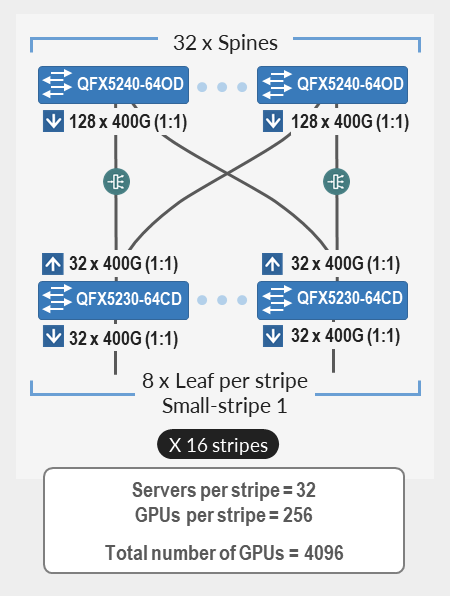

| upto 4096 GPU | upto 8192 GPU | 8192 and upto 73,728 GPU |

|

Support for up to 4096 GPUs with Juniper QFX5240-64CDs as spine nodes and QFX5230-64CD as leaf nodes (single or multi-stripe implementations). This 3-stage rail-based fabric consists of up to 32 Spines and 128 leaf nodes, maintaining a 1:1 subscription. The fabric provides physical connectivity for up to 16 stripes, with 32 servers (256 GPUs) per stripe, for a total of 4096 GPUs. |

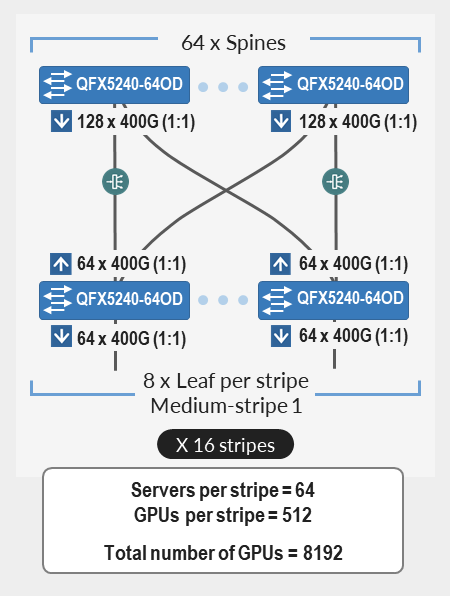

Support for more than 4096 GPU and upto 8192 GPUs with Juniper QFX5240-64CDs as both Spine and Leaf nodes. This 3-stage rail-based fabric consists of up to 64 spines, and up to 128 leaf nodes, maintaining a 1:1 subscription. The fabric provides physical connectivity for up to 16 Stripes, with 64 servers (512 GPUs) per stripe, for a total of 8192 GPUs |

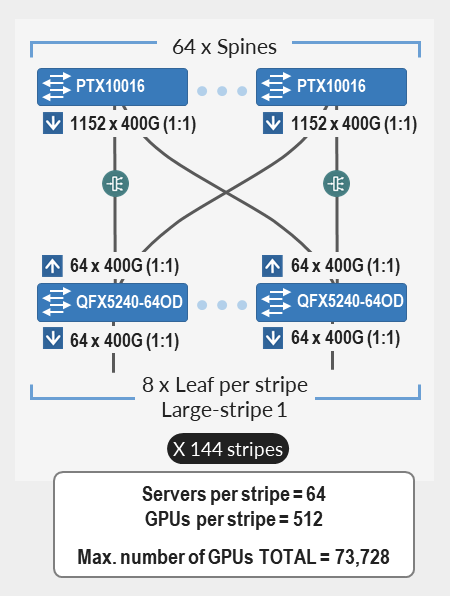

Support of more than 8192 GPU and upto . 73,728 GPUs with Juniper PTX10000 Chassis as Spine nodes and Juniper QFX5240-64CDs as leaf nodes. This 3-stage rail-based fabric consists of up to 64 spines, and up to 1152 leaf nodes, maintaining a 1:1 subscription. The fabric provides physical connectivity for up to 144 Stripes, with 64 servers (512 GPUs) per stripe, for a total of 73,728 GPUs. |

|

|

|
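The Small and Medium figures in Table 11 can be reproduced with the sizing steps from the rail-optimized architecture section (stripes, then leaf nodes, then uplinks, then spines). The sketch below assumes 8 GPUs per server and 128 x 400GE ports per QFX5240 spine, as stated earlier; the function name and the returned dictionary keys are illustrative.

```python
import math

LEAVES_PER_STRIPE = 8  # one leaf node per rail (8 GPUs per server)


def size_fabric(gpus_required: int, n1: int, spine_ports: int, gpus_per_server: int = 8) -> dict:
    """Size a rail-optimized fabric at 1:1 subscription.

    n1 is the number of servers per stripe, which also equals the number of
    uplinks per leaf node.
    """
    n2 = n1 * gpus_per_server                   # GPUs per stripe
    n3 = math.ceil(gpus_required / n2)          # stripes required
    leaf_nodes = n3 * LEAVES_PER_STRIPE
    uplinks = n1 * leaf_nodes
    n4 = math.ceil(uplinks / spine_ports)       # spines required
    return {"stripes": n3, "leaf_nodes": leaf_nodes, "uplinks": uplinks, "spines": n4}


# Small: QFX5230-64CD leaf nodes (N1 = 32), QFX5240 spines (128 ports).
small = size_fabric(4096, n1=32, spine_ports=128)
# Medium: QFX5240 leaf nodes (N1 = 64), QFX5240 spines (128 ports).
medium = size_fabric(8192, n1=64, spine_ports=128)

print(small)
print(medium)
```

Rounding up with `math.ceil` is a choice made for this sketch, so that a partially filled stripe or spine still counts as a whole device.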

Juniper Hardware and Software Solution Components

The Juniper products and software versions listed below pertain to the latest validated configuration for the AI DC use case. As part of an ongoing validation process, we routinely test different hardware models and software versions and update the design recommendations accordingly.

The version of Juniper Apstra in the setup is 6.1.

The following table summarizes the validated Juniper devices for this JVD and includes devices tested for the AI Data Center Network with Juniper Apstra, NVIDIA GPUs, and WEKA Storage—Juniper Validated Design (JVD).

Table 12: Validated Devices and Positioning

| Fabric | Leaf Switches | Spine Switches |
|---|---|---|
| Frontend | QFX5130-32CD | QFX5130-32CD |
| GPU Backend | QFX5230-64CD (CLUSTER 1-STRIPE 1); QFX5220-32CD (CLUSTER 1-STRIPE 2); QFX5240-64CD/QFX5241-64CD (CLUSTER 2) | QFX5230-64CD (CLUSTER 1); PTX10008 JNP10K-LC1201 (CLUSTER 1); QFX5240-64CD/QFX5241-64CD (CLUSTER 2) |
| Storage Backend | QFX5220-32CD; QFX5230-64CD; QFX5240-64CD/QFX5241-64CD | QFX5220-32CD; QFX5230-64CD; QFX5240-64CD/QFX5241-64CD |

The following table summarizes the software versions tested and validated by role.

Table 13: Platform Recommended Release

| Platform | Role | Junos OS Release |
|---|---|---|
| QFX5240-64CD | GPU Backend Leaf | 23.4X100-D31 |
| QFX5241-64CD | GPU Backend Spine | 23.4X100-D42 |
| QFX5220-32CD | GPU Backend Leaf | 23.4X100-D20 |
| QFX5230-64CD | GPU Backend Leaf | 23.4X100-D20 |
| QFX5240-64CD | GPU Backend Spine | 23.4X100-D31 |
| QFX5241-64CD | GPU Backend Spine | 23.4X100-D42 |
| QFX5230-64CD | GPU Backend Spine | 23.4X100-D20 |
| PTX10008 with LC1201 | GPU Backend Spine | 23.4R2-S3 |
| QFX5130-32CD | Frontend Leaf | 23.4R2-S3 |
| QFX5130-32CD | Frontend Spine | 23.4R2-S3 |
| QFX5220-32CD | Storage Backend Leaf | 23.4X100-D20 |
| QFX5230-64CD | Storage Backend Leaf | 23.4X100-D20 |
| QFX5240-64CD | Storage Backend Leaf | 23.4X100-D20 |
| QFX5241-64CD | Storage Backend Leaf | 23.4X100-D42 |
| QFX5220-32CD | Storage Backend Spine | 23.4X100-D20 |
| QFX5230-64CD | Storage Backend Spine | 23.4X100-D20 |
| QFX5240-64CD | Storage Backend Spine | 23.4X100-D20 |
| QFX5241-64CD | Storage Backend Spine | 23.4X100-D42 |

IP Services for AI Networks

In the next few sections, we describe the various strategies that can be employed to handle traffic congestion and traffic load distribution in the Backend GPU fabric.

Congestion Management

AI clusters pose unique demands on network infrastructure due to their high-density, low-entropy traffic patterns, characterized by frequent elephant flows with minimal flow variation. Additionally, most AI models require uninterrupted packet flow with no packet loss for training jobs to complete.

For these reasons, when designing a network infrastructure for AI traffic flows, the key objectives include maximum throughput, minimal latency, and minimal network interference over a lossless fabric, resulting in the need to configure effective congestion control methods.

Data Center Quantized Congestion Notification (DCQCN) has become the industry standard for end-to-end congestion control for RDMA over Converged Ethernet (RoCEv2) traffic. DCQCN congestion control methods offer techniques to strike a balance between reducing traffic rates and stopping traffic altogether to alleviate congestion, without resorting to packet drops.

Note that DCQCN is primarily required in the GPU backend fabric, where the majority of AI workload traffic resides, while it is generally unnecessary in the frontend or storage backend.

DCQCN combines two different mechanisms for flow and congestion control:

- Priority-Based Flow Control (PFC), and

- Explicit Congestion Notification (ECN).

Priority-Based Flow Control (PFC) helps relieve congestion by halting traffic flow for individual traffic priorities (IEEE 802.1p or DSCP markings) mapped to specific queues or ports. The goal of PFC is to stop a neighbor from sending traffic for an amount of time (PAUSE time), or until the congestion clears. This process consists of sending PAUSE control frames upstream requesting the sender to halt transmission of all traffic for a specific class or priority while congestion is ongoing. The sender completely stops sending traffic to the requesting device for the specific priority.

While PFC mitigates data loss and allows the receiver to catch up processing packets already in the queue, it impacts performance of applications using the assigned queues during the congestion period. Additionally, resuming traffic transmission post-congestion often triggers a surge, potentially exacerbating or reinstating the congestion scenario.

We recommend configuring PFC only on the QFX devices acting as leaf nodes.

Explicit Congestion Notification (ECN), on the other hand, curtails transmit rates during congestion while enabling traffic to persist, albeit at reduced rates, until congestion subsides. The goal of ECN is to reduce packet loss and delay by making the traffic source decrease the transmission rate until the congestion clears. This process entails marking packets with ECN bits at congestion points by setting the ECN bits to 11 in the IP header. The presence of this ECN marking prompts receivers to generate Congestion Notification Packets (CNPs) sent back to source, which signal the source to throttle traffic rates.
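To make the marking step concrete, the sketch below sets the ECN field to 11 (Congestion Experienced). The ECN field occupies the two least-significant bits of the IPv4 ToS byte (IPv6 Traffic Class), below the six DSCP bits. The function names and the DSCP value 26 are illustrative assumptions and do not represent the marking used in this JVD.

```python
ECN_MASK = 0b11
NOT_ECT = 0b00   # sender did not declare ECN capability
ECN_CE = 0b11    # Congestion Experienced


def mark_congestion(tos: int) -> int:
    """Return the ToS byte with ECN set to CE, leaving the DSCP bits untouched.

    Packets that are not ECN-capable (ECN field 00) are returned unchanged.
    """
    if (tos & ECN_MASK) == NOT_ECT:
        return tos
    return tos | ECN_CE


def is_congestion_marked(tos: int) -> bool:
    """True when the receiver should react to this packet by generating a CNP."""
    return (tos & ECN_MASK) == ECN_CE


tos = (26 << 2) | 0b10           # hypothetical DSCP 26, ECN-capable (ECT(0))
marked = mark_congestion(tos)
print(f"{tos:08b} -> {marked:08b}, DSCP still {marked >> 2}")
```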

Combining PFC and ECN offers the most effective congestion relief in a lossless IP fabric supporting RoCEv2, while safeguarding against packet loss. To achieve this, when implementing PFC and ECN together, their parameters should be carefully selected so that ECN is triggered before PFC.
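The ordering requirement (ECN before PFC) can be illustrated with a deliberately simplified model. All names and threshold values here are hypothetical. The sketch collapses ECN marking and PFC into a single queue-depth comparison, whereas real devices use separate buffer accounting and typically mark ECN probabilistically across a range of fill levels.

```python
def congestion_action(queue_depth_kb: int, ecn_threshold_kb: int, pfc_threshold_kb: int) -> str:
    """Pick the congestion response for a given queue depth (simplified model)."""
    if ecn_threshold_kb >= pfc_threshold_kb:
        # With this ordering PFC would pause traffic before ECN had a chance to slow it.
        raise ValueError("ECN threshold must be lower than the PFC threshold")
    if queue_depth_kb >= pfc_threshold_kb:
        return "send PFC PAUSE"
    if queue_depth_kb >= ecn_threshold_kb:
        return "mark ECN"
    return "forward"


# Hypothetical thresholds: ECN starts at 200 KB, PFC at 800 KB.
for depth in (50, 300, 900):
    print(depth, congestion_action(depth, ecn_threshold_kb=200, pfc_threshold_kb=800))
```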

For more information, refer to Introduction to Congestion Control in Juniper AI Networks, which explores how to build a lossless fabric for AI workloads using DCQCN (ECN and PFC) congestion control methods and DLB. The document uses the DLRM training model as a reference and demonstrates how different congestion parameters, such as ECN and PFC counters, input drops, and tail drops, can be monitored to adjust configuration and build a lossless fabric infrastructure for RoCEv2 traffic.

Load Balancing

The fabric architecture used in this JVD for both the Frontend and Backend fabrics follows the 2-stage Clos design, with every leaf node connected to all the available spine nodes over multiple interfaces. As a result, multiple paths are available between the leaf and spine nodes to reach other devices.

AI traffic characteristics may impede optimal link utilization when traditional Equal-Cost Multipath (ECMP) Static Load Balancing (SLB) is used over these paths. The hashing algorithm looks at specific fields in the packet headers, and because AI flows are few and have very similar headers, multiple flows can be mapped to the same link. Consequently, certain links are favored, and their high utilization may impede the transmission of smaller low-bandwidth flows, leading to potential collisions, congestion, and packet drops. To improve the distribution of traffic across all the available paths, either Dynamic Load Balancing (DLB) or Global Load Balancing (GLB) can be implemented instead.
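The effect of static hashing on a small number of large flows can be sketched as follows. This is a toy model: it uses CRC32 over a 5-tuple as a stand-in for the switch hash function (the real hash and field selection are platform specific), and the addresses, source ports, link count, and flow rates are made up for illustration. The only protocol fact it relies on is that RoCEv2 uses UDP destination port 4791.

```python
import zlib

ROCEV2_UDP_PORT = 4791  # RoCEv2 traffic uses this UDP destination port


def static_ecmp_link(flow: tuple, n_links: int) -> int:
    """Pick a link from the 5-tuple only (CRC32 is a stand-in hash)."""
    key = "|".join(str(field) for field in flow).encode()
    return zlib.crc32(key) % n_links


def link_load(flows: list, n_links: int) -> dict:
    """Sum the offered load (Gbps) per link under static hashing."""
    load = {link: 0.0 for link in range(n_links)}
    for flow, gbps in flows:
        load[static_ecmp_link(flow, n_links)] += gbps
    return load


# Eight hypothetical long-lived GPU-to-GPU flows of 200 Gbps each.
flows = [
    ((f"10.0.0.{i}", f"10.0.1.{i}", 17, 49152 + i, ROCEV2_UDP_PORT), 200.0)
    for i in range(8)
]

for link, gbps in sorted(link_load(flows, n_links=8).items()):
    print(f"link {link}: {gbps:.0f} Gbps")
```

With only a handful of flows, nothing in the hash guarantees one flow per link, so some links can carry several flows while others stay idle, and the hash never reacts to that imbalance.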

For this JVD, Dynamic Load Balancing in flowlet mode was implemented on all the QFX leaf and spine nodes. Global Load Balancing is also included as an alternative solution.

Additional testing was conducted on the QFX5240-64OD in the GPU Backend fabric to evaluate the benefits of Selective Dynamic Load Balancing and Reactive Path Rebalancing. Note that these load balancing mechanisms are only available on QFX devices.

Dynamic Load Balancing (DLB)

Dynamic Load Balancing (DLB) ensures that all paths are utilized more fairly, by not only looking at the packet headers, but also considering real-time link quality based on port load (link utilization) and port queue depth when selecting a path. This method provides better results when multiple long-lived flows moving large amounts of data need to be load balanced.

DLB can be configured in two different modes:

- Per-packet mode: packets from the same flow are sprayed across the member links of an IP ECMP group, which can cause packets to arrive out of order.

- Flowlet mode: packets from the same flow are sent over a single member link of an IP ECMP group. A flowlet is a burst of packets from the same flow, separated from the next burst by a period of inactivity. If a flow pauses for longer than the configured inactivity timer, the quality of the member links can be reevaluated and the flow can be reassigned to a different link.
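The flowlet behavior described above can be sketched as a small state machine. This is a conceptual model, not the switch implementation: the class name, the single quality score per link (higher is better, standing in for port load and queue depth), and the timer value are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class FlowletBalancer:
    """Toy flowlet-mode selector keyed by flow ID (hypothetical model)."""
    inactivity_timer_us: float
    # flow_id -> (assigned link, time the flow was last seen in microseconds)
    assignments: dict = field(default_factory=dict)

    def select_link(self, flow_id: str, now_us: float, link_quality: dict) -> str:
        """Return the link for this packet.

        link_quality maps link name -> score, where higher means better.
        """
        current = self.assignments.get(flow_id)
        if current is not None:
            link, last_seen_us = current
            # Same flowlet: the gap is within the timer, so keep the link
            # and preserve packet ordering.
            if now_us - last_seen_us <= self.inactivity_timer_us:
                self.assignments[flow_id] = (link, now_us)
                return link
        # New flow or new flowlet: reevaluate and pick the best link now.
        best = max(link_quality, key=link_quality.get)
        self.assignments[flow_id] = (best, now_us)
        return best


balancer = FlowletBalancer(inactivity_timer_us=16.0)
print(balancer.select_link("flow-1", 0.0, {"et-0/0/0": 0.9, "et-0/0/1": 0.1}))
print(balancer.select_link("flow-1", 10.0, {"et-0/0/0": 0.1, "et-0/0/1": 0.9}))
print(balancer.select_link("flow-1", 40.0, {"et-0/0/0": 0.1, "et-0/0/1": 0.9}))
```

In this sketch the second packet stays on its original link even though link quality has changed, because the gap is shorter than the timer. Only the third packet, arriving after a longer pause, is moved to the better link.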

Selective Dynamic Load Balancing (SDLB): applies DLB only to selected traffic. At the time of this document's publication, this feature is only supported on the QFX5240-64OD and QFX5240-64QD, starting in Junos OS Evolved Release 23.4R2.

Reactive Path Rebalancing: allows a flow to be reassigned to a different (better) link when the quality of the current link deteriorates, even if the flow has not paused for longer than the configured inactivity timer. At the time of this document's publication, this feature is only supported on the QFX5240-64OD and QFX5240-64QD, starting in Junos OS Evolved Release 23.4R2.

Global Load Balancing (GLB)

GLB is an improvement on DLB. DLB only considers the bandwidth utilization of local links, whereas GLB has visibility into the bandwidth utilization of links at the next-to-next-hop (NNH) level. As a result, GLB can reroute traffic flows to avoid congestion farther out in the network than DLB can detect.

AI-ML data centers have less entropy and larger data flows than other networks. Because hash-based load balancing does not always effectively load-balance large data flows of traffic with less entropy, dynamic load balancing (DLB) is often used instead. However, DLB considers only the local link bandwidth utilization. For this reason, DLB can effectively mitigate traffic congestion only on the immediate next hop. GLB more effectively load-balances large data flows by taking traffic congestion on remote links into account.
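The difference between the two approaches can be sketched with a two-spine example. This is a conceptual model only: it assumes a path is scored by the worst utilization along it, which is a simplification chosen for illustration and not the documented GLB algorithm. The spine names and utilization values are hypothetical.

```python
def dlb_pick(local_util: dict) -> str:
    """DLB view: choose the next hop with the least-utilized local link."""
    return min(local_util, key=local_util.get)


def glb_pick(local_util: dict, nnh_util: dict) -> str:
    """GLB view: also consider the link from each spine toward the
    destination leaf (next-to-next-hop).

    A path is scored by its most utilized link (assumed scoring rule).
    """
    return min(local_util, key=lambda spine: max(local_util[spine], nnh_util[spine]))


# Utilization (0..1) of the leaf-to-spine links as seen by the source leaf.
local_util = {"spine1": 0.20, "spine2": 0.40}
# Utilization of the spine-to-destination-leaf links (remote congestion).
nnh_util = {"spine1": 0.95, "spine2": 0.30}

print("DLB chooses:", dlb_pick(local_util))
print("GLB chooses:", glb_pick(local_util, nnh_util))
```

Here the local view favors spine1, but the link from spine1 to the destination leaf is nearly saturated. Taking the remote link into account steers the flow through spine2 instead.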

GLB is only supported on the QFX5240 (TH5), starting in Junos OS Evolved Releases 23.4R2 and 24.4R1. It requires a full 3-stage Clos architecture and is limited to a single link between each spine and leaf. GLB does not work when there is more than one interface, or a bundle, between a leaf and spine pair. GLB also supports a maximum of 64 profiles in its table, which means there can be up to 64 leaf nodes in the 3-stage Clos topology where GLB is running.

For additional details on the operation and configuration of GLB, refer to the HPE Juniper Networking blog post "Avoiding AI/ML traffic congestion with global load balancing."


Ethernet Network Adapters (NICs) for AI Data Centers

As AI/ML workloads increase in complexity and scale, networking becomes crucial for efficient job completion times. The network adapters (NICs) connect the GPUs to the data center fabrics, so they must handle large amounts of data and support high-speed, low-latency communication between GPU servers. At a minimum, the NICs should support the following key AI/ML functionality:

- RDMA over Converged Ethernet (RoCE) and congestion control.

- Ability to handle 400G bidirectional traffic with low latency.

- Advanced congestion control mechanisms that are sensitive and able to react to network congestion and optimize traffic flow.

- Support GPU scalability ensuring robust performance even with increasing GPUs.

For Server NICs, we have two options:

- Broadcom Thor2—The Broadcom Thor2 network adapters were validated for AI/ML workloads and job completion times.

- AMD Pollara—AMD Pollara 400 ethernet network adapters

For more information on AMD Pensando Pollara 400 (ethernet adapter) refer to this link.