AMD Configuration

The AI servers covered as part of the JVD include two Supermicro AS-8125GS-TNMR2 dual AMD EPYC 8U GPU servers and two Dell PowerEdge XE9680 servers.

This section provides guidelines for installing and configuring the interfaces and other relevant parameters, based on the AI JVD lab testing. Always refer to the official manufacturer documentation when making changes and for more details.

AMD MI300X: Setting BIOS Parameters

Each vendor has different BIOS settings based on differences in its UI and GPU mappings and the servers' internal architectures.

Supermicro AS-8125GS-TNMR2

Boot the server into Setup mode (the Supermicro splash screen takes several minutes to appear) and apply the following settings:

| UEFI/BIOS Area | Value |
|---|---|
| Advanced -> NB Configuration | ACS Enable = Disable |
| Advanced -> NB Configuration -> xGMI | xGMI Link Width Control = Manual |
| | xGMI Force Link Width Control = Force |
| | xGMI Force Link Width = 2 |
| | xGMI Max Link Width Control = Manual |
| | xGMI Link Max Speed = Auto |
| Advanced -> PCIe/PCI/PnP Configuration | Above 4G Decoding = Enabled |
| | Re-Size BAR Support = Enabled |
| | SR-IOV Support = Enabled |
| | Workload = Not Configured |

DELL XE9680

The following BIOS settings are recommended by Dell for their XE9680 AI/ML server. The BIOS settings disable IOMMU and ACS on the host as well.

| UEFI/BIOS Area | Value |
|---|---|
| BIOS -> Processor Settings | Logical Processor = Disable |
| | Virtualization Technology = Disable |
| | SubNumaCluster = Disable |
| | MADT Core cluster = Linear |
| BIOS -> Integrated Devices | Global SR-IOV = Disable (see note 1) |
| BIOS -> System Profile Setting | Server System Profile = Performance |
| | Workload = Not Configured |
| BIOS -> System Security | AC Recovery Delay = Random (highly recommended) |

Note 1: Dell recommends enabling Global SR-IOV. On the Dell DUTs in this lab setup, however, this setting was incompatible with the Thor2 NIC port mode 0 used for the storage and frontend fabrics (2x200Gb vs. 1x400Gb) and caused the DUT to fault on boot. Consult your Dell account team for recommendations about this setting in your setup.

Follow the configuration steps described in the AMD documentation "Single-node network configuration for AMD Instinct accelerators" (GPU cluster networking). Note that the script that disables ACS, used in step 6 of that procedure, must also be run after every server reboot, before any workloads are started.
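Because the ACS state does not persist across reboots, it can help to confirm it before starting a workload. The following is a minimal sketch, not part of the referenced AMD procedure. It assumes that a device reporting `SrcValid+` on its `ACSCtl` line in `lspci -vvv` output still has ACS enabled; the function name `count_acs_enabled` is hypothetical.

```shell
# Count PCIe devices that still report ACS source validation as enabled.
# Reads `lspci -vvv` text on stdin so it can be exercised without hardware.
# Assumption: "SrcValid+" on an ACSCtl line means ACS is still active.
count_acs_enabled() {
    # In a basic regular expression the "+" is a literal character.
    # grep -c exits non-zero when the count is 0, so mask that status.
    grep -c 'ACSCtl:.*SrcValid+' || true
}
```

A possible use is `sudo lspci -vvv | count_acs_enabled`; a result of 0 would suggest that the disable ACS script has already been run since the last reboot.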

Identifying NICs and GPUs mappings

Along with the fabric and GPU server setup, this JVD covers the configuration and setup of the Ethernet network adapters (NICs) listed below. The Broadcom BCM57608 (Thor2) Ethernet network adapter was validated in Phase 1, and the AMD Pollara 400 NIC was validated in Phase 2.

All four servers are equipped with:

- 8 x AMD Instinct MI300X OAM GPUs

and one of the following NIC options:

- 8 x single-port 400/200/100/50/25/10GbE Broadcom BCM57608 (Thor2) adapters with 400Gbps QDD-400G-DR4 transceivers, used to connect to the GPU backend fabric.

or

- 8 x AMD Pensando Pollara 400 Ethernet network adapters (single-port 400G, 2-port 200G, or 4-port 100/50/25G) with Q112-400G-DR4 transceivers, used to connect to the GPU backend fabric.

Dell devices:

- 1 x Mellanox MT2910 Family NVIDIA® ConnectX®-7 SmartNIC with 100Gbps QSFP28 transceivers to connect to the Frontend Fabric

- 2 x Mellanox MT2910 Family NVIDIA® ConnectX®-7 SmartNIC with 200Gbps QDD-2X200G-AOC-5M transceivers to connect to the Frontend Fabric

AMD MI300x GPU Server and NIC Firmware and RCCL libraries

Broadcom Thor 2 Ethernet Adapter

For the Broadcom Thor2 NIC validation, the following operating system (OS) and firmware versions were installed on the MI300X GPU servers:

| OS/Firmware | Version |

|---|---|

| Ubuntu | Ubuntu 24.04.2 LTS |

| Broadcom Thor2 NIC Firmware version | 231.2.63.0 |

The following libraries were installed for the RCCL tests with the Thor2 network adapter:

| RCCL Test libraries | Version | Command |
|---|---|---|
| rocm/noble | 6.4.0.60400-47~24.04 amd64 | apt list rocm |
| rccl [1] | 2.22.3.60400-47~24.04 amd64 | apt list rccl |
| mpi (Open MPI) | 5.0.8a1 | mpirun --version |
| UCX | 1.15.0 | /opt/ucx/bin/ucx_info -v |

AMD Pensando Pollara 400 Ethernet Adapter

For the purposes of the AMD Pollara 400 NIC validation, the following are the main OS and firmware versions configured on the MI300x GPU servers:

| OS/Firmware | Version |

|---|---|

| Ubuntu | Ubuntu 22.04.5 LTS |

| AMD Pollara NIC Firmware version | 1.110.0-a-79 |

Output showing the Ubuntu 22.04 version installed on the MI300X servers:

    jnpr@mi300-01:~$ cat /etc/os-release
    PRETTY_NAME="Ubuntu 22.04.5 LTS"
    NAME="Ubuntu"
    VERSION_ID="22.04"
    VERSION="22.04.5 LTS (Jammy Jellyfish)"
    VERSION_CODENAME=jammy
    ID=ubuntu
    ID_LIKE=debian
    HOME_URL="https://www.ubuntu.com/"
    SUPPORT_URL="https://help.ubuntu.com/"
    BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
    PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"

Output showing the AMD Pollara 400 NIC firmware version:

    jnpr@mi300-01:~$ sudo nicctl show card --detail | grep Firmware
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
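With eight cards per server, comparing the firmware lines by eye is error-prone. As a minimal sketch, the helper below reduces the `nicctl` output shown above to its distinct firmware versions. The function name `distinct_fw_versions` is hypothetical, and the sketch assumes the `Firmware version : <value>` line layout shown in the example.

```shell
# Print the distinct firmware versions found in the output of
# `nicctl show card --detail` (read from stdin).
# A single line of output means every card runs the same firmware.
distinct_fw_versions() {
    sed -n 's/^[[:space:]]*Firmware version[[:space:]]*:[[:space:]]*//p' | sort -u
}
```

For example, `sudo nicctl show card --detail | distinct_fw_versions` should print one line when all cards in the server are on the same release.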

The following libraries were installed for the RCCL tests with the AMD Pollara 400 NIC adapter:

| RCCL Test libraries | Version | Command |
|---|---|---|
| rocm/jammy | 6.3.3.60303-74~22.04 amd64 | apt list rocm |
| rccl [1] | 7961624 | |
| mpi (Open MPI) | 5.1.0a1 | /opt/ompi/bin/mpirun --version |
| UCX | 1.20.0 | /opt/ucx/bin/ucx_info -v |
| rccl-tests | revision 6704fc6 | Git branch https://github.com/ROCm/rccl-tests.git |
| ANP Plugin [2] | | |

For more information on installing this software and its dependent libraries, see the high-level steps provided later in the section AMD Pollara firmware and dependent libraries. Those steps can only be performed once the NICs and GPUs are mapped as described in the sections below.

This section explores some of the options for finding information about, and configuring, the NICs and GPUs.

ROCm Communication Collectives Library (RCCL)

On AMD servers, the ROCm Communication Collectives Library (RCCL) provides multi-GPU and multi-node collective communication primitives optimized for AMD GPUs. These primitives implement communication operations such as all-reduce, all-gather, reduce, broadcast, and all-to-all across multiple GPUs in one or more GPU servers.

Communication between GPUs in a single server is implemented using xGMI (inter-chip global memory interconnect), part of AMD's Infinity Fabric technology. The Infinity Fabric is a high-bandwidth, low-latency interconnect for the various components within a system including CPUs, GPUs, memory, NICs and other devices. xGMI provides socket-to-socket communication, allowing direct CPU-to-CPU or GPU-to-GPU communication.

Communication between different servers is processed by RDMA-capable NICs (e.g., RoCEv2 over Ethernet) and routed across the GPU backend fabric. These NICs can be used by any GPU at any time as there is no hard coded 1-to-1 GPU to NIC mapping. However, the use of preferred communication paths between GPUs and NICs creates the appearance of a 1:1 correspondence.

RCCL always chooses the path with the best connection between GPUs, and between GPUs and NICs, to optimize bandwidth and latency. Optimized intra-node paths are used before traffic is forwarded between nodes.

The rocm-smi (Radeon Open Compute Platform System Management Interface) CLI provides tools for configuring and monitoring AMD GPUs. It can be used to identify GPU hardware details as well as topology information using options such as:

- --showproductname: shows product details
- --showtopo: shows hardware topology information
- --showtopoaccess: shows the link accessibility between GPUs
- --showtopohops: shows the number of hops between GPUs
- --showtopotype: shows the link type between GPUs
- --showtoponuma: shows the NUMA nodes
- --shownodesbw: shows the NUMA nodes bandwidth
- --showhw: shows the hardware details

Examples from AMD Instinct MI300X OAM:

The --showproductname option shows the GPU series, model, and vendor along with additional details. The example output shows that AMD Instinct™ MI300X Platform GPUs are installed in the server.

    jnpr@MI300X-01:/proc$ rocm-smi --showproductname
    ============================ ROCm System Management Interface ============================
    ====================================== Product Info ======================================
    GPU[0] : Card Series: AMD Instinct MI300XX OAM
    GPU[0] : Card Model: 0x74a1
    GPU[0] : Card Vendor: Advanced Micro Devices, Inc. [AMD/ATI]
    GPU[0] : Card SKU: M3000100
    GPU[0] : Subsystem ID: 0x74a1
    GPU[0] : Device Rev: 0x00
    GPU[0] : Node ID: 2
    GPU[0] : GUID: 28851
    GPU[0] : GFX Version: gfx942
    GPU[1] : Card Series: AMD Instinct MI300XX OAM
    GPU[1] : Card Model: 0x74a1
    GPU[1] : Card Vendor: Advanced Micro Devices, Inc. [AMD/ATI]
    GPU[1] : Card SKU: M3000100
    GPU[1] : Subsystem ID: 0x74a1
    GPU[1] : Device Rev: 0x00
    GPU[1] : Node ID: 3
    GPU[1] : GUID: 51499
    GPU[1] : GFX Version: gfx942
    ---more--

The --showhw option shows information about the GPUs in the system, including their IDs.

    root@MI300X-01:/sys/kernel/config/bnxt_re/bnxt_re0/ports/1/cc# rocm-smi --showhw -v
    ====================================== ROCm System Management Interface =================================
    =========================================== Concise Hardware Info =======================================
    GPU  NODE  DID     GUID   GFX VER  GFX RAS  SDMA RAS  UMC RAS  VBIOS             BUS           PARTITION ID
    0    2     0x74a1  28851  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:05:00.0  0
    1    3     0x74a1  51499  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:27:00.0  0
    2    4     0x74a1  57603  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:47:00.0  0
    3    5     0x74a1  22683  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:65:00.0  0
    4    6     0x74a1  53458  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:85:00.0  0
    5    7     0x74a1  26954  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:A7:00.0  0
    6    8     0x74a1  16738  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:C7:00.0  0
    7    9     0x74a1  63738  gfx942   ENABLED  ENABLED   ENABLED  113-M3000100-102  0000:E5:00.0  0
    ==========================================================================================================
    ============================================ End of ROCm SMI Log =========================================
    ========================================= VBIOS ==========================================
    GPU[0] : VBIOS version: 113-M3000100-102
    GPU[1] : VBIOS version: 113-M3000100-102
    GPU[2] : VBIOS version: 113-M3000100-102
    GPU[3] : VBIOS version: 113-M3000100-102
    GPU[4] : VBIOS version: 113-M3000100-102
    GPU[5] : VBIOS version: 113-M3000100-102
    GPU[6] : VBIOS version: 113-M3000100-102
    GPU[7] : VBIOS version: 113-M3000100-102
    ==========================================================================================

The fields are defined as follows:

| Field | Description |
|---|---|
| GPU | Index of the GPU on the system, starting from 0. |
| NODE | Node ID of the GPU as reported by the driver topology (it matches the Node ID field shown by --showproductname). Note that this is not the NUMA node: in the example, NODE ranges from 2 to 9 while the NUMA nodes are 0 and 1. Use --showtoponuma to find the NUMA affinity, which optimal GPU/NIC mapping often relies on. |
| DID | Device ID of the GPU. This identifies the specific GPU model and is useful for verifying it. For example, 0x74a1 corresponds to an MI300X-series GPU. |
| GUID | GPU Unique Identifier. This value is specific to each GPU and may relate to its PCIe device. Useful for distinguishing GPUs in a multi-GPU environment. |
| GFX VER | The version of the GPU architecture (e.g., gfx942 is part of AMD's CDNA 3 family). In AMD GPUs, the GFX prefix is part of AMD's internal naming convention for their GPU microarchitecture families. See GPU architecture hardware specifications in the ROCm documentation. |
| GFX RAS | Status of GPU RAS (Reliability, Availability, Serviceability) features. Indicates error handling. |
| SDMA RAS | Status of SDMA (System Direct Memory Access) RAS features. |
| UMC RAS | Status of Unified Memory Controller (UMC) RAS features. |
| VBIOS | VBIOS (Video BIOS) version. Indicates the firmware version running on the GPU. An identical firmware version (113-M3000100-102) on all GPUs indicates a uniform configuration. |
| BUS | PCIe bus address of the GPU, for example 0000:05:00.0. It allows you to correlate GPUs with physical slots or NUMA nodes. |
| PARTITION ID | GPU partition or instance ID. For multi-instance GPUs (e.g., MI300X), this identifies the instances. A value of 0 for all GPUs indicates that no multi-instance partitioning is enabled. |

The --showbus option shows PCI bus related information, including the correspondence between GPU IDs and PCI bus IDs. In the example it is combined with -i, which shows the device IDs.

    root@MI300X-01:/sys/kernel/config/bnxt_re/bnxt_re0/ports/1/cc# rocm-smi --showbus -i
    ============================ ROCm System Management Interface ============================
    =========================================== ID ===========================================
    GPU[0] : Device Name: AMD Instinct MI300XX OAM
    GPU[0] : Device ID: 0x74a1
    GPU[0] : Device Rev: 0x00
    GPU[0] : Subsystem ID: 0x74a1
    GPU[0] : GUID: 28851
    GPU[1] : Device Name: AMD Instinct MI300XX OAM
    GPU[1] : Device ID: 0x74a1
    GPU[1] : Device Rev: 0x00
    GPU[1] : Subsystem ID: 0x74a1
    GPU[1] : GUID: 51499
    GPU[2] : Device Name: AMD Instinct MI300XX OAM
    GPU[2] : Device ID: 0x74a1
    GPU[2] : Device Rev: 0x00
    GPU[2] : Subsystem ID: 0x74a1
    GPU[2] : GUID: 57603
    ---more---
    ==========================================================================================
    ======================================= PCI Bus ID =======================================
    GPU[0] : PCI Bus: 0000:05:00.0
    GPU[1] : PCI Bus: 0000:27:00.0
    GPU[2] : PCI Bus: 0000:47:00.0
    GPU[3] : PCI Bus: 0000:65:00.0
    GPU[4] : PCI Bus: 0000:85:00.0
    GPU[5] : PCI Bus: 0000:A7:00.0
    GPU[6] : PCI Bus: 0000:C7:00.0
    GPU[7] : PCI Bus: 0000:E5:00.0
    ==========================================================================================
    ================================== End of ROCm SMI Log ===================================
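The PCI Bus section of this output is the piece that later steps rely on when GPUs are correlated with NICs. As a minimal sketch, it can be reduced to "GPU index, PCIe address" pairs. The function name `gpu_pci_map` is hypothetical, and the sketch assumes the `GPU[n] : PCI Bus: <address>` line layout shown in the example.

```shell
# Reduce `rocm-smi --showbus` output (read from stdin) to lines of the form
#   <gpu index> <pcie address>
# Lines that do not carry a "PCI Bus:" field are ignored.
gpu_pci_map() {
    sed -n 's/^GPU\[\([0-9][0-9]*\)\][[:space:]]*:[[:space:]]*PCI Bus:[[:space:]]*\([0-9A-Fa-f:.]*\).*$/\1 \2/p'
}
```

For example, `rocm-smi --showbus | gpu_pci_map` lists one pair per GPU, and each address can then be passed to `lspci -s` to inspect the device.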

The --showmetrics option provides comprehensive information about the GPU status and performance, including metrics such as temperature, clock frequency, power, and PCIe bandwidth.

    root@MI300X-01:/sys/kernel/config/bnxt_re/bnxt_re0/ports/1/cc# rocm-smi --showmetrics | grep GPU.0
    GPU[0] : Metric Version and Size (Bytes): 1.6 1664
    GPU[0] : temperature_edge (C): N/A
    GPU[0] : temperature_hotspot (C): 42
    GPU[0] : temperature_mem (C): 35
    GPU[0] : temperature_vrgfx (C): N/A
    GPU[0] : temperature_vrsoc (C): 41
    GPU[0] : temperature_vrmem (C): N/A
    GPU[0] : average_gfx_activity (%): 0
    GPU[0] : average_umc_activity (%): 0
    GPU[0] : average_mm_activity (%): N/A
    GPU[0] : average_socket_power (W): N/A
    GPU[0] : energy_accumulator (15.259uJ (2^-16)): 4291409153508
    GPU[0] : system_clock_counter (ns): 508330314785091
    GPU[0] : average_gfxclk_frequency (MHz): N/A
    GPU[0] : average_socclk_frequency (MHz): N/A
    GPU[0] : average_uclk_frequency (MHz): N/A
    GPU[0] : average_vclk0_frequency (MHz): N/A
    GPU[0] : average_dclk0_frequency (MHz): N/A
    GPU[0] : average_vclk1_frequency (MHz): N/A
    GPU[0] : average_dclk1_frequency (MHz): N/A
    GPU[0] : current_gfxclk (MHz): 134
    GPU[0] : current_socclk (MHz): 28
    GPU[0] : current_uclk (MHz): 900
    GPU[0] : current_vclk0 (MHz): 29
    GPU[0] : current_dclk0 (MHz): 22
    GPU[0] : current_vclk1 (MHz): 29
    GPU[0] : current_dclk1 (MHz): 22
    GPU[0] : throttle_status: N/A
    GPU[0] : current_fan_speed (rpm): N/A
    GPU[0] : pcie_link_width (Lanes): 16
    GPU[0] : pcie_link_speed (0.1 GT/s): 320
    GPU[0] : gfx_activity_acc (%): 682809151
    GPU[0] : mem_activity_acc (%): 60727622
    GPU[0] : temperature_hbm (C): ['N/A', 'N/A', 'N/A', 'N/A']
    GPU[0] : firmware_timestamp (10ns resolution): 507863813273800
    GPU[0] : voltage_soc (mV): N/A
    GPU[0] : voltage_gfx (mV): N/A
    GPU[0] : voltage_mem (mV): N/A
    GPU[0] : indep_throttle_status: N/A
    GPU[0] : current_socket_power (W): 123
    GPU[0] : vcn_activity (%): [0, 0, 0, 0]
    GPU[0] : gfxclk_lock_status: 0
    GPU[0] : xgmi_link_width: 0
    GPU[0] : xgmi_link_speed (Gbps): 0
    GPU[0] : pcie_bandwidth_acc (GB/s): 626812796806
    GPU[0] : pcie_bandwidth_inst (GB/s): 18
    ---more---
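The metrics above report the PCIe link as a lane count plus a per-lane rate in units of 0.1 GT/s, so the value 320 corresponds to 32 GT/s per lane, which is the PCIe Gen5 rate. As a minimal sketch, the two fields can be summarized in one line. The function name `pcie_link_summary` is hypothetical, and the sketch expects the metrics of a single GPU on stdin, as in the `grep GPU.0` example.

```shell
# Summarize the PCIe link of one GPU from `rocm-smi --showmetrics` output
# (read from stdin) as "x<lanes> @ <rate> GT/s".
pcie_link_summary() {
    awk -F': *' '
        # The value is the last colon-separated field on each line.
        index($0, "pcie_link_width (Lanes)")      { width = $NF }
        # The speed is reported in tenths of a GT/s.
        index($0, "pcie_link_speed (0.1 GT/s)")   { speed = $NF / 10 }
        END {
            if (width != "" && speed != "")
                printf "x%d @ %.1f GT/s\n", width, speed
        }
    '
}
```

For the sample output, `rocm-smi --showmetrics | grep GPU.0 | pcie_link_summary` would report a x16 link at 32.0 GT/s.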

The --showtopo option shows how the GPUs in the system can communicate with each other. In the example, every pair of GPUs is connected via XGMI (link type), with one hop between any two GPUs. The weight of 15 indicates that this direct communication is the preferred path.

jnpr@MI300X-01:~$ rocm-smi --showtopo

============================ ROCm System Management Interface ============================

================================ Weight between two GPUs =================================

GPU0 GPU1 GPU2 GPU3 GPU4 GPU5 GPU6 GPU7

GPU0 0 15 15 15 15 15 15 15

GPU1 15 0 15 15 15 15 15 15

GPU2 15 15 0 15 15 15 15 15

GPU3 15 15 15 0 15 15 15 15

GPU4 15 15 15 15 0 15 15 15

GPU5 15 15 15 15 15 0 15 15

GPU6 15 15 15 15 15 15 0 15

GPU7 15 15 15 15 15 15 15 0

================================= Hops between two GPUs ==================================

GPU0 GPU1 GPU2 GPU3 GPU4 GPU5 GPU6 GPU7

GPU0 0 1 1 1 1 1 1 1

GPU1 1 0 1 1 1 1 1 1

GPU2 1 1 0 1 1 1 1 1

GPU3 1 1 1 0 1 1 1 1

GPU4 1 1 1 1 0 1 1 1

GPU5 1 1 1 1 1 0 1 1

GPU6 1 1 1 1 1 1 0 1

GPU7 1 1 1 1 1 1 1 0

=============================== Link Type between two GPUs ===============================

GPU0 GPU1 GPU2 GPU3 GPU4 GPU5 GPU6 GPU7

GPU0 0 XGMI XGMI XGMI XGMI XGMI XGMI XGMI

GPU1 XGMI 0 XGMI XGMI XGMI XGMI XGMI XGMI

GPU2 XGMI XGMI 0 XGMI XGMI XGMI XGMI XGMI

GPU3 XGMI XGMI XGMI 0 XGMI XGMI XGMI XGMI

GPU4 XGMI XGMI XGMI XGMI 0 XGMI XGMI XGMI

GPU5 XGMI XGMI XGMI XGMI XGMI 0 XGMI XGMI

GPU6 XGMI XGMI XGMI XGMI XGMI XGMI 0 XGMI

GPU7 XGMI XGMI XGMI XGMI XGMI XGMI XGMI 0

======================================= Numa Nodes =======================================

GPU[0] : (Topology) Numa Node: 0

GPU[0] : (Topology) Numa Affinity: 0

GPU[1] : (Topology) Numa Node: 0

GPU[1] : (Topology) Numa Affinity: 0

GPU[2] : (Topology) Numa Node: 0

GPU[2] : (Topology) Numa Affinity: 0

GPU[3] : (Topology) Numa Node: 0

GPU[3] : (Topology) Numa Affinity: 0

GPU[4] : (Topology) Numa Node: 1

GPU[4] : (Topology) Numa Affinity: 1

GPU[5] : (Topology) Numa Node: 1

GPU[5] : (Topology) Numa Affinity: 1

GPU[6] : (Topology) Numa Node: 1

GPU[6] : (Topology) Numa Affinity: 1

GPU[7] : (Topology) Numa Node: 1

GPU[7] : (Topology) Numa Affinity: 1

================================== End of ROCm SMI Log ===================================
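On a healthy system every GPU pair in the hop matrix is expected to be a single hop apart, as in the output above. As a minimal sketch, this can be checked automatically. The function name `count_multi_hop_pairs` is hypothetical, and the sketch must be fed the output of `rocm-smi --showtopohops` only, because the weight matrix printed by `--showtopo` uses the same row layout.

```shell
# Count the entries in the hop matrix of `rocm-smi --showtopohops`
# (read from stdin) that are larger than 1.
count_multi_hop_pairs() {
    awk '
        # Matrix rows start with GPU<n> followed by numbers; the column
        # header also starts with GPU<n> but its second field is not numeric.
        $1 ~ /^GPU[0-9]+$/ && $2 ~ /^[0-9]+$/ {
            for (i = 2; i <= NF; i++)
                if ($i + 0 > 1) n++
        }
        END { print n + 0 }
    '
}
```

A result of 0 from `rocm-smi --showtopohops | count_multi_hop_pairs` means that no GPU pair needs more than one hop. Each pair appears twice in the matrix, so one indirect pair is counted as 2.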


The weight, number of hops, and link type can also be obtained individually using the options --showtopoweight, --showtopohops, and --showtopotype:

jnpr@MI300X-01:~/SCRIPTS$ rocm-smi --showtopoweight

============================ ROCm System Management Interface ============================

================================ Weight between two GPUs =================================

GPU0 GPU1 GPU2 GPU3 GPU4 GPU5 GPU6 GPU7

GPU0 0 15 15 15 15 15 15 15

GPU1 15 0 15 15 15 15 15 15

GPU2 15 15 0 15 15 15 15 15

GPU3 15 15 15 0 15 15 15 15

GPU4 15 15 15 15 0 15 15 15

GPU5 15 15 15 15 15 0 15 15

GPU6 15 15 15 15 15 15 0 15

GPU7 15 15 15 15 15 15 15 0

================================== End of ROCm SMI Log ===================================

jnpr@MI300X-01:~/SCRIPTS$ rocm-smi --showtopohops

============================ ROCm System Management Interface ============================

================================= Hops between two GPUs ==================================

GPU0 GPU1 GPU2 GPU3 GPU4 GPU5 GPU6 GPU7

GPU0 0 1 1 1 1 1 1 1

GPU1 1 0 1 1 1 1 1 1

GPU2 1 1 0 1 1 1 1 1

GPU3 1 1 1 0 1 1 1 1

GPU4 1 1 1 1 0 1 1 1

GPU5 1 1 1 1 1 0 1 1

GPU6 1 1 1 1 1 1 0 1

GPU7 1 1 1 1 1 1 1 0

================================== End of ROCm SMI Log ===================================

jnpr@MI300X-01:~/SCRIPTS$ rocm-smi --showtopotype

============================ ROCm System Management Interface ============================

=============================== Link Type between two GPUs ===============================

GPU0 GPU1 GPU2 GPU3 GPU4 GPU5 GPU6 GPU7

GPU0 0 XGMI XGMI XGMI XGMI XGMI XGMI XGMI

GPU1 XGMI 0 XGMI XGMI XGMI XGMI XGMI XGMI

GPU2 XGMI XGMI 0 XGMI XGMI XGMI XGMI XGMI

GPU3 XGMI XGMI XGMI 0 XGMI XGMI XGMI XGMI

GPU4 XGMI XGMI XGMI XGMI 0 XGMI XGMI XGMI

GPU5 XGMI XGMI XGMI XGMI XGMI 0 XGMI XGMI

GPU6 XGMI XGMI XGMI XGMI XGMI XGMI 0 XGMI

GPU7 XGMI XGMI XGMI XGMI XGMI XGMI XGMI 0

================================== End of ROCm SMI Log ===================================

The --shownodesbw option shows the bandwidth available for internal GPU-to-GPU communication:

jnpr@MI300X-01:/home/ben$ rocm-smi --shownodesbw

============================ ROCm System Management Interface ============================

======================================= Bandwidth ========================================

GPU0 GPU1 GPU2 GPU3 GPU4 GPU5 GPU6 GPU7

GPU0 N/A 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000

GPU1 50000-50000 N/A 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000

GPU2 50000-50000 50000-50000 N/A 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000

GPU3 50000-50000 50000-50000 50000-50000 N/A 50000-50000 50000-50000 50000-50000 50000-50000

GPU4 50000-50000 50000-50000 50000-50000 50000-50000 N/A 50000-50000 50000-50000 50000-50000

GPU5 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 N/A 50000-50000 50000-50000

GPU6 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 N/A 50000-50000

GPU7 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 50000-50000 N/A

Format: min-max; Units: mps

"0-0" min-max bandwidth indicates devices are not connected directly

================================== End of ROCm SMI Log ===================================

For the full list of options, run rocm-smi -h. For more information about ROCm-SMI, as well as the newer AMD-SMI CLI, see the ROCm documentation and the AMD SMI documentation.

NICs and GPUs mappings

The next step is to map the NICs to the GPUs, as shown in the steps below. The steps are the same for both the Thor2 and the AMD Pollara 400 NICs.

Information from other commands can be combined with some of the options above to correlate GPUs and NICs by following these steps:

- Identify NUMA Nodes and GPUs

Use the output from rocm-smi --showtoponuma, or just rocm-smi --showtopo, to find the mappings between GPUs and NUMA nodes. Look for the NUMA Affinity of each GPU in the output. A description of what this attribute means is included later in this section.

Note down which GPUs are associated with which NUMA nodes.

Example:

    jnpr@MI300X-01:/proc$ rocm-smi --showtoponuma
    ============================ ROCm System Management Interface ============================
    ======================================= Numa Nodes =======================================
    GPU[0] : (Topology) Numa Node: 0
    GPU[0] : (Topology) Numa Affinity: 0
    GPU[1] : (Topology) Numa Node: 0
    GPU[1] : (Topology) Numa Affinity: 0
    GPU[2] : (Topology) Numa Node: 0
    GPU[2] : (Topology) Numa Affinity: 0
    GPU[3] : (Topology) Numa Node: 0
    GPU[3] : (Topology) Numa Affinity: 0
    GPU[4] : (Topology) Numa Node: 1
    GPU[4] : (Topology) Numa Affinity: 1
    GPU[5] : (Topology) Numa Node: 1
    GPU[5] : (Topology) Numa Affinity: 1
    GPU[6] : (Topology) Numa Node: 1
    GPU[6] : (Topology) Numa Affinity: 1
    GPU[7] : (Topology) Numa Node: 1
    GPU[7] : (Topology) Numa Affinity: 1
    ================================== End of ROCm SMI Log ===================================

GPU 0–3 → NUMA Node 0

GPU 4–7 → NUMA Node 1

- Identify NUMA Nodes for NICs

Navigate to the /sys/class/net/ directory and check the NUMA node affinity for each network interface (excluding lo or docker interfaces):

    for iface in $(ls /sys/class/net/ | grep -Ev '^(lo|docker)'); do
        numa_node=$(cat /sys/class/net/$iface/device/numa_node 2>/dev/null)
        echo "Interface: $iface, NUMA Node: $numa_node"
    done

Note the NUMA node affinity for each NIC interface.

EXAMPLE:

    jnpr@MI300X-01:~/SCRIPTS$ for iface in $(ls /sys/class/net/ | grep -Ev '^(lo|docker)'); do
        numa_node=$(cat /sys/class/net/$iface/device/numa_node 2>/dev/null)
        echo "Interface: $iface, NUMA Node: $numa_node"
    done
    Interface: ens61f1np1, NUMA Node: 1
    Interface: enxbe3af2b6059f, NUMA Node:
    Interface: gpu0_eth, NUMA Node: 0
    Interface: gpu1_eth, NUMA Node: 0
    Interface: gpu2_eth, NUMA Node: 0
    Interface: gpu3_eth, NUMA Node: 0
    Interface: gpu4_eth, NUMA Node: 1
    Interface: gpu5_eth, NUMA Node: 1
    Interface: gpu6_eth, NUMA Node: 1
    Interface: gpu7_eth, NUMA Node: 1
    Interface: mgmt_eth, NUMA Node: 1
    Interface: stor0_eth, NUMA Node: 0
    Interface: stor1_eth, NUMA Node: 0

- Correlate GPUs to NICs Based on NUMA Affinity

Use the NUMA node affinity from Step 1 (GPUs) and Step 2 (NICs) to map each GPU to the NICs within the same NUMA node.

EXAMPLE:

    GPU0 (NUMA 0):
      - NIC: gpu0_eth (NUMA 0)
      - NIC: gpu1_eth (NUMA 0)
      - NIC: gpu2_eth (NUMA 0)
      - NIC: gpu3_eth (NUMA 0)
      - NIC: stor0_eth (NUMA 0)
      - NIC: stor1_eth (NUMA 0)
    GPU4 (NUMA 1):
      - NIC: gpu4_eth (NUMA 1)
      - NIC: gpu5_eth (NUMA 1)
      - NIC: gpu6_eth (NUMA 1)
      - NIC: gpu7_eth (NUMA 1)
      - NIC: mgmt_eth (NUMA 1)

The following script automates these three steps:

    jnpr@MI300X-01:~/SCRIPTS$ cat GPU-to-NIC_YL.sh
    #!/bin/bash

    # Temporary data files
    gpu_to_numa_file="GPU-to-NUMA.tmp"
    nic_to_numa_file="NIC-to-NUMA.tmp"
    output_file="NIC-to-GPU.txt"

    # Clear or create the output file
    > "$output_file"

    # Step 1: Parse GPUs and NUMA nodes
    echo "Step 1: Parsing GPUs and NUMA Nodes..."
    rocm-smi --showtoponuma > /tmp/rocm_smi_output.tmp 2>/dev/null
    if [[ $? -ne 0 ]]; then
        echo "Error: rocm-smi is not installed or failed to run."
        exit 1
    fi

    # Extract GPU and NUMA information
    grep "GPU" /tmp/rocm_smi_output.tmp | grep "Numa Node" | awk -F'[ :]' '{print $2, $NF}' | sed 's/^/GPU /' > "$gpu_to_numa_file"

    # Step 2: Parse NICs and NUMA nodes
    echo "Step 2: Parsing NICs and NUMA Nodes..."
    > "$nic_to_numa_file"
    for iface in $(ls /sys/class/net/ | grep -Ev '^(lo|docker)'); do
        numa_node=$(cat /sys/class/net/$iface/device/numa_node 2>/dev/null)
        if [[ $numa_node -ge 0 ]]; then
            echo "NIC $iface, NUMA Node: $numa_node" >> "$nic_to_numa_file"
        fi
    done

    # Step 3: Match GPUs to NICs based on NUMA affinity
    echo "Step 3: Mapping GPUs to NICs..."
    while read -r gpu_entry; do
        gpu=$(echo "$gpu_entry" | awk '{print $2}')
        gpu_numa=$(echo "$gpu_entry" | awk '{print $NF}')
        echo "GPU$gpu (NUMA $gpu_numa):" >> "$output_file"
        while read -r nic_entry; do
            nic=$(echo "$nic_entry" | awk '{print $2}' | sed 's/,//')
            nic_numa=$(echo "$nic_entry" | awk '{print $NF}')
            if [[ "$gpu_numa" == "$nic_numa" ]]; then
                echo " - NIC: $nic" >> "$output_file"
            fi
        done < "$nic_to_numa_file"
    done < "$gpu_to_numa_file"

    # Output the result
    echo "Mapping complete! Results saved in $output_file."
    cat "$output_file"

EXAMPLE:

    jnpr@MI300X-01:~/SCRIPTS$ ./GPU-to-NIC_YL.sh
    Step 1: Parsing GPUs and NUMA Nodes...
    Step 2: Parsing NICs and NUMA Nodes...
    Step 3: Mapping GPUs to NICs...
    Mapping complete! Results saved in NIC-to-GPU.txt.
    GPU0 (NUMA 0):
     - NIC: gpu0_eth
     - NIC: gpu1_eth
     - NIC: gpu2_eth
     - NIC: gpu3_eth
     - NIC: stor0_eth
     - NIC: stor1_eth
    GPU0 (NUMA 0):
     - NIC: gpu0_eth
     - NIC: gpu1_eth
     - NIC: gpu2_eth
     - NIC: gpu3_eth
     - NIC: stor0_eth
     - NIC: stor1_eth
    GPU0 (NUMA 0):
     - NIC: gpu0_eth
     - NIC: gpu1_eth
     - NIC: gpu2_eth
     - NIC: gpu3_eth
     - NIC: stor0_eth
     - NIC: stor1_eth
    GPU0 (NUMA 0):
     - NIC: gpu0_eth
     - NIC: gpu1_eth
     - NIC: gpu2_eth
     - NIC: gpu3_eth
     - NIC: stor0_eth
     - NIC: stor1_eth
    GPU1 (NUMA 1):
     - NIC: ens61f1np1
     - NIC: gpu4_eth
     - NIC: gpu5_eth
     - NIC: gpu6_eth
     - NIC: gpu7_eth
     - NIC: mgmt_eth
    GPU1 (NUMA 1):
     - NIC: ens61f1np1
     - NIC: gpu4_eth
     - NIC: gpu5_eth
     - NIC: gpu6_eth
     - NIC: gpu7_eth
     - NIC: mgmt_eth
    GPU1 (NUMA 1):
     - NIC: ens61f1np1
     - NIC: gpu4_eth
     - NIC: gpu5_eth
     - NIC: gpu6_eth
     - NIC: gpu7_eth
     - NIC: mgmt_eth
    GPU1 (NUMA 1):
     - NIC: ens61f1np1
     - NIC: gpu4_eth
     - NIC: gpu5_eth
     - NIC: gpu6_eth
     - NIC: gpu7_eth
     - NIC: mgmt_eth

Note that the number printed after "GPU" in this output follows the NUMA node value rather than the GPU index: the four entries labeled GPU0 are the GPUs in NUMA node 0, and the four entries labeled GPU1 are the GPUs in NUMA node 1.
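The run above labels its entries GPU0 and GPU1 four times each, so a variant of the parsing step that keeps each GPU index next to its NUMA node may be useful when adapting the script. The following is a minimal sketch rather than a change to the script itself. The function name `gpu_numa_pairs` is hypothetical, and the sketch assumes the `GPU[n] : (Topology) Numa Node: <id>` line layout shown earlier.

```shell
# Reduce `rocm-smi --showtoponuma` output (read from stdin) to lines of the
# form "<gpu index> <numa node>". "Numa Affinity" lines are ignored.
gpu_numa_pairs() {
    # In a basic regular expression, plain parentheses match literally.
    sed -n 's/^GPU\[\([0-9][0-9]*\)\][[:space:]]*:[[:space:]]*(Topology) Numa Node:[[:space:]]*\([0-9][0-9]*\).*$/\1 \2/p'
}
```

For example, `rocm-smi --showtoponuma | gpu_numa_pairs` prints one pair per GPU, which can be matched against the NUMA node of each NIC read from `/sys/class/net/<interface>/device/numa_node`.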

You will notice that there is not a 1:1 GPU-to-NIC association. Instead, multiple NIC interfaces are associated with each GPU, because they share the same Non-Uniform Memory Access (NUMA) node affinity.

Systems employing a NUMA architecture contain collections of hardware resources, including CPUs, GPUs, memory, and PCIe devices (including NICs), grouped together in what is known as a "NUMA node". These resources are considered "local" to each other. From the point of view of a GPU, devices in the same NUMA node are the most closely associated with that GPU. The NUMA node is identified by the NUMA Affinity.

Multiple NICs and GPUs may be connected to the same PCIe complex or switch within a NUMA node. This makes the NICs accessible to all GPUs sharing that complex. However, while all NICs in a NUMA node are accessible to any GPU in the same node, the NICs are allocated dynamically for usage by a given GPU, based on availability, traffic type, latency, and so on.

Communication Between GPUs on the Same NUMA Node (e.g., GPU1 ↔ GPU2):

GPUs on the same NUMA node (e.g., GPU1 and GPU2) communicate directly over a high-bandwidth, low-latency interconnect such as Infinity Fabric (in AMD systems).

These interconnects avoid the CPU and main memory entirely, offering much faster communication compared to NUMA-crossing communication. Since both GPUs are "local" to the same memory controller and CPU, the communication path is highly optimized.

Communication Between GPUs on Different NUMA Nodes (e.g., GPU1 ↔ GPU4):

Communication between GPUs on different NUMA nodes (e.g., GPU1 on NUMA 0 and GPU4 on NUMA 1) must traverse additional layers of the system architecture, which introduces higher latency. The path typically follows:

- GPU1 → CPU (NUMA 0): Data is sent from GPU1 to the CPU on NUMA 0.

- Inter-NUMA Link: The CPUs in NUMA 0 and NUMA 1 are connected via an interconnect such as Infinity Fabric or UPI (Ultra Path Interconnect).

- CPU (NUMA 1) → GPU4: The data is forwarded from the CPU on NUMA 1 to GPU4.

Changing NIC attributes

This section shows you how to add or change a NIC’s Interface Name, MTU, DNS, IP Addresses and Routing table entries.

Editing and reapplying the network configuration (netplan) file

The network configuration is described in the netplan *.yaml file found under /etc/netplan/.

Note that the actual file name might vary. Examples:

/etc/netplan/01-netcfg.yaml

/etc/netplan/00-installer-config.yaml
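As an illustration of what an entry in such a file may look like, the sketch below prints a netplan stanza that renames one NIC by matching its MAC address and sets its MTU. It is a sketch under stated assumptions, not the configuration used in the JVD lab: the function name `render_netplan_stanza` is hypothetical, and the interface name, MAC address, and MTU are supplied by the caller.

```shell
# Print a netplan stanza that renames the NIC with the given MAC address
# and sets its MTU.
# Arguments: $1 = new interface name, $2 = MAC address, $3 = MTU.
render_netplan_stanza() {
    cat <<EOF
network:
  version: 2
  ethernets:
    ${1}:
      match:
        macaddress: "${2}"
      set-name: ${1}
      mtu: ${3}
EOF
}
```

For example, `render_netplan_stanza gpu0_eth 00:11:22:33:44:55 9000` (placeholder MAC address and MTU) prints a stanza that can be reviewed and merged into the file under /etc/netplan/ before running `sudo netplan apply`.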

Changing any interface attribute involves editing this file and reapplying the network plan as shown below:

- Find the default names of the logical interfaces.

You can use the following steps to achieve this:

Thor2 NIC output:

    jnpr@MI300X-01:~$ > devnames1;
    for iface in $(ls /sys/class/net/ | grep -Ev '^(lo|docker|virbr)'); do
      device=$(ethtool -i $iface 2>/dev/null | grep 'bus-info' | awk '{print $2}');
      if [[ $device != 0000:* ]]; then device="0000:$device"; fi;
      model=$(lspci -s $device 2>/dev/null | awk -F ': ' '{print $2}');
      echo "$iface:$model" >> devnames1;
    done
    jnpr@MI300X-01:~$ cat devnames1
    ens61f1np1:Mellanox Technologies MT2910 Family [ConnectX-7]
    enxbe3af2b6059f:
    ens41np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens42np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens32np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens31np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens21np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens22np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens12np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens11np0:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    ens61f0np0:Mellanox Technologies MT2910 Family [ConnectX-7]
    ens50f0np0:Mellanox Technologies MT2910 Family [ConnectX-7]
    ens50f1np1:Mellanox Technologies MT2910 Family [ConnectX-7]

Interface ens31np0, where:

- en: Ethernet network interface.

- s31: the physical location of the network interface on the system bus (slot number 31).

- np0: the physical port name, made up of:

- n: the prefix that introduces the port name reported by the driver.

- p0: port 0 (the first port of this network interface).
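The naming breakdown above can be checked with a small parser. This is a minimal sketch, assuming only the slot-based form seen in this section (`en`, `s<slot>`, an optional `f<function>`, and an optional `n<port name>`); the function name `parse_slot_name` is hypothetical and other naming schemes (for example `enp47s0f0np0`) are deliberately not handled.

```python
import re

# Slot-based names such as "ens31np0" or "ens61f1np1".
# Assumption: only the fields described in this section are recognized.
_SLOT_NAME_RE = re.compile(
    r"^(?P<prefix>en)"           # Ethernet
    r"s(?P<slot>\d+)"            # slot number
    r"(?:f(?P<function>\d+))?"   # optional PCI function
    r"(?:n(?P<port_name>p\d+))?$"  # optional port name, e.g. p0
)


def parse_slot_name(name):
    """Split a slot-based interface name into fields, or return None."""
    match = _SLOT_NAME_RE.match(name)
    if match is None:
        return None
    function = match.group("function")
    return {
        "prefix": match.group("prefix"),
        "slot": int(match.group("slot")),
        "function": int(function) if function is not None else None,
        "port_name": match.group("port_name"),
    }


if __name__ == "__main__":
    for example in ("ens31np0", "ens61f1np1", "gpu0_eth"):
        print(example, "->", parse_slot_name(example))
```

Names that were already renamed (such as `gpu0_eth`) return `None`, which makes the helper usable as a quick filter for interfaces that still carry their default name.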

AMD Pollara 400 NIC output:

    jnpr@mi300-01:~# > devnames1;
    for iface in $(ls /sys/class/net/ | grep -Ev '^(lo|docker|virbr)'); do
      device=$(ethtool -i $iface 2>/dev/null | grep 'bus-info' | awk '{print $2}');
      if [[ $device != 0000:* ]]; then device="0000:$device"; fi;
      model=$(lspci -s $device 2>/dev/null | awk -F ': ' '{print $2}');
      echo "$iface:$model" >> devnames1;
    done
    jnpr@mi300-01:~# cat devnames1
    ens61f1np1:Mellanox Technologies MT2910 Family [ConnectX-7]
    eth3:
    gpu0_eth:Pensando Systems DSC Ethernet Controller
    gpu1_eth:Pensando Systems DSC Ethernet Controller
    gpu2_eth:Pensando Systems DSC Ethernet Controller
    gpu3_eth:Pensando Systems DSC Ethernet Controller
    gpu4_eth:Pensando Systems DSC Ethernet Controller
    gpu5_eth:Pensando Systems DSC Ethernet Controller
    gpu6_eth:Pensando Systems DSC Ethernet Controller
    gpu7_eth:Pensando Systems DSC Ethernet Controller
    mgmt_eth:Mellanox Technologies MT2910 Family [ConnectX-7]
    stor0_eth:Mellanox Technologies MT2910 Family [ConnectX-7]
    stor1_eth:Mellanox Technologies MT2910 Family [ConnectX-7]

You can use the script gpunic.py to find the mappings between GPUs and NICs per PCIe bus, and to identify how the NICs need to be renamed for consistency.

EXAMPLE:

    jnpr@MI300X-01:~/SCRIPTS$ sudo python3 amd_map_nic_gpu.py
    bus 0000:00:01.1:
      0000:05:00.0 (gpu) - GPU0
      0000:08:00.0 (gpu) - -
      0000:08:00.1 (gpu) - -
      0000:08:00.2 (gpu) - -
      0000:09:00.0 (nic) - gpu0_eth
    bus 0000:20:01.1:
      0000:25:00.0 (gpu) - -
      0000:25:00.1 (gpu) - -
      0000:25:00.2 (gpu) - -
      0000:26:00.0 (nic) - gpu1_eth
      0000:29:00.0 (gpu) - GPU1
    bus 0000:20:03.1:
      0000:31:00.0 (nic) - stor0_eth
      0000:31:00.1 (nic) - stor1_eth
    bus 0000:40:01.1:
      0000:45:00.0 (gpu) - -
      0000:45:00.1 (gpu) - -
      0000:45:00.2 (gpu) - -
      0000:46:00.0 (nic) - gpu2_eth
      0000:49:00.0 (gpu) - GPU2
    bus 0000:60:01.1:
      0000:65:00.0 (gpu) - GPU3
      0000:68:00.0 (gpu) - -
      0000:68:00.1 (gpu) - -
      0000:68:00.2 (gpu) - -
      0000:69:00.0 (nic) - gpu3_eth
    bus 0000:60:05.4:
      0000:6e:00.0 (gpu) - -
    bus 0000:80:01.1:
      0000:85:00.0 (gpu) - GPU4
      0000:88:00.0 (gpu) - -
      0000:88:00.1 (gpu) - -
      0000:88:00.2 (gpu) - -
      0000:89:00.0 (nic) - gpu4_eth
    bus 0000:a0:01.1:
      0000:a5:00.0 (gpu) - -
      0000:a5:00.1 (gpu) - -
      0000:a5:00.2 (gpu) - -
      0000:a6:00.0 (nic) - gpu5_eth
      0000:a9:00.0 (gpu) - GPU5
    bus 0000:c0:01.1:
      0000:c5:00.0 (gpu) - -
      0000:c5:00.1 (gpu) - -
      0000:c5:00.2 (gpu) - -
      0000:c6:00.0 (nic) - gpu6_eth
      0000:c9:00.0 (gpu) - GPU6
    bus 0000:c0:03.1:
      0000:d2:00.0 (nic) - mgmt_eth
      0000:d2:00.1 (nic) - ens61f1np1
    bus 0000:e0:01.1:
      0000:e5:00.0 (gpu) - GPU7
      0000:e8:00.0 (gpu) - -
      0000:e8:00.1 (gpu) - -
      0000:e8:00.2 (gpu) - -
      0000:e9:00.0 (nic) - gpu7_eth

To further identify the interfaces, you can use the sudo ethtool <device> | grep Speed command.

    jnpr@MI300X-01:~/SCRIPTS$ sudo ethtool ens61f0np0 | grep Speed
            Speed: 400000Mb/s
    jnpr@MI300X-01:~/SCRIPTS$ sudo ethtool enp47s0f0np0 | grep Speed
            Speed: 200000Mb/s
    jnpr@MI300X-01:~/SCRIPTS$ sudo ethtool enp208s0f0np0 | grep Speed
            Speed: 100000Mb/s

Make sure that the NICs connected to the GPU Backend fabric, the Storage Backend fabric, and the Frontend fabric are 400GE, 200GE, and 100GE interfaces, respectively.
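The speed check can also be scripted. The following is a minimal sketch that parses the `Speed:` line printed by `ethtool` and maps it to the fabric role expected in this section; the function names and the role labels (`gpu_backend`, `storage_backend`, `frontend`) are hypothetical and exist only for this illustration.

```python
import re

# Expected link speed per fabric, in Mb/s, as stated in this section.
EXPECTED_SPEED_MBPS = {
    "gpu_backend": 400000,
    "storage_backend": 200000,
    "frontend": 100000,
}


def parse_speed_mbps(ethtool_output):
    """Return the link speed in Mb/s from `ethtool <device>` text, or None."""
    match = re.search(r"Speed:\s*(\d+)Mb/s", ethtool_output)
    return int(match.group(1)) if match else None


def role_for_speed(speed_mbps):
    """Return the fabric role whose expected speed matches, or None."""
    for role, expected in EXPECTED_SPEED_MBPS.items():
        if speed_mbps == expected:
            return role
    return None


if __name__ == "__main__":
    sample = "\tSpeed: 200000Mb/s\n"
    print(role_for_speed(parse_speed_mbps(sample)))
```

A link that is down typically reports an unknown speed, in which case `parse_speed_mbps` returns `None` and no role is assigned.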

| DEFAULT INTERFACE NAME | NEW NAME | Speed |
|---|---|---|
| enp6s0np0 | gpu0_eth | 400GE |
| enp35s0np0 | gpu1_eth | 400GE |
| enp67s0np0 | gpu2_eth | 400GE |
| enp102s0np0 | gpu3_eth | 400GE |
| enp134s0np0 | gpu4_eth | 400GE |
| enp163s0np0 | gpu5_eth | 400GE |
| enp195s0np0 | gpu6_eth | 400GE |
| enp230s0np0 | gpu7_eth | 400GE |
| enp47s0f0np0 | stor0_eth | 200GE |
| enp47s0f0np1 | stor1_eth | 200GE |
| enp208s0f0np0 | mgmt_eth | 100GE |

- Find the interface’s MAC address:

You can use the ip link show <device> command.

EXAMPLE:

    jnpr@MI300X-01:~/SCRIPTS$ ip link show ens61f0np0 | grep "link/ether"
        link/ether 5c:25:73:66:c3:ee brd ff:ff:ff:ff:ff:ff
    jnpr@MI300X-01:~/SCRIPTS$ ip link show enp35s0np0 | grep "link/ether"
        link/ether 5c:25:73:66:bc:5e brd ff:ff:ff:ff:ff:ff

| DEFAULT INTERFACE NAME | NEW NAME | MAC address |
|---|---|---|
| enp6s0np0 | gpu0_eth | 7c:c2:55:bd:75:d0 |
| enp35s0np0 | gpu1_eth | 7c:c2:55:bd:79:20 |
| enp67s0np0 | gpu2_eth | 7c:c2:55:bd:7d:f0 |
| enp102s0np0 | gpu3_eth | 7c:c2:55:bd:7e:20 |
| enp134s0np0 | gpu4_eth | 7c:c2:55:bd:75:10 |
| enp163s0np0 | gpu5_eth | 7c:c2:55:bd:7d:c0 |
| enp195s0np0 | gpu6_eth | 7c:c2:55:bd:84:90 |
| enp230s0np0 | gpu7_eth | 7c:c2:55:bd:83:10 |
| enp47s0f0np0 | stor0_eth | 5c:25:73:66:bc:5e |
| enp47s0f0np1 | stor1_eth | 5c:25:73:66:bc:5f |
| enp208s0f0np0 | mgmt_eth | 5c:25:73:66:c3:ee |

- Modify the netplan configuration file using the new names and MAC addresses determined in the previous steps.

EXAMPLE:

    network:
      version: 2
      ethernets:
        gpu0_eth:
          match:
            macaddress: 7c:c2:55:bd:75:d0   <= MAC address associated to the original ens61f0np0. Will become gpu0_eth.
          dhcp4: false
          mtu: 9000                         <= Interface’s MTU (default = 1500)
          addresses:
            - 10.200.16.18/24               <= New IP address(es)
          routes:
            - to: 10.200.0.0/16             <= New route(s). Example shows route for 10.200.0.0/16 via 10.200.16.254
              via: 10.200.16.254
              from: 10.200.16.18
          set-name: gpu0_eth                <= New interface name
    ---more---

Make sure to keep proper indentation, and hyphens where appropriate (for example, before IP addresses and routes), when editing the file. For the IP addresses, make sure to include the subnet mask.
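Because indentation mistakes are easy to make when repeating the same stanza for every NIC, the stanza can be generated instead of typed. This is a minimal sketch, not a tool used in the JVD lab: the function `render_netplan_interface` is hypothetical, it uses only the Python standard library, and it emits just the keys shown in the example above.

```python
def render_netplan_interface(name, mac, address, route_to, gateway, mtu=9000):
    """Return the YAML text for one renamed interface under `ethernets:`.

    `address` must carry its prefix length (for example 10.200.16.18/24),
    matching the reminder above about including the subnet mask.
    """
    if "/" not in address:
        raise ValueError("address must include the prefix length, e.g. 10.200.16.18/24")
    source_ip = address.split("/")[0]
    lines = [
        f"    {name}:",
        "      match:",
        f"        macaddress: {mac}",
        "      dhcp4: false",
        f"      mtu: {mtu}",
        "      addresses:",
        f"        - {address}",
        "      routes:",
        f"        - to: {route_to}",
        f"          via: {gateway}",
        f"          from: {source_ip}",
        f"      set-name: {name}",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print("network:\n  version: 2\n  ethernets:")
    print(render_netplan_interface(
        "gpu0_eth", "7c:c2:55:bd:75:d0",
        "10.200.16.18/24", "10.200.0.0/16", "10.200.16.254",
    ))
```

Running the module prints a complete single-interface file that can be compared against the hand-edited one before it is copied into /etc/netplan/.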

The following is an example of the netplan configuration file for one of the MI300X servers in the lab:

    jnpr@MI300X-01:/etc/netplan$ cat 00-installer-config.yaml
    network:
      version: 2
      ethernets:
        mgmt_eth:
          match:
            macaddress: 5c:25:73:66:c3:ee
          dhcp4: false
          addresses:
            - 10.10.1.25/31
          nameservers:
            addresses:
              - 8.8.8.8
          routes:
            - to: default
              via: 10.10.1.24
          set-name: mgmt_eth
        stor0_eth:
          match:
            macaddress: 5c:25:73:66:bc:5e
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.100.5.3/31
          routes:
            - to: 10.100.0.0/21
              via: 10.100.5.2
          set-name: stor0_eth
        stor1_eth:
          match:
            macaddress: 5c:25:73:66:bc:5f
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.100.5.5/31
          routes:
            - to: 10.100.0.0/21
              via: 10.100.5.4
          set-name: stor1_eth
        gpu0_eth:
          match:
            macaddress: 7c:c2:55:bd:75:d0
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.16.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.16.254
              from: 10.200.16.18
          set-name: gpu0_eth
        gpu1_eth:
          match:
            macaddress: 7c:c2:55:bd:79:20
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.17.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.17.254
              from: 10.200.17.18
          set-name: gpu1_eth
        gpu2_eth:
          match:
            macaddress: 7c:c2:55:bd:7d:f0
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.18.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.18.254
              from: 10.200.18.18
          set-name: gpu2_eth
        gpu3_eth:
          match:
            macaddress: 7c:c2:55:bd:7e:20
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.19.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.19.254
              from: 10.200.19.18
          set-name: gpu3_eth
        gpu4_eth:
          match:
            macaddress: 7c:c2:55:bd:75:10
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.20.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.20.254
              from: 10.200.20.18
          set-name: gpu4_eth
        gpu5_eth:
          match:
            macaddress: 7c:c2:55:bd:7d:c0
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.21.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.21.254
              from: 10.200.21.18
          set-name: gpu5_eth
        gpu6_eth:
          match:
            macaddress: 7c:c2:55:bd:84:90
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.22.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.22.254
              from: 10.200.22.18
          set-name: gpu6_eth
        gpu7_eth:
          match:
            macaddress: 7c:c2:55:bd:83:10
          dhcp4: false
          mtu: 9000
          addresses:
            - 10.200.23.18/24
          routes:
            - to: 10.200.0.0/16
              via: 10.200.23.254
              from: 10.200.23.18
          set-name: gpu7_eth

- Save the file and apply the changes using the netplan apply command.

jnpr@MI300X-01:/etc/netplan$ sudo netplan apply

jnpr@MI300X-01:/etc/netplan$

- Verify the changes were correctly applied.

Check that the new interface names are correct:

Thor2 NIC output:

    root@MI300X-01:/home/jnpr/SCRIPTS# > devnames;
    for iface in $(ls /sys/class/net/ | grep -Ev '^(lo|docker|virbr)'); do
      device=$(ethtool -i $iface 2>/dev/null | grep 'bus-info' | awk '{print $2}');
      if [[ $device != 0000:* ]]; then device="0000:$device"; fi;
      model=$(lspci -s $device 2>/dev/null | awk -F ': ' '{print $2}');
      echo "$iface:$model" >> devnames;
    done
    root@MI300X-01:/home/jnpr/SCRIPTS# cat devnames
    ens61f1np1:Mellanox Technologies MT2910 Family [ConnectX-7]
    enxbe3af2b6059f:
    gpu0_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    gpu1_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    gpu2_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    gpu3_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    gpu4_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    gpu5_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    gpu6_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    gpu7_eth:Broadcom Inc. and subsidiaries BCM57608 25Gb/50Gb/100Gb/200Gb/400Gb Ethernet (rev 11)
    mgmt_eth:Mellanox Technologies MT2910 Family [ConnectX-7]
    stor0_eth:Mellanox Technologies MT2910 Family [ConnectX-7]
    stor1_eth:Mellanox Technologies MT2910 Family [ConnectX-7]

AMD Pollara NIC output for the same command:

    jnpr@mi300-01:~$ > devnames;
    for iface in $(ls /sys/class/net/ | grep -Ev '^(lo|docker|virbr)'); do
      device=$(ethtool -i $iface 2>/dev/null | grep 'bus-info' | awk '{print $2}');
      if [[ $device != 0000:* ]]; then device="0000:$device"; fi;
      model=$(lspci -s $device 2>/dev/null | awk -F ': ' '{print $2}');
      echo "$iface:$model" >> devnames;
    done
    jnpr@mi300-01:~$ cat devnames
    ens61f1np1:Mellanox Technologies MT2910 Family [ConnectX-7]
    eth3:
    gpu0_eth:Pensando Systems DSC Ethernet Controller
    gpu1_eth:Pensando Systems DSC Ethernet Controller
    gpu2_eth:Pensando Systems DSC Ethernet Controller
    gpu3_eth:Pensando Systems DSC Ethernet Controller
    gpu4_eth:Pensando Systems DSC Ethernet Controller
    gpu5_eth:Pensando Systems DSC Ethernet Controller
    gpu6_eth:Pensando Systems DSC Ethernet Controller
    gpu7_eth:Pensando Systems DSC Ethernet Controller
    mgmt_eth:Mellanox Technologies MT2910 Family [ConnectX-7]
    stor0_eth:Mellanox Technologies MT2910 Family [ConnectX-7]
    stor1_eth:Mellanox Technologies MT2910 Family [ConnectX-7]

Verify that the IP addresses were configured correctly:

    user@MI300X-03:~/scripts$ ip address show gpu0_eth
    4: gpu0_eth: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000
        link/ether 6c:92:cf:87:cc:00 brd ff:ff:ff:ff:ff:ff
        inet 10.200.24.22/24 brd 10.200.24.255 scope global gpu0_eth
           valid_lft forever preferred_lft forever
        inet6 fe80::6e92:cfff:fe87:cc00/64 scope link
           valid_lft forever preferred_lft forever

OR

    jnpr@MI300X-01:/etc/netplan$ ifconfig gpu0_eth
    gpu0_eth: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9000
            inet 10.200.16.18 netmask 255.255.255.0 broadcast 10.200.16.255
            inet6 fe80::7ec2:55ff:febd:75d0 prefixlen 64 scopeid 0x20<link>
            ether 7c:c2:55:bd:75:d0 txqueuelen 1000 (Ethernet)
            RX packets 253482 bytes 28518251 (28.5 MB)
            RX errors 0 dropped 0 overruns 0 frame 0
            TX packets 38519 bytes 10662707 (10.6 MB)
            TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Check that the routes were added correctly to the routing table:

    jnpr@MI300X-01:/etc/netplan$ route | grep mgmt_eth
    default         _gateway        0.0.0.0         UG    0      0        0 mgmt_eth
    10.10.1.24      0.0.0.0         255.255.255.254 U     0      0        0 mgmt_eth
    jnpr@MI300X-01:/etc/netplan$ route | grep gpu0_eth
    10.200.0.0      10.200.16.254   255.255.0.0     UG    0      0        0 gpu0_eth
    10.200.16.0     0.0.0.0         255.255.255.0   U     0      0        0 gpu0_eth

OR

    user@MI300X-03:~/scripts$ ip route show | grep gpu0_eth
    10.200.24.0/24 dev gpu0_eth proto kernel scope link src 10.200.24.22

Check address resolution:

    jnpr@MI300X-01:/etc/netplan$ ping google.com -c 5 -n
    PING google.com (142.250.188.14) 56(84) bytes of data.
    64 bytes from 142.250.188.14: icmp_seq=1 ttl=113 time=2.16 ms
    64 bytes from 142.250.188.14: icmp_seq=2 ttl=113 time=2.43 ms
    64 bytes from 142.250.188.14: icmp_seq=3 ttl=113 time=191 ms
    64 bytes from 142.250.188.14: icmp_seq=4 ttl=113 time=50.6 ms
    64 bytes from 142.250.188.14: icmp_seq=5 ttl=113 time=12.0 ms
    --- google.com ping statistics ---
    5 packets transmitted, 5 received, 0% packet loss, time 4005ms
    rtt min/avg/max/mdev = 2.158/51.596/190.818/71.851 ms
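The interface checks in this verification step lend themselves to scripting when there are many servers to validate. The following is a minimal sketch that extracts the name, MTU, state, and IPv4 addresses from the text printed by `ip address show <device>`; the function name `summarize_ip_address_show` is hypothetical, and the parser assumes the output layout shown earlier in this step.

```python
import re


def summarize_ip_address_show(output):
    """Extract name, MTU, state, and IPv4 addresses from `ip address show` text."""
    header = re.search(
        r"^\d+:\s+(\S+?):\s+<[^>]*>\s+mtu\s+(\d+).*?state\s+(\S+)",
        output,
        re.MULTILINE,
    )
    if header is None:
        raise ValueError("input does not look like `ip address show` output")
    # "inet" followed by whitespace matches IPv4 lines only, not "inet6".
    ipv4 = re.findall(r"^\s*inet\s+(\S+)", output, re.MULTILINE)
    return {
        "name": header.group(1),
        "mtu": int(header.group(2)),
        "state": header.group(3),
        "ipv4": ipv4,
    }


if __name__ == "__main__":
    sample = (
        "4: gpu0_eth: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq state UP group default qlen 1000\n"
        "    link/ether 6c:92:cf:87:cc:00 brd ff:ff:ff:ff:ff:ff\n"
        "    inet 10.200.24.22/24 brd 10.200.24.255 scope global gpu0_eth\n"
        "    inet6 fe80::6e92:cfff:fe87:cc00/64 scope link\n"
    )
    print(summarize_ip_address_show(sample))
```

The resulting dictionary can be compared against the values in the netplan file (name, `mtu: 9000`, and the configured address) to flag any interface that did not pick up its new settings.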

AMD Pollara firmware and dependent libraries

For brevity, the steps described here cover only what is needed to run the RCCL tests with the AMD Pollara 400 NIC, so all of the dependent software and libraries must be installed before the RCCL tests can run. The steps follow the libraries listed in the AMD Server and NIC Firmware and RCCL supporting libraries table.

- Ensure that the Ubuntu OS version is 22.04, as suggested in the section AMD Server and NIC Firmware and RCCL supporting libraries.

- Install the ROCm library using the steps below.

    wget https://repo.radeon.com/amdgpu-install/6.3.3/ubuntu/jammy/amdgpu-install_6.3.60303-1_all.deb
    sudo apt install ./amdgpu-install_6.3.60303-1_all.deb
    sudo apt update
    sudo apt install amdgpu-dkms rocm
    sudo apt install cmake libstdc++-12-dev

- Install the RCCL library using the steps below. Note that RCCL and ANP are private libraries provided by AMD.

    tar xf rccl-7961624_may21.tgz
    cd rccl-7961624
    ./install.sh -l --prefix=build --disable-mscclpp --disable-msccl-kernel

- Install the Unified Communication Framework (UCX). UCX is an open source, cross-platform framework designed to provide a common set of communication interfaces for various network programming models and interfaces. Refer to the AMD documentation for more information.

    sudo apt install libtool
    git clone https://github.com/openucx/ucx.git
    cd ucx
    ./autogen.sh
    mkdir build
    cd build
    ../configure --prefix=/opt/ucx --with-rocm=/opt/rocm
    ../configure --prefix=/opt/ucx
    make -j $(nproc)
    sudo make -j $(nproc) install

- Next, install Open MPI. Note that the Open MPI source is hosted on GitHub, so GitHub credentials may be required. The Open MPI Project is an open source Message Passing Interface implementation that is developed and maintained by a consortium of academic, research, and industry partners. Open MPI is therefore able to combine the expertise, technologies, and resources from across the High Performance Computing community. Refer to OpenMPI for more information.

    sudo apt install flex
    git clone --recursive https://github.com/open-mpi/ompi.git
    cd ompi
    ./autogen.pl
    mkdir build
    cd build
    ../configure --prefix=/opt/ompi --with-ucx=/opt/ucx --with-rocm=/opt/rocm
    make -j $(nproc)
    sudo make install

- Install the Pollara drivers and firmware. This is an AMD-provided firmware bundle. It also installs the ‘nicctl’ command line utility, which is used to interact with the Pollara NICs and run commands such as resetting cards or configuring QoS.

    # Prerequisites
    sudo apt install device-tree-compiler policycoreutils ninja-build jq pkg-config libnl-3-dev libnl-route-3-dev libpci-dev
    # Untar the bundle itself
    tar xf ainic_bundle_1.113.0-a-2.tar.gz
    # cd into the bundle directory
    cd ainic_bundle_1.113.0-a-2
    # untar the host software
    tar xf host_sw_pkg.tar.gz
    # cd into the host software directory
    cd host_sw_pkg
    # install the drivers and software
    sudo ./install.sh

Output of the firmware update:

    jnpr@MI300-01:~/ainic_bundle_1.110.0-a-79$ sudo nicctl update firmware --image ./ainic_fw_salina.tar --log-file /tmp/amd_ainic_upgrade.log
    ----------------------------------------------------------------------------------
    Card Id                                 Stage    Progress
    ----------------------------------------------------------------------------------
    42424650-4c32-3530-3130-313346000000    Done     100% [02:50.941]
    42424650-4c32-3530-3130-313844000000    Done     100% [02:41.200]
    42424650-4c32-3530-3130-313242000000    Done     100% [02:51.584]
    42424650-4c32-3530-3130-304341000000    Done     100% [02:51.281]
    42424650-4c32-3530-3130-313434000000    Done     100% [02:31.062]
    42424650-4c32-3530-3130-314537000000    Done     100% [02:51.480]
    42424650-4c32-3530-3130-314436000000    Done     100% [02:51.077]
    42424650-4c32-3530-3130-304435000000    Done     100% [02:51.367]
    NIC 42424650-4c32-3530-3130-313346000000 (0000:06:00.0) : Successful
    NIC 42424650-4c32-3530-3130-313844000000 (0000:23:00.0) : Successful
    NIC 42424650-4c32-3530-3130-313242000000 (0000:43:00.0) : Successful
    NIC 42424650-4c32-3530-3130-304341000000 (0000:66:00.0) : Successful
    NIC 42424650-4c32-3530-3130-313434000000 (0000:86:00.0) : Successful
    NIC 42424650-4c32-3530-3130-314537000000 (0000:a3:00.0) : Successful
    NIC 42424650-4c32-3530-3130-314436000000 (0000:c3:00.0) : Successful
    NIC 42424650-4c32-3530-3130-304435000000 (0000:e6:00.0) : Successful

- Once the firmware installation is complete, reset the cards so that they report the new firmware version.

Pollara NIC output of the card reset and firmware version check:

    jnpr@mi300-01:~$ sudo nicctl reset card --all
    NIC 42424650-4c32-3530-3130-313346000000 (0000:06:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-313844000000 (0000:23:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-313242000000 (0000:43:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-304341000000 (0000:66:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-313434000000 (0000:86:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-314537000000 (0000:a3:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-314436000000 (0000:c3:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-304435000000 (0000:e6:00.0) : Card reset triggered, wait for completion (75 secs)
    NIC 42424650-4c32-3530-3130-313346000000 (0000:06:00.0) : Card reset successful
    NIC 42424650-4c32-3530-3130-313844000000 (0000:23:00.0) : Card reset successful
    NIC 42424650-4c32-3530-3130-313242000000 (0000:43:00.0) : Card reset successful
    NIC 42424650-4c32-3530-3130-304341000000 (0000:66:00.0) : Card reset successful
    NIC 42424650-4c32-3530-3130-313434000000 (0000:86:00.0) : Card reset successful
    NIC 42424650-4c32-3530-3130-314537000000 (0000:a3:00.0) : Card reset successful
    NIC 42424650-4c32-3530-3130-314436000000 (0000:c3:00.0) : Card reset successful
    NIC 42424650-4c32-3530-3130-304435000000 (0000:e6:00.0) : Card reset successful
    jnpr@mi300-01:~$ sudo nicctl show card --detail | grep Firm
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
    Firmware version : 1.110.0-a-79
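After the reset, it is worth confirming that every card reports the same firmware version. The following is a minimal sketch that parses the `Firmware version : ...` lines shown in the output above; the function names are hypothetical, and the default of eight cards is an assumption based on the eight NICs in this lab output.

```python
def firmware_versions(nicctl_output):
    """Return the firmware versions found in `nicctl show card --detail` text."""
    versions = []
    for line in nicctl_output.splitlines():
        key, separator, value = line.partition(":")
        if separator and key.strip() == "Firmware version":
            versions.append(value.strip())
    return versions


def all_cards_at(nicctl_output, expected_version, card_count=8):
    """True if exactly `card_count` cards report `expected_version`."""
    versions = firmware_versions(nicctl_output)
    return len(versions) == card_count and all(
        version == expected_version for version in versions
    )


if __name__ == "__main__":
    sample = "Firmware version : 1.110.0-a-79\n" * 8
    print(all_cards_at(sample, "1.110.0-a-79"))
```

Checking the count as well as the version string catches the case where a card failed to come back after the reset and is simply missing from the output.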

- Install the ANP plugin. ANP is a plugin library designed to enhance the RCCL collective communication library with extended network transport support. The ANP plugin library is a private AMD library.

    sudo apt install libboost-dev
    export RCCL_BUILD=/home/${User}/pollara/rccl-7961624/build/release
    export MPI_INCLUDE=/opt/ompi/include
    export MPI_LIB_PATH=/opt/ompi/lib
    make RCCL_BUILD=$RCCL_BUILD MPI_INCLUDE=$MPI_INCLUDE MPI_LIB_PATH=$MPI_LIB_PATH

- Lastly, build the RCCL tests.

    # Build rccl-tests
    git clone https://github.com/ROCm/rccl-tests.git
    cd rccl-tests
    make MPI=1 MPI_HOME=/opt/ompi NCCL_HOME=/home/${User}/pollara/rccl-7961624/build/release CUSTOM_RCCL_LIB=/home/${User}/pollara/rccl-7961624/build/release/librccl.so -j $(nproc)
    make MPI=1 MPI_HOME=/opt/ompi NCCL_HOME=/home/${User}/pollara/rccl-7961624/build/release HIP_HOME=/home/${User}/pollara/rccl-7961624/build/release CUSTOM_RCCL_LIB=/home/${User}/pollara/rccl-7961624/build/release/librccl.so -j $(nproc)

Configuring AMD DCQCN (ECN/PFC) and TOS/DSCP for RDMA Traffic

In the IP Services for AI Networks section we discussed the need for congestion control and traffic prioritization in the Backend GPU fabric to transport RoCE traffic between GPU servers. For these mechanisms to work properly, the servers need to be configured to react to congestion notifications from both ECN and PFC, and to mark the RDMA and non-RDMA traffic so that it matches the classification configuration of the fabric. This section covers how to configure the AMD servers to meet these requirements.

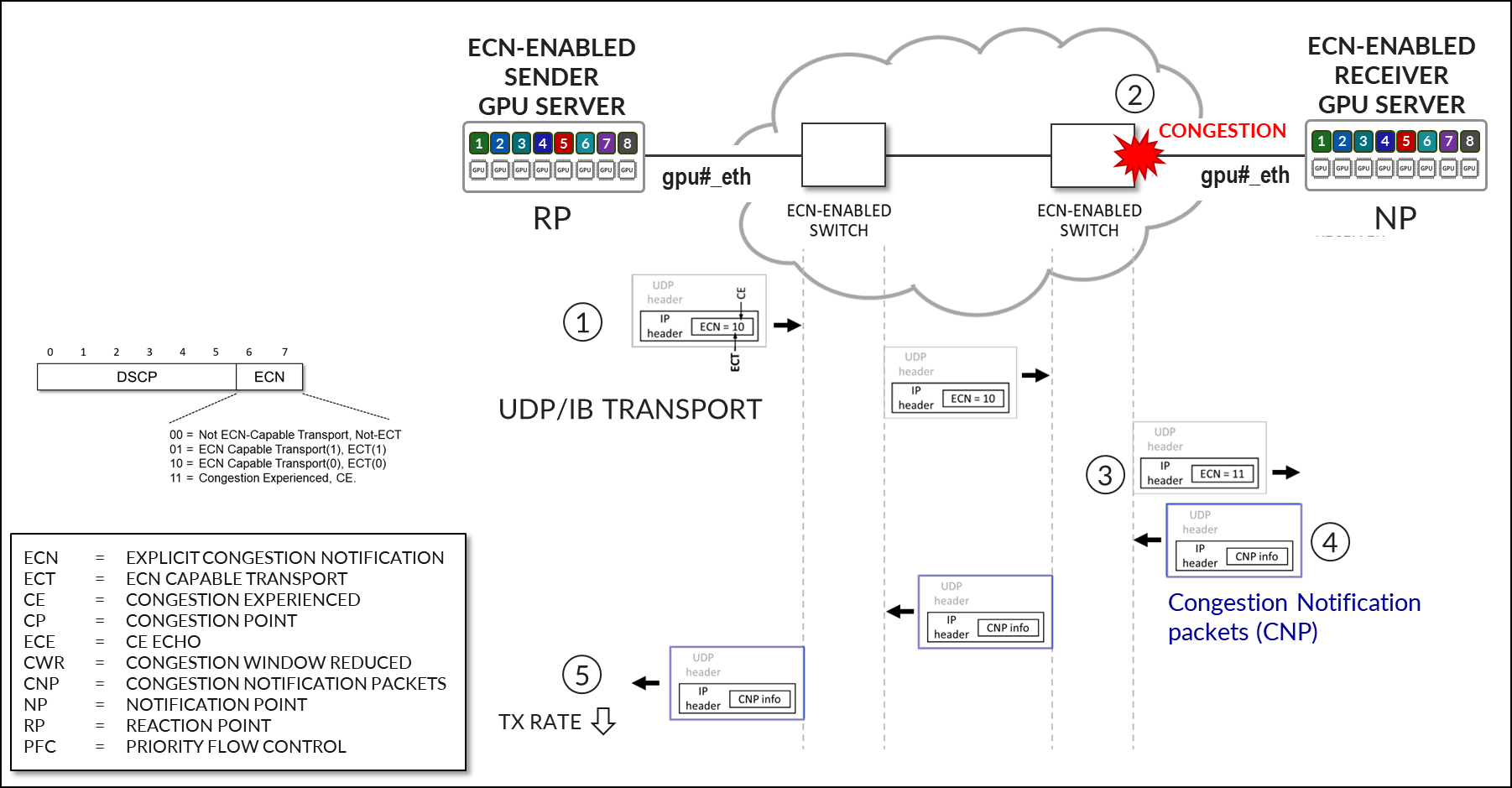

Congestion Control (CC) or ECN (Explicit Congestion Notification)

Congestion Control (CC), or Explicit Congestion Notification (ECN), is a standard (RFC 3168) backpressure mechanism for Ethernet network devices that signals congestion and causes the traffic to temporarily slow down to avoid packet drops.

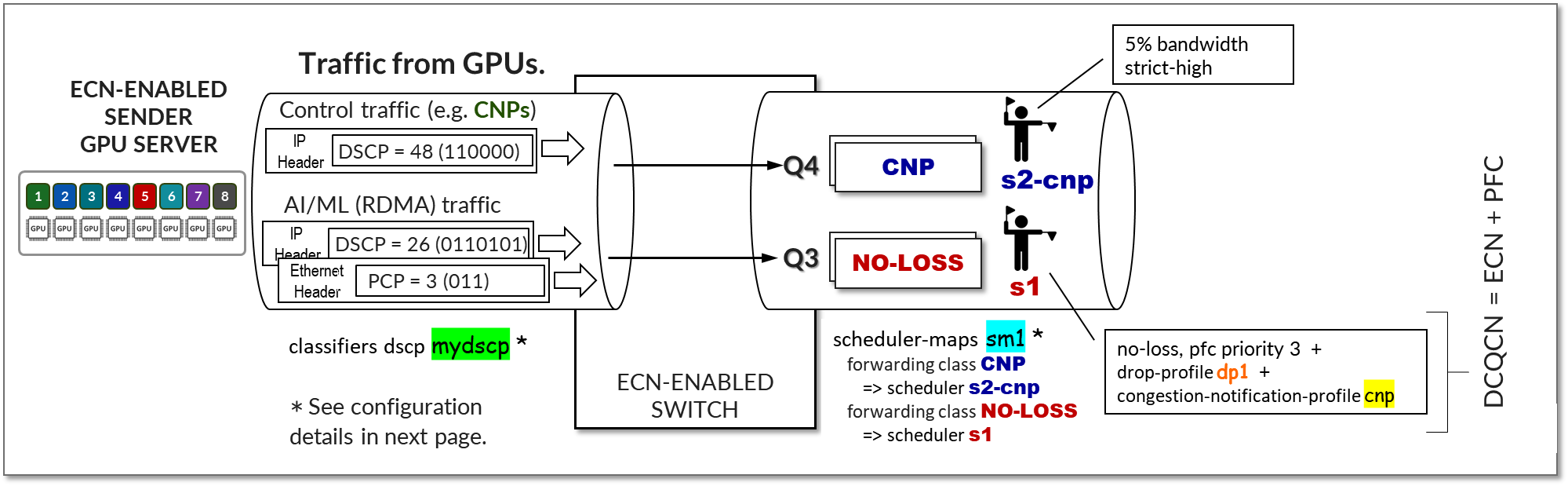

ECN for RoCE traffic relies on fabric switches that can detect congestion and apply ECN marking to traffic downstream, and on devices that can respond to these markings, as shown in Figure 53:

- The receiving NIC, or Notification Point (NP), which transmits CNP packets when it receives ECN-marked packets.

- The sending NIC, or Reaction Point (RP), which receives the CNP packets and reacts accordingly.

Figure 53: DCQCN – ECN Operation

Details about the DCQCN – ECN (Congestion Control in Broadcom terminology) implementation in the BCM5741X Ethernet network adapter acting as NP and RP can be found in the following documents: Traffic Control Synopsis and RoCE Congestion Control.
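To make the NP and RP roles concrete, the following toy model shows the two reactions described above: the NP decides whether a packet's ECN field calls for a CNP, and the RP cuts its sending rate when a CNP arrives. This is a simplified sketch that loosely follows the published DCQCN algorithm; it is not the Broadcom or AMD NIC implementation, and the gain `g`, the starting rate, and the function names are illustrative assumptions.

```python
# ECN codepoints in the two low-order bits of the IP TOS byte (RFC 3168).
ECN_NOT_ECT = 0b00
ECN_ECT_1 = 0b01
ECN_ECT_0 = 0b10
ECN_CE = 0b11  # Congestion Experienced, set by a congested switch


def np_should_send_cnp(ecn_bits):
    """Notification Point: a CNP is warranted only for CE-marked packets."""
    return ecn_bits == ECN_CE


def rp_on_cnp(current_rate, alpha, g=1 / 256):
    """Reaction Point: reduce the rate on CNP arrival.

    Simplified DCQCN-style update (assumed form):
      rate  <- rate * (1 - alpha / 2)
      alpha <- (1 - g) * alpha + g
    Returns the new (rate, alpha) pair.
    """
    new_rate = current_rate * (1 - alpha / 2)
    new_alpha = (1 - g) * alpha + g
    return new_rate, new_alpha


if __name__ == "__main__":
    rate_gbps, alpha = 400.0, 1.0
    for _ in range(3):
        if np_should_send_cnp(ECN_CE):
            rate_gbps, alpha = rp_on_cnp(rate_gbps, alpha)
        print(f"rate={rate_gbps:.1f} Gb/s alpha={alpha:.4f}")
```

The model omits rate recovery, timers, and byte counters, which a real adapter uses to ramp the rate back up once CNPs stop arriving.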

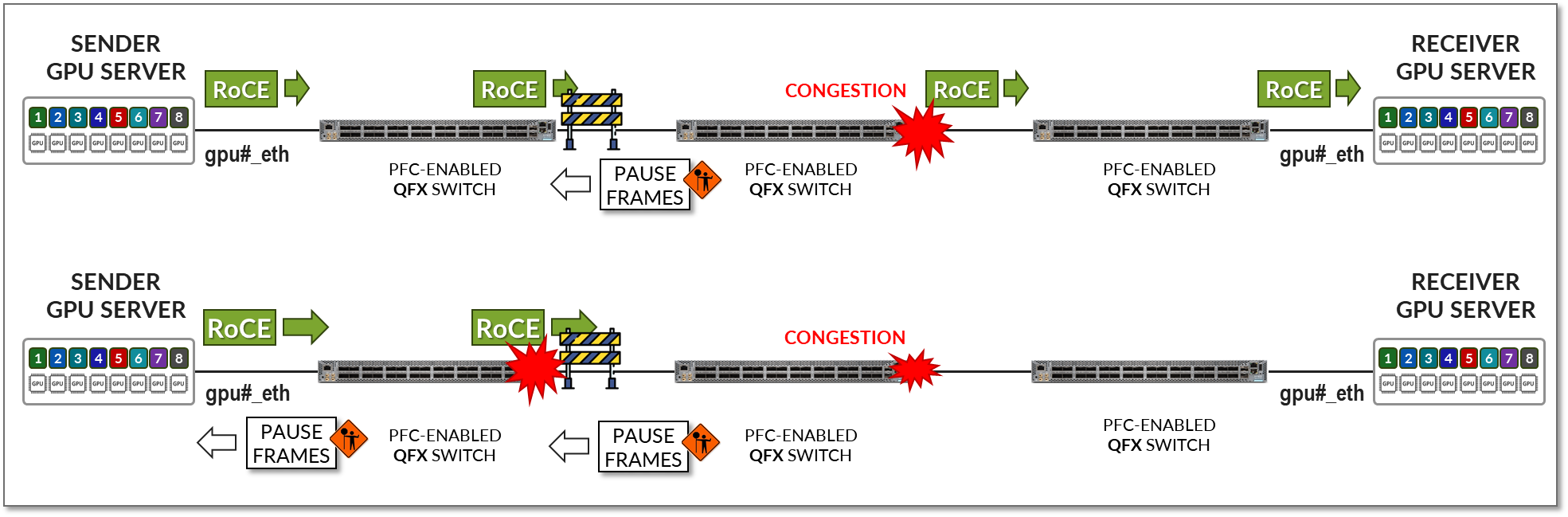

Priority Flow Control (PFC)

Priority Flow Control (PFC) is a standard (IEEE 802.1Qbb) backpressure mechanism for Ethernet network devices that signals congestion and causes traffic on a particular priority to temporarily stop to avoid packet drops.

PFC for RoCE traffic relies on fabric switches that can detect congestion and generate PFC Pause frames upstream, and on devices that can respond to these frames:

- The sending NIC, which receives the PFC Pause frames and reacts accordingly.

Details about the DCQCN – PFC implementation in BCM5741X Ethernet network adapters acting as RP can be found in the following documents: Traffic Control Synopsis, Priority Flow Control Feature in Ethernet Network Adapters, and Quality of Service.

Figure 54: DCQCN – PFC Operation
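For reference, the PFC Pause frame that the sending NIC receives has a simple fixed layout: a reserved multicast destination address, the MAC Control EtherType, the PFC opcode, a per-priority enable vector, and eight pause timers. The sketch below builds such a frame with the Python standard library. It is an illustration of the frame format only; the function name and the choice of priority 3 in the usage example are assumptions, not values taken from this document.

```python
import struct

PFC_DEST_MAC = bytes.fromhex("0180c2000001")  # reserved MAC Control address
MAC_CONTROL_ETHERTYPE = 0x8808
PFC_OPCODE = 0x0101
MIN_FRAME_LEN = 60  # minimum Ethernet frame length without the FCS


def build_pfc_frame(src_mac, pause_quanta):
    """Build a PFC Pause frame (without FCS).

    `pause_quanta` maps priority (0-7) to a pause time in quanta (0-65535).
    Priorities not listed are left disabled with a zero timer.
    """
    source = bytes.fromhex(src_mac.replace(":", ""))
    if len(source) != 6:
        raise ValueError("src_mac must be a 6-byte MAC address")
    enable_vector = 0
    timers = [0] * 8
    for priority, quanta in pause_quanta.items():
        if not 0 <= priority <= 7:
            raise ValueError("priority must be between 0 and 7")
        if not 0 <= quanta <= 0xFFFF:
            raise ValueError("quanta must fit in 16 bits")
        enable_vector |= 1 << priority
        timers[priority] = quanta
    payload = struct.pack(
        "!HHH8H", MAC_CONTROL_ETHERTYPE, PFC_OPCODE, enable_vector, *timers
    )
    return (PFC_DEST_MAC + source + payload).ljust(MIN_FRAME_LEN, b"\x00")


if __name__ == "__main__":
    # Hypothetical example: pause priority 3 for the maximum time.
    frame = build_pfc_frame("7c:c2:55:bd:75:d0", {3: 0xFFFF})
    print(frame.hex())
```

A timer value of zero with the priority bit set acts as a resume for that priority, which is how a switch releases the pause once congestion clears.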

TOS/DSCP for RDMA Traffic

RDMA traffic must be properly marked to allow the switch to classify it correctly and place it in the lossless queue for proper treatment. Marking can be done either with DSCP in the IP header or with PCP in the VLAN tag of the Ethernet frame. Whether DSCP or PCP is used depends on whether the interface between the GPU server and the switch uses VLAN tagging (802.1Q). Figure 55 shows how RDMA and CNP packets are marked differently and how, as a result, the fabric switch classifies and schedules the two types of packets differently.

Figure 55: TOS/DSCP operation
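Server-side tools often ask for a TOS value while switch classifiers are written in terms of DSCP, so it helps to be able to convert between the two. The DSCP occupies the upper six bits of the TOS byte and the ECN field occupies the lower two. The sketch below shows the conversion; the DSCP values used in the example (26 and 48) are hypothetical and must be replaced with whatever the fabric classifiers actually match.

```python
def tos_from_dscp(dscp, ecn=0):
    """Return the 8-bit TOS value for a 6-bit DSCP and a 2-bit ECN field."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be between 0 and 63")
    if not 0 <= ecn <= 3:
        raise ValueError("ECN must be between 0 and 3")
    return (dscp << 2) | ecn


def dscp_from_tos(tos):
    """Return the DSCP carried in an 8-bit TOS value."""
    if not 0 <= tos <= 255:
        raise ValueError("TOS must be between 0 and 255")
    return tos >> 2


if __name__ == "__main__":
    # Hypothetical markings: one DSCP for RDMA traffic, another for CNP.
    for label, dscp in (("rdma", 26), ("cnp", 48)):
        print(f"{label}: dscp={dscp} tos={tos_from_dscp(dscp)}")
```

Keeping the ECN bits separate in the helper makes it clear that the switch may rewrite them in flight without changing the DSCP-based classification.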