Routing Director System Requirements

Before you install the Routing Director software, ensure that your system meets the requirements that we describe in these sections.

Software Requirements

You can deploy Routing Director on one or more servers running any of the following bare-metal hypervisors:

- VMware ESXi 8.0

- Red Hat Enterprise Linux (RHEL) 8.10 and Ubuntu 22.04.05 kernel-based virtual machines (KVMs).

  You must install the libvirt, libvirt-daemon-kvm, bridge-utils, and qemu-kvm packages. The hypervisor requires an x86-64 CPU.

- Proxmox VE

Hardware Requirements

This section describes the minimum hardware resources that are required on each node virtual machine (VM) in the Routing Director deployment cluster, for evaluation purposes or for small deployments.

The compute, memory, and disk requirements of the cluster nodes can vary based on the intended capacity of the system. The intended capacity depends on the number of devices to be onboarded and monitored, types of sensors, and frequency of telemetry messages. If you increase the number of devices, you'll need higher CPU and memory capacities.

To get a scale and size estimate of a production deployment and to discuss detailed dimensioning requirements, contact your Juniper Partner or Juniper Sales Representative.

The bare minimum resources required for each of the nodes in a Routing Director deployment cluster are:

| Four-node Cluster | Three-node Cluster |
|---|---|
| 16-vCPU | 24-vCPU |
| 32-GB RAM | 48-GB RAM |
| 512-GB SSD. SSDs are mandatory. | 512-GB SSD. SSDs are mandatory. |

| Four-node Cluster | Three-node Cluster |
|---|---|
| 48-vCPU | 64-vCPU |
| 96-GB RAM | 128-GB RAM |
| 2000-GB SSD | 2000-GB SSD |

The servers must have enough CPU, memory, and disk space to accommodate the hardware resources listed in this section. For node and server high-availability, deploy all the VMs on different servers.
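As a quick sanity check before installation, the bare-minimum figures can be compared against a planned node VM. The following is a minimal sketch, not part of the Routing Director tooling: the `NodeSpec` type and the `shortfalls` function are hypothetical names introduced here, and the numbers are copied from the bare-minimum table in this section.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Resources of one node VM (hypothetical helper type)."""
    vcpus: int
    ram_gb: int
    ssd_gb: int


# Bare-minimum per-node resources, keyed by the number of nodes in the cluster.
# Values are copied from the bare-minimum table in this section.
MINIMUM_PER_NODE = {
    4: NodeSpec(vcpus=16, ram_gb=32, ssd_gb=512),
    3: NodeSpec(vcpus=24, ram_gb=48, ssd_gb=512),
}


def shortfalls(cluster_size: int, node: NodeSpec) -> list[str]:
    """Return a list of shortfalls; an empty list means the node meets the minimum."""
    required = MINIMUM_PER_NODE[cluster_size]
    problems = []
    if node.vcpus < required.vcpus:
        problems.append(f"vCPU: have {node.vcpus}, need {required.vcpus}")
    if node.ram_gb < required.ram_gb:
        problems.append(f"RAM: have {node.ram_gb} GB, need {required.ram_gb} GB")
    if node.ssd_gb < required.ssd_gb:
        problems.append(f"SSD: have {node.ssd_gb} GB, need {required.ssd_gb} GB")
    return problems
```

For example, `shortfalls(3, NodeSpec(vcpus=16, ram_gb=32, ssd_gb=512))` reports that a VM sized for a four-node cluster is too small for a three-node cluster. The check covers only the bare minimum; production sizing still depends on the intended capacity.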

Network Requirements

The nodes must be able to communicate with each other through SSH and must be able to sync to an NTP server. SSH is enabled automatically during VM creation, and you are asked to enter the NTP server address during cluster creation. If the nodes are on different servers, ensure that no firewall blocks NTP traffic or SSH traffic between the nodes.

The node VMs must also be able to reach the DNS server.
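The SSH and DNS requirements can be spot-checked from any node with the Python standard library. This is a minimal sketch under stated assumptions: the function names are hypothetical, SSH is assumed to listen on TCP port 22, and NTP reachability (UDP) is not covered.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


def resolves(hostname: str) -> bool:
    """Return True if the system resolver can resolve the given name."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False
```

Calling `tcp_port_open(peer_address, 22)` for each peer node and `resolves(name)` for a name that your DNS server is expected to serve gives a first indication of whether a firewall or resolver problem exists. A successful TCP connection shows only that the port is reachable, not that SSH authentication will succeed.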

- Cluster in a Single Subnet

- Cluster in Multiple Subnets

- Cluster with Multiple NICs

- Configure IPv4 Addresses

- Configure IPv6 Addresses

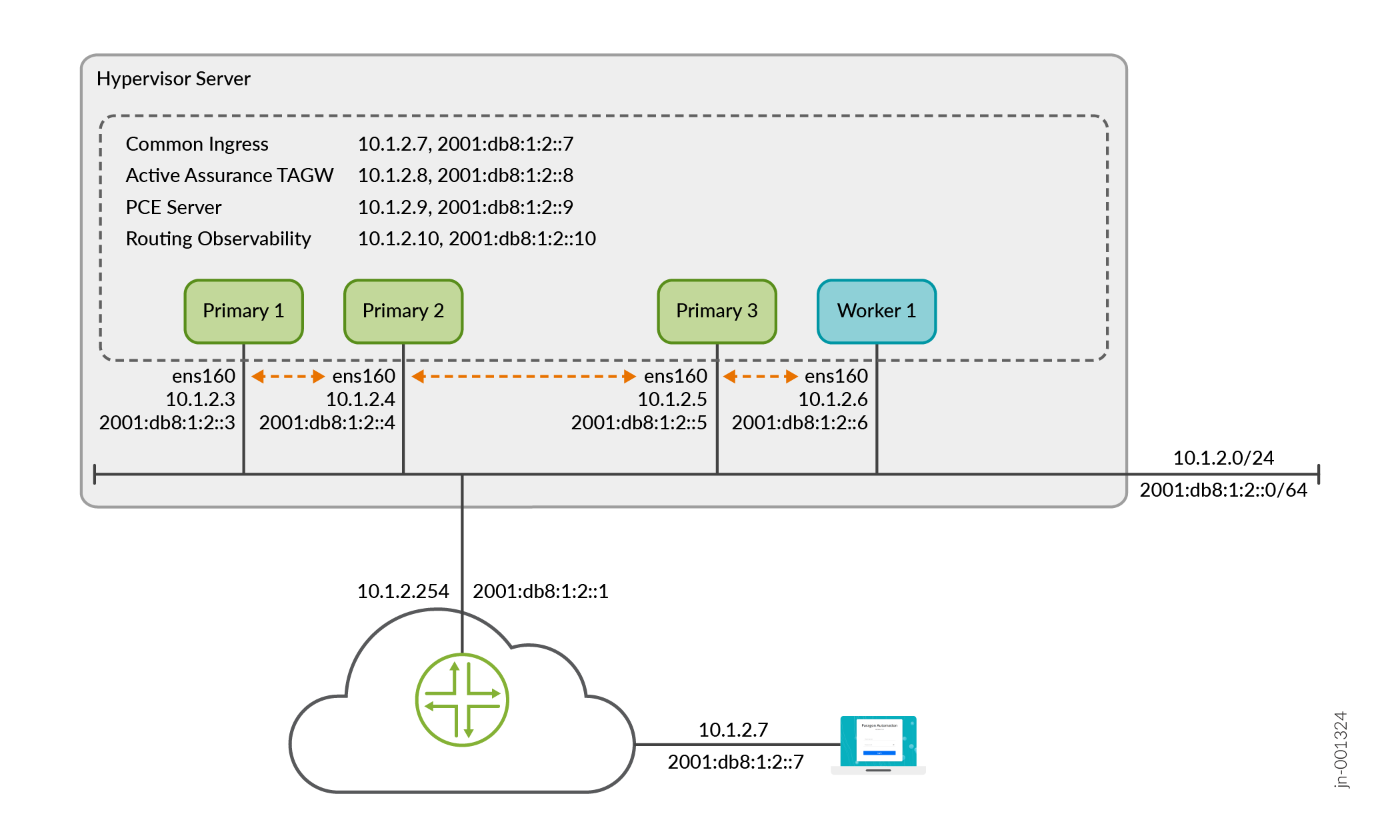

Cluster in a Single Subnet

The cluster nodes and VIP addresses can all be on the same subnet with Layer 2 (L2) connectivity between them. Figure 1 (IP Addressing Requirements in a Single Subnet Cluster) illustrates the IP and VIP addresses, all within the same subnet, that are required to install a Routing Director deployment cluster.

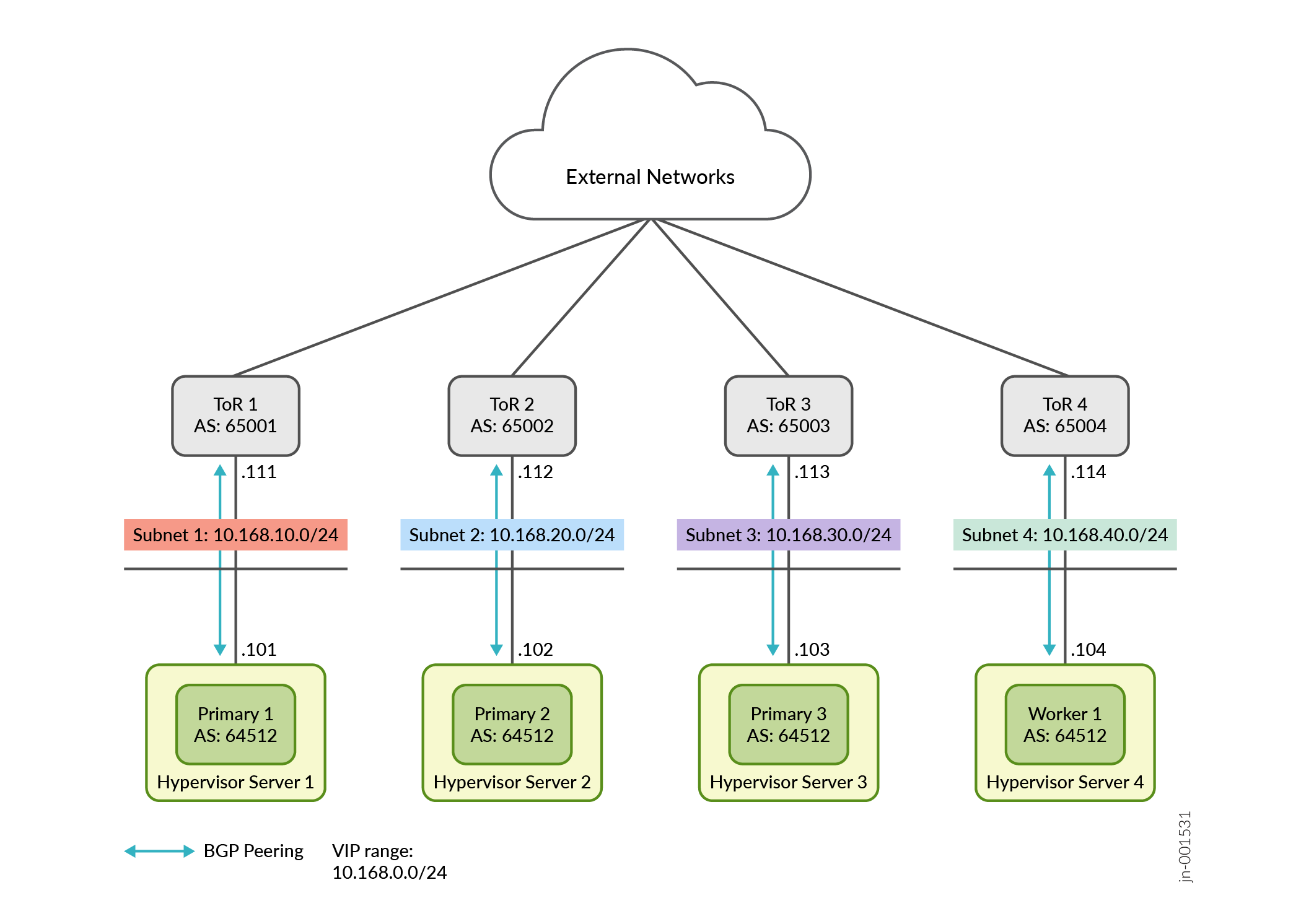

Cluster in Multiple Subnets

Alternatively, in cases where the cluster nodes are geographically distributed or are located in multiple data centers, the nodes and the VIP addresses can be in different subnets. You must configure BGP peering between each cluster node and the respective upstream gateway top-of-rack (ToR) router as well as between the routers. Additionally, the cluster nodes must have the same configured AS number.

Figure 2 illustrates a cluster in four different networks. Each node is served by a dedicated ToR router. In this example, you must configure EBGP using interface peering between the ToR and the corresponding cluster node. Your BGP configuration might differ based on your setup.

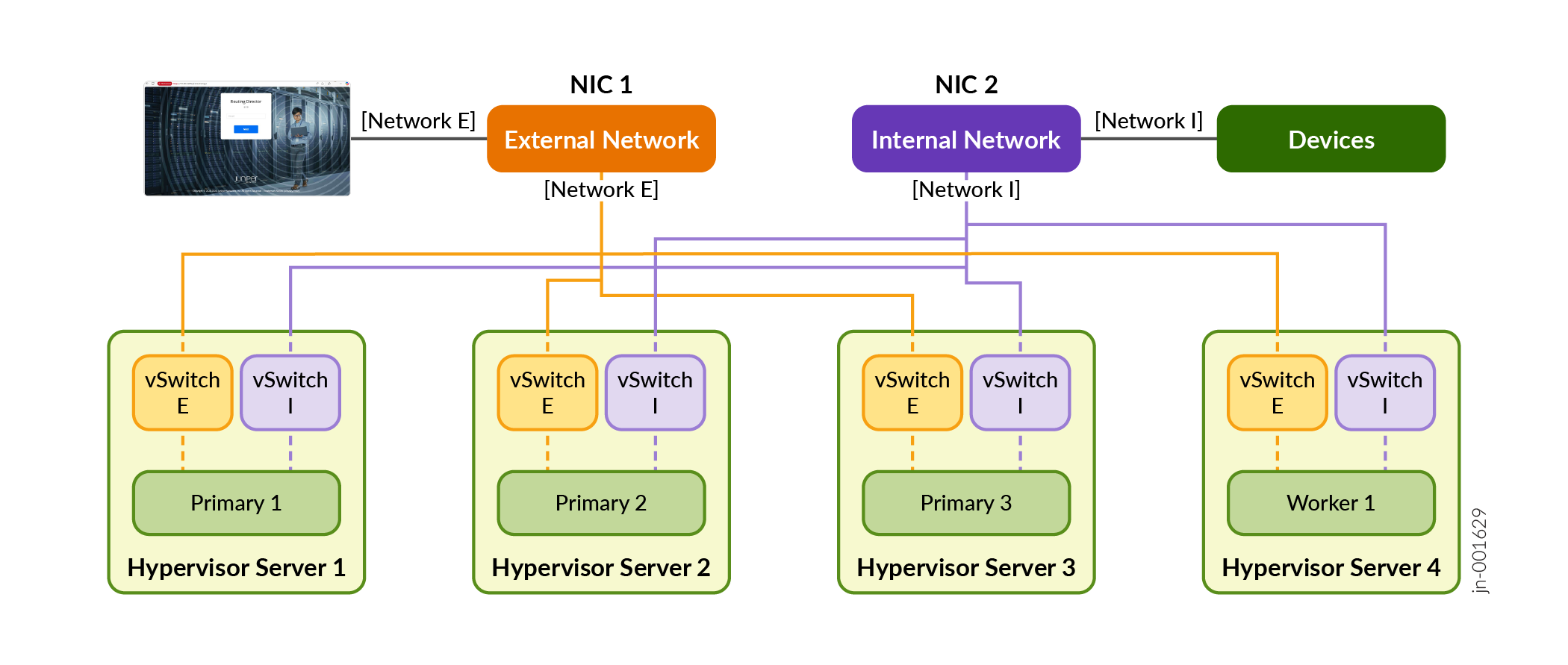

Cluster with Multiple NICs

When the device‑management network is separate from the network used to access the GUI, you can connect the Routing Director cluster to both networks by assigning each one to a different NIC.

For example, if you have Network A and Network B, each associated with its own NIC, the cluster nodes can connect to both networks using separate generic ingress VIP addresses. The cluster nodes can be installed on one or more hypervisor servers. Although the cluster can be reached through either network's ingress VIP address, the common design is to dedicate one VIP address to GUI access and the other to the NETCONF and gNMI sessions used to reach devices.

To configure Routing Director to connect to multiple networks and multiple NICs, you must configure the generic ingress VIP addresses of both networks. While both the ingress VIP addresses can be used for GUI, NETCONF, and gNMI access, you must explicitly define the VIP address of the network used for device management for NETCONF and gNMI access. For more information on the commands used, see step 8 in the deploy cluster workflow.

Figure 3 illustrates this deployment model, where the cluster nodes reside on different servers and use two NICs to connect to both the external and internal networks. In this configuration, the GUI is accessed through the external‑network VIP address, while the devices are accessed through the internal‑network VIP address.

Configure IPv4 Addresses

You need to have the following IP addresses available for the installation.

- Four interface IP addresses, one for each of the four nodes.

  Note that in a multi-NIC setup, each NIC must also have its own IP address.

- Internet gateway IP address

- Virtual IP (VIP) addresses for:

  - Generic ingress IP address shared between gNMI, NETCONF (SSH connections from devices), and the Web GUI: This is a general-purpose VIP address that is shared between multiple services and used to access Routing Director from outside the cluster.

    Alternatively, when the network used to access the GUI is different from the device-management network, you can assign one VIP address for Web GUI access and a different VIP address for gNMI and NETCONF access. When two VIP addresses are configured using the `ingress ingress-vip` option, both VIP addresses can be used for Web GUI, NETCONF, and gNMI access. However, only the first VIP address specified during configuration is added to the outbound SSH configuration used to adopt devices.

    To use a different VIP address specifically for NETCONF and gNMI, you must explicitly define that VIP address using the `oc-term oc-term-host` and `gnmi gnmi-term-host` options. Once defined, this VIP address is included in the outbound SSH configuration used to adopt devices from the corresponding network.

    Although either VIP address can technically be used to access the GUI, NETCONF, and gNMI, only the VIP address explicitly defined and configured for NETCONF and gNMI is added to the outbound SSH configuration.

    If devices are in multiple networks and you need to adopt devices in more than one network, you can manually edit the outbound SSH command to override the configured IP address for NETCONF and gNMI access.

  - Active Assurance Test Agent gateway (TAGW): This VIP address serves HTTP-based traffic to the Active Assurance Test Agent endpoint.

  - PCE server: This VIP address is used to establish Path Computation Element Communication Protocol (PCEP) sessions between Routing Director and the devices. The PCE server VIP configuration is necessary to view dynamic topology updates in your network in real time. For information on establishing BGP-LS peering and PCEP sessions, see Dynamic Topology Workflow.

    If your cluster is a multi-subnet cluster, you can also configure multiple VIP addresses, one from each subnet, so that the devices can establish PCEP sessions on all VIP addresses.

  - Routing observability cRPD: This VIP address is used by external network devices as the BGP Monitoring Protocol (BMP) station IP address to establish the BMP session.

  - Routing observability IPFIX: This VIP address is used to collect IPFIX data to view predictor events. Predictor events indicate routing, forwarding, and OS exceptions that Routing Director identifies as potential indicators of traffic loss.

  The VIP addresses are added to the outbound SSH configuration that is required for a device to establish a connection with Routing Director.

  Note: In a multi-subnet cluster installation where the cluster nodes are in different subnets, the VIP addresses must not be on the same subnet as the cluster nodes.

- Hostnames mapped to the VIP addresses: Along with VIP addresses, you can also enable devices to connect to Routing Director using hostnames. However, you must ensure that the hostnames and the VIP addresses are correctly mapped in the DNS and that your device is able to connect to the DNS. If you configure Routing Director to use hostnames, the hostnames take precedence over VIP addresses and are added to the outbound SSH configuration used when onboarding devices.
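The multi-subnet rule above, that VIP addresses must not be on the same subnet as the cluster nodes, can be checked against an address plan before installation. The sketch below uses Python's standard `ipaddress` module; the function name is hypothetical, and the addresses in the usage note come from the documentation ranges reserved in RFC 5737, not from any real deployment.

```python
import ipaddress


def vips_inside_node_subnets(node_subnets, vips):
    """Return (vip, subnet) pairs where a VIP address falls inside a node subnet.

    An empty result means the plan satisfies the multi-subnet rule.
    """
    networks = [ipaddress.ip_network(subnet) for subnet in node_subnets]
    clashes = []
    for vip in vips:
        address = ipaddress.ip_address(vip)
        for network in networks:
            # Membership is False when the IP versions differ, so IPv4 and
            # IPv6 entries can be mixed in the same plan.
            if address in network:
                clashes.append((vip, str(network)))
    return clashes
```

For example, `vips_inside_node_subnets(["192.0.2.0/26", "192.0.2.64/26"], ["192.0.2.10", "198.51.100.5"])` returns `[("192.0.2.10", "192.0.2.0/26")]`, flagging the first VIP address. The check applies only to multi-subnet clusters; in a single-subnet cluster the nodes and VIP addresses are expected to share a subnet.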

Configure IPv6 Addresses

You can configure the Routing Director deployment cluster using IPv6 addresses in addition to the existing IPv4 addresses. With IPv6 addressing configured, you can use IPv6 addresses for NETCONF, gNMI, the Active Assurance TAGW, and access to the Web GUI. You must have the following additional addresses available at the time of installation:

- Four interface IPv6 addresses, one for each of the four nodes

- Internet gateway IPv6 address

- One IPv6 VIP address for generic ingress or two IPv6 VIP addresses, one for the Web GUI and one for NETCONF and gNMI access

- One IPv6 VIP address for Active Assurance TAGW

- Hostnames mapped to the IPv6 VIP addresses: You can also use hostnames to connect to IPv6 addresses. You must ensure that the hostnames are mapped correctly in the DNS to resolve to the IPv6 addresses.

If hostnames are not configured and IPv6 addressing is enabled in the cluster, the IPv6 VIP addresses, instead of the IPv4 addresses, are added to the outbound SSH configuration used for device onboarding.

You must configure the IPv6 addresses at the time of cluster deployment. You cannot configure IPv6 addresses after a cluster has been deployed using only IPv4 addresses.

We do not support configuring an IPv6 address for the PCE server and the routing observability feature.

In addition to the listed IP addresses and hostnames, you need to have the following information available at the time of installation:

- Primary and secondary DNS server addresses for IPv4 and IPv6 (if needed)

- NTP server information

Firewall Requirements

This section lists the ports that firewalls must allow for communication within the cluster and from outside the cluster.

You must allow intracluster communication between the nodes. In particular, you must keep the ports listed in Table 1 open for communication.

| Port | Protocol | Usage | From | To | Comments |
|---|---|---|---|---|---|
| Infrastructure Ports | | | | | |
| 22 | TCP | SSH for management | All cluster nodes | All cluster nodes | Requires a password or SSH-key |
| 2222 | TCP | Deployment Shell configuration sync | All cluster nodes | All cluster nodes | Requires password or SSH-key |
| 443 | TCP | HTTPS for registry | All cluster nodes | Primary nodes | Anonymous read access. Write access is authenticated. |
| 2379 | TCP | etcd client port | Primary nodes | Primary nodes | Certificate-based authentication |
| 2380 | TCP | etcd peer port | Primary nodes | Primary nodes | Certificate-based authentication |
| 3300 | TCP | Ceph mon to all Ceph components | All cluster nodes | All cluster nodes | - |
| 5473 | TCP | Calico CNI with Typha | All cluster nodes | All cluster nodes | - |
| 6443 | TCP | Kubernetes API | All cluster nodes | All cluster nodes | Certificate-based authentication |
| 6789 | TCP | Ceph mon to all Ceph components | All cluster nodes | All cluster nodes | - |
| 6800-7300 | TCP | Between all OSDs and all other daemons and clients | All cluster nodes | All cluster nodes | - |
| 7472 | TCP | MetalLB metric port | All cluster nodes | All cluster nodes | Anonymous read only, no write access |
| 7946 | UDP | MetalLB member election port | All cluster nodes | All cluster nodes | - |
| 8443 | TCP | HTTPS for registry data sync | Primary nodes | Primary nodes | Anonymous read access. Write access is authenticated. |
| 9345 | TCP | rke2-server | All cluster nodes | All cluster nodes | Token based authentication |
| 10250 | TCP | kubelet metrics | All cluster nodes | All cluster nodes | Standard Kubernetes authentication |
| 10260 | TCP | RKE2 cloud controller | All cluster nodes | All cluster nodes | Standard Kubernetes authentication |
| 32766 | TCP | Kubernetes node check for PCE service local traffic policy | All cluster nodes | All cluster nodes | Read access only |
| Calico CNI Ports | | | | | |
| 4789 | UDP | Calico CNI with VXLAN | All cluster nodes | All cluster nodes | - |
| 5473 | TCP | Calico CNI with Typha | All cluster nodes | All cluster nodes | - |
| 51820 | UDP | Calico CNI with Wireguard | All cluster nodes | All cluster nodes | - |
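When translating the intracluster table above into firewall rules, it can help to expand each port entry, including the `6800-7300` range, into explicit port numbers grouped by protocol. The following is a minimal sketch: `expand` and `ports_by_protocol` are hypothetical helper names, and the port list is copied from the table above. It produces a port list only and does not generate rules for any particular firewall product.

```python
# (port spec, protocol) pairs copied from the intracluster ports table.
# Port 5473 appears in both table sections, so it is listed once here.
INTRACLUSTER_PORTS = [
    ("22", "TCP"), ("2222", "TCP"), ("443", "TCP"), ("2379", "TCP"),
    ("2380", "TCP"), ("3300", "TCP"), ("5473", "TCP"), ("6443", "TCP"),
    ("6789", "TCP"), ("6800-7300", "TCP"), ("7472", "TCP"), ("7946", "UDP"),
    ("8443", "TCP"), ("9345", "TCP"), ("10250", "TCP"), ("10260", "TCP"),
    ("32766", "TCP"), ("4789", "UDP"), ("51820", "UDP"),
]


def expand(spec: str) -> range:
    """Turn a spec such as '22' or '6800-7300' into an inclusive port range."""
    first, _, last = spec.partition("-")
    start = int(first)
    end = int(last) if last else start
    return range(start, end + 1)


def ports_by_protocol(entries):
    """Group all expanded port numbers by protocol, sorted and de-duplicated."""
    grouped = {}
    for spec, protocol in entries:
        grouped.setdefault(protocol, set()).update(expand(spec))
    return {protocol: sorted(ports) for protocol, ports in grouped.items()}
```

Calling `ports_by_protocol(INTRACLUSTER_PORTS)` returns one sorted list of TCP ports and one of UDP ports. The sketch ignores the From and To columns, so rules that apply only between primary nodes (for example, the etcd ports) still need to be scoped by hand.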

The following ports must be open for communication from outside the cluster.

| Port | Protocol | Usage | From | To |
|---|---|---|---|---|
| 179 | TCP | Topology visualization and traffic engineering using the topology information | Routing Director deployment cluster node IP address | Router IP address to which you want to set up BGP peering from Routing Director. You can use the router management IP address or the router interface IP address. |
| 443 | TCP | Web GUI + API | External user computer/desktop | Web GUI Ingress VIP address(es) |
| 443 | TCP | Active Assurance Test Agent | External network devices | Active Assurance Test Agent VIP address |
| 2200 | TCP | NETCONF | External network devices | Web GUI Ingress VIP address(es) |
| 4189 | TCP | PCE Server | External network devices | PCE Server VIP address |
| 4739 | UDP | Routing Observability | External network devices | IPFIX VIP address |
| 6800 | TCP | Active Assurance Test Agent | External network devices | Active Assurance Test Agent VIP address |
| 32767 | TCP | gNMI | External network devices | Web GUI Ingress VIP address(es) |
| 17002 | TCP | Routing Observability | External network devices | Routing observability cRPD load balancer IP address |

Web Browser Requirements

Routing Director supports the latest versions of Google Chrome, Mozilla Firefox, and Safari.

We recommend that you use Google Chrome.