VPC to CN2 Communication in AWS EKS

SUMMARY Juniper Cloud-Native Contrail Networking (CN2) release 23.1 supports communication between AWS virtual private cloud (VPC) networks, external networks, and CN2 clusters. This feature only applies to AWS EKS environments using CN2 as the CNI. This article provides information about how CN2 implements this feature.

Understanding Kubernetes and VPC Networks

Typically, you cannot access a Kubernetes workload in an overlay network running on Amazon Elastic Kubernetes Service (EKS) from a VPC. In order to achieve AWS VPC to Kubernetes communication, you must expose the host network of your Kubernetes cluster to the VPC. Although some public cloud Kubernetes distributions offer solutions that support this feature, these solutions are tailored for traditional VM workloads instead of Kubernetes workloads. As a result, these solutions have the following drawbacks:

- You must configure pod IPs as secondary IP addresses on node interfaces. This imposes resource constraints on the nodes, reducing the number of pods that can be supported.
- Services are exposed through public load balancers. Every time a service is created, a load balancer must be instantiated, which can delay the exposure of the service.

CN2 release 23.1 addresses these drawbacks by introducing a Gateway Service Instance (GSI). A GSI is a collection of Amazon Web Services (AWS) and Kubernetes resources that work together to interconnect CN2 with VPC and external networks. When you apply a GSI manifest, CN2 facilitates communication between pods and services in an Amazon EKS cluster and workloads in the same VPC.

Prerequisites

The following are required to enable VPC to CN2 communication:

- A license for cRPD. To purchase a license, visit https://www.juniper.net/us/en/products/routers/containerized-routing-protocol-daemon-crpd.html
  - You must install the license into the EKS cluster as a Secret within the contrail-gsi namespace.
  - The Secret must contain the base64-encoded version of the license under the crpd-license key of the Secret's data field.
  - The following is an example Secret with a reference to a license:

        apiVersion: v1
        kind: Secret
        metadata:
          name: crpd-license
          namespace: contrail-gsi
        data:
          crpd-license: ****** # base64 encoded crpd license

- An EKS cluster running CN2 release 23.1 or later
- AWS Identity and Access Management (IAM) role access. See the following link for instructions about how to configure a service account to assume an IAM role: Configure a Service Account to Assume an IAM role
- Nodes within the EKS cluster must have the label: core.juniper.net/crpd-node: "".
  - The controller schedules the cRPD container only on nodes that have the core.juniper.net/crpd-node key. After you add this label to a node, none of the CN2 controllers (including the vRouter) run on that node. This ensures that the proper number of nodes is reserved for cRPD containers.
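The license and node-label prerequisites can be sketched with standard kubectl commands. This is a minimal sketch, not a Juniper-provided procedure: the function names are hypothetical, the license file path and node name are values you supply, and it assumes that the file holds the license in raw form (kubectl base64-encodes it when it builds the Secret) and that the contrail-gsi namespace already exists.

```shell
#!/bin/sh
# Hypothetical helper functions; only the kubectl commands themselves are standard.

# Create the cRPD license Secret in the contrail-gsi namespace.
# kubectl base64-encodes the file contents and stores them under the
# crpd-license key of the Secret's data field.
create_crpd_license_secret() {
    license_file="$1"   # path to the raw license file (assumption)
    kubectl create secret generic crpd-license \
        --namespace contrail-gsi \
        --from-file=crpd-license="$license_file"
}

# Reserve a node for cRPD by adding the label with an empty value.
label_crpd_node() {
    node_name="$1"
    kubectl label node "$node_name" core.juniper.net/crpd-node=
}

# Example usage (placeholder values):
#   create_crpd_license_secret ./crpd.lic
#   label_crpd_node ip-10-0-1-23.us-east-2.compute.internal
```

Because CN2 components stop running on a labeled node, label only the nodes that you intend to dedicate to cRPD.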

Gateway Service Instance Components

The following is an example of a gateway service instance (GSI) manifest:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: contrail-gsi
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: contrail-gsi-serviceaccount
      namespace: contrail-gsi
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: contrail-gsi-role
    rules:
    - apiGroups:
      - '*'
      resources:
      - '*'
      verbs:
      - '*'
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: contrail-gsi-rolebinding
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: contrail-gsi-role
    subjects:
    - kind: ServiceAccount
      name: contrail-gsi-serviceaccount
      namespace: contrail-gsi
    ---
    apiVersion: plugins.juniper.net/v1alpha1
    kind: GSIPlugin
    metadata:
      name: contrail-gsi-plugin
      namespace: contrail-gsi
    spec:
      awsRegion: "us-east-2"
      iamRoleARN: "arn:aws:iam::********"
      vpcID: "vpc-**********"
      common:
        containers:
        - image: <repository>/contrail-gsi-plugin:<tag>
          imagePullPolicy: Always
          name: contrail-gsi-plugin
        initContainers:
        - command:
          - kubectl
          - apply
          - -k
          - /crd
          image: <repository>/contrail-gsi-plugin-crdloader:<tag>
          imagePullPolicy: Always
          name: contrail-gsi-plugin-crdloader
        serviceAccountName: contrail-gsi-serviceaccount

Note that the awsRegion, iamRoleARN, and vpcID fields are user-defined. The iamRoleARN value is the Amazon Resource Name (ARN) of the IAM Role that you create as part of the prerequisites for this feature.
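One way to look up values for the user-defined fields and then apply the manifest is sketched here with the AWS CLI and kubectl. The function names, cluster name, role name, and manifest file name are hypothetical placeholders; the sketch assumes that the AWS CLI is configured with credentials that can read the EKS cluster and the IAM role.

```shell
#!/bin/sh
# Hypothetical helper functions built on standard AWS CLI and kubectl commands.

# Print the VPC ID of an EKS cluster, for use in the vpcID field.
eks_cluster_vpc_id() {
    cluster_name="$1"
    region="$2"
    aws eks describe-cluster --name "$cluster_name" --region "$region" \
        --query 'cluster.resourcesVpcConfig.vpcId' --output text
}

# Print the ARN of an IAM role, for use in the iamRoleARN field.
iam_role_arn() {
    role_name="$1"
    aws iam get-role --role-name "$role_name" \
        --query 'Role.Arn' --output text
}

# Apply the GSI manifest, then list the GSIPlugin objects it created.
apply_gsi_manifest() {
    manifest_file="$1"
    kubectl apply -f "$manifest_file" &&
        kubectl get gsiplugins -n contrail-gsi
}

# Example usage (placeholder values):
#   eks_cluster_vpc_id my-cluster us-east-2
#   iam_role_arn my-gsi-role
#   apply_gsi_manifest gsi.yaml
```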

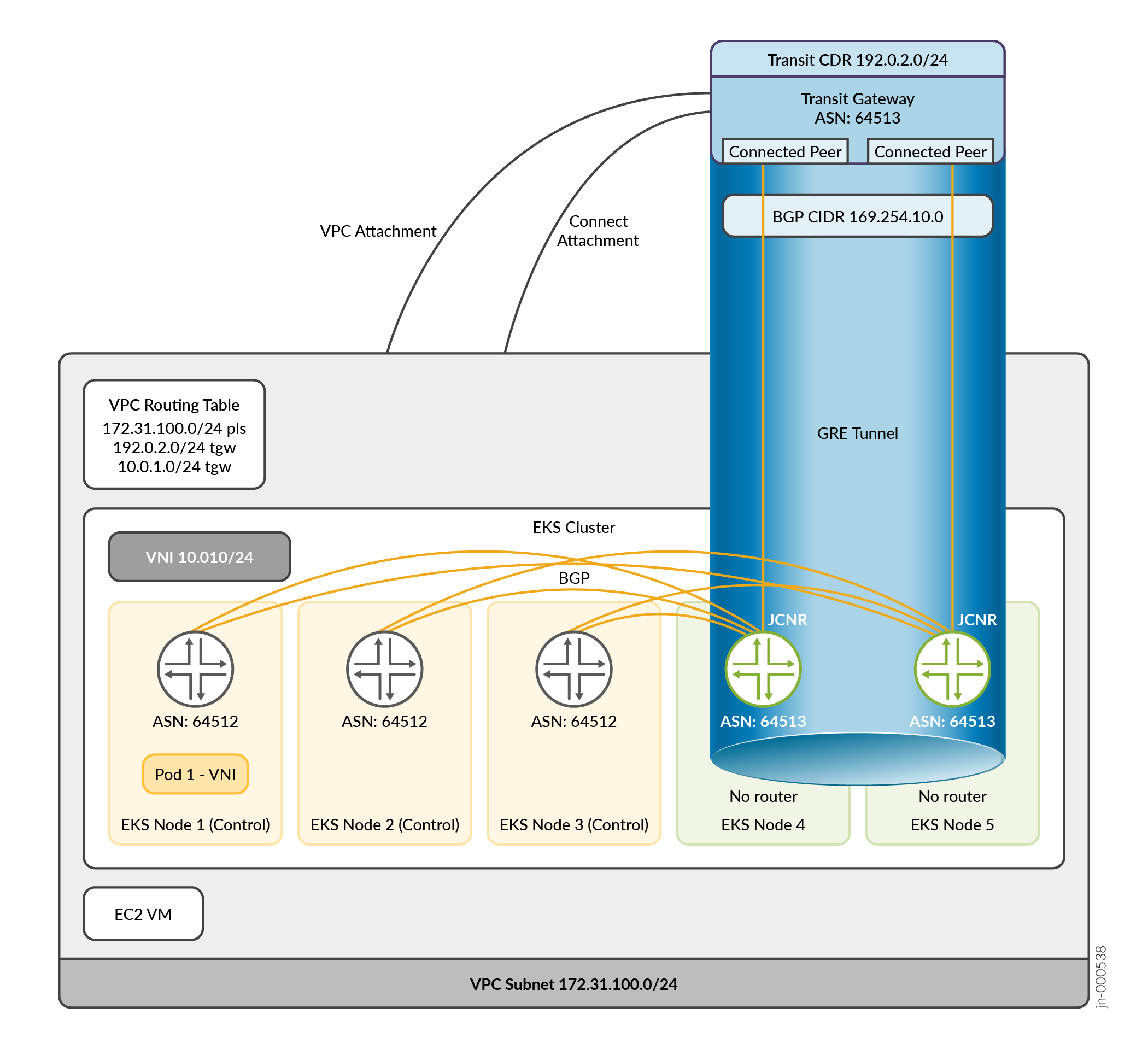

Applying a GSI manifest creates a custom controller that creates and manages the following:

AWS resources:

- Transit gateway: A transit gateway is a network transit hub that can interconnect VPCs. A transit gateway can have Attachments, which are one or more VPCs.
- Connect attachment: A transit gateway connect attachment establishes a connection between a transit gateway and third-party virtual appliances (JCNRs) running in a VPC. After you create a connect attachment, one or more Generic Routing Encapsulation (GRE) tunnels, or Transit Gateway Connect peers, can be created on the Connect attachment to connect the transit gateway and the third-party appliance. A Transit Gateway Connect peer consists of two BGP peering sessions over the GRE tunnel, which provide routing redundancy. After you install the transit gateway resource, CN2 performs this process automatically.
- VPC attachment: A VPC Attachment attaches to a transit gateway. When you attach a VPC to a transit gateway, resource and routing rules apply to that gateway.

Kubernetes resource:

- Connected peers: A transit gateway connect peer is a GRE tunnel that facilitates communication between a transit gateway and a third-party appliance or JCNR.

CN2 GSI resource:

- Juniper Cloud-Native Router (JCNR): JCNR is an extension of CN2 that acts as a gateway between EC2 instances and other AWS resources and the EKS cluster running CN2.

JCNRs do the following:

- Connect via Multiprotocol BGP (MP-BGP) to CN2 control nodes and use MPLSoUDP in the data plane
- Provide active/active L3 and L4 load balancing across the CN2 EKS nodes

You can scale JCNRs and their connected peers to a maximum of four instances. In this case, a transit gateway provides active/active L3 and L4 load balancing across the JCNRs. As an example of JCNR functionality, if you add a label to a virtual network (VN) that you want to expose, the JCNR advertises that VN's subnet to the transit gateway. A next-hop route between the VN's subnet and the transit gateway is added to the VPC's routing table. As a result, the VN is routed in the VPC's network and is accessible to workloads within the VPC. This functionality is similar to a traditional physical SDN gateway, where the transit gateway and the JCNR act as a physical gateway between the EKS cluster and the rest of the AWS environment without the drawbacks of hostNetwork.

The following illustration depicts connectivity in a typical Amazon EKS cluster. Note that CN2 automatically generates the VPC Routing Table.

Custom Resource Implementation

CN2 implements this feature through the following custom resources (CRs):

- TransitGateway: Represents the AWS TransitGateway resource.
- Route: Represents an entry in a VPC routing table. A Route is an internally-created object that doesn't require user input.
- ConnectedPeer: Represents an appliance (JCNR in CN2) that establishes BGP sessions with the transit gateway. A ConnectedPeer is an internally-created object that doesn't require user input.

The following is an example of a TransitGateway manifest.

    apiVersion: core.gsi.juniper.net/v1
    kind: TransitGateway
    metadata:
      name: tgw1
      namespace: contrail-gsi
    spec:
      subnetIDs:
      - subnet-*******
      - subnet-*******
      - subnet-*******
      connectedPeerScale: 1
      transitASN: 64513
      peerASN: 64513
      transitCIDR: 192.0.2.0/24
      bgpCidr: 169.254.10.0
      controlNodeASN: 64512
      licenseSecretName: crpd-license
      controllerContainer:
        name: controller
        command: ["/manager", "-mode=client"]
        image: <repository>/contrail-gsi-plugin:<tag>
        imagePullPolicy: Always
      crpdContainer:
        name: crpd
        image: <repository>/crpd:<tag>
      initContainer:
        name: init
        image: <repository>/busybox:<tag>

Use the following command for detailed information about the TransitGateway spec:

    kubectl explain transitgateway.spec
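Before and after creating a TransitGateway object, you might want to validate the manifest and inspect what the API server stored. The following is a minimal sketch using standard kubectl options; the function names and the manifest file name are hypothetical, and it assumes that the transitgateway resource name resolves to the CR shown above, as it does in the kubectl explain command.

```shell
#!/bin/sh
# Hypothetical helper functions; the kubectl options used are standard.

# Ask the API server to validate the manifest without persisting it.
validate_transit_gateway() {
    manifest_file="$1"
    kubectl apply --dry-run=server -f "$manifest_file"
}

# Print the stored TransitGateway object, including its status.
show_transit_gateway() {
    tgw_name="$1"
    kubectl get transitgateway "$tgw_name" -n contrail-gsi -o yaml
}

# Example usage (placeholder file name; tgw1 is the name in the example manifest):
#   validate_transit_gateway tgw.yaml
#   show_transit_gateway tgw1
```

A server-side dry run requires that the GSI custom resource definitions are already installed, so run it after the GSI manifest has been applied.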

Custom Controller Implementation

The GSI is implemented through custom Kubernetes controllers with a client/server plugin. The server-side custom controllers run on the CN2 control plane nodes. The client runs alongside JCNR. These controllers are automatically configured when you apply the TransitGateway and GSI objects.

Troubleshooting

This section provides information about troubleshooting various ConnectedPeer connectivity issues, custom controller issues, and workload reachability issues.

For custom controller issues:

- After you apply the GSI manifest, ensure that all of the custom controller pods associated with the GSI manifest (Server, Client) are active.

      kubectl get pods -n contrail-gsi -l app=contrail-gsi-plugin

  The CLI output should show three active controller pods in a regular EKS deployment. If you don't receive the correct CLI output, use the following command to verify that the GSI plugin is installed.

      kubectl get gsiplugins -n contrail-gsi

  Check the logs of the contrail-k8s-deployer pod. Filter results for the GSI plugin reconciler and look for any errors.
- Ensure that the custom controllers can make create, read, update, delete (CRUD) requests to the required AWS resources.
  - Check the logs of the contrail-gsi pods; one of the three pods should be active and will output log messages.
  - Verify that the logs of the active pod don't contain errors about not being able to make API calls to AWS. If you do see these errors, ensure that the IAM role granted to the contrail-gsi-serviceaccount is configured properly (refer to the Prerequisites section of this topic).
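The log checks above can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, the pod label is the one used in the kubectl get pods command above, and filtering on the word "error" is an assumption about how the controllers log failures.

```shell
#!/bin/sh
# Hypothetical helper functions; the kubectl options used are standard.

# Show recent log lines that mention an error from the GSI controller pods.
# Note: grep exits with a non-zero status when no line matches.
gsi_controller_errors() {
    kubectl logs -n contrail-gsi -l app=contrail-gsi-plugin \
        --all-containers --prefix --tail=200 | grep -i 'error'
}

# Print the service account so that you can review its annotations and
# confirm that it is associated with the intended IAM role.
show_gsi_service_account() {
    kubectl get serviceaccount contrail-gsi-serviceaccount \
        -n contrail-gsi -o yaml
}

# Example usage:
#   gsi_controller_errors
#   show_gsi_service_account
```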

For TransitGateway issues:

- After you install the TransitGateways, ensure that their statuses change from "pending" to "available."
- Ensure that the ConnectedPeer/cRPD pod is active.

      kubectl get pods -n contrail-gsi -l app=connectedpeer

  If no pods show up in the output, ensure that a node is available for the ConnectedPeer pod to be scheduled on. A valid node contains the following label: core.juniper.net/crpd-node: "".
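These TransitGateway checks can also be run from the command line. The sketch below uses the AWS CLI to read the state of the transit gateways in a region and kubectl to list the nodes that carry the cRPD label; the function names are hypothetical, and the sketch assumes AWS CLI credentials with permission to describe transit gateways.

```shell
#!/bin/sh
# Hypothetical helper functions built on standard AWS CLI and kubectl commands.

# Print the ID and state (for example, pending or available) of each
# transit gateway in the given region.
transit_gateway_states() {
    region="$1"
    aws ec2 describe-transit-gateways --region "$region" \
        --query 'TransitGateways[].[TransitGatewayId,State]' --output text
}

# List nodes that have the core.juniper.net/crpd-node label key.
crpd_nodes() {
    kubectl get nodes -l core.juniper.net/crpd-node
}

# Example usage (placeholder region):
#   transit_gateway_states us-east-2
#   crpd_nodes
```

If crpd_nodes returns no nodes, the ConnectedPeer pod has nowhere to be scheduled.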

For ConnectedPeer issues:

- After a ConnectedPeer pod is active, ensure that the cRPD can establish BGP sessions with the CN2 control nodes and the AWS transit gateway. Run the following command in the CLI of the cRPD:

      show bgp summary

  This process might fail for the following reasons:
  - A valid, active license is not installed in the cluster
  - IP connectivity for BGP is not correct
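One possible way to reach the cRPD CLI from outside the pod is sketched below. It rests on several assumptions: the function names are hypothetical, the container is named crpd as in the crpdContainer section of the example TransitGateway manifest, and the cRPD image provides a cli binary that accepts an operational command as arguments.

```shell
#!/bin/sh
# Hypothetical helper functions; the container name and the cli invocation
# are assumptions, as described above.

# Print the name of the first ConnectedPeer pod, in pod/<name> form.
first_connected_peer_pod() {
    kubectl get pods -n contrail-gsi -l app=connectedpeer -o name | head -n 1
}

# Run "show bgp summary" in the cRPD container of the given pod.
crpd_bgp_summary() {
    pod_name="$1"
    kubectl exec -n contrail-gsi "$pod_name" -c crpd -- cli show bgp summary
}

# Example usage:
#   crpd_bgp_summary "$(first_connected_peer_pod)"
```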

For workload reachability issues:

- If you cannot access an EKS cluster from an EC2 instance:
  - Ensure that the workload's VN is exposed to the TransitGateway.
  - Ensure that the route from the exposed VN to the VPC appears in the VPC's routing table.
  - Ensure that the EC2 instance has security groups configured to access the CIDR of the VN.
    - The custom controllers automatically create one security group (gsi-sg) that allows access to all of the routes exposed to a transit gateway.
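The route and security group checks can be sketched with the AWS CLI. The function names are hypothetical and the VPC ID and region are placeholders; the sketch assumes AWS CLI credentials with permission to describe route tables and security groups.

```shell
#!/bin/sh
# Hypothetical helper functions built on standard AWS CLI commands.

# Print destination CIDR, transit gateway ID, and state for every route in
# the VPC's route tables. Routes for exposed VNs should list a transit gateway.
vpc_routes() {
    vpc_id="$1"
    region="$2"
    aws ec2 describe-route-tables --region "$region" \
        --filters "Name=vpc-id,Values=$vpc_id" \
        --query 'RouteTables[].Routes[].[DestinationCidrBlock,TransitGatewayId,State]' \
        --output text
}

# Print the ID and name of the gsi-sg security group in the VPC.
gsi_security_group() {
    vpc_id="$1"
    region="$2"
    aws ec2 describe-security-groups --region "$region" \
        --filters "Name=group-name,Values=gsi-sg" "Name=vpc-id,Values=$vpc_id" \
        --query 'SecurityGroups[].[GroupId,GroupName]' --output text
}

# Example usage (placeholder values):
#   vpc_routes vpc-0123456789abcdef0 us-east-2
#   gsi_security_group vpc-0123456789abcdef0 us-east-2
```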