Cloud-Native Router Operator Service Module: VPC Gateway

The Cloud-Native Router Operator Service Module is an operator framework that we use to develop cRPD applications and solutions. This section describes how to use the Service Module to implement a VPC gateway between your Amazon EKS cluster and your on-premises Kubernetes cluster.

Cloud-Native Router VPC Gateway Overview

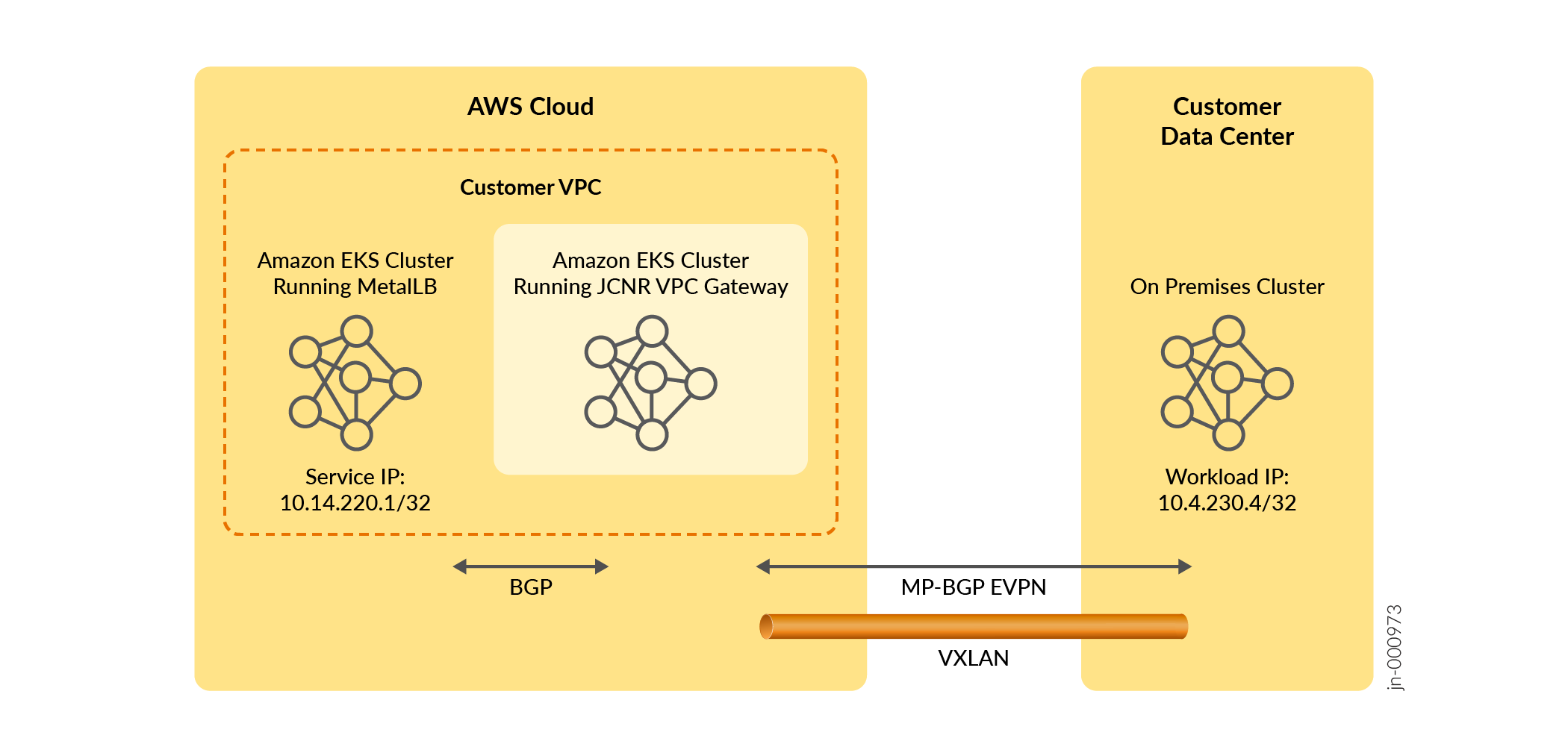

We provide the Cloud-Native Router Operator Service Module to install JCNR (with a BYOL license) on an Amazon EKS cluster and to configure it to act as an EVPN-VXLAN VPC Gateway between a separate Amazon EKS cluster running MetalLB and an on-premises Kubernetes cluster (Figure 1).

Once you configure the VPC Gateway custom resource with information on your MetalLB cluster and your on-premises Kubernetes cluster, the VPC Gateway establishes a BGP session with your MetalLB cluster and establishes a BGP EVPN session with your on-premises Kubernetes cluster. Routes learned from the MetalLB cluster are re-advertised to the on-premises cluster using EVPN Type 5 routes. Routes learned from the on-premises cluster are leaked into the route tables of the routing instance for the MetalLB cluster.

The configuration example we'll use in this section connects workloads at 10.4.230.4/32 in the on-premises cluster to services at 10.14.220.1/32 in the MetalLB cluster.

Configuring the connectivity between the AWS Cloud and the Customer Data Center is not covered in this procedure. Use your preferred AWS method for connectivity.

The VPC Gateway custom resource automatically installs Cloud-Native Router with a configuration that is specific to this application. You don't need to install Cloud-Native Router explicitly and you don't need to configure the Cloud-Native Router installation Helm chart.

Install the Cloud-Native Router VPC Gateway

This is the main procedure. Start here.

- Prepare the clusters.

- Prepare the Cloud-Native Router VPC Gateway cluster. See Prepare the Cloud-Native Router VPC Gateway Cluster.

- Prepare the MetalLB cluster. See Prepare the MetalLB Cluster.

- Prepare the on-premises cluster. See Prepare the On-Premises Cluster.

After preparing the clusters, you can start installation of the Cloud-Native Router VPC Gateway. Execute the remaining steps in the Cloud-Native Router VPC Gateway cluster.

- Download and install the Cloud-Native Router Service Module Helm chart on the cluster.

You can download the Cloud-Native Router Service Module Helm chart from the Juniper Networks software download site. See Cloud-Native Router Software Download Packages.

- Install the downloaded Helm chart.

helm install vpcgwy Juniper_Cloud_Native_Router_Service_Module_<release>.tgz

Note: The provided Helm chart installs the Cloud-Native Router VPC Gateway on cores 2, 3, 22, and 23. Therefore, ensure that the nodes in your cluster have at least 24 cores and that the specified cores are free to use.
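As a rough pre-flight check for the note above, the following sketch tests whether the pinned core IDs exist on a node. The helper name has_required_cores is hypothetical and not part of any Juniper tooling. It only checks that the core IDs exist, not that the cores are free to use.

```shell
# Cores the chart pins the gateway to, taken from the note above.
REQUIRED_CORES="2 3 22 23"

# has_required_cores is a hypothetical helper for illustration.
# It succeeds only if every required core ID exists on a node with the given core count.
has_required_cores() {
  local core_count="$1"
  local core
  for core in $REQUIRED_CORES; do
    # Core IDs are zero-based, so core N exists only when N < core_count.
    if [ "$core" -ge "$core_count" ]; then
      echo "core $core does not exist on a ${core_count}-core node"
      return 1
    fi
  done
  echo "cores $REQUIRED_CORES exist on a ${core_count}-core node"
}

# On a worker node, nproc reports the number of processing units available
# to the current process, which can be lower than the physical core count.
has_required_cores "$(nproc)" || true
```

Run the last line on each worker node, or pass a known core count directly, for example has_required_cores 24.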

Check that the controller-manager and the contrail-k8s-deployer pods are up.

kubectl get pods -A

    NAMESPACE         NAME                                        READY   STATUS
    svcmodule-system  controller-manager-67898d794d-4cpsw         2/2     Running
    cert-manager      cert-manager-5bd57786d4-mf7hq               1/1     Running
    cert-manager      cert-manager-cainjector-57657d5754-5d2xc    1/1     Running
    cert-manager      cert-manager-webhook-7d9f8748d4-p482n       1/1     Running
    contrail-deploy   contrail-k8s-deployer-546587dcbc-bjbrg      1/1     Running
    kube-system       aws-node-dhsgv                              2/2     Running
    kube-system       aws-node-n6kcx                              2/2     Running
    kube-system       coredns-54d6f577c6-m7q8h                    1/1     Running
    kube-system       coredns-54d6f577c6-qc76c                    1/1     Running
    kube-system       eks-pod-identity-agent-6k6xj                1/1     Running
    kube-system       eks-pod-identity-agent-rvqz7                1/1     Running
    kube-system       kube-proxy-nqpsd                            1/1     Running
    kube-system       kube-proxy-vzbnv                            1/1     Running
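Scanning a long pod list by eye is error-prone. Here is a minimal sketch that filters the pod listing for anything not fully ready. The helper name not_ready_pods is hypothetical, and it assumes the four-column layout shown above (namespace, name, ready count, status) with the header row removed.

```shell
# not_ready_pods is a hypothetical helper for illustration.
# It reads pod rows (NAMESPACE NAME READY STATUS ...) on stdin and prints
# namespace/name for every pod that is not Running or not fully ready.
not_ready_pods() {
  awk '{
    split($3, ready, "/")
    if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2
  }'
}

# Two rows copied from the sample output above.
sample_pods='svcmodule-system controller-manager-67898d794d-4cpsw 2/2 Running
contrail-deploy contrail-k8s-deployer-546587dcbc-bjbrg 1/1 Running'

if [ -z "$(printf '%s\n' "$sample_pods" | not_ready_pods)" ]; then
  echo "all pods are ready"
fi
```

Against a live cluster, you could pipe kubectl get pods -A --no-headers into not_ready_pods. Pods that finished normally (status Completed) would also be listed, so treat the result as a prompt to look closer rather than as a failure.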

- Configure the Cloud-Native Router VPC Gateway custom resource.

This custom resource contains information on the MetalLB cluster and the on-premises cluster.

- Create a YAML file that contains the desired configuration. We'll put our Cloud-Native Router VPC Gateway pods into a namespace that we'll call jcnr-gateway.

The YAML file has the following format:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: jcnr-gateway
    ---
    apiVersion: workflow.svcmodule.juniper.net/v1
    kind: VpcGateway
    metadata:
      name: vpc-gw
      namespace: jcnr-gateway
    spec:
      <see table>

Table 1 describes the fields in the spec section. In the spec definition, application refers to the MetalLB cluster and client refers to the on-premises cluster.

Table 1: Spec Descriptions

| Spec Field | Description |
| --- | --- |
| applicationTopology | This section contains information on the MetalLB cluster. |
| applicationInterface | The name of the interface connecting to the MetalLB cluster. |
| bgpSpeakerType | Specify metallb when connecting to the MetalLB cluster. |
| clusters | Identifies the MetalLB cluster through the kubeconfigSecretName and name fields. |
| kubeconfigSecretName | The secret containing the kubeconfig of the MetalLB cluster. |
| name | The name of the MetalLB cluster. |
| enableV6 | (Optional) True or false. Enables or disables IPv6 in the MetalLB cluster. Default is false. |
| neighbourDiscovery | (Optional) True or false. Governs how BGP neighbors (BGP speakers from the MetalLB cluster) are determined. When set to true, BGP neighbors with addresses specified in sessionPrefix or with addresses in the application interface's subnet are accepted. When set to false, the remote MetalLB cluster's cRPD pod IP is used as the BGP neighbor. Default is false. |
| routePolicyOverride | (Optional) True or false. When set to true, a route policy called "export-onprem" is used to govern what MetalLB cluster routes are exported to the on-premises cluster. This gives you the opportunity to create your own export policy. You must create this policy manually and call it "export-onprem". Default is false, which means that all MetalLB cluster routes are exported to the on-premises cluster. |
| sessionPrefix | (Optional) Used when neighbourDiscovery is set to true. When present, it indicates the CIDR from which BGP sessions from the MetalLB cluster are accepted. Default is to accept BGP sessions from BGP neighbors in the application interface's subnet. |
| client | Information related to the on-premises cluster. |
| address | The BGP speaker IP address of the on-premises cluster. The Cloud-Native Router VPC Gateway establishes a direct eBGP session with this address. This eBGP session is used to learn the route to the loopback address, which is used to establish the subsequent BGP EVPN session. |
| asn | The AS number of the eBGP speaker in the client cluster. The Cloud-Native Router VPC Gateway validates this when establishing the direct eBGP session with the BGP speaker in the on-premises cluster. |
| loopbackAddress | The loopback address of the BGP speaker in the on-premises cluster. The Cloud-Native Router VPC Gateway uses this IP address to establish a BGP EVPN session with the BGP speaker in the on-premises cluster. |
| myASN | The local AS number that the Cloud-Native Router VPC Gateway uses for the direct eBGP session with the BGP speaker in the on-premises cluster. |
| routeTarget | The route target for the EVPN routes in the on-premises cluster. |
| vrrp | Always set to true. This enables VRRP on interfaces towards the on-premises cluster. |
| clientInterface | The name of the interface connecting to the on-premises cluster. |
| dpdkDriver | Set to vfio-pci. |
| loopbackIPPool | The IP address pool used for assigning IP addresses to the cRPD instances created in the cluster (in CIDR format). Note: The number of addresses in the pool must be at least one more than the number of replicas. |
| nodeSelector | (Optional) Used in conjunction with a node's labels to determine whether the VPC Gateway pod can run on a node. This selector must match a node's labels for the pod to be scheduled on that node. |
| replicas | (Optional) The number of JCNRs created. Default is 1. |

Armed with the MetalLB kubeconfig, the Cloud-Native Router VPC Gateway has sufficient information to configure BGP sessions automatically with the MetalLB cluster. You don't need to provide any parameters other than what's listed in the table.
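The loopbackIPPool sizing rule in Table 1 (at least one more address than the number of replicas) is simple arithmetic on the prefix length. The sketch below shows it for IPv4. The helper names pool_size and pool_fits_replicas are hypothetical. It counts every address in the block, and this document does not say whether the gateway skips any, so treat the count as an upper bound.

```shell
# pool_size: hypothetical helper. Prints the number of IPv4 addresses in a CIDR block.
pool_size() {
  local prefix_len="${1#*/}"
  echo $(( 1 << (32 - prefix_len) ))
}

# pool_fits_replicas: hypothetical helper. Succeeds when the pool holds at
# least replicas + 1 addresses, as the note in Table 1 requires.
pool_fits_replicas() {
  local cidr="$1" replicas="$2"
  [ "$(pool_size "$cidr")" -ge $(( replicas + 1 )) ]
}

# Values from the example configuration: a /28 pool and one replica.
echo "10.14.140.0/28 holds $(pool_size 10.14.140.0/28) addresses"
if pool_fits_replicas 10.14.140.0/28 1; then
  echo "pool is large enough for 1 replica"
fi
```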

Here's an example configuration:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: jcnr-gateway
    ---
    apiVersion: workflow.svcmodule.juniper.net/v1
    kind: VpcGateway
    metadata:
      name: vpc-gw
      namespace: jcnr-gateway
    spec:
      dpdkDriver: vfio-pci
      replicas: 1
      clientInterface: eth3
      loopbackIPPool: 10.14.140.0/28
      applicationTopology:
        applicationInterface: eth2
        bgpSpeakerType: metallb
        clusters:
          - name: metallb-1
            kubeconfigSecretName: metallb-cluster-kubeconfig
      client:
        asn: 65010
        myASN: 65000
        address: 10.14.205.158
        loopbackAddress: 10.14.140.200
        routeTarget: target-1-4
        vrrp: true

- Apply the YAML file to the cluster.

kubectl apply -f vpcGateway.yaml

- Check the pods.

kubectl get pods -A

    NAMESPACE         NAME                                                      READY   STATUS
    svcmodule-system  controller-manager-67898d794d-4cpsw                       2/2     Running
    cert-manager      cert-manager-5bd57786d4-mf7hq                             1/1     Running
    cert-manager      cert-manager-cainjector-57657d5754-5d2xc                  1/1     Running
    cert-manager      cert-manager-webhook-7d9f8748d4-p482n                     1/1     Running
    contrail-deploy   contrail-k8s-deployer-546587dcbc-bjbrg                    1/1     Running
    contrail          vpc-gw-crpdgroup-0-x-contrail-vrouter-nodes-s9wkk         2/2     Running
    contrail          vpc-gw-crpdgroup-0-x-contrail-vrouter-nodes-vrdpdk-jczh5  1/1     Running
    jcnr              jcnr-gateway-vpc-gw-crpdgroup-0-0                         2/2     Running
    kube-system       aws-node-dhsgv                                            2/2     Running
    kube-system       aws-node-n6kcx                                            2/2     Running
    kube-system       coredns-54d6f577c6-m7q8h                                  1/1     Running
    kube-system       coredns-54d6f577c6-qc76c                                  1/1     Running
    kube-system       eks-pod-identity-agent-6k6xj                              1/1     Running
    kube-system       eks-pod-identity-agent-rvqz7                              1/1     Running
    kube-system       kube-proxy-nqpsd                                          1/1     Running
    kube-system       kube-proxy-vzbnv                                          1/1     Running


- Verify your installation.

Find the name of the configlet:

kubectl get nodeconfiglet -n jcnr

    NAME                 AGE
    vpc-gw-crpdgroup-0   8h

kubectl describe nodeconfiglet -n jcnr vpc-gw-crpdgroup-0

    Name:         vpc-gw-crpdgroup-0
    Namespace:    jcnr
    Labels:       core.juniper.net/nodeName=ip-10-75-66-162.us-west-2.compute.internal
    Annotations:  <none>
    API Version:  configplane.juniper.net/v1
    Kind:         NodeConfiglet
    Metadata:
      Creation Timestamp:  2024-06-24T23:32:35Z
      Finalizers:
        node-configlet.finalizers.deployer.juniper.net
      Generation:  26
      Managed Fields:
        API Version:  configplane.juniper.net/v1
        Fields Type:  FieldsV1
        fieldsV1:
          f:status:
            .:
            f:message:
            f:status:
        Manager:      manager
        Operation:    Update
        Subresource:  status
        Time:         2024-06-24T23:32:36Z
        API Version:  configplane.juniper.net/v1
        Fields Type:  FieldsV1
        fieldsV1:
          f:metadata:
            f:finalizers:
              .:
              v:"node-configlet.finalizers.deployer.juniper.net":
            f:ownerReferences:
              .:
              k:{"uid":"00c67217-87e7-434d-8d6a-8256f2d9d206"}:
          f:spec:
            .:
            f:clis:
            f:nodeName:
        Manager:    manager
        Operation:  Update
        Time:       2024-06-25T02:22:26Z
      Owner References:
        API Version:           configplane.juniper.net/v1
        Block Owner Deletion:  true
        Controller:            true
        Kind:                  JcnrInstance
        Name:                  vpc-gw-crpdgroup-0
        UID:                   00c67217-87e7-434d-8d6a-8256f2d9d206
      Resource Version:        133907
      UID:                     340a19d0-9de5-414d-b2ac-c3831203877c
    Spec:
      Clis:
        set interfaces eth2 unit 0 family inet address 10.14.207.30/22
        set interfaces eth2 mac 52:54:00:a4:c3:85
        set interfaces eth2 mtu 9216
        set interfaces eth3 unit 0 family inet address 10.14.205.159/22
        set interfaces eth3 mac 52:54:00:ee:4b:3f
        set interfaces eth3 mtu 9216
        set interfaces lo0 unit 0 family inet address 10.14.140.1/32
        set interfaces lo0 mtu 9216
        set policy-options policy-statement default-rt-to-aws-export then reject
        set policy-options policy-statement default-rt-to-aws-export term awsv4 from family inet
        set policy-options policy-statement default-rt-to-aws-export term awsv4 from protocol evpn
        set policy-options policy-statement default-rt-to-aws-export term awsv4 then accept
        set policy-options policy-statement default-rt-to-aws-export term awsv6 from family inet6
        set policy-options policy-statement default-rt-to-aws-export term awsv6 from protocol evpn
        set policy-options policy-statement default-rt-to-aws-export term awsv6 then accept
        set policy-options policy-statement export-direct then reject
        set policy-options policy-statement export-direct term directly-connected from protocol direct
        set policy-options policy-statement export-direct term directly-connected then accept
        set policy-options policy-statement export-evpn then reject
        set policy-options policy-statement export-evpn term evpn-connected from protocol evpn
        set policy-options policy-statement export-evpn term evpn-connected then accept
        set policy-options policy-statement export-onprem then reject
        set policy-options policy-statement export-onprem term learned-from-bgp from protocol bgp
        set policy-options policy-statement export-onprem term learned-from-bgp then accept
        set routing-instances application-ri protocols bgp group vpc-gw-application local-address 10.14.207.30
        set routing-instances application-ri protocols bgp group vpc-gw-application export export-evpn
        set routing-instances application-ri protocols bgp group vpc-gw-application peer-as 64513
        set routing-instances application-ri protocols bgp group vpc-gw-application local-as 64512
        set routing-instances application-ri protocols bgp group vpc-gw-application multihop
        set routing-instances application-ri protocols bgp group vpc-gw-application allow 10.14.207.29/22
        set routing-instances application-ri protocols evpn ip-prefix-routes advertise direct-nexthop
        set routing-instances application-ri protocols evpn ip-prefix-routes encapsulation vxlan
        set routing-instances application-ri protocols evpn ip-prefix-routes vni 4096
        set routing-instances application-ri protocols evpn ip-prefix-routes export export-onprem
        set routing-instances application-ri protocols evpn ip-prefix-routes route-attributes community export-action allow
        set routing-instances application-ri protocols evpn ip-prefix-routes route-attributes community import-action allow
        set routing-instances application-ri interface eth2
        set routing-instances application-ri vrf-target target:1:4
        set routing-instances application-ri instance-type vrf
        set routing-options route-distinguisher-id 10.14.140.1
        set routing-options router-id 10.14.140.1
        set protocols bgp group vpc-gw-client-lo local-address 10.14.140.1
        set protocols bgp group vpc-gw-client-lo peer-as 64512
        set protocols bgp group vpc-gw-client-lo local-as 64512
        set protocols bgp group vpc-gw-client-lo family evpn signaling
        set protocols bgp group vpc-gw-client-lo neighbor 10.14.140.200
        set protocols bgp group vpc-gw-client-direct export export-direct
        set protocols bgp group vpc-gw-client-direct peer-as 65010
        set protocols bgp group vpc-gw-client-direct local-as 65000
        set protocols bgp group vpc-gw-client-direct multihop
        set protocols bgp group vpc-gw-client-direct neighbor 10.14.205.158
      Node Name:  ip-10-75-66-162.us-west-2.compute.internal
    Status:
      Message:  Configuration committed
      Status:   True
    Events:     <none>


- Access the cRPD pod.

kubectl exec -n jcnr jcnr-gateway-vpc-gw-crpdgroup-0-0 -c crpd -it -- sh

- Enter CLI mode.

cli

- Check the BGP peers.

show bgp summary

    Threading mode: BGP I/O
    Default eBGP mode: advertise - accept, receive - accept
    Groups: 3 Peers: 3 Down peers: 0
    Unconfigured peers: 1
    Table          Tot Paths  Act Paths Suppressed    History Damp State    Pending
    bgp.evpn.0             2          2          0          0          0          0
    inet.0                 4          1          0          0          0          0
    Peer                     AS      InPkt     OutPkt    OutQ   Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...
    10.14.140.200         64512       6514       6471       0       1  2d 0:49:34 Establ
      bgp.evpn.0: 2/2/2/0
      application-ri.evpn.0: 2/2/2/0
    10.14.205.158         65010       6386       6363       0       3  2d 0:01:56 Establ
      inet.0: 1/4/4/0
    10.14.207.29          64513       5758       6352       0       0 1d 23:56:40 Establ
      application-ri.inet.0: 1/1/1/0

The output shows that the Cloud-Native Router VPC Gateway has established BGP sessions:

- with the iBGP speaker in the on-premises cluster at 10.14.140.200 for EVPN routes

- with the eBGP speaker in the on-premises cluster at 10.14.205.158 for the direct eBGP session

- with the MetalLB cluster at 10.14.207.29

- Check the routes to the MetalLB cluster and the on-premises cluster.

Check the route to the Nginx service in the MetalLB cluster:

show route 10.14.220.1

    application-ri.inet.0: 4 destinations, 4 routes (4 active, 0 holddown, 0 hidden)
    + = Active Route, - = Last Active, * = Both

    10.14.220.1/32     *[BGP/170] 1d 00:24:25, localpref 100
                          AS path: 64513 I, validation-state: unverified
                        >  to 10.14.207.29 via eth2

Check the route to the workload in the on-premises cluster:

show route 10.4.230.4

    application-ri.inet.0: 4 destinations, 4 routes (4 active, 0 holddown, 0 hidden)
    + = Active Route, - = Last Active, * = Both

    10.4.230.4/32      *[EVPN/170] 1d 17:51:00
                        >  to 10.14.205.158 via eth3
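If you want to repeat the BGP peer check without reading the summary by hand, the following sketch flags any peer whose state is not Establ. The helper name bgp_peers_not_established is hypothetical, and the parsing assumes the show bgp summary layout shown in this procedure: peer rows start with an IPv4 address and end with the session state. Other releases may format the output differently.

```shell
# bgp_peers_not_established: hypothetical helper for illustration.
# Reads "show bgp summary" text on stdin and prints "<peer> <state>" for
# every peer row whose last field is not Establ.
bgp_peers_not_established() {
  awk '$1 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ && $NF != "Establ" { print $1 " " $NF }'
}

# Peer section copied from the sample output in this procedure.
summary='Peer                     AS      InPkt     OutPkt    OutQ   Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...
10.14.140.200         64512       6514       6471       0       1  2d 0:49:34 Establ
  bgp.evpn.0: 2/2/2/0
  application-ri.evpn.0: 2/2/2/0
10.14.205.158         65010       6386       6363       0       3  2d 0:01:56 Establ
  inet.0: 1/4/4/0
10.14.207.29          64513       5758       6352       0       0 1d 23:56:40 Establ
  application-ri.inet.0: 1/1/1/0'

if [ -z "$(printf '%s\n' "$summary" | bgp_peers_not_established)" ]; then
  echo "all BGP peers are established"
fi
```

To feed it live data, save the CLI output to a file and redirect that file into the function.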


Prepare the MetalLB Cluster

The MetalLB cluster is the Amazon EKS cluster that you ultimately want to connect to your on-premises cluster. Follow this procedure to prepare your MetalLB cluster to establish a BGP session with the Cloud-Native Router VPC Gateway.

- Create the Amazon EKS cluster where you'll be running the MetalLB service.

- Deploy MetalLB on that cluster. MetalLB provides a network load balancer implementation for your cluster.

See https://metallb.universe.tf/configuration/ for information on deploying MetalLB.

- Create the necessary MetalLB resources. As a minimum, you need to create the MetalLB IPAddressPool resource and the MetalLB BGPAdvertisement resource.

- Create the MetalLB IPAddressPool resource.

Here's an example of a YAML file that defines the IPAddressPool resource.

    apiVersion: metallb.io/v1beta1
    kind: IPAddressPool
    metadata:
      name: first-pool
      namespace: metallb-system
    spec:
      addresses:
        - 10.14.220.0/24
      avoidBuggyIPs: true

In this example, MetalLB will assign load balancer IP addresses from the 10.14.220.0/24 range.

Apply the above YAML to the cluster to create the IPAddressPool.

kubectl apply -f ipaddresspool.yaml

- Create the MetalLB BGPAdvertisement resource.

Here's an example of a YAML file that defines the BGPAdvertisement resource.

    apiVersion: metallb.io/v1beta1
    kind: BGPAdvertisement
    metadata:
      name: example
      namespace: metallb-system

The BGPAdvertisement resource advertises your service IP addresses to external routers (for example, to your Cloud-Native Router VPC Gateway).

Apply the above YAML to the cluster to create the BGPAdvertisement resource.

kubectl apply -f bgpadvertisement.yaml


- Create the LoadBalancer service. The LoadBalancer service provides the entry point for external workloads to reach the cluster. You can create any LoadBalancer service of your choice.

Here's an example YAML for an Nginx LoadBalancer service.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
    spec:
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx
              image: <image repo URL>
              ports:
                - name: http
                  containerPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
    spec:
      ports:
        - name: http
          port: 80
          protocol: TCP
          targetPort: 8080
      selector:
        app: nginx
      type: LoadBalancer

Apply the above YAML to the cluster to create the Nginx LoadBalancer service.

kubectl apply -f nginx.yaml

- Verify your installation.

- Take a look at the pods in your cluster.

For example:

kubectl get pods -A

    NAMESPACE        NAME                           READY   STATUS    RESTARTS      AGE
    default          nginx-6d66d85dc4-h6dng         1/1     Running   0             9d
    kube-system      aws-node-vdhv9                 2/2     Running   2 (28d ago)   28d
    kube-system      coredns-54d6f577c6-lbznn       1/1     Running   1 (28d ago)   29d
    kube-system      coredns-54d6f577c6-stljk       1/1     Running   1 (28d ago)   29d
    kube-system      eks-pod-identity-agent-kqtcb   1/1     Running   1 (28d ago)   28d
    kube-system      kube-proxy-fxcjq               1/1     Running   1 (28d ago)   28d
    metallb-system   controller-5c6b6c8447-2jdzc    1/1     Running   0             28d
    metallb-system   speaker-xhkpd                  1/1     Running   0             28d

The example output shows that both MetalLB and Nginx are up.

- Check the assigned external IP address for the Nginx service.

For example:

kubectl get svc nginx

    NAME    TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    nginx   LoadBalancer   10.100.65.169   10.14.220.1   80:30623/TCP   9d

In this example, MetalLB has assigned 10.14.220.1 to the Nginx LoadBalancer service. This is the overlay IP address that workloads in the on-premises cluster can use to reach services in the MetalLB cluster.
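To confirm that an assigned external IP address really comes from the configured IPAddressPool range, you can compare the address with the pool's CIDR. The sketch below does this for IPv4 with plain shell arithmetic. The helper names ip_to_int and ip_in_cidr are hypothetical.

```shell
# ip_to_int: hypothetical helper. Converts a dotted-quad IPv4 address to an integer.
ip_to_int() {
  local old_ifs="$IFS"
  IFS=.
  # Split the address on dots into the positional parameters $1..$4.
  set -- $1
  IFS="$old_ifs"
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# ip_in_cidr: hypothetical helper. Succeeds when the address falls inside the CIDR block.
ip_in_cidr() {
  local ip="$1" network="${2%/*}" prefix_len="${2#*/}"
  # Build the 32-bit netmask for the prefix length.
  local mask=$(( (4294967295 << (32 - prefix_len)) & 4294967295 ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$network") & mask )) ]
}

# Values from the example: the Nginx external IP and the IPAddressPool range.
if ip_in_cidr 10.14.220.1 10.14.220.0/24; then
  echo "10.14.220.1 is inside 10.14.220.0/24"
fi
```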


Prepare the Cloud-Native Router VPC Gateway Cluster

- Create the Amazon EKS cluster that you want to act as the Cloud-Native Router VPC Gateway.

The cluster must meet the system requirements described in System Requirements for EKS Deployment.

Since you're not installing Cloud-Native Router explicitly, you can ignore any requirement that relates to downloading the Cloud-Native Router software package or configuring the Cloud-Native Router Helm chart.

- Ensure all worker nodes in the cluster have identical interface names and identical root passwords.

In this example, we'll use eth2 to connect to the MetalLB cluster and eth3 to connect to the on-premises cluster.

- Once the cluster is up, create a jcnr-secrets.yaml file with the below contents.

    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: jcnr
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: jcnr-secrets
      namespace: jcnr
    data:
      root-password: <add your password in base64 format>
      crpd-license: |
        <add your license in base64 format>

- Follow the steps in Installing Your License to install your Cloud-Native Router BYOL license in the jcnr-secrets.yaml file.

- Enter the base64-encoded form of the root password for your nodes into the jcnr-secrets.yaml file at the following line:

root-password: <add your password in base64 format>

You must enter the password in base64-encoded format. Encode your password as follows:

echo -n "password" | base64 -w0
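A stray trailing newline is a common way to end up with the wrong encoded password. As a small sketch, the helpers below encode with printf (which never appends a newline) and decode the result again so you can confirm the round trip. The names encode_secret and decode_secret are hypothetical, and the -w0 and -d options assume GNU coreutils base64.

```shell
# encode_secret: hypothetical helper. Base64-encodes its argument on a single
# line; printf '%s' avoids adding a trailing newline to the input.
encode_secret() {
  printf '%s' "$1" | base64 -w0
}

# decode_secret: hypothetical helper. Decodes a base64 string for verification.
decode_secret() {
  printf '%s' "$1" | base64 -d
}

encoded="$(encode_secret "password")"
echo "encoded: $encoded"
echo "decoded: $(decode_secret "$encoded")"
```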

- Apply jcnr-secrets.yaml to the cluster.

kubectl apply -f jcnr-secrets.yaml

    namespace/jcnr created
    secret/jcnr-secrets created

- Create the secret for accessing the MetalLB cluster.

- Base64-encode the MetalLB cluster kubeconfig file.

base64 -w0 <metalLB-kubeconfig>

<metalLB-kubeconfig> is the kubeconfig file for the MetalLB cluster. The output of this command is the base64-encoded form of the MetalLB cluster kubeconfig.

- Create the YAML defining the MetalLB cluster kubeconfig secret.

We'll use a namespace called jcnr-gateway, which we'll define later.

    apiVersion: v1
    data:
      kubeconfig: |-
        <base64-encoded kubeconfig of MetalLB cluster>
    kind: Secret
    metadata:
      name: metallb-cluster-kubeconfig
      namespace: jcnr-gateway
    type: Opaque

<base64-encoded kubeconfig of MetalLB cluster> is the base64-encoded output from the previous step.

- Apply the YAML.

kubectl apply -f metallb-cluster-kubeconfig-secret.yaml
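The encode and paste steps above can be combined into one script. The following sketch renders the secret manifest straight from a kubeconfig file. The function name render_kubeconfig_secret is hypothetical. The secret name and namespace match the example in this procedure, and base64 -w0 assumes GNU coreutils.

```shell
# render_kubeconfig_secret: hypothetical helper. Prints a Secret manifest
# whose kubeconfig key holds the base64-encoded contents of the given file.
render_kubeconfig_secret() {
  local kubeconfig_file="$1"
  local encoded
  encoded="$(base64 -w0 "$kubeconfig_file")"
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: metallb-cluster-kubeconfig
  namespace: jcnr-gateway
type: Opaque
data:
  kubeconfig: ${encoded}
EOF
}

# Demonstration with a placeholder file; substitute your real MetalLB kubeconfig.
demo_kubeconfig="$(mktemp)"
printf 'apiVersion: v1\nkind: Config\n' > "$demo_kubeconfig"
render_kubeconfig_secret "$demo_kubeconfig"
rm -f "$demo_kubeconfig"
```

Redirect the output to metallb-cluster-kubeconfig-secret.yaml and apply it as shown above. kubectl can also generate an equivalent manifest with kubectl create secret generic and the --from-file, --dry-run=client, and -o yaml options.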


- Install webhooks.

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.12.0/cert-manager.yaml

- Create the jcnr-aws-configmap. See Cloud-Native Router ConfigMap for VRRP.

Prepare the On-Premises Cluster

The Cloud-Native Router VPC Gateway sets up an eBGP session and an iBGP session with the on-premises cluster:

- The Cloud-Native Router VPC Gateway uses the eBGP session to learn the loopback IP address of the BGP speaker in the on-premises cluster. The Cloud-Native Router VPC Gateway then uses the loopback IP address to establish the subsequent iBGP session.

- The Cloud-Native Router VPC Gateway uses the iBGP session to learn routes to the workloads in the on-premises cluster. For the iBGP session, you must configure the local and peer AS number to be 64512.

The Cloud-Native Router VPC Gateway does not impose any restrictions on the on-premises cluster as long as you configure it to establish the BGP sessions with the Cloud-Native Router VPC Gateway as described above and to expose routes to the desired workloads.

We don't cover configuring the on-premises cluster because that's very device-specific. You should configure the following, however, in order to be consistent with our ongoing example:

- an eBGP speaker at 10.14.205.158 for the eBGP session

- an iBGP speaker at 10.14.140.200 for exchanging EVPN routes

- workloads reachable at 10.4.230.4/32