Upgrade Apstra on New VM (VM-VM)

Upgrading Apstra on a new VM provides Ubuntu Linux OS fixes, including security updates. You need Apstra OS admin privileges and Apstra admin group permissions to perform the upgrade.

Step 1: Pre-Upgrade Validation

Step 2: Deploy New Apstra Server

If you customized the /etc/aos/aos.conf file on the old Apstra server (for example, if you updated the metadb field to use a different network interface), re-apply those changes to the new Apstra server VM. These changes are not migrated automatically.
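One way to find the customizations that need re-applying is to compare a copy of the old server's file with the file on the new VM. The following is a minimal sketch, not an Apstra tool: show_conf_changes is a hypothetical helper, the /tmp/aos.conf.old path in the usage comment is an assumed location for a copy you made yourself, and the sketch assumes comment lines start with #.

```shell
#!/bin/sh
# Hypothetical helper (not part of Apstra): show effective differences between
# two copies of aos.conf. Blank lines and lines starting with '#' are ignored
# (assumption: '#' marks a comment in this file).
show_conf_changes() {
    old_conf="$1"   # copy of the old server's /etc/aos/aos.conf
    new_conf="$2"   # /etc/aos/aos.conf on the new server
    old_tmp=$(mktemp) || return 1
    new_tmp=$(mktemp) || { rm -f "$old_tmp"; return 1; }
    # grep exits non-zero when nothing is left to print; that is not an error here.
    grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$old_conf" > "$old_tmp" || true
    grep -v -e '^[[:space:]]*#' -e '^[[:space:]]*$' "$new_conf" > "$new_tmp" || true
    # diff prints old-only lines with '<' and new-only lines with '>'.
    status=0
    diff "$old_tmp" "$new_tmp" || status=$?
    rm -f "$old_tmp" "$new_tmp"
    return "$status"
}

# Example (paths are assumptions):
#   show_conf_changes /tmp/aos.conf.old /etc/aos/aos.conf
```

Lines marked with < exist only in the old file and are candidates to re-apply by hand. The helper returns 0 when the effective settings are identical.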

Step 3: Import State

Avoid performing API/GUI write operations on the old Apstra server after starting the new VM import. Changes made won't transfer to the new Apstra server.

Step 4: Import State for Intent-Based Analytics

As of Apstra 5.0.0, we no longer assign tags to probes and stages or support the evpn-host-flap-count telemetry service.

To remove or disable any widgets or probes for intent-based analytics, add the following arguments to the sudo aos_import_state command:

- --iba-remove-unused widgets: Remove unused widgets from the dashboard.

- --iba-remove-probe-and-stage-tags: Remove tags from probes and stages.

- --iba-number-non-unique-probe-labels: Add serial numbers to non-unique probe labels.

- --iba-number-non-unique-dashboard-labels: Add serial numbers to non-unique dashboard labels.

- --iba-disable-probe-with-evpn-host-flap-count-service: Disable non-predefined probes using the evpn-host-flap-count service.

- --iba-strip-dashboard-labels-widget-labels: Remove leading/trailing spaces from dashboard and widget labels.

- --iba-strip-probe-labels-processor-names: Remove leading/trailing spaces from probe labels and processor names.
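As an illustration of how these arguments combine, the sketch below prints (but does not run) an import command with three of the flags listed above appended. build_import_cmd is a hypothetical helper; whatever you pass to it stands in for the aos_import_state arguments you already use, which this section does not list. The choice of flags is only an example.

```shell
#!/bin/sh
# Hypothetical helper: print an aos_import_state command line with a sample
# set of IBA clean-up flags appended. Nothing is executed.
build_import_cmd() {
    printf 'sudo aos_import_state'
    # Pass through the caller's existing arguments unchanged.
    for arg in "$@"; do
        printf ' %s' "$arg"
    done
    # Flags taken from the list above; pick the ones that apply to you.
    printf ' %s' \
        --iba-remove-probe-and-stage-tags \
        --iba-disable-probe-with-evpn-host-flap-count-service \
        --iba-strip-probe-labels-processor-names
    printf '\n'
}

# Example: build_import_cmd <your existing arguments>
```

Printing the command first lets you review it before running the import.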

Step 5: Keep Old VM's IP Address (Optional)

Perform the following steps before changing the Operation Mode and upgrading the device agents.

To keep the old VM's IP address:

Step 6: Modify Apstra IP in Flow Config After Apstra Upgrade (if not reusing original IP)

During a VM-to-VM upgrade, the Apstra IP address changes unless you reuse the old IP address in Step 5.

If the IP address changes, update the Apstra Flow component to use the new IP address as follows:

- SSH to the Apstra Flow CLI (default credentials are apstra/apstra).

- Open /etc/juniper/flowcoll.yml.

- Modify the EF_JUNIPER_APSTRA_API_ADDRESS field with the new IP address.

- Run sudo systemctl restart flowcoll.service.
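If you prefer to script the file edit, the steps above can be sketched as follows. This is a minimal sketch under stated assumptions: set_apstra_api_address is a hypothetical helper, the field is assumed to sit on a single uncommented "KEY: value" line, and 192.0.2.10 is a placeholder address. Check the result against your own file before restarting the service.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the EF_JUNIPER_APSTRA_API_ADDRESS line in a
# flowcoll.yml-style file. Assumes a single, uncommented "KEY: value" line.
# The original file is kept as <file>.bak.
set_apstra_api_address() {
    conf_file="$1"
    new_ip="$2"   # must not contain '|', '&', or backslashes (used in sed below)
    grep -q '^[[:space:]]*EF_JUNIPER_APSTRA_API_ADDRESS:' "$conf_file" || {
        echo "EF_JUNIPER_APSTRA_API_ADDRESS not found in $conf_file" >&2
        return 1
    }
    cp "$conf_file" "$conf_file.bak" || return 1
    # Keep the key and its indentation; replace everything after the colon.
    sed "s|^\([[:space:]]*EF_JUNIPER_APSTRA_API_ADDRESS:\).*|\1 $new_ip|" \
        "$conf_file.bak" > "$conf_file"
}

# Example (run as a user who can write the file; the address is a placeholder):
#   set_apstra_api_address /etc/juniper/flowcoll.yml 192.0.2.10
#   sudo systemctl restart flowcoll.service
```

The helper refuses to touch the file when the field is missing or commented out, so a silent no-op cannot be mistaken for a successful update.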

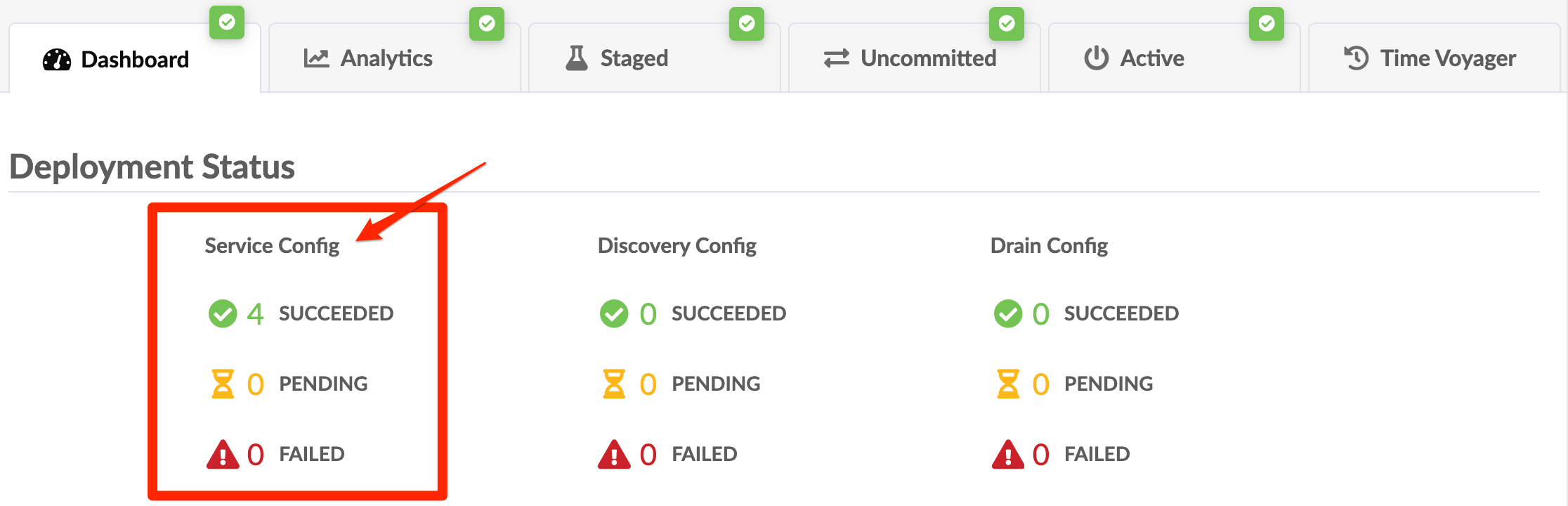

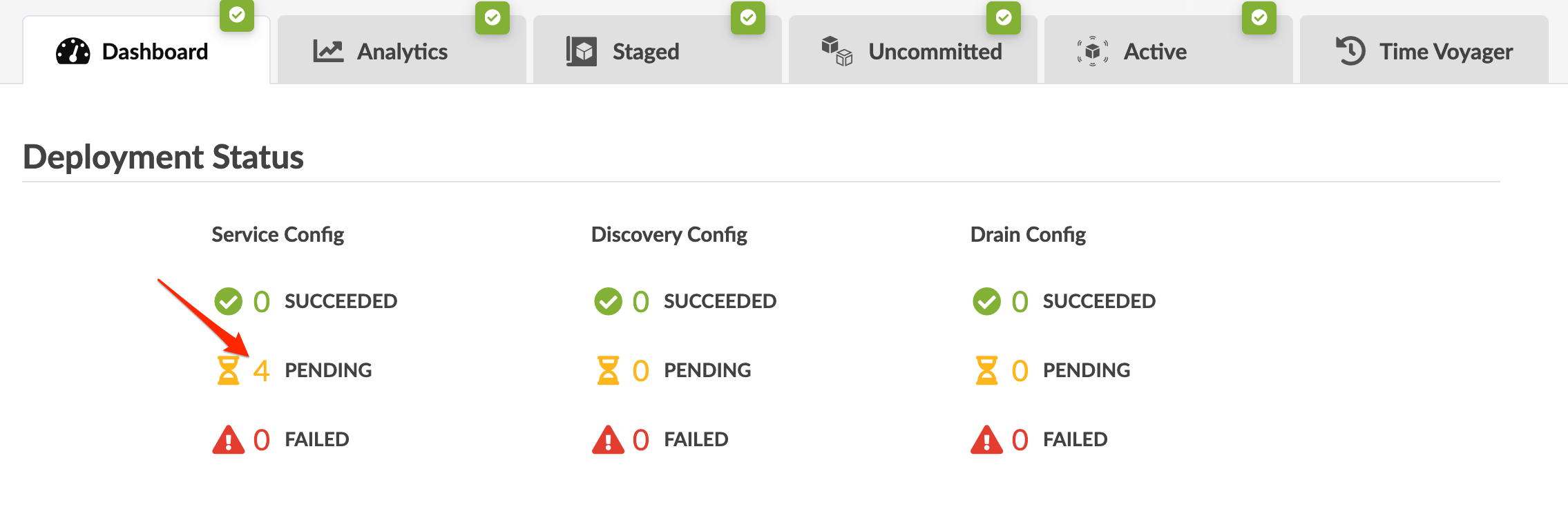

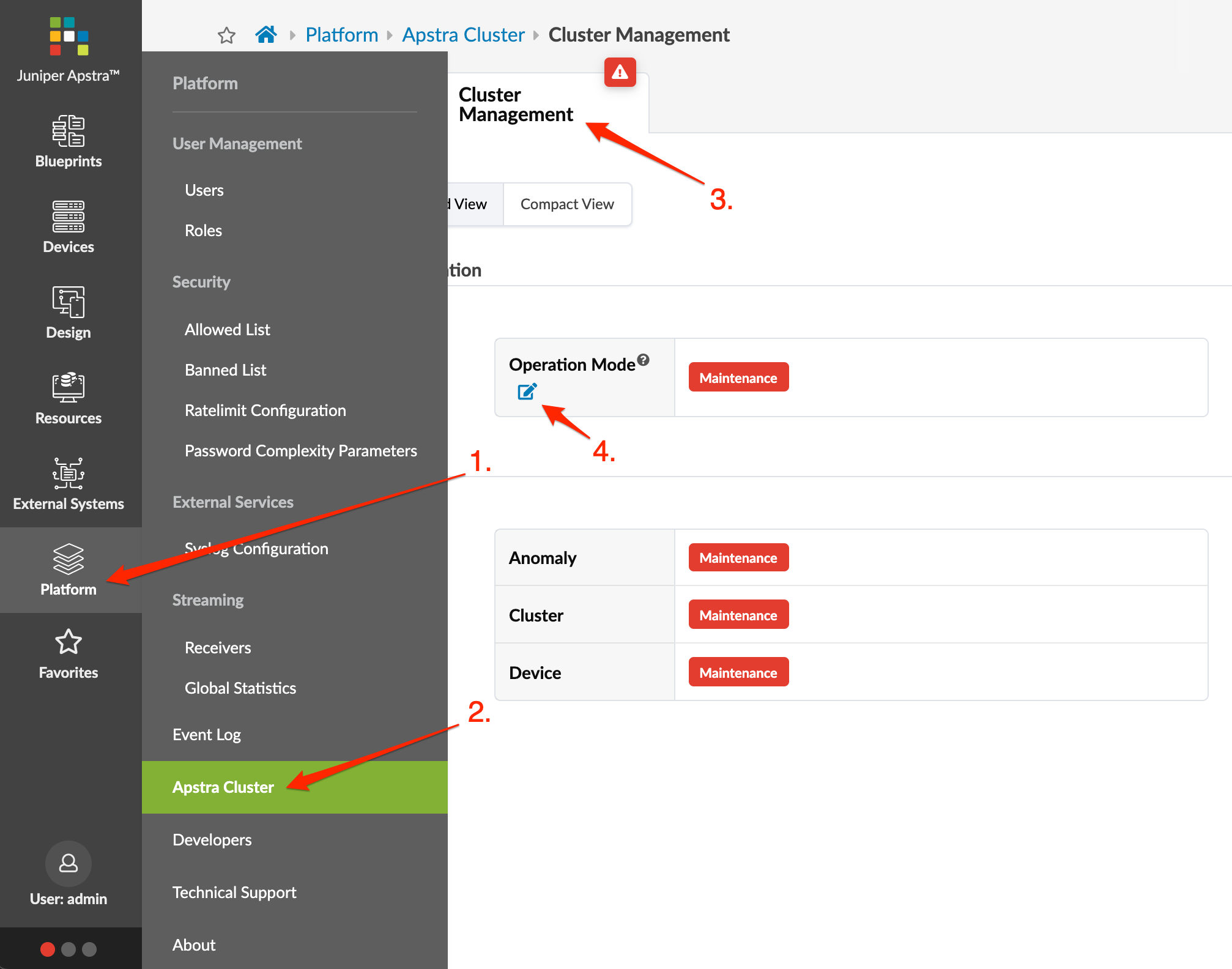

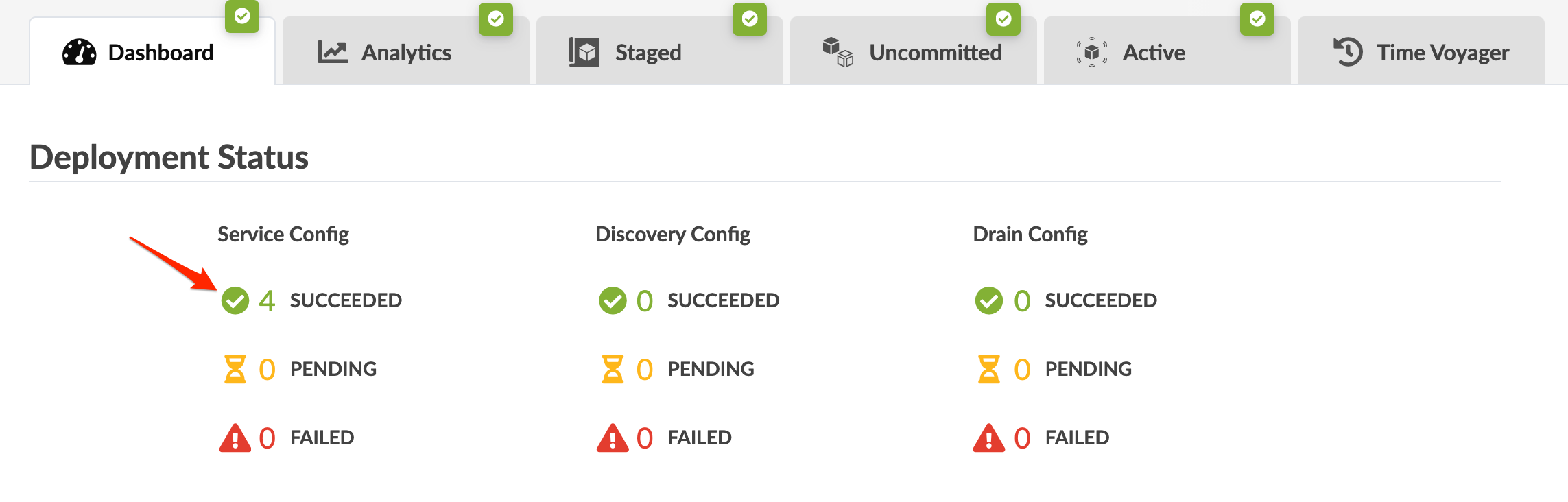

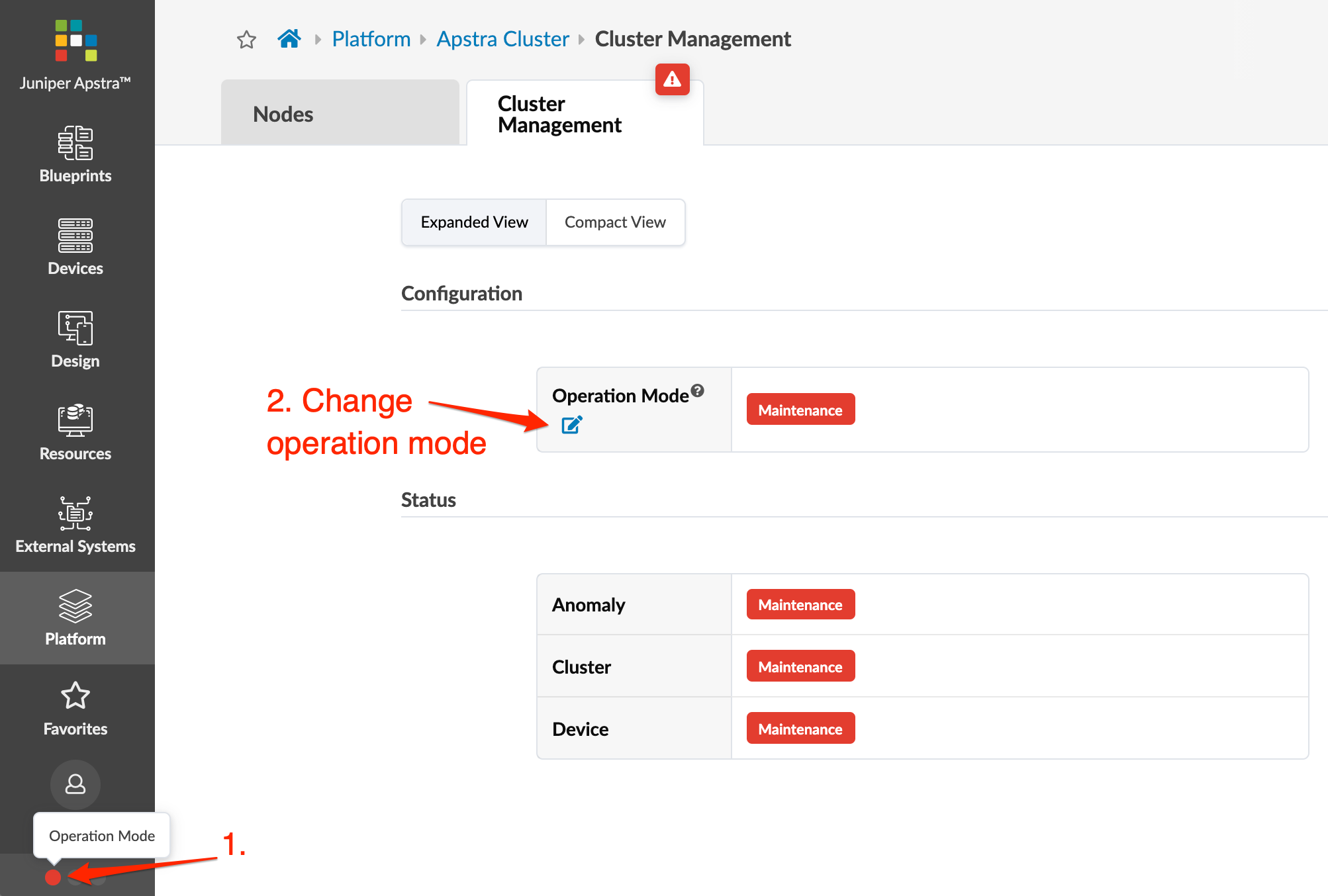

Step 7: Change Operation Mode to Normal

When you start an Apstra server upgrade, the mode switches from Normal to Maintenance automatically. Maintenance mode blocks offbox agents from going online, halts configuration pushes, and stops telemetry pulls. To revert to the previous version, shut down the new server. To complete the upgrade, switch the mode back to Normal.

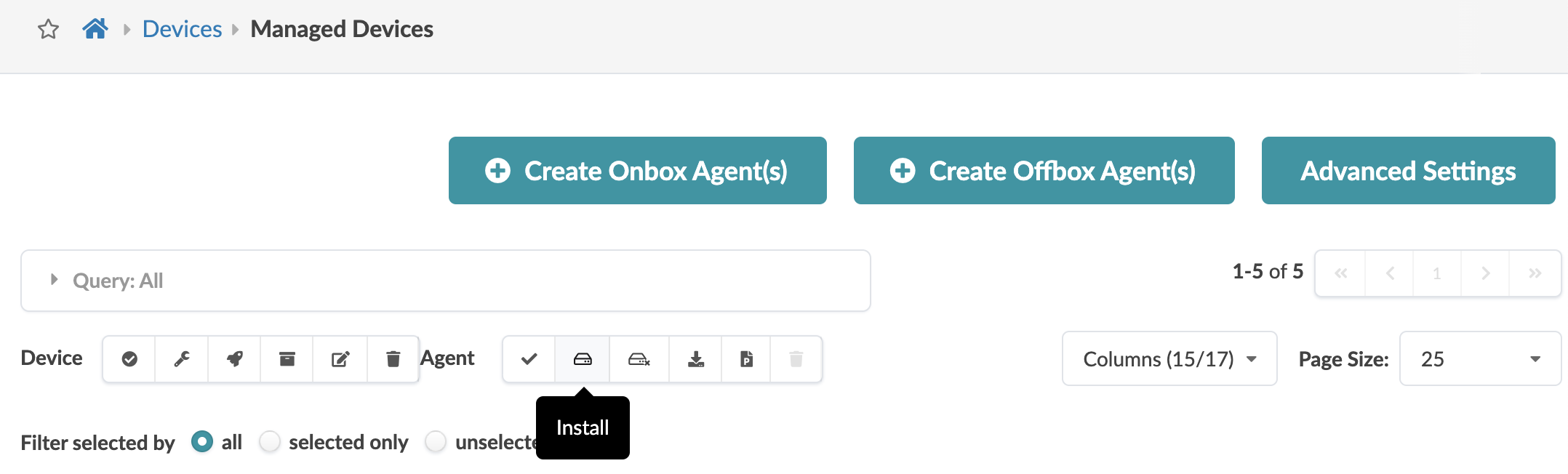

Step 8: Upgrade Onbox Agents

The Apstra server and onbox agents must be running the same Apstra version. If the versions differ, the agents won't connect to the Apstra server.

If you're running a multi-stage blueprint, especially a 5-stage one, upgrade agents in stages:

- First, upgrade superspines.

- Next, upgrade spines.

- Finally, upgrade leafs.

This order minimizes path hunting issues. Routing may temporarily go from leaf to spine, back to another leaf, and then to another spine. Staged upgrades reduce this risk.
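The staged order above can be sketched as a small sort over a device list. This is an illustration only: the "name role" input format and the role labels superspine, spine, and leaf are assumptions made for the example, not something Apstra exports in this form.

```shell
#!/bin/sh
# Map a device role to its upgrade stage; unknown roles sort last.
role_rank() {
    case "$1" in
        superspine) echo 1 ;;
        spine)      echo 2 ;;
        leaf)       echo 3 ;;
        *)          echo 9 ;;
    esac
}

# Read "name role" lines on stdin and print them in upgrade order:
# superspines first, then spines, then leafs. The sort is stable (-s),
# so devices with the same role keep their input order.
upgrade_order() {
    while read -r name role; do
        printf '%s %s %s\n' "$(role_rank "$role")" "$name" "$role"
    done | sort -n -s -k1,1 | cut -d' ' -f2-
}

# Example:
#   printf 'leaf1 leaf\nspine1 spine\nss1 superspine\n' | upgrade_order
```

Upgrade the agents for each role group in the printed order, finishing one group before starting the next.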

Step 9: Shut Down Old Apstra Server

- Update any DNS entries to use the new Apstra server IP/FQDN based on your configuration.

- If you're using a proxy for the Apstra server, ensure it points to the new server.

- Gracefully shut down the old Apstra server. When you confirm the shutdown, the service aos stop command runs automatically.

- If you're upgrading an Apstra cluster and you replaced your worker nodes with new VMs, shut down the old worker VMs.

If your device NOS versions are not compatible with the new Apstra version, upgrade them to a qualified version. See the Juniper Apstra User Guide for details.