Data Center Interconnect (DCI) / Remote EVPN Gateways

DCI / EVPN Gateway Overview

Historically, enterprises have leveraged Data Center Interconnect (DCI) technology as a building block for business continuity, disaster recovery (DR), or Continuity of Operations (COOP). These service availability use cases primarily relied on the need to connect geographically separated data centers with Layer 2 connectivity for application availability and performance.

With the rise of highly virtualized Software-Defined Data Centers (SDDC), cloud computing, and more recently, edge computing, additional use cases have emerged:

- Colocation Expansion: Share compute and storage resources with colocation data center facilities.

- Resource Pooling: Share and shift applications between data centers to increase efficiency or improve end-user experience.

- Rapid Scalability: Expand capacity from a resource-limited location to another facility or data center.

- Legacy Migration: Move applications and data off older and inefficient equipment and architecture to more efficient, higher-performing, and cost-effective architecture.

With Apstra software, you can deploy and manage a vendor-inclusive DCI solution that is simple, flexible, and Intent-Based. Apstra uses standards-based MP-BGP EVPN with VXLAN, which has achieved broad software and hardware adoption in the networking industry. You can choose from a wide selection of cost-effective commodity hardware, from traditional vendors to white-box ODMs. Software options range from conventional vendor-integrated Network Operating Systems (NOS) to disaggregated open source options.

EVPN VXLAN is a standards-based approach for building modern data centers (EVPN is defined in RFC 7432, and RFC 8365 covers its use with VXLAN). It combines data plane encapsulation (VXLAN) with a routing control plane (the MP-BGP EVPN address family) to extend Layer 2 broadcast domains between hosts, as well as Layer 3 routed domains, in spine-leaf networks. EVPN relies on a pure Layer 3 underlay to route VXLAN-tunneled traffic between VXLAN Tunnel Endpoints (VTEPs). It adds a new address family to MP-BGP and supports the exchange of MAC/IP addresses between VTEPs. Advertising endpoint MACs and IPs, together with ARP/ND suppression, eliminates most Broadcast, Unknown unicast, and Multicast (BUM) traffic. VXLAN traffic is instead forwarded as ECMP-routed unicast from the source VTEP to the destination VTEP. This ensures optimal route selection and efficient load-sharing across forwarding paths for overlay network traffic.
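To make ARP suppression concrete, here is a minimal Python sketch of the idea. It is a toy model, not Apstra or any NOS implementation. A VTEP keeps IP-to-MAC bindings learned from EVPN Type 2 (MAC/IP advertisement) routes and answers ARP requests locally when it knows the binding. It falls back to flooding (BUM) only for unknown targets. The `Vtep` class and its method names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Vtep:
    """Toy VTEP illustrating ARP suppression (hypothetical model, not a real NOS API)."""

    name: str
    # IP -> MAC bindings learned from received EVPN Type 2 (MAC/IP) routes
    arp_cache: dict[str, str] = field(default_factory=dict)
    # Targets that had to be flooded as BUM traffic because no binding was known
    flooded: list[str] = field(default_factory=list)

    def learn_type2(self, ip: str, mac: str) -> None:
        """Install the MAC/IP binding carried in a Type 2 route."""
        self.arp_cache[ip] = mac

    def handle_arp_request(self, target_ip: str) -> Optional[str]:
        """Answer locally if the binding is known; otherwise record a flood."""
        mac = self.arp_cache.get(target_ip)
        if mac is None:
            self.flooded.append(target_ip)  # unknown: fall back to BUM flooding
        return mac  # known: suppressed, nothing is flooded across the fabric


if __name__ == "__main__":
    leaf = Vtep("leaf1")
    leaf.learn_type2("10.1.1.10", "00:00:5e:00:53:01")
    print(leaf.handle_arp_request("10.1.1.10"))  # 00:00:5e:00:53:01 (suppressed)
    print(leaf.handle_arp_request("10.1.1.99"))  # None (flooded)
    print(leaf.flooded)                          # ['10.1.1.99']
```

The MAC address above comes from the range reserved for documentation. Substitute real bindings when experimenting.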

Just as EVPN VXLAN extends Layer 2 between hosts within a single site, the Apstra DCI feature extends Layer 2 or Layer 3 services between sites. Uses include disaster recovery, load balancing across active-active sites, and migrating services from one data center to another.

Limitations:

- EVPN-GW (DCI) between different vendors' EVPN fabrics is not supported.

- Apstra supports only IPv4 addresses for remote EVPN gateways on DCI. However, the EVPN routes (Type 2 and Type 5) exchanged between the remote gateways can contain IPv6 reachability information.

DCI Deployment Options

The following characteristics apply to all deployment options:

- You can extend Apstra DCI to other Apstra-managed data centers, non-Apstra managed data centers, or even to legacy non-spine-leaf devices.

- Apstra implementation and behavior are the same in all three cases.

- Whether the remote end is another DCI GW or an ASBR is transparent to Apstra.

- Apstra manages neither the GWs nor ASBRs.

You can implement Data Center Interconnect using the following methods. For assistance with selecting the best option for your organization, consult your Apstra Solutions Architect (SA) or Systems Engineer (SE).

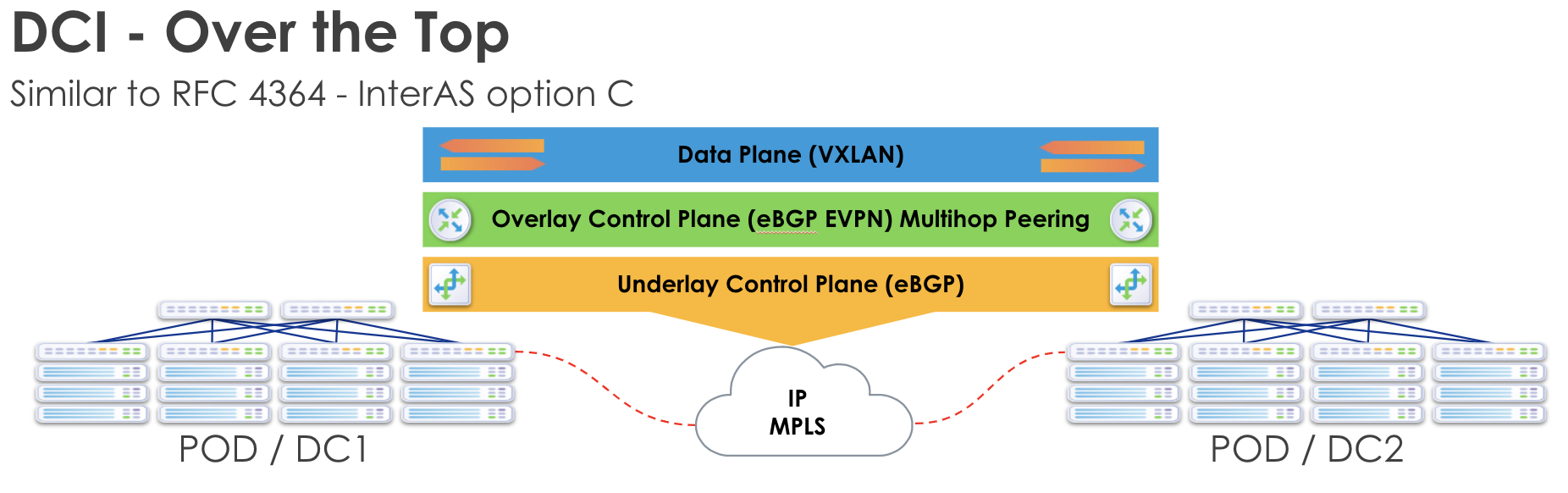

Over the Top

DCI "Over the Top" is a transparent solution, meaning EVPN routes are encapsulated into standard IP and hidden from the underlying transport. This makes extending services simple and flexible. It is often chosen because data center teams can implement it with little to no coordination with WAN or Service Provider groups, which reduces implementation time and internal company friction. The tradeoff is reduced scalability and resilience.

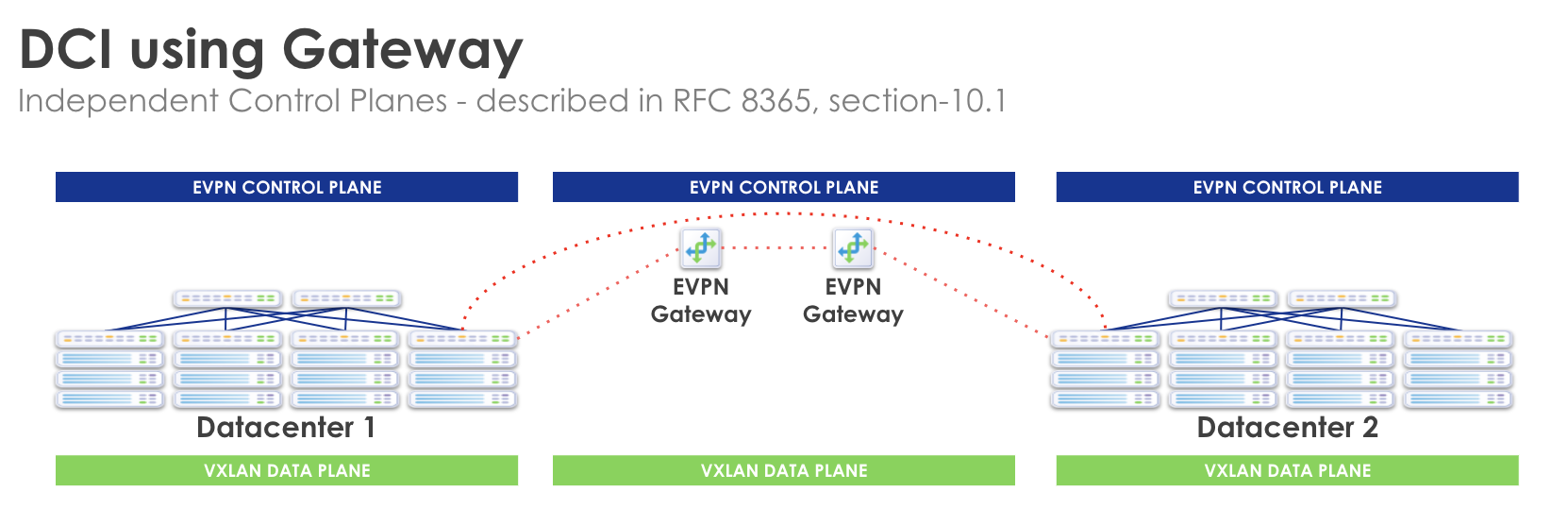

Gateway (GW)

Building on the Apstra Remote EVPN Gateway capability, you can optionally specify that the Remote EVPN Gateway is an external generic system (tagged as an external router) in the same site. This extends the EVPN attributes to that gateway. The solution creates a separate fault domain per site, which prevents failures in one site from affecting convergence in remote sites. IP/MAC endpoint tables for remote sites are processed and held in state on the gateway (a generic system tagged as an external router). You can also implement WAN QoS and security, along with optimizations that the transport technology makes available (for example, MPLS TE). However, this solution is more operationally complex and requires additional hardware and cost.

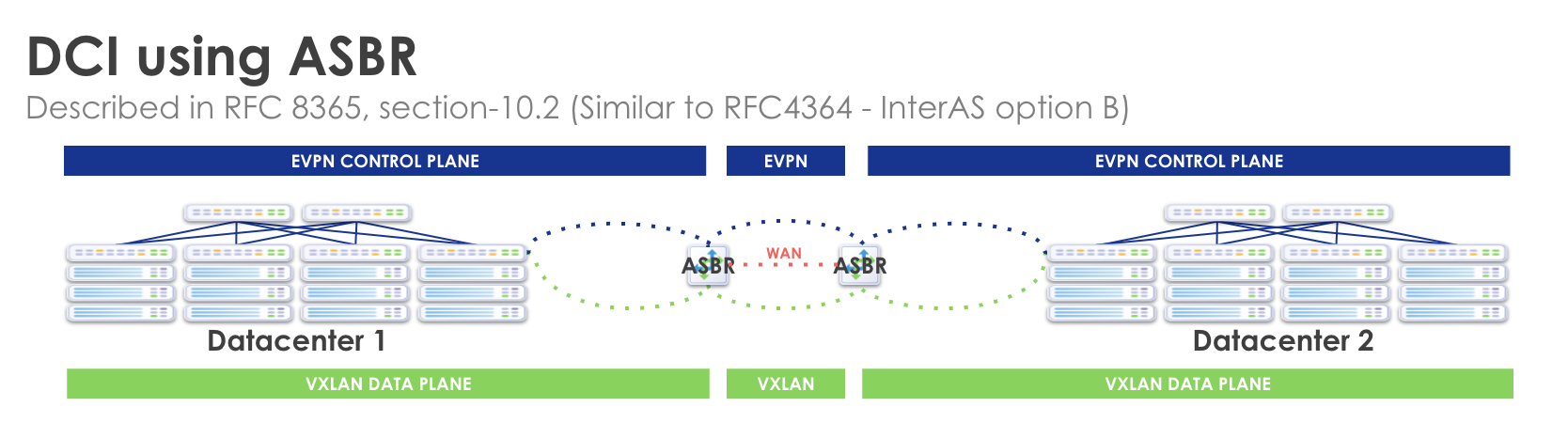

Autonomous System Border Router (ASBR)

Using the Apstra Remote EVPN Gateway capability, you can optionally specify that the Remote EVPN Gateway is an ASBR WAN edge device. This end-to-end EVPN design enables uniform encapsulation and removes the requirement for a dedicated GW. It is operationally complex but scales better than both "DCI Using Gateway" and "Over the Top".

Implementation

You can extend routing zones and virtual networks (VN) to span Apstra-managed blueprints (across pods) or remote networks (across data centers) that Apstra doesn't manage. This feature introduces the EVPN Gateway (GW) role. The role can be filled by a switch that participates in the fabric or by route server(s) on a generic system (tagged as a server) that is connected to the fabric.

EVPN Gateways Use Cases

- Span Layer 3 isolation domains (VRFs / routing zones) to multiple Apstra-managed pods (blueprints) or extend to remote EVPN domains.

- Provide Layer 2 domain extensions for L2VNI / virtual networks.

- Extend the EVPN domain between Apstra-managed pods, and from Apstra-managed to unmanaged pods.

- No VXLAN traffic termination on the spine devices: connect external generic systems (tagged as external routers) to spine devices to provide IPv4 (underlay) external connectivity. Unlike border leaf devices connected to external generic systems, the spine devices don't need to terminate VXLAN traffic. In short, this lets you exchange IPv4 routes to remote VTEPs (in the default routing zone/VRF) where only Layer 3 connectivity is required.

Over the Top

When BGP EVPN peering is done "over the top", the Data Center Gateway (DC-GW) is a pure IP transport function and BGP EVPN peering is established between gateways in different data centers.

The next sections describe the procedures for interconnecting two or more BGP-based Ethernet VPN (EVPN) sites in a scalable fashion over an IP network. The motivation is to support extension of EVPN sites without relying on typical Data Center Interconnect (DCI) technologies like MPLS/VPLS, which are often difficult to configure, sometimes proprietary, and likely legacy in nature.

"Over the Top" is a simple solution. It requires only IP routing between data centers and an MTU adjusted to accommodate VXLAN encapsulation between gateway endpoints. In this implementation, EVPN routes are extended end-to-end via MP-BGP between sites. Multi-hop BGP is enabled on the assumption that there will be multiple Layer 3 hops between sites across a WAN. Otherwise, the default eBGP TTL of 1 decrements to 0 at the first intermediate hop, and packets are discarded before they reach the remote router. Apstra automatically renders the configuration needed to address these limitations.
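The two adjustments above can be checked with simple arithmetic. The following Python sketch assumes an IPv4 underlay and the VXLAN header layout from RFC 7348. The function names are hypothetical, and Apstra renders the actual device configuration itself. The sketch computes two values:

- the smallest underlay IP MTU that carries a full-size inner frame without fragmentation
- the minimum TTL a multi-hop BGP session needs to cross a given number of intermediate routers

```python
# Byte counts for VXLAN over an IPv4 underlay (RFC 7348 header layout).
INNER_ETHERNET = 14  # encapsulated inner Ethernet header, untagged
INNER_DOT1Q = 4      # extra bytes if the inner frame keeps an 802.1Q tag
VXLAN_HEADER = 8
OUTER_UDP = 8
OUTER_IPV4 = 20


def required_underlay_ip_mtu(inner_ip_mtu: int = 1500, inner_tagged: bool = False) -> int:
    """Smallest underlay IP MTU that carries a full inner frame without fragmentation."""
    inner_frame = inner_ip_mtu + INNER_ETHERNET + (INNER_DOT1Q if inner_tagged else 0)
    return inner_frame + VXLAN_HEADER + OUTER_UDP + OUTER_IPV4


def min_multihop_ttl(intermediate_routers: int) -> int:
    """Each intermediate router decrements TTL by 1; the packet must arrive with TTL >= 1."""
    return intermediate_routers + 1


def bgp_packet_arrives(ttl: int, intermediate_routers: int) -> bool:
    """True if a BGP packet sent with this TTL survives the intermediate routers."""
    return ttl - intermediate_routers >= 1


if __name__ == "__main__":
    print(required_underlay_ip_mtu())                   # 1550
    print(required_underlay_ip_mtu(inner_tagged=True))  # 1554
    print(bgp_packet_arrives(1, 3))                     # False: default eBGP TTL of 1
    print(min_multihop_ttl(3))                          # 4
```

Many designs set the WAN MTU above this minimum for headroom. Check how your platform defines MTU, because some platforms count Layer 2 headers and others do not.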

This design merges the separate EVPN-VXLAN domains and VXLAN tunnels between sites. Merging previously separate EVPN domains in different sites extends Layer 2 and Layer 3 (VRF) services between sites, but it also makes the sites a single fault domain. A failure in one site therefore propagates to the others. Also, any time you stretch Layer 2 across the WAN between sites, you extend the flood domain, and with it all broadcast traffic, over your costly WAN links. At this time, this solution does not offer any filtering or QoS.

When separate Apstra blueprints manage individual sites (or when only one site is Apstra-managed), you must create and manage extended routing zones (VRFs) and virtual networks (Layer 2 and/or Layer 3 defined VLANs/subnets) independently in each site. You must also manually map VRFs and VNs between sites, which creates administrative overhead.

If you’re setting up P2P connections between two data centers (blueprints) in the same Apstra controller, each blueprint must pull resources from different IP pools to avoid build errors. To do this, create two IP pools with the same IP subnet, but with different names.

This "Over the Top" solution is the easiest to deploy, requires no additional hardware, and introduces no additional WAN configuration other than increasing the MTU. It is the most flexible and has the lowest barrier to entry. However, the downside is that there is a single EVPN control plane, so a routing anomaly in one site affects convergence and reachability in the other site(s). Extending Layer 2 flood domains also means that a broadcast storm in one site extends to the other site(s).

With any DCI implementation, careful resource planning and coordination are required, and the effort grows quickly as you add sites. VTEP loopbacks in the underlay must be leaked, VNIDs must match between sites, and in some cases additional Route Targets (RTs) must be imported. This is covered in detail later in this document.

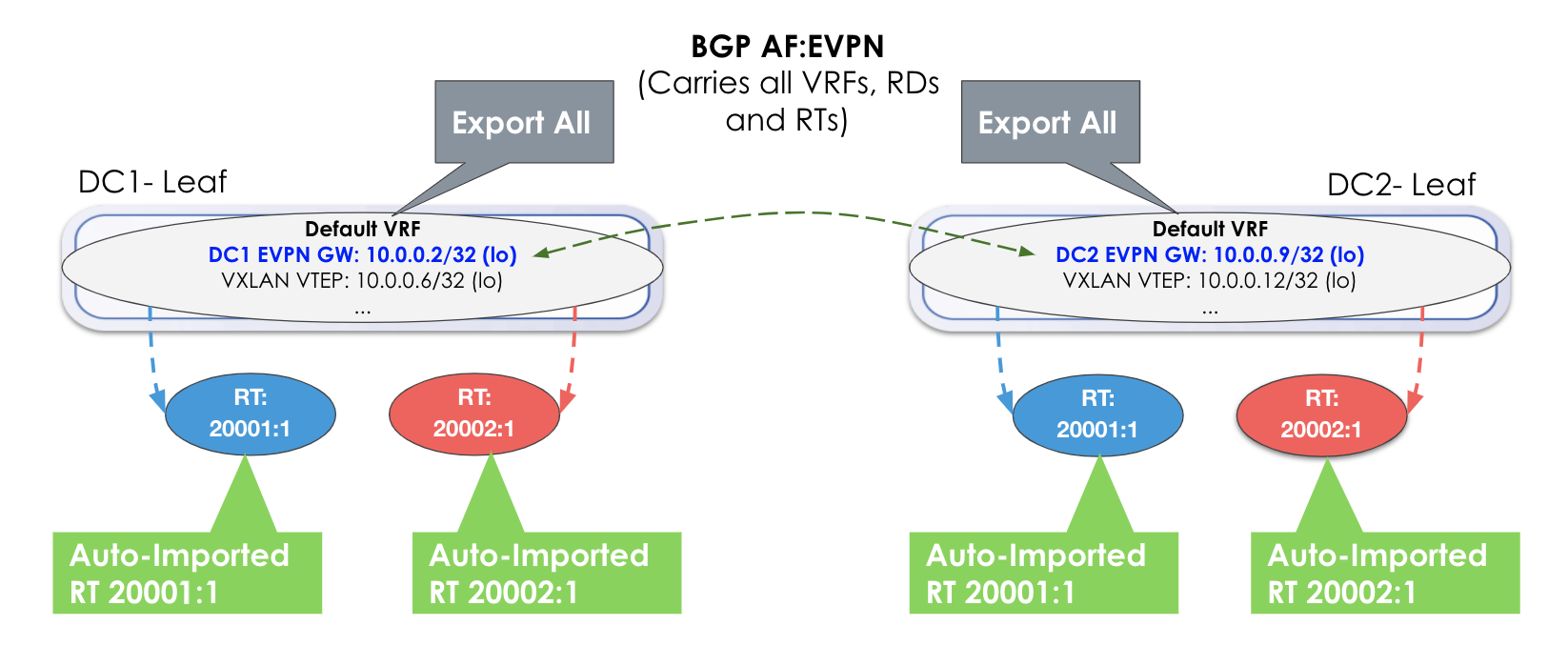

Data Plane Extension: Layer 3

VXLAN Network IDs (VNIDs) are part of the VXLAN header and identify unique VXLAN tunnels, each of which is isolated from the other VXLAN tunnels in an IP network. A VXLAN packet can carry either an encapsulated Layer 3 packet or an encapsulated Layer 2 MAC frame. In both cases, a unique VNID is associated with either the Layer 3 subnet or the Layer 2 domain. When extending either Layer 3 or Layer 2 services between sites, you are essentially stitching VXLAN tunnels between sites. VNIDs therefore need to match between sites.
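To show where the VNID lives, here is a minimal Python sketch that packs and parses the 8-byte VXLAN header as laid out in RFC 7348. The header consists of a flags byte with the I bit set, 24 reserved bits, the 24-bit VNI, and 8 more reserved bits. The function names are illustrative only. The receiving VTEP uses this 24-bit value to choose the local segment. A VNID that differs between sites therefore lands the traffic in the wrong segment, or in none.

```python
import struct

VXLAN_I_FLAG = 0x08  # "I" flag: the VNI field is valid (RFC 7348)
MAX_VNI = 2**24 - 1  # the VNI is a 24-bit field


def pack_vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a given VNI."""
    if not 0 <= vni <= MAX_VNI:
        raise ValueError(f"VNI {vni} does not fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits); word 2: VNI (24 bits) + reserved (8 bits)
    return struct.pack("!II", VXLAN_I_FLAG << 24, vni << 8)


def unpack_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking that the I flag is set."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & VXLAN_I_FLAG:
        raise ValueError("I flag not set; VNI field is not valid")
    return vni_word >> 8


if __name__ == "__main__":
    hdr = pack_vxlan_header(10010)
    print(hdr.hex())        # 0800000000271a00
    print(unpack_vni(hdr))  # 10010
```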

It is important to understand that a particular VNID is associated with only one VRF (a routing zone in Apstra terminology). VNIDs exist within, and are tied to, a VRF. For Layer 3 services, each VNID is stitched, or extended, by exporting and importing RTs within a routing zone (VRF). Layer 3 subnets (routes) are identified by RTs. All VNIDs are exported automatically at the EVPN gateway (edge) toward the WAN. Conversely, RTs of the same value are automatically imported at the EVPN gateway (edge) into the fabric. So if you coordinate the Layer 3 VNIDs at one site to match the other, no additional configuration is needed.

In the image above, no additional export or import is required. Everything is automatically exported (Export All) and because the RTs match, they are automatically imported.

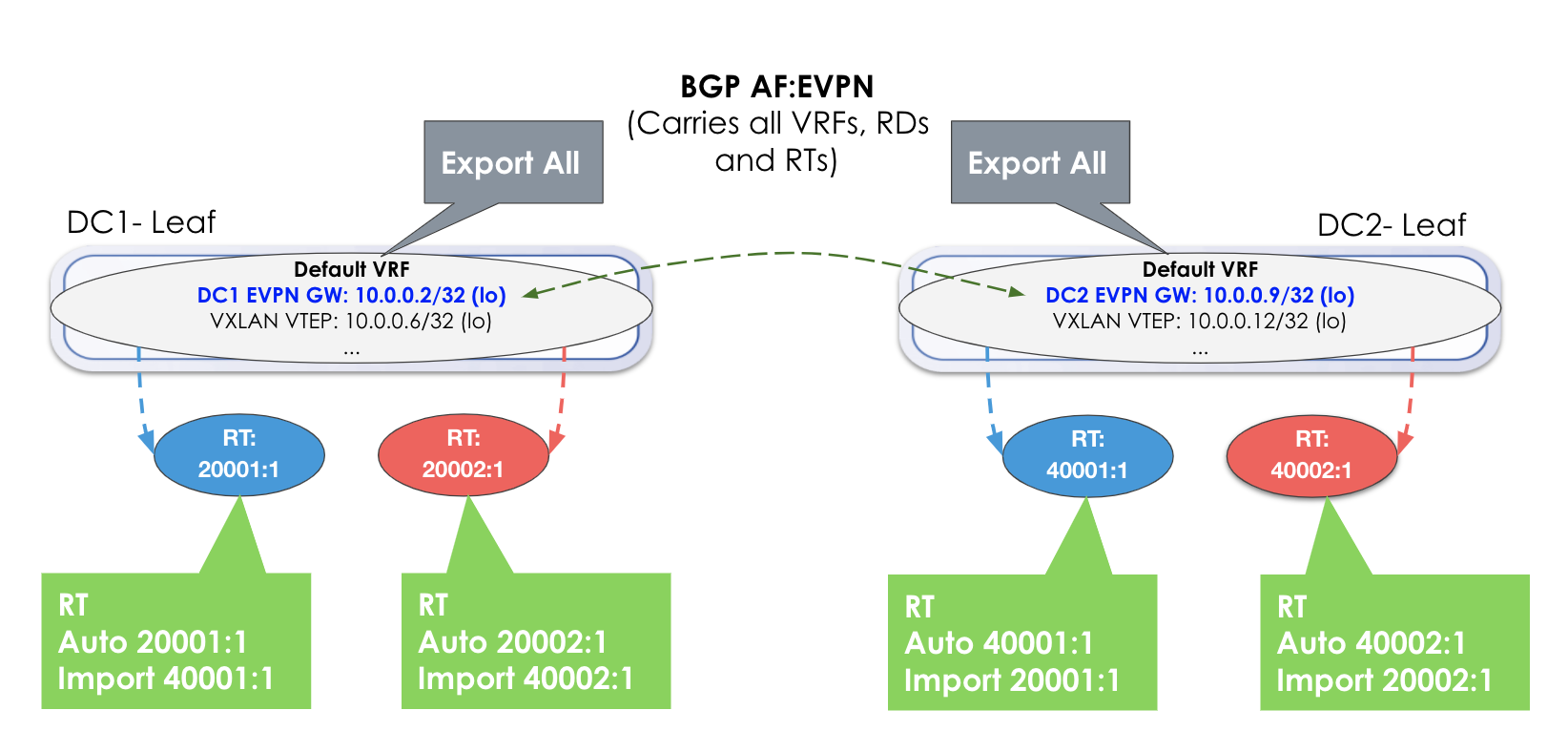

However, if a VNID in DC1 differs from the corresponding VNID in DC2, each site must import the other site's RTs. Each gateway still automatically imports RTs of the same value. In the example below, the additional step of manually adding the RTs from the other site is required.
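The import/export behavior described above can be modeled in a few lines. This Python sketch is a toy model, not Apstra's implementation. It makes the following assumptions:

- route targets use the default format `<L3 VNI>:1` described under Enhanced Routing Zone
- the gateway applies an "export all" policy
- the matching RT is imported automatically
- a hypothetical `extra_import_rts` set stands in for manually added import RTs

```python
from __future__ import annotations

from dataclasses import dataclass, field


def default_rt(vni: int) -> str:
    """Default route target format for a routing zone: <L3 VNI>:1."""
    return f"{vni}:1"


@dataclass
class EvpnGateway:
    """One site's EVPN gateway for a single routing zone (toy model)."""

    site: str
    l3_vni: int
    extra_import_rts: set[str] = field(default_factory=set)  # manually added RTs

    def exported_rts(self) -> set[str]:
        # Export all: routes leave the site tagged with the auto-generated RT.
        return {default_rt(self.l3_vni)}

    def imported_rts(self) -> set[str]:
        # The RT of the same value is imported automatically; others must be added.
        return {default_rt(self.l3_vni)} | self.extra_import_rts

    def installs_routes_from(self, peer: EvpnGateway) -> bool:
        return bool(peer.exported_rts() & self.imported_rts())


if __name__ == "__main__":
    dc1, dc2 = EvpnGateway("DC1", 10001), EvpnGateway("DC2", 10001)
    print(dc1.installs_routes_from(dc2))  # True: VNIDs match, no extra config

    dc2 = EvpnGateway("DC2", 20001)
    print(dc1.installs_routes_from(dc2))  # False: VNIDs differ
    dc1.extra_import_rts.add(default_rt(20001))
    dc2.extra_import_rts.add(default_rt(10001))
    print(dc1.installs_routes_from(dc2), dc2.installs_routes_from(dc1))  # True True
```

The last case mirrors the manual step: each side adds the other site's RT so that routes flow in both directions.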

Data Plane Extension: Layer 2

A virtual network can be a pure Layer 2 service (no Layer 3 anycast gateway is instantiated). It can be rack-local (a VLAN on server-facing ports contained within a rack) or VXLAN (you select the racks across which to extend the Layer 2 flood and broadcast domain). This Layer 2 domain has its own VNID, and MAC frames (as opposed to IP packets) are encapsulated into the VXLAN header with the VNID of the Layer 2 domain.

The same principles apply: all VNIDs are exported at the EVPN gateway (in this case as Type 2 routes/MAC addresses), and matching RTs are automatically imported. However, RTs are imported and exported at the virtual network level rather than at the routing zone level.

Apstra Workflow

- Control Plane Extension: EVPN Gateway

- Underlay VTEP Route Advertisements

- Create Remote EVPN Gateways

- Enhanced Routing Zone

- Enhanced Virtual Networks

- Remote Gateway Topology Representation

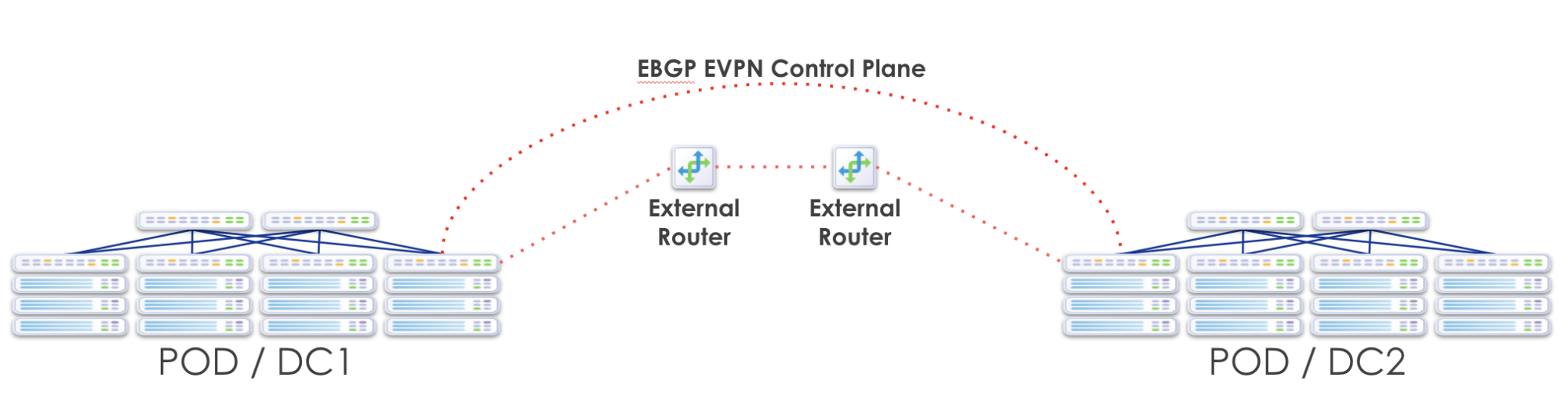

Control Plane Extension: EVPN Gateway

Apstra uses the concept of an "EVPN Gateway". This device can theoretically be a leaf, spine, or superspine fabric node, or the DCI device. EVPN Gateways separate the fabric side from the network that interconnects the sites and mask the site-internal VTEPs.

In Apstra, an EVPN Gateway is a device that belongs to and resides at the edge of an EVPN fabric and is also attached to an external IP network. In an Apstra EVPN blueprint, this is always a border-leaf device. The EVPN Gateway of one data center establishes BGP EVPN peering with one or more reciprocal EVPN gateways in another data center. In Apstra terminology, the "other" EVPN gateway is the "Remote EVPN Gateway". The Local EVPN Gateway is assumed to be one of the Apstra-managed devices in the blueprint, and you select it when creating the "Remote EVPN Gateway". The Local EVPN Gateway is the border-leaf switch with one or more external routing connections for traffic in and out of the EVPN Clos fabric.

Because of this capability, you can configure a Local EVPN Gateway (always an Apstra-managed switch) to peer with a non-Apstra-managed device, or even a non-spine-leaf device, in another DC. The EVPN Gateway BGP peering carries all EVPN attributes from inside a pod to outside the pod. In the Apstra environment, each blueprint represents a data center. If two or more sites are under Apstra management, you must still configure each site to point to the "Remote EVPN Gateway(s)" in the other sites. We recommend that you create multiple, redundant EVPN Gateways for each data center. There is also currently a full-mesh requirement between EVPN gateways, although in future releases this requirement will be removed.
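To gauge what the full-mesh requirement means in practice, the Python sketch below enumerates the inter-site peerings. It assumes that every EVPN gateway peers with every gateway in every other site, and it ignores same-site pairs. Site and gateway names are hypothetical. With g gateways in each of s sites, this works out to g * g * s * (s - 1) / 2 sessions, so the count grows with the square of the number of sites.

```python
from __future__ import annotations

from itertools import combinations


def inter_site_peerings(gateways_by_site: dict[str, list[str]]) -> list[tuple[str, str]]:
    """Every BGP EVPN peering a full mesh needs between gateways in different sites."""
    nodes = [(site, gw) for site, gws in gateways_by_site.items() for gw in gws]
    return [
        (gw_a, gw_b)
        for (site_a, gw_a), (site_b, gw_b) in combinations(nodes, 2)
        if site_a != site_b  # same-site pairs are not remote EVPN gateway peerings
    ]


if __name__ == "__main__":
    sites = {
        "dc1": ["dc1-bl1", "dc1-bl2"],
        "dc2": ["dc2-bl1", "dc2-bl2"],
        "dc3": ["dc3-bl1", "dc3-bl2"],
    }
    print(len(inter_site_peerings(sites)))  # 12
```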

Underlay VTEP Route Advertisements

The underlay reachability to VTEP IP addresses, or an equivalent summary route, must be established reciprocally. Each site must advertise these VTEP loopbacks from within the default routing zone into the exported BGP (IPv4) underlay advertisements. Loopbacks in the routing policy are enabled by default.
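Before bringing up EVPN peering, it can help to confirm that some received underlay route covers every remote VTEP loopback. The Python sketch below uses only the standard `ipaddress` module and provides two checks:

- It computes the smallest single prefix that covers a site's VTEP loopbacks. This is a candidate summary and may also cover unused space.
- It lists remote VTEPs that no received prefix covers.

The addresses and function names are hypothetical examples.

```python
from __future__ import annotations

import ipaddress


def covering_prefix(loopbacks: list[str]) -> ipaddress.IPv4Network:
    """Smallest single IPv4 prefix that contains every VTEP loopback."""
    if not loopbacks:
        raise ValueError("need at least one loopback")
    addrs = [ipaddress.IPv4Address(a) for a in loopbacks]
    # Widen from /32 until one prefix holds them all; /0 always succeeds.
    for plen in range(32, -1, -1):
        net = ipaddress.IPv4Network(f"{addrs[0]}/{plen}", strict=False)
        if all(a in net for a in addrs):
            return net
    raise AssertionError("unreachable: 0.0.0.0/0 contains every address")


def uncovered_vteps(remote_vteps: list[str], received: list[str]) -> list[str]:
    """Remote VTEP addresses that no received underlay prefix covers."""
    nets = [ipaddress.IPv4Network(p) for p in received]
    return [v for v in remote_vteps if not any(ipaddress.IPv4Address(v) in n for n in nets)]


if __name__ == "__main__":
    print(covering_prefix(["10.0.0.1", "10.0.0.2", "10.0.0.6"]))       # 10.0.0.0/29
    print(uncovered_vteps(["10.0.0.1", "10.1.0.1"], ["10.0.0.0/24"]))  # ['10.1.0.1']
```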

Create Remote EVPN Gateways

By default, the ESI MAC msb (most significant byte) is set to 2 on all blueprints. Every connected Apstra blueprint must have a unique msb to prevent service-impacting issues. Before creating gateways, change the ESI MAC msb accordingly. (You can leave one blueprint at the default value.)
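A quick pre-check for the uniqueness rule above is easy to script. This Python sketch takes a hypothetical mapping of blueprint names to ESI MAC msb values and reports any value shared by more than one connected blueprint. You can gather the values however you like; the sketch does not call any Apstra API. It also does not validate which msb values Apstra accepts.

```python
from __future__ import annotations

from collections import defaultdict

DEFAULT_ESI_MAC_MSB = 2  # default value on every blueprint


def shared_msb_values(msb_by_blueprint: dict[str, int]) -> dict[int, list[str]]:
    """Map each msb value used by more than one blueprint to those blueprints."""
    users: dict[int, list[str]] = defaultdict(list)
    for blueprint, msb in msb_by_blueprint.items():
        users[msb].append(blueprint)
    return {msb: sorted(bps) for msb, bps in users.items() if len(bps) > 1}


if __name__ == "__main__":
    # dc2 still has the default, which clashes with dc1
    print(shared_msb_values({"dc1": DEFAULT_ESI_MAC_MSB, "dc2": 2, "dc3": 4}))  # {2: ['dc1', 'dc2']}
```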

A Remote EVPN Gateway is a logical function that you can instantiate anywhere, on any device. It requires BGP support in general and the L2VPN/EVPN AFI/SAFI specifically. To establish a BGP session with an EVPN gateway, IP connectivity and reachability to TCP port 179 (the IANA-assigned BGP port) must be available.
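A basic way to confirm the TCP part of that requirement is to try opening a connection to port 179 from a host on the same path. The Python sketch below uses the standard `socket.create_connection` call; the function name is hypothetical.

A successful connection shows only that the port is reachable. It does not prove the peer will accept the session, because BGP speakers commonly refuse connections from unconfigured neighbors. A failure can also come from a firewall or ACL in the path.

```python
import socket

BGP_PORT = 179  # IANA-assigned TCP port for BGP


def tcp_port_reachable(host: str, port: int = BGP_PORT, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False
```

For example, call `tcp_port_reachable("192.0.2.1")`, replacing the documentation placeholder address with the remote gateway's address.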

For resilience, we recommend having at least two remote gateways for the same remote EVPN domain.

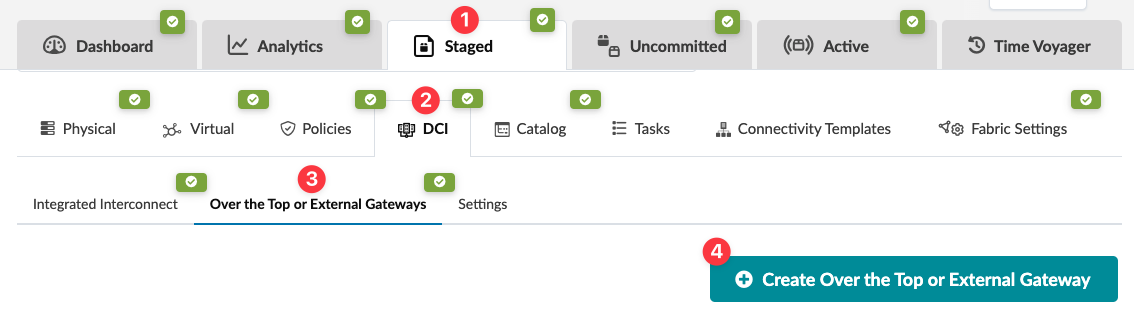

- From the blueprint, navigate to Staged > DCI > Over the Top or External Gateways and click Create Over the Top or External Gateway.

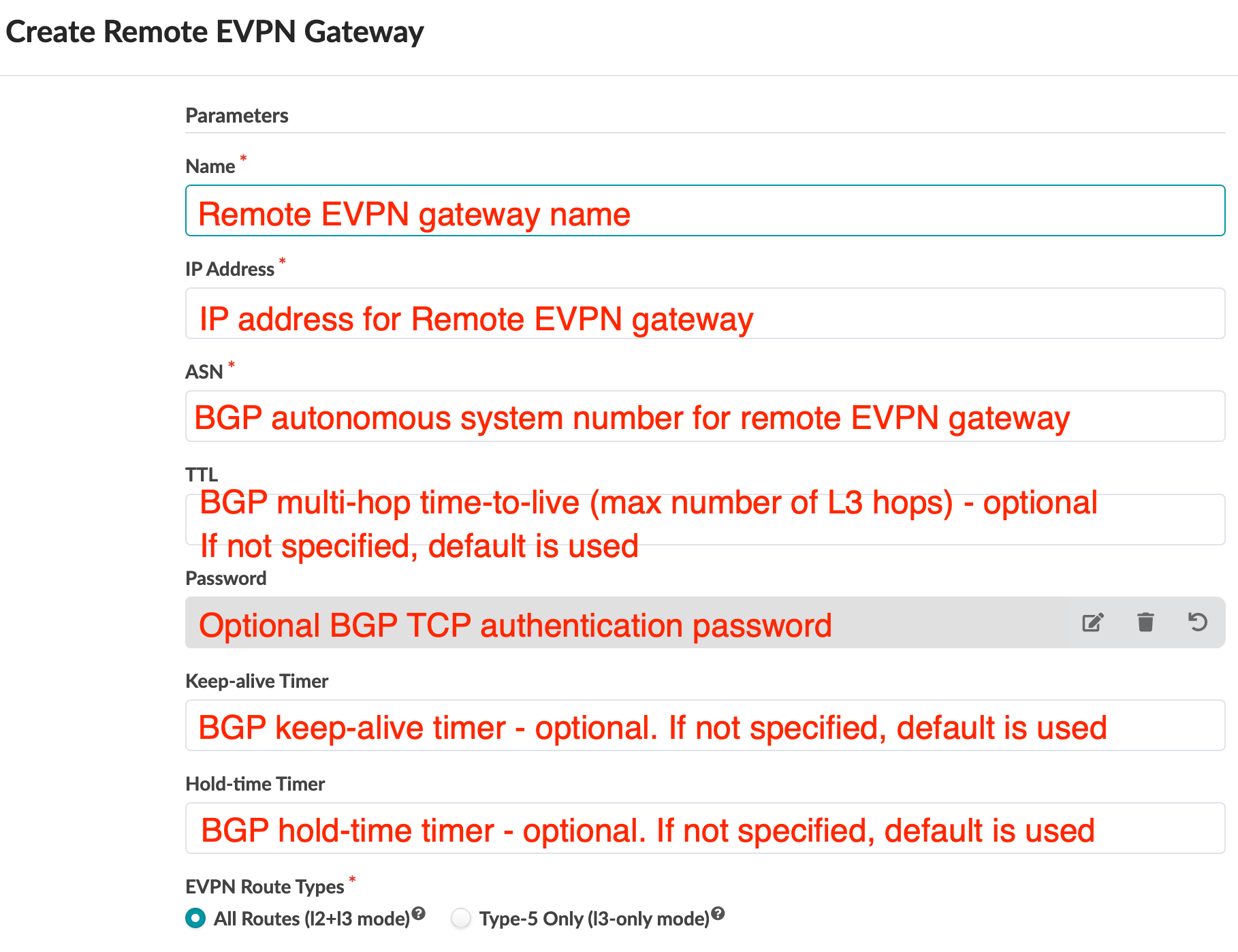

- In the dialog that opens, fill in the following information for the remote EVPN gateway.

When extending L2 networks between data center fabrics, you have the option to exchange only EVPN Type 5 routes (the interface-less model). This is useful when there is no need to exchange all host routes between data center locations. It reduces the size of the routing information base (RIB, the routing table) and the forwarding information base (FIB, the forwarding table) on DCI equipment.

- Select the Local Gateway Nodes. These are the devices in the blueprint that will be configured with a Local EVPN Gateway. You can select one or more devices to peer with the configured remote EVPN gateway, and you can use the query function to help locate the appropriate nodes. We recommend using multiple border-leaf devices that have direct connections to external generic systems (tagged as external routers).

- Click Create to stage the gateway and return to the table view.

- When you are ready to deploy the devices in the blueprint, commit your changes.
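The RIB/FIB saving from exchanging only Type 5 routes, noted earlier in these steps, can be illustrated with a toy count. This Python sketch is a simplified model with hypothetical names and addresses. Each host in a subnet contributes one Type 2 MAC/IP route, while the subnet as a whole is one Type 5 IP prefix route. Real Type 2 advertisements can also be MAC-only, which this model ignores.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class EvpnRoute:
    route_type: int  # 2 = MAC/IP advertisement, 5 = IP prefix
    prefix: str      # host /32 for Type 2, subnet for Type 5


def routes_for_subnet(subnet: str, hosts: int) -> list[EvpnRoute]:
    """One Type 5 route for the subnet plus one Type 2 route per host (toy model)."""
    net = ipaddress.IPv4Network(subnet)
    host_ips = list(net.hosts())[:hosts]
    return [EvpnRoute(5, str(net))] + [EvpnRoute(2, f"{ip}/32") for ip in host_ips]


def advertised(routes: list[EvpnRoute], type5_only: bool) -> list[EvpnRoute]:
    """Routes sent across the DCI, optionally restricted to Type 5 prefixes."""
    return [r for r in routes if not type5_only or r.route_type == 5]


if __name__ == "__main__":
    routes = routes_for_subnet("10.1.1.0/24", hosts=200)
    print(len(advertised(routes, type5_only=False)))  # 201
    print(len(advertised(routes, type5_only=True)))   # 1
```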

We recommend using multiple remote EVPN gateways. To configure additional remote EVPN gateways, repeat the steps above.

If you are configuring the Remote EVPN Gateway(s) to another Apstra blueprint, you must configure and deploy the remote EVPN gateway(s) separately in that blueprint.

Once the change is deployed, Apstra monitors the BGP session for the remote EVPN gateways. To see any anomalies from the blueprint, navigate to Active > Anomalies.

Enhanced Routing Zone

RT (route target) import/export policies on devices that are part of an extended service govern EVPN route installation. When you create routing zones, you can specify route target policies that add import and export route targets for Apstra to use for routing zones/VRFs. Navigate to Staged > Virtual > Routing Zones and click Create Routing Zone. For more information, see Routing Zones.

The generated default route-target for routing zones is <L3 VNI>:1. You can't change this default value.

To ensure that the VTEPs receive the correct routes, make sure the L3 VNIs and route targets are identical between the blueprint and the remote EVPN domains.

Enhanced Virtual Networks

You can add additional import and export route-targets that Apstra uses for virtual networks.

The default route target that Apstra generates for virtual networks is <L2 VNI>:1. You can’t alter this.

For intra-VNI communication, the L2 VNI-specific RT is used. The import RT determines which received routes apply to a particular VNI. To establish connectivity, Layer 2 VNIs must be the same between the blueprint and the remote domains, and SVI subnets must be identical across domains.
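The matching rules above lend themselves to a pre-deployment check. This Python sketch compares virtual network definitions from the blueprint and a remote domain and reports any L2 VNIs or SVI subnets that differ. It assumes the two sides can be paired by a shared name. That is a simplification, because VNs are mapped between sites manually. The `VirtualNetwork` type and field names are hypothetical.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualNetwork:
    name: str
    l2_vni: int
    svi_subnet: str


def vn_mismatches(local: dict[str, VirtualNetwork], remote: dict[str, VirtualNetwork]) -> list[str]:
    """List L2 VNI and SVI subnet differences for VNs present on both sides."""
    problems = []
    for name in sorted(local.keys() & remote.keys()):
        a, b = local[name], remote[name]
        if a.l2_vni != b.l2_vni:
            problems.append(f"{name}: L2 VNI {a.l2_vni} != {b.l2_vni}")
        if ipaddress.ip_network(a.svi_subnet) != ipaddress.ip_network(b.svi_subnet):
            problems.append(f"{name}: SVI subnet {a.svi_subnet} != {b.svi_subnet}")
    return problems


if __name__ == "__main__":
    local = {"web": VirtualNetwork("web", 10100, "10.10.0.0/24")}
    remote = {"web": VirtualNetwork("web", 10200, "10.10.0.0/24")}
    print(vn_mismatches(local, remote))  # ['web: L2 VNI 10100 != 10200']
```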

Remote Gateway Topology Representation

Remote EVPN gateways are represented in the topology view as cloud elements with dotted-line connections to the blueprint elements with which BGP sessions are established, as shown in the image below. (The image below differs slightly from more recent versions.)