Step 1: Begin

This guide walks you through the steps to install Juniper® Paragon Automation and use it to onboard, manage, and monitor network devices.

Meet Paragon Automation

Paragon Automation provides end-to-end transport network automation and simplifies the adoption of network automation for device, network, and service life cycles from Day 0 to Day 2.

You can onboard and manage the ACX7000 Series, PTX Series, MX Series, and Cisco Systems routers listed in Paragon Automation Supported Hardware.

Install Paragon Automation

Before you install the Paragon Automation application, ensure that your server(s) meet the requirements listed in this section. A Paragon Automation cluster should contain only four nodes [virtual machines (VMs)], with three nodes acting as both primary and worker nodes and one node acting as a worker-only node.

Requirements

Hardware Requirements

Each node VM must have the following minimum hardware resources:

- 16-core vCPU
- 32-GB RAM
- 300-GB SSD (SSDs are mandatory)

These VMs do not need to be on the same server, but the nodes must be able to communicate over an L2 network.

The hardware resources needed for each node VM depend on the size of the network that you want to onboard. To get a scale and size estimate of a production deployment and to discuss detailed dimensioning requirements, contact your Juniper Partner or Juniper Sales Representative.
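As a planning aid, the minimums listed above can be written as a small pre-flight check. The following is a minimal Python sketch and is not part of Paragon Automation: the `NodeSpec` type, the `check_node` function, and the sample node names are hypothetical, and you must supply the resource values yourself (for example, from your VM inventory).

```python
from dataclasses import dataclass
from typing import List

# Minimum per-node resources, taken from the hardware requirements list.
MIN_VCPU_CORES = 16
MIN_RAM_GB = 32
MIN_DISK_GB = 300


@dataclass
class NodeSpec:
    """Planned resources for one node VM (hypothetical helper type)."""
    name: str
    vcpu_cores: int
    ram_gb: int
    disk_gb: int
    disk_is_ssd: bool


def check_node(spec: NodeSpec) -> List[str]:
    """Return a list of problems; an empty list means the minimums are met."""
    problems = []
    if spec.vcpu_cores < MIN_VCPU_CORES:
        problems.append(f"{spec.name}: {spec.vcpu_cores} vCPU cores, need at least {MIN_VCPU_CORES}")
    if spec.ram_gb < MIN_RAM_GB:
        problems.append(f"{spec.name}: {spec.ram_gb} GB RAM, need at least {MIN_RAM_GB}")
    if spec.disk_gb < MIN_DISK_GB:
        problems.append(f"{spec.name}: {spec.disk_gb} GB disk, need at least {MIN_DISK_GB}")
    if not spec.disk_is_ssd:
        problems.append(f"{spec.name}: disk is not an SSD")
    return problems


# Example: one node that meets the minimums and one that does not.
planned = [
    NodeSpec("node1", 16, 32, 300, True),
    NodeSpec("node4", 8, 32, 300, False),
]
for spec in planned:
    for problem in check_node(spec):
        print(problem)
```

The check covers only the stated minimums. Sizing for a production network still depends on the dimensioning discussion described above.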

Software Requirements

Use VMware ESXi 8.0 to deploy Paragon Automation.

Network Requirements

The four nodes must be able to communicate with each other through SSH. You need to have the following addresses available for the installation, all in the same IP network.

- Four IP addresses, one for each of the four nodes
- Network gateway IP address
- A virtual IP (VIP) address for generic ingress, shared between gNMI, SSH ingress, and the Web UI
- A VIP address for the Paragon Active Assurance Test Agent gateway
- (Recommended) A VIP address to establish Path Computation Element Protocol (PCEP) sessions between Paragon Automation and the devices, for collecting label-switched path (LSP) information from the devices
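Before installation, it can help to confirm that the planned addresses really do sit in one IP network. The following is a minimal Python sketch that uses the standard `ipaddress` module. The `check_address_plan` function, the `/24` prefix, the gateway address, and the PCE VIP value are assumptions made for illustration; the check that no address is reused is also an assumption, not a requirement stated in this guide.

```python
import ipaddress
from typing import Dict, List


def check_address_plan(network_cidr: str, addresses: Dict[str, str]) -> List[str]:
    """Return problems found in the plan; an empty list means it looks consistent."""
    network = ipaddress.ip_network(network_cidr, strict=True)
    problems = []
    seen = {}
    for role, text in addresses.items():
        addr = ipaddress.ip_address(text)
        if addr not in network:
            problems.append(f"{role} ({addr}) is outside {network}")
        if addr in seen:
            # Assumption: every role needs its own address.
            problems.append(f"{role} reuses {addr}, already assigned to {seen[addr]}")
        else:
            seen[addr] = role
    return problems


# Illustrative plan; the gateway and PCE VIP values are hypothetical.
plan = {
    "node1": "10.1.2.3",
    "node2": "10.1.2.4",
    "node3": "10.1.2.5",
    "node4": "10.1.2.6",
    "gateway": "10.1.2.1",
    "ingress-vip": "10.1.2.7",
    "test-agent-gateway-vip": "10.1.2.8",
    "pce-server-vip": "10.1.2.9",
}
for problem in check_address_plan("10.1.2.0/24", plan):
    print(problem)
```

The sketch validates the plan on paper only. It does not test SSH reachability between the nodes.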

Browser Requirements

Paragon Automation is supported on the latest versions of Google Chrome, Mozilla Firefox, and Safari.

Create and Configure VMs

A system administrator can install Paragon Automation by downloading an OVA bundle and using it to deploy the node VMs on one or more VMware ESXi servers. Alternatively, you can extract the OVF and VMDK files from the OVA bundle and use them to deploy the node VMs. Paragon Automation runs on a Kubernetes cluster with three primary/worker nodes and one worker-only node. The installation itself is air-gapped, but you need Internet access to download the OVA bundle to your computer.

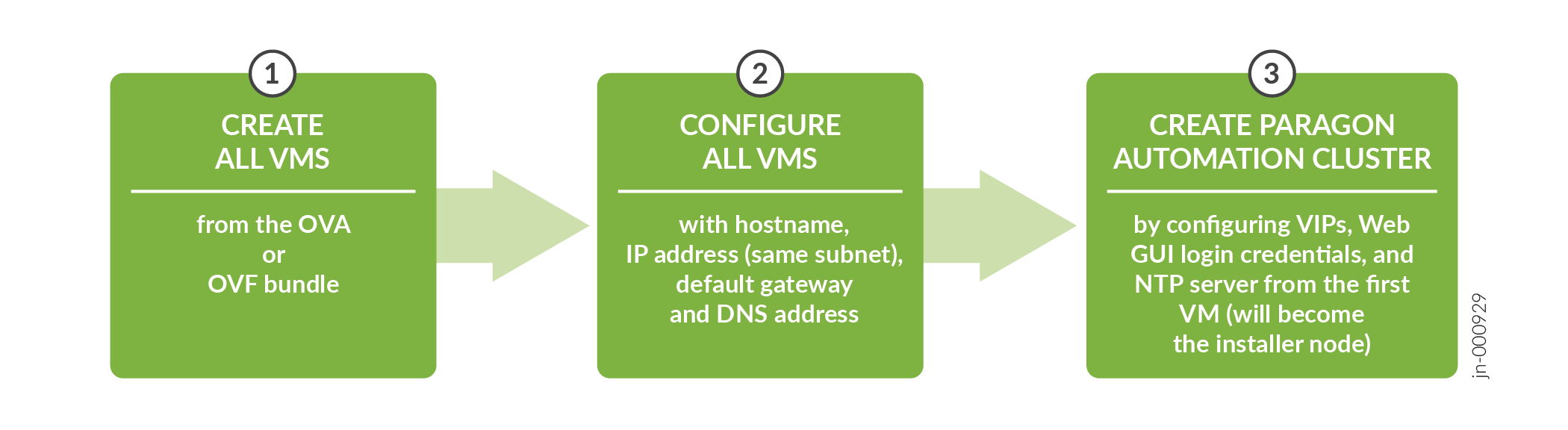

The figure above shows the workflow for installing Paragon Automation.

You use the OVA bundle (or the OVF and VMDK files extracted from it) to create your node VMs. The software download files come prepackaged with the OS and all packages required to create the VMs and deploy your Paragon Automation cluster. The VMs have a Linux base OS of Ubuntu 22.04.4 LTS (Jammy Jellyfish).

Once the VMs are created, you must configure each VM in the same way. When all the VMs are configured, you can deploy the Paragon Automation cluster from the first VM.

1. Download the OVA bundle onto your computer.

   You can use the OVA as a whole to create the VMs or, alternatively, extract the OVF and .vmdk files from the OVA and use them to create your VMs.

2. Log in to the VMware ESXi 8.0 server on which you want to install Paragon Automation.

3. Create the node VMs.

   To create the node VMs:

   a. Right-click the Host icon and select Create/Register VM.

      The New virtual machine wizard appears.

   b. On the Select creation type page, select Deploy a virtual machine from an OVF or OVA file and click Next.

   c. On the Select OVF and VMDK files page, enter a name for the node VM.

      Click to upload or drag and drop the OVA file or the OVF file along with the .vmdk files. Review the list of files to be uploaded and click Next.

   d. On the Select storage page, select a datastore that can accommodate the 300-GB SSD for the node VM.

      Click Next. The extraction of files begins and takes a few minutes.

   e. On the Deployment options page:

      - Select the virtual network to which the node VM will be connected.
      - Select the Thick disk provisioning option.
      - Enable the VM to power on automatically.

      Click Next.

   f. On the Ready to complete page, review the VM settings and click Finish to create the node VM.

   g. Power on the VM.

   h. Follow steps 3.a to 3.g to create three more nodes. Enter appropriate VM names when prompted.

      Alternatively, if you are using VMware vCenter, you can right-click the VM and select Clone > Clone to Virtual Machine to clone the newly created VM.

      Clone the VM three times to create the remaining node VMs. Enter appropriate VM names when prompted.

   i. After all the VMs are created, verify that the VMs have the correct specifications and are powered on.

4. Configure the nodes.

   To configure the nodes:

   a. Connect to the console of the first node VM.

      You are logged in to the node as the root user automatically and prompted to change your password.

   b. Enter and re-enter the new password.

      You are automatically logged out of the VM.

      Note: We recommend that you enter the same password for all the VMs.

   c. When prompted, log in again as the root user with the newly configured password.

   d. Configure the hostname and IP address of the VM, gateway, and DNS servers when prompted.

      For information, see Install Paragon Automation.

   e. When prompted, review the information displayed. If you are sure that you want to proceed, type y and press Enter.

   f. Ping each node from the other three nodes to ensure that the nodes can reach each other.

You can now deploy the cluster.
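The reachability check in the last configuration step covers every node in both directions, which for four nodes is twelve pings. The following is a minimal Python sketch that lists them so none is missed. The `ping_checks` function is a hypothetical helper, the addresses are the sample node addresses used later in this guide, and `ping -c 3` assumes the standard Linux ping utility on the node VMs.

```python
from itertools import permutations
from typing import List, Tuple

# Sample node addresses used in the cluster configuration later in this guide.
NODE_IPS = ["10.1.2.3", "10.1.2.4", "10.1.2.5", "10.1.2.6"]


def ping_checks(nodes: List[str]) -> List[Tuple[str, str]]:
    """Return every ordered (source, destination) pair of distinct nodes."""
    return list(permutations(nodes, 2))


# Print the command to run on each source node; nothing is executed here.
for source, destination in ping_checks(NODE_IPS):
    print(f"on {source}: ping -c 3 {destination}")
```

The sketch only prints a checklist. Run the pings yourself from each node console.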

Deploy the Cluster

Use the Paragon Shell CLI commands to deploy the Paragon Automation cluster.

To deploy a Paragon Automation cluster by using the Paragon Shell CLI commands:

1. Go back to the first node VM. If you have been logged out, log in again as the root user with the previously configured password.

   You are placed in Paragon Shell operational mode.

       *********************************************************************
       WELCOME TO PARAGON SHELL!
       You will now be able to execute Paragon CLI commands!
       *********************************************************************
       root@eop>

2. Enter the configuration mode in Paragon Shell.

       root@eop> configure
       Entering configuration mode
       [edit]

3. Configure the following cluster parameters.

       root@eop# set paragon cluster nodes kubernetes 1 address 10.1.2.3
       [edit]
       root@eop# set paragon cluster nodes kubernetes 2 address 10.1.2.4
       [edit]
       root@eop# set paragon cluster nodes kubernetes 3 address 10.1.2.5
       [edit]
       root@eop# set paragon cluster nodes kubernetes 4 address 10.1.2.6
       [edit]
       root@eop# set paragon cluster ntp ntp-servers pool.ntp.org
       [edit]
       root@eop# set paragon cluster common-services ingress ingress-vip 10.1.2.7
       [edit]
       root@eop# set paragon cluster applications active-assurance test-agent-gateway-vip 10.1.2.8
       [edit]
       root@eop# set paragon cluster applications web-ui web-admin-user "user-admin@juniper.net"
       [edit]
       root@eop# set paragon cluster applications web-ui web-admin-password Userpasswd
       [edit]

   Where:

   - The IP addresses of kubernetes nodes with indexes 1 through 4 must match the static IP addresses configured on the node VMs. The Kubernetes nodes with indexes 1, 2, and 3 are the primary and worker nodes; the node with index 4 is the worker-only node.
   - ntp-servers is the NTP server used for time synchronization.
   - web-admin-user and web-admin-password are the e-mail address and password that the first user can use to log in to the Web GUI.
   - ingress-vip is the VIP address for generic ingress.
   - test-agent-gateway-vip is the VIP address for the Paragon Active Assurance Test Agent gateway (TAGW).

   The VIP addresses are added to the outbound SSH configuration that is required for a device to establish a connection with Paragon Automation.
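If you keep your address plan in a script or spreadsheet, the cluster parameter commands can be generated from it to avoid typing mistakes. The following is a minimal Python sketch under that assumption. The `cluster_set_commands` function is a hypothetical helper, not a Juniper tool, and it only builds the command strings shown in this step for you to paste into Paragon Shell. The web admin password is deliberately left out so that it is not stored in a script.

```python
from typing import List


def cluster_set_commands(
    node_ips: List[str],
    ntp_server: str,
    ingress_vip: str,
    tagw_vip: str,
    admin_email: str,
) -> List[str]:
    """Build the 'set paragon cluster' commands for the cluster parameters."""
    if len(node_ips) != 4:
        raise ValueError("a Paragon Automation cluster uses exactly four nodes")
    # Kubernetes node indexes start at 1; index 4 is the worker-only node.
    commands = [
        f"set paragon cluster nodes kubernetes {index} address {ip}"
        for index, ip in enumerate(node_ips, start=1)
    ]
    commands.append(f"set paragon cluster ntp ntp-servers {ntp_server}")
    commands.append(f"set paragon cluster common-services ingress ingress-vip {ingress_vip}")
    commands.append(
        f"set paragon cluster applications active-assurance test-agent-gateway-vip {tagw_vip}"
    )
    commands.append(f'set paragon cluster applications web-ui web-admin-user "{admin_email}"')
    return commands


# Example using the sample values from this step.
for command in cluster_set_commands(
    ["10.1.2.3", "10.1.2.4", "10.1.2.5", "10.1.2.6"],
    "pool.ntp.org",
    "10.1.2.7",
    "10.1.2.8",
    "user-admin@juniper.net",
):
    print(command)
```

Enter the web-admin-password command by hand after you paste the generated lines.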

4. Configure the PCE server VIP address.

       root@eop# set paragon cluster applications pathfinder pce-server pce-server-vip pce-server-vip

   Where pce-server-vip is the VIP address used by the PCE server to establish Path Computation Element Protocol (PCEP) sessions between Paragon Automation and the devices that it manages.

5. Configure hostnames for generic ingress and the Paragon Active Assurance TAGW.

       root@eop# set paragon cluster common-services ingress system-hostname ingress-vip-dns-hostname
       [edit]
       root@eop# set paragon cluster applications active-assurance test-agent-gateway-hostname nginx-ingress-controller-hostname
       [edit]

   Where:

   - system-hostname is the hostname for the generic ingress VIP address.
   - test-agent-gateway-hostname is the hostname for the Paragon Active Assurance TAGW VIP address.

   When you configure hostnames, the hostnames are added to the outbound SSH configuration instead of the VIP addresses.

6. (Optional) Configure the following settings for SMTP-based user management.

       root@eop# set paragon cluster mail-server smtp-allowed-sender-domains sender-domains
       [edit]
       root@eop# set paragon cluster mail-server smtp-relayhost relayhost-hostname
       [edit]
       root@eop# set paragon cluster mail-server smtp-relayhost-username relayhost-username
       [edit]
       root@eop# set paragon cluster mail-server smtp-relayhost-password relayhost-password
       [edit]
       root@eop# set paragon cluster mail-server smtp-sender-email sender-e-mail-address
       [edit]
       root@eop# set paragon cluster mail-server smtp-sender-name sender-name
       [edit]
       root@eop# set paragon cluster papi papi-local-user-management false
       [edit]
       root@eop# set paragon cluster mail-server smtp-enabled true
       [edit]

   Where:

   - sender-domains are the e-mail domains from which Paragon Automation sends e-mails to users.
   - relayhost-hostname is the name of the SMTP server that relays messages.
   - relayhost-username (optional) is the username to access the SMTP (relay) server.
   - relayhost-password (optional) is the password for the SMTP (relay) server.
   - sender-e-mail-address is the e-mail address that appears as the sender's e-mail address to the e-mail recipient.
   - sender-name is the name that appears as the sender’s name in the e-mails sent to users from Paragon Automation.
   - papi-local-user-management false disables local authentication.

   Note:

   - SMTP configuration is optional at this point. You can also configure SMTP settings after the cluster has been deployed. For information about how to configure SMTP after cluster deployment, see Configure SMTP Settings in Paragon Shell.
   - For details about the behavior of Paragon Automation with different combinations of local authentication and SMTP configuration, see User Activation and Login.

7. (Optional) Install custom user certificates.

   Note: Before you install user certificates, you must copy the custom certificate file and certificate key file to the /root/epic/config folder in the Linux root shell of the node from which you are deploying the cluster.

       root@eop# set paragon cluster common-services ingress user-certificate use-user-certificate true
       [edit]
       root@eop# set paragon cluster common-services ingress user-certificate user-certificate-filename "certificate.cert.pem"
       [edit]
       root@eop# set paragon cluster common-services ingress user-certificate user-certificate-key-filename "certificate.key.pem"
       [edit]

   Where:

   - certificate.cert.pem is the user certificate file name.
   - certificate.key.pem is the user certificate key file name.

   Note: Installing certificates is optional at this point. You can also configure Paragon Automation to use custom user certificates after cluster deployment. For information about how to install user certificates after cluster deployment, see Install User Certificates.

8. Commit the configuration and exit configuration mode.

       root@eop# commit
       commit complete
       [edit]
       root@eop# exit
       Exiting configuration mode
       root@eop>

9. Generate the configuration files.

       root@eop> request paragon config
       Paragon inventory file saved at /epic/config/inventory
       Paragon config file saved at /epic/config/config.yml

   The inventory file contains the IP addresses of the VMs. The config.yml file contains the minimum Paragon Automation cluster parameters required to deploy a cluster.

   The request paragon config command also generates a config.cmgd file in the config directory. The config.cmgd file contains all the set commands that you executed in step 3. If the config.yml file is inadvertently edited or corrupted, you can redeploy your cluster by using the load set config/config.cmgd command in configuration mode.

10. Generate SSH keys on the cluster nodes.

    When prompted, enter the SSH password for the VMs. Enter the same password that you configured to log in to the VMs.

        root@eop> request paragon ssh-key
        Setting up public key authentication for ['10.1.2.3','10.1.2.4','10.1.2.5','10.1.2.6']
        Please enter SSH username for the node(s): root
        Please enter SSH password for the node(s): password
        checking server reachability and ssh connectivity ...
        Connectivity ok for 10.1.2.3
        Connectivity ok for 10.1.2.4
        Connectivity ok for 10.1.2.5
        Connectivity ok for 10.1.2.6
        SSH key pair generated in 10.1.2.3
        SSH key pair generated in 10.1.2.4
        SSH key pair generated in 10.1.2.5
        SSH key pair generated in 10.1.2.6
        copied from 10.1.2.3 to 10.1.2.3
        copied from 10.1.2.3 to 10.1.2.4
        copied from 10.1.2.3 to 10.1.2.5
        copied from 10.1.2.3 to 10.1.2.6
        copied from 10.1.2.4 to 10.1.2.3
        copied from 10.1.2.4 to 10.1.2.4
        copied from 10.1.2.4 to 10.1.2.5
        copied from 10.1.2.4 to 10.1.2.6
        copied from 10.1.2.5 to 10.1.2.3
        copied from 10.1.2.5 to 10.1.2.4
        copied from 10.1.2.5 to 10.1.2.5
        copied from 10.1.2.5 to 10.1.2.6
        copied from 10.1.2.6 to 10.1.2.3
        copied from 10.1.2.6 to 10.1.2.4
        copied from 10.1.2.6 to 10.1.2.5
        copied from 10.1.2.6 to 10.1.2.6

    Note: If you have configured different passwords for the VMs, ensure that you enter the corresponding passwords when prompted.

11. Deploy the cluster.

        root@eop> request paragon deploy cluster
        Process running with PID: 231xx03
        To track progress, run 'monitor start /epic/config/log'
        After successful deployment, please exit Paragon-shell and then re-login to the host to finalize the setup

    The cluster deployment begins and takes over an hour to complete.

12. (Optional) Monitor the progress of the deployment onscreen.

        root@eop> monitor start /epic/config/log

    The progress of the deployment is displayed. Deployment is complete when you see output similar to this onscreen.

        <output snipped>
        PLAY RECAP ********************************************************************************************
        10.1.2.3 : ok=109 changed=33 unreachable=0 failed=0 rescued=0 ignored=0
        10.1.2.4 : ok=34 changed=1 unreachable=0 failed=0 rescued=0 ignored=0
        10.1.2.5 : ok=34 changed=1 unreachable=0 failed=0 rescued=0 ignored=0
        10.1.2.6 : ok=30 changed=0 unreachable=0 failed=0 rescued=0 ignored=0
        Monday 15 July 2024 18:56:14 +0000 (0:00:00.819) 0:01:23.328 ***********
        ===============================================================================
        Unpack 3rdparty OS packages -------------------------------------------------------------------- 8.59s
        Gathering Facts -------------------------------------------------------------------------------- 4.18s
        add etcd user on running nodes ----------------------------------------------------------------- 3.85s
        Gathering Facts -------------------------------------------------------------------------------- 3.61s
        Gathering Facts -------------------------------------------------------------------------------- 3.04s
        Gathering Facts -------------------------------------------------------------------------------- 2.98s
        readonly : Synchronize k8s readonly config to rest of nodes ------------------------------------ 2.07s
        kubernetes/addons/traefik : Install Helm Chart ------------------------------------------------- 1.82s
        kubernetes/addons/traefik-paa : Install Helm Chart --------------------------------------------- 1.74s
        Record installation status --------------------------------------------------------------------- 1.64s
        kubernetes/addons/metadata : Create common/metadata -------------------------------------------- 1.38s
        kubernetes/addons/traefik-paa : upload ingress spec files -------------------------------------- 1.30s
        kubernetes/addons/traefik : upload ingress spec files ------------------------------------------ 1.28s
        kubernetes/addons/rook-quota : get rook total capacity ----------------------------------------- 1.25s
        kubernetes/addons/traefik : apply traefik ingress routes --------------------------------------- 1.09s
        Record installation status --------------------------------------------------------------------- 0.98s
        kubernetes/addons/traefik : apply traefik additional services ---------------------------------- 0.90s
        kubernetes/addons/traefik : create default ingress cert ---------------------------------------- 0.89s
        kubernetes/addons/metadata : Create config_yml ------------------------------------------------- 0.87s
        kubernetes/addons/metadata : Get docker labels ------------------------------------------------- 0.86s
        Playbook run took 0 days, 1 hours, 1 minutes, 23 seconds
        registry-5749
        root@eop>

    Alternatively, if you did not choose to monitor the progress of the deployment onscreen using the monitor command, you can view the contents of the log file using the file show /epic/config/log command. The last few lines of the log file should look similar to the sample output above. We recommend that you check the log file periodically to monitor the progress of the deployment.

Upon successful completion of the deployment, the Paragon Automation cluster is created.

The console output displays the Paragon Shell welcome message and the IP addresses of the four nodes (called Controller-1 through Controller-4), the Paragon Active Assurance TAGW VIP address, the Web admin user e-mail address, and the Web GUI IP address.

    Welcome to Juniper Paragon Automation OVA
    This VM 10.1.2.3 is part of an EPIC on-prem system.
    ===============================================================================
    Controller IP : 10.1.2.3, 10.1.2.4, 10.1.2.5, 10.1.2.6
    PAA Virtual IP : 10.1.2.8
    UI : https://10.1.2.7
    Web Admin User : admin-user@juniper.net
    ===============================================================================
    ova: 20240503_2010
    build: eop-release-2.0.0.6928.g6be8b6ce52
    ***************************************************************
    WELCOME TO PARAGON SHELL!
    You will now be able to execute Paragon CLI commands!
    ***************************************************************
    root@Primary1>

The CLI command prompt displays your login username and the node hostname that you configured previously. For example, if you entered Primary1 as the hostname of your primary node, the command prompt is root@Primary1>.
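If you save a copy of the deployment log, the PLAY RECAP summary from the monitoring step can be checked with a script instead of by eye. The following is a minimal Python sketch. The `parse_recap` and `deployment_succeeded` functions are hypothetical helpers, and treating any nonzero failed or unreachable counter as a problem is an assumption based on the usual meaning of those counters in Ansible-style recaps; this guide itself only says that the output should look similar to the sample.

```python
import re
from typing import Dict

# Matches recap lines such as:
# 10.1.2.3 : ok=109 changed=33 unreachable=0 failed=0 rescued=0 ignored=0
RECAP_LINE = re.compile(r"^(?P<host>\S+)\s*:\s*(?P<counters>(?:\w+=\d+\s*)+)$")


def parse_recap(log_text: str) -> Dict[str, Dict[str, int]]:
    """Extract per-host counters from the lines that follow 'PLAY RECAP'."""
    results: Dict[str, Dict[str, int]] = {}
    in_recap = False
    for line in log_text.splitlines():
        if line.startswith("PLAY RECAP"):
            in_recap = True
            continue
        if not in_recap:
            continue
        match = RECAP_LINE.match(line.strip())
        if match:
            pairs = (item.split("=") for item in match["counters"].split())
            results[match["host"]] = {name: int(value) for name, value in pairs}
    return results


def deployment_succeeded(recap: Dict[str, Dict[str, int]]) -> bool:
    """Assumption: success means every host reports failed=0 and unreachable=0."""
    return bool(recap) and all(
        counters.get("failed", 0) == 0 and counters.get("unreachable", 0) == 0
        for counters in recap.values()
    )


# Example using two lines from the sample output in this guide.
sample = """PLAY RECAP ****
10.1.2.3 : ok=109 changed=33 unreachable=0 failed=0 rescued=0 ignored=0
10.1.2.4 : ok=34 changed=1 unreachable=0 failed=0 rescued=0 ignored=0
"""
print(deployment_succeeded(parse_recap(sample)))
```

An empty recap is reported as not successful, so a log that has not yet reached the summary is never mistaken for a finished deployment.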

You can now log in to the Paragon Automation GUI by using the Web admin user ID and password.

Log in to Paragon Automation

To log in to the Paragon Automation Web GUI:

1. Enter https://web-ui-ip-address in a Web browser to open the Paragon Automation login page.

2. Enter the Web admin user e-mail address and password that you configured while deploying Paragon Automation.

   The New Account page appears. You are now logged in to Paragon Automation. You can now create organizations, sites, and users.

Add an Organization, a Site, and Users

Add an Organization

After you log in to the Paragon Automation GUI for the first time after installation, you must create an organization. After you create the organization, you are the Super User for the organization.

You can add only one organization in this release. Adding more than one organization can lead to performance issues and constrain the disk space in the Paragon Automation cluster.

To create an organization:

After you create an organization, you can add sites and users to the organization.

Create a Site

A site represents the location where devices are installed. You must be a Super User to add a site.

Add Users

The Super User can add users and define roles for the users.

To add a user to the organization: