Foundation Principles

Several years ago, virtualization was the most fashionable keyword in IT because it revolutionized the way servers were built. Virtualization was about the adoption of virtual machines (VMs) instead of dedicated, physical servers for hosting and building new applications. When it came to scaling, portability, capacity management, cost, and more, VMs were a clear winner (as they are today). You can find tons of comparisons between the two approaches.

If virtualization was the keyword then, the keywords now are cloud, SDN, and containers.

Today, the heavily discussed comparisons are between VMs and containers, and how containers promise a new way to build and scale applications. While many small organizations are thinking of containers as something too wild, or too early, to adopt, the simple fact is that from Gmail to YouTube to Search, everything at Google runs in containers, and they run two billion containers a week. This might give you a clue as to where the industry is heading.

But what is a container and how is it comparable to a VM? Let’s start this Day One book with a comparison.

Containers Overview

From a technical perspective, the concept of a container is rooted in the namespaces and cgroups features of the Linux kernel, but the term is also inspired by the actual metal cargo shipping containers that you see on seafaring ships. Both kinds of containers share the ability to isolate contents, maintain carrier independence, offer portability, and much more.

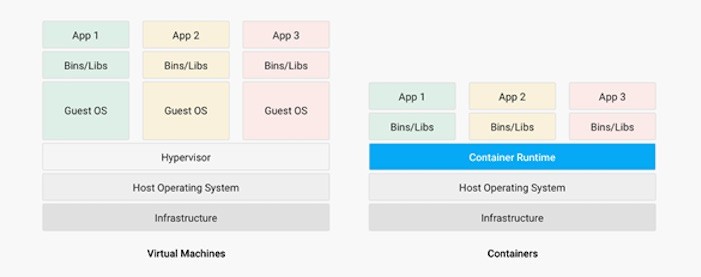

Containers are a logical packaging mechanism. You can think of containers as lightweight virtualization that runs an application and its dependencies in the same operating system, but in different contexts, which removes the need to replicate an entire OS, as shown in Figure 1. By doing this, the application is confined in a lightweight package that can be developed and tested individually, then implemented and scaled much faster than a traditional VM. Developers just need to build and configure this lightweight piece of software so that most of the application is containerized and publicly available, without the need to manage and support the application per OS.
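To make the "same operating system, different contexts" idea concrete, the following minimal sketch lists the Linux namespaces that the calling process belongs to. It assumes a Linux host with `/proc` mounted; the function name `current_namespaces` is hypothetical and used only for illustration.

```python
import os

NS_DIR = "/proc/self/ns"  # Linux-only; absent on other operating systems


def current_namespaces(ns_dir=NS_DIR):
    """Return {namespace_entry: identifier} for the calling process.

    Each entry under /proc/<pid>/ns is a symbolic link whose target looks
    like 'net:[4026531992]'. Two processes that show the same identifier
    for an entry share that namespace.
    """
    namespaces = {}
    if not os.path.isdir(ns_dir):
        return namespaces  # not Linux, or /proc is not mounted
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # skip entries this process is not allowed to inspect
    return namespaces
```

Running `current_namespaces()` once on the host and once inside a container on that host would typically return different identifiers for entries such as `pid`, `net`, and `mnt`, even though both processes use the same kernel.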

Many developers would call the container runtime shown in Figure 1 the "hypervisor of containers." Although this term is not technically correct, it may be useful in visualizing the hierarchy.

In VM technologies, the most common hypervisors are KVM and VMware ESX/ESXi. In container technologies, Docker and rkt are the most common runtimes, with Docker being the most widely deployed. Let’s review some useful numbers in comparing VMs with containers.

Juniper vSRX versus cSRX

Currently, most common applications such as Redis, Nginx, MongoDB, MySQL, WordPress, Jenkins, Kibana, and Perl have been containerized and are offered publicly at https://hub.docker.com, allowing developers to quickly build and test their applications.

There are lots of available tests that compare performance and scaling for any given application running in containers versus VMs. The comparisons all dwell on the benefits of running your application in containers, but what about network functions virtualization (NFV) workloads such as firewalls, NAT, routing, and more?

When it comes to VM-based NFV, most network vendors already offer a virtualized flavor of their hardware equipment that can run on a hypervisor on standard x86 hardware. Built on Junos, vSRX is a Juniper Networks SRX Series Services Gateway in a virtualized form factor that delivers networking and security features similar to those available on the physical SRX. Container-based NFV is the new trend: Juniper cSRX is the industry’s first containerized firewall, offering a compact footprint and high-density firewalling for virtualized and cloud environments. Table 1 compares vSRX and cSRX and shows how the cSRX is the lightweight NFV option.

Table 1: vSRX versus cSRX

| | vSRX | cSRX |
|---|---|---|
| Use cases | Integrated routing, security, NAT, VPN, high performance | L4-L7 security, low footprint |
| Memory requirement | 4 GB minimum | In MBs |
| NAT | Yes | Yes |
| IPsec VPN | Yes | No |
| Boot-up time | Minutes | < 1 second |
| Image size | In GBs | In MBs |

Using microservices techniques, the application can be split into smaller services, with each part (a container in this case) doing a specific job.

Understanding Docker

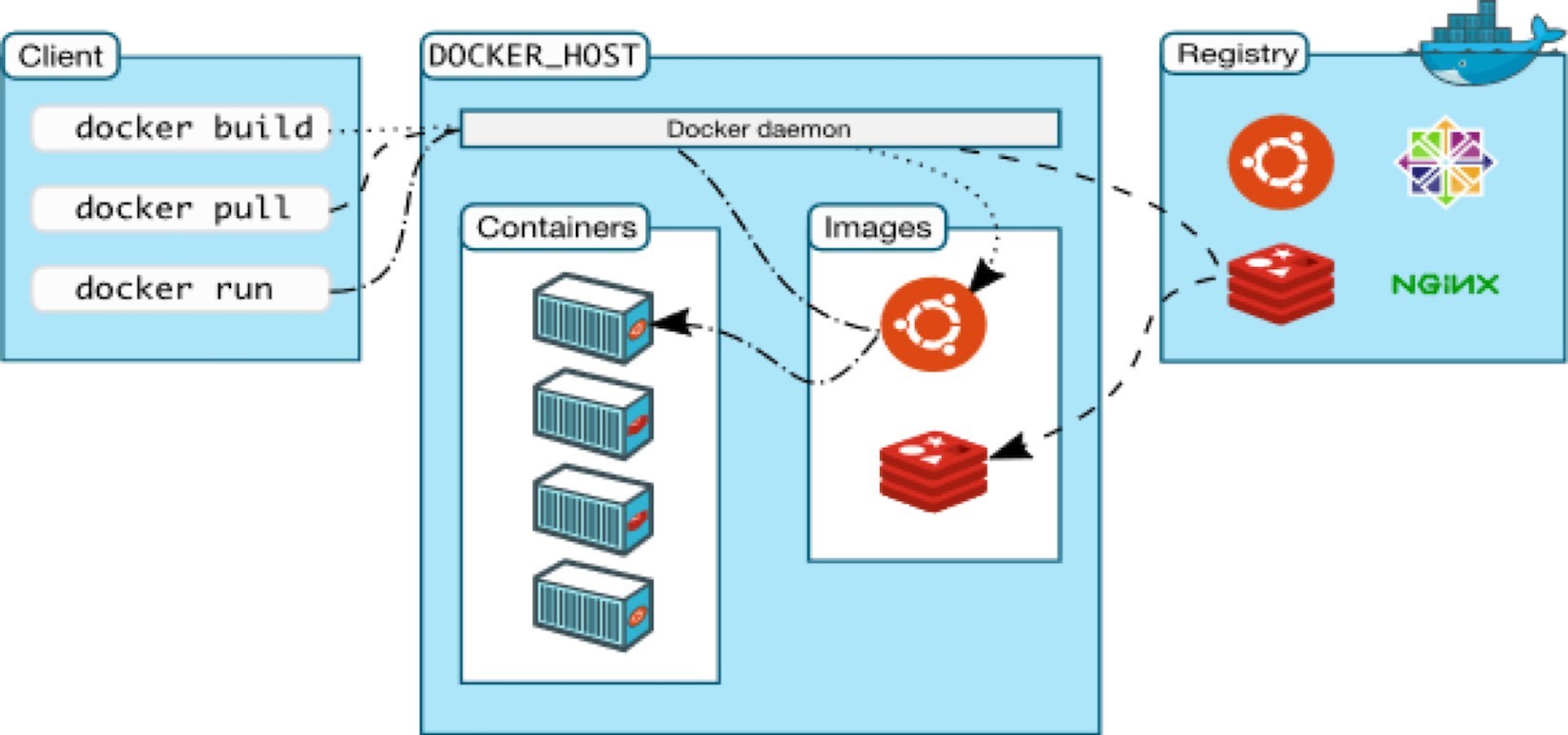

As discussed, containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and ship them all out as one package. Docker is software that facilitates creating, deploying, and running containers.

The starting point is the source for the Docker image, the Dockerfile. From there you build the image, store and distribute it through a registry (most commonly Docker Hub), and then use the image to run containers.
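The build, distribute, and run flow described above can be sketched with the standard `docker build`, `docker push`, and `docker run` commands. The Python helpers below only assemble and optionally execute those commands. The function names, the image name, and the container name are hypothetical, and executing them assumes a working Docker installation and a registry you are allowed to push to.

```python
import subprocess


def docker_workflow(image, context_dir, container_name):
    """Return the three CLI invocations for build, push, and run.

    image          -- full image reference, for example 'registry/repo:tag'
    context_dir    -- directory that contains the Dockerfile
    container_name -- name to give the running container
    """
    return [
        # Build an image from the Dockerfile in context_dir and tag it.
        ["docker", "build", "-t", image, context_dir],
        # Upload the tagged image to the registry named in the tag.
        ["docker", "push", image],
        # Start a detached container from that image.
        ["docker", "run", "-d", "--name", container_name, image],
    ]


def run_workflow(commands):
    """Execute each command in order, stopping at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)
```

For example, `docker_workflow("registry.example.com/demo/web:1.0", ".", "web-1")` returns the three command lines without running anything, which makes the sequence easy to inspect before handing it to `run_workflow`.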

Docker uses the client-server architecture shown in Figure 2. The Docker client and daemon can run on the same system, or you can connect a Docker client to a remote Docker daemon. The Docker daemon does the heavy lifting of building, running, and distributing your Docker containers. The Docker client and daemon communicate using a REST API over UNIX sockets or a network interface.
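As an illustration of the client and daemon talking REST over a UNIX socket, here is a minimal sketch that uses only the Python standard library. It assumes the daemon listens on `/var/run/docker.sock`, which is the usual default on Linux but may differ on your system, and that the caller has permission to open that socket. The class and function names are hypothetical.

```python
import http.client
import json
import socket

DOCKER_SOCKET = "/var/run/docker.sock"  # assumed default socket path


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # The host name is only used for the Host header; no TCP is involved.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path, socket_path=DOCKER_SOCKET):
    """Send GET <path> to the daemon and return (status, decoded JSON body)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read().decode("utf-8")
        return response.status, json.loads(body)
    finally:
        conn.close()
```

Calling `docker_get("/version")` against a running daemon returns the HTTP status and a dictionary describing the engine, and `docker_get("/containers/json")` returns the list of running containers. The `docker` CLI performs the same kind of requests on your behalf.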

Containers don’t exist in a vacuum, and in production environments you won’t have just one host with multiple containers, but rather multiple hosts running hundreds, if not thousands, of containers, which raises two important questions:

How do these containers communicate with each other on the same host or in different hosts, as well as with the outside world? (Basically, the networking parts of containers.)

Who determines which containers get launched on which host? Based on what? Upgrade? Number of containers per application? Basically, who orchestrates that?

These two questions are answered, in detail, throughout the rest of the book, but if you want a quick answer, just think Juniper Contrail and Kubernetes!

Let’s start with the basic foundations of the Juniper Contrail Platform.

Contrail Platform Overview

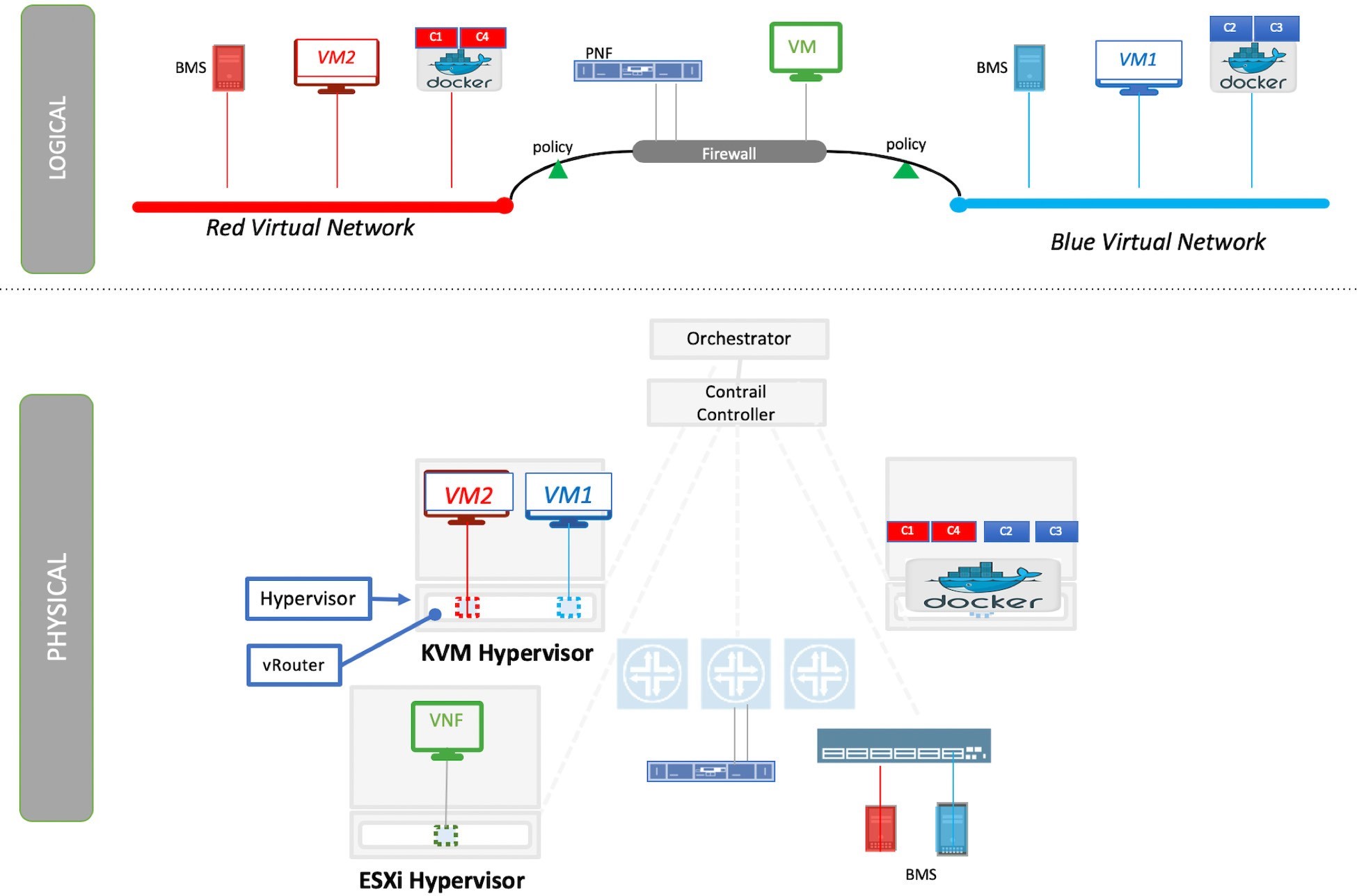

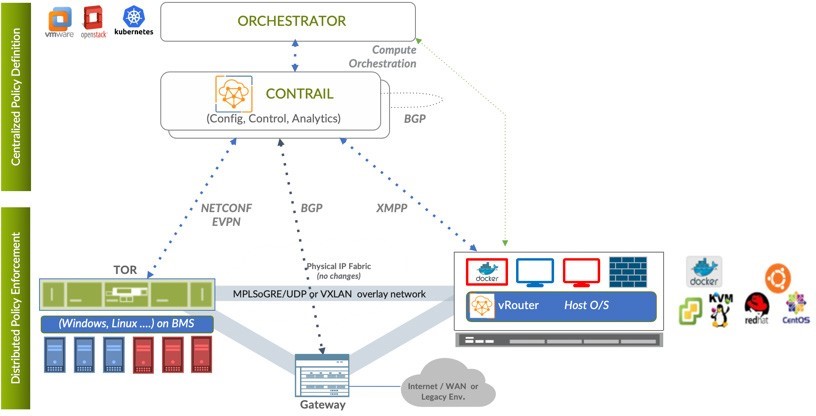

The Juniper Contrail Platform provides dynamic end-to-end networking, networking policy, and control for any cloud, any workload, and any deployment, all from a single user interface. Although the focus of this book is building a secure container network orchestrated by Kubernetes, Contrail can build virtual networks that integrate containers, VMs, and bare metal servers.

Virtual networks are a key concept in the Contrail system. Virtual networks are logical constructs implemented on top of physical networks. They are used to replace VLAN-based isolation and provide multitenancy in a virtualized data center. Each tenant or application can have one or more virtual networks. Each virtual network is isolated from all the other virtual networks unless explicitly allowed by network policy. Virtual networks can be extended to physical networks using a gateway. Finally, virtual networks are used to build service chaining.
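The default-isolated, policy-opened behavior of virtual networks can be pictured with a small toy model. This is not Contrail’s data model or API. The class names are hypothetical, and the policy is simplified to an unordered pair of networks that may talk in both directions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class VirtualNetwork:
    """A logical network owned by a tenant (toy representation)."""
    name: str
    tenant: str


@dataclass
class PolicyTable:
    """Holds the pairs of virtual networks that a policy explicitly allows."""
    allowed: set = field(default_factory=set)

    def allow(self, a, b):
        # Store the pair unordered, so the permission works in both directions.
        self.allowed.add(frozenset((a.name, b.name)))

    def can_communicate(self, src, dst):
        if src == dst:
            return True  # endpoints in the same virtual network can talk
        # Different virtual networks are isolated unless a policy allows them.
        return frozenset((src.name, dst.name)) in self.allowed
```

With networks `red` and `blue` and an empty `PolicyTable`, `can_communicate(red, blue)` is false. After `allow(red, blue)` it becomes true, while a third network `green` stays isolated from both.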

As shown in Figure 3, the network operator only deals with the logical abstraction of the network. Contrail then does the heavy lifting, which includes, but is not limited to, building policies, exchanging routes, and building tunnels on the physical topology.

Contrail Architecture Fundamentals

Contrail runs in a logically centralized, physically distributed model with two main components: the Contrail controller and the Contrail vRouter. The controller is the control and management plane that manages and configures the vRouter, and collects and presents analytics. The Contrail vRouter is the forwarding plane that provides Layer 2 and Layer 3 services and distributed firewall capabilities, while implementing policies between virtual networks.

Contrail integrates with many orchestrators such as OpenStack, VMware, Kubernetes, OpenShift, and Mesos. It uses multiple protocols to provide SDN to these orchestrators, as shown in Figure 4. Extensible Messaging and Presence Protocol (XMPP) is an open XML technology for real-time communication, defined in RFC 6120. In Contrail, XMPP provides two main functions: distributing routing information and pushing configuration. These roles are similar to what IBGP does in MPLS VPN models and what NETCONF does in device management.

Figure 4 also illustrates:

BGP is used to exchange routes with physical routers, and the Contrail device manager can use NETCONF to configure these gateway routers.

Ethernet VPN (EVPN) is a standards-based technology, RFC 7432, that provides virtual multipoint bridged connectivity between different Layer 2 domains over an IP network. Contrail Controller exchanges EVPN routes with TOR switches (acting as a Layer 2 VXLAN gateway) to offer faster recovery with active-active VXLAN forwarding.

MPLSoGRE, MPLSoUDP, and VXLAN are three different kinds of overlay tunnels that carry traffic over IP networks. All three are carried in IP packets, but in VXLAN you use the VNI value in the VXLAN header for segmentation, whereas in MPLSoGRE and MPLSoUDP you use the MPLS label value for segmentation.
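To show where the segmentation value sits in each encapsulation, the sketch below packs and unpacks the 8-byte VXLAN header (layout per RFC 7348) and a 4-byte MPLS label stack entry (layout per RFC 3032). Only these inner headers are modeled; the outer IP, UDP, or GRE headers are left out. The function names are hypothetical.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid


def pack_vxlan_header(vni):
    """Build the 8-byte VXLAN header carrying a 24-bit VNI."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8 flag bits + 24 reserved bits. Word 2: 24-bit VNI + 8 reserved.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def unpack_vxlan_vni(header):
    """Extract the VNI from the first 8 bytes of a VXLAN header."""
    _flags_word, vni_word = struct.unpack("!II", header[:8])
    return vni_word >> 8


def pack_mpls_entry(label, tc=0, bottom_of_stack=True, ttl=64):
    """Build a 4-byte MPLS label stack entry with a 20-bit label."""
    if not 0 <= label < 2 ** 20:
        raise ValueError("MPLS label must fit in 20 bits")
    # Layout: 20-bit label, 3-bit traffic class, 1-bit S flag, 8-bit TTL.
    word = (label << 12) | ((tc & 0x7) << 9) | (int(bottom_of_stack) << 8) | (ttl & 0xFF)
    return struct.pack("!I", word)


def unpack_mpls_label(entry):
    """Extract the 20-bit label from a 4-byte MPLS label stack entry."""
    (word,) = struct.unpack("!I", entry[:4])
    return word >> 12
```

The difference in field width is visible here: a VNI has 24 bits and an MPLS label has 20 bits, but in both cases the receiving side reads one integer from the tunnel header to decide which virtual network the packet belongs to.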

To simplify the relationship between the Contrail vRouter, the Contrail Controller, and the IP fabric from an architectural perspective, compare it to a service provider’s MPLS VPN model, where the vRouter is like a PE router and the VM or container is like a CE. The difference is that the vRouter is just a tool of the Contrail Controller. When it comes to bare metal servers, the top-of-rack switch would be the PE.

This Day One book uses the terms compute node and host interchangeably. Both mean the entity that hosts the containers. This host could be a physical server in your data center, or a VM in either your data center or the public cloud.

Contrail vRouter

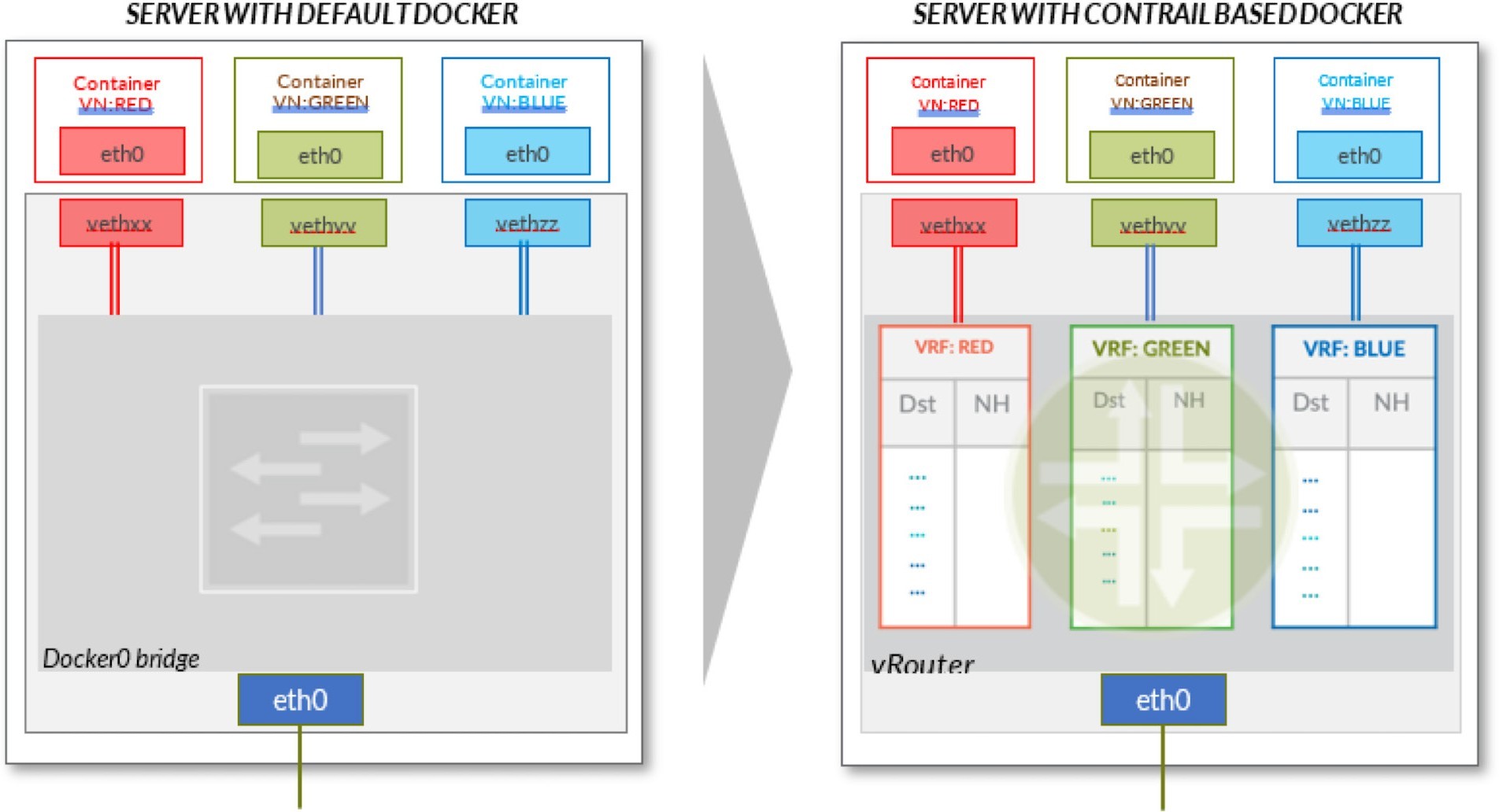

Contrail vRouter is composed of the Contrail components on the compute node/host shown in Figure 5. In the default Docker setup, containers on a compute node communicate with each other, as well as with containers and services hosted on other hosts, through a Docker bridge. In Contrail networking, the vRouter on each compute node creates a VRF table per virtual network, offering a long list of features.

From the perspective of the control plane, the Contrail vRouter:

Receives low-level configuration (routing instances and forwarding policy).

Exchanges routes.

Installs forwarding state into the forwarding plane.

Reports analytics (logs, statistics, and events).

From the perspective of the data plane, the Contrail vRouter:

Assigns received packets from the overlay network to a routing instance based on the MPLS label or Virtual Network Identifier (VNI).

Proxies DHCP, ARP, and DNS.

Applies forwarding policy to the first packet of each new flow, then programs the action into the flow entry in the flow table of the forwarding plane.

Forwards the packets after a destination address lookup (IP or MAC) in the Forwarding Information Base (FIB), encapsulating/decapsulating packets sent to or received from the overlay network.
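The data-plane steps listed above can be pictured with a toy model: pick the routing instance from the label or VNI, evaluate policy only for the first packet of a flow, cache the action in a flow table, and then do a longest-prefix lookup in that instance’s FIB. This is an illustrative sketch, not the vRouter implementation. All class names, the 5-tuple flow key, and the policy callback are assumptions.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class RoutingInstance:
    """One VRF: a name plus a FIB that maps IP prefixes to next hops."""
    name: str
    fib: dict = field(default_factory=dict)  # ip_network -> next-hop name

    def lookup(self, dst_ip):
        """Longest-prefix match on the destination address."""
        addr = ipaddress.ip_address(dst_ip)
        matches = [net for net in self.fib if addr in net]
        if not matches:
            return None
        return self.fib[max(matches, key=lambda net: net.prefixlen)]


class ToyVRouter:
    def __init__(self, policy):
        self.instances = {}          # MPLS label or VNI -> RoutingInstance
        self.flow_table = {}         # flow key -> cached action
        self.policy = policy         # callable(flow_key) -> "forward" or "drop"
        self.policy_evaluations = 0  # counts how often policy actually ran

    def add_instance(self, segment_id, instance):
        self.instances[segment_id] = instance

    def receive(self, segment_id, src_ip, dst_ip, proto, src_port, dst_port):
        """Process one packet that arrived from the overlay."""
        # Step 1: map the label or VNI to a routing instance.
        instance = self.instances.get(segment_id)
        if instance is None:
            return ("drop", None)
        # Step 2: policy runs only for the first packet of a flow.
        key = (instance.name, src_ip, dst_ip, proto, src_port, dst_port)
        action = self.flow_table.get(key)
        if action is None:
            self.policy_evaluations += 1
            action = self.policy(key)
            self.flow_table[key] = action
        if action != "forward":
            return ("drop", None)
        # Step 3: destination lookup in this instance's FIB.
        next_hop = instance.lookup(dst_ip)
        return ("forward", next_hop) if next_hop is not None else ("drop", None)
```

Sending the same 5-tuple twice through `receive` increments `policy_evaluations` only once, which is the point of programming the action into the flow entry: later packets of the flow skip policy evaluation and go straight to the FIB lookup.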