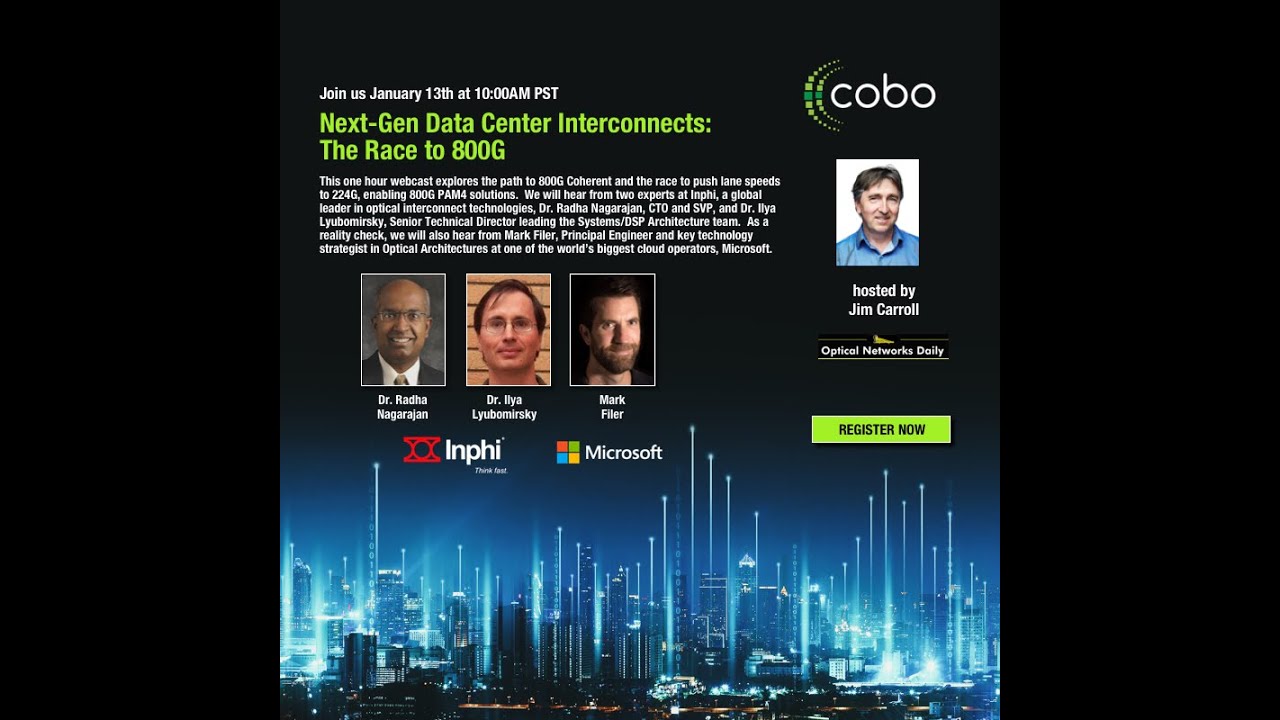

Next-Gen Data Center Interconnects: The Race to 800G Webcast with Inphi and Microsoft

Mark Filer: “People are expecting something higher than 400G and they are expecting it soon.”

Watch this fascinating webcast to gain a new perspective on 800G. Two experts from Inphi, a global leader in optical interconnect technologies, and Mark Filer from Microsoft explore the path to 800G Coherent and the race to push lane speeds to 224G.

You’ll learn

How data centers have evolved and where 800G fits in

Whether there is demand for 800G among network operators (hint: there is)

The innovation, architectures, and hardware that future data center networks will require


Transcript

0:00 [Music]

0:13 So I've really been looking forward to this webinar on 800G, The Race to 800G. As we know, 400G,

0:20 400ZR, is just starting to come to market now, so what we're talking about here is sort

0:26 of the next-next thing on the horizon. We've got two great companies represented here. First, Inphi,

0:34 which, as many of you may know, has been in the news a lot over the past year, both for business

0:41 reasons, which we can't really talk about in this webinar, and for technology reasons.

0:47 It was about a year ago that they started sampling their Spica 800G

0:54 7 nm DSP, which provides a pathway to things such as 8 × 100G optical transceiver modules.

1:02 Very interesting. And of course we have the perspective of one of the largest

1:07 hyperscale cloud operators in the world, which I think will give us a real reality check as to

1:15 whether this next wave on the horizon is something that is coming soon, perhaps,

1:21 or perhaps a little bit later. So we've got a lot to talk about. Let's kick it off. Radha,

1:28 take it away. Hi, this is Radha Nagarajan from Inphi. Thank you for the great introduction.

1:36 Together with Ilya Lyubomirsky, we're going to give you a perspective

1:41 on 800G. Since there's a wide spectrum of

1:47 you in the audience, I'm going to take a step back and just look at data centers,

1:55 data center architectures, how they've evolved, and where 800G fits in. There's been

2:02 a considerable evolution in interconnect, and data centers in general

2:10 have gone from hierarchical structures with a lot of oversubscription to

2:16 flatter and flatter architectures. It's a little bit of an

2:21 exaggeration; this slide is borrowed from Microsoft. What has happened over the last couple

2:28 of years: elimination of layers, very high-speed interconnects between layers, minimization of oversubscription,

2:35 and data center aggregation. What has actually driven this is the shift to

2:42 an interconnect-rich environment: machine-to-machine traffic, a lot of what

2:48 we call east-west traffic, and also very high-radix interconnection

2:54 between servers, with elimination of switching layers

2:59 for applications that require lower and lower latency.

3:04 So what I'm going to focus on is radix, and I'm going to tell you why it matters

3:10 and how it drives data center interconnect and interconnect speeds.

3:15 Here's a chart that we borrowed from Facebook. What it shows

3:22 is the switch radix versus the number of levels that you

3:28 need to interconnect a given number of servers.

3:33 For example, if your radix is 32, which is out here, and you wanted to interconnect 100,000

3:40 servers, which is a typical size for a fairly large data center, you probably need four levels of network.

3:48 And if you were to go to something like 128,

3:53 you need something between two and three levels; essentially, you need three levels. So the radix

4:00 is essentially how many small tributaries your switch gets

4:05 split up into, and that determines what your interconnect speeds are.
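The radix-versus-levels relationship can be sketched with the textbook capacity formula for a non-blocking folded-Clos (fat-tree) network built from identical switches of radix k, which serves 2·(k/2)^L servers with L switching levels. That formula is an assumption of this sketch: the Facebook chart may count levels or oversubscription differently, and the function names are illustrative only. Under this assumption it reproduces the four-level (radix 32) and three-level (radix 128) answers quoted for 100,000 servers.

```python
def fat_tree_hosts(radix: int, levels: int) -> int:
    """Servers supported by a non-blocking folded-Clos (fat-tree) network
    of identical switches: 2 * (radix / 2) ** levels."""
    return 2 * (radix // 2) ** levels


def levels_needed(radix: int, servers: int) -> int:
    """Smallest number of switching levels whose capacity reaches `servers`."""
    levels = 1
    while fat_tree_hosts(radix, levels) < servers:
        levels += 1
    return levels


for radix in (32, 64, 128):
    print(radix, levels_needed(radix, 100_000))
```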

4:12 Where do we actually care about radix, at least from this perspective?

4:20 It drives the interconnect speeds. I have switch generations here: 12.8T is in

4:28 deployment, 25.6T is coming up, and 51.2T is the one after that.

4:33 At 12.8T and radix 32 (a simplified view, since even for a given radix the data center

4:39 architectures are quite different between operators),

4:44 you have a 400G interconnect; if you had radix 128, and there are examples of both,

4:50 your optical interconnect speeds become 100G. As you go to 25.6T and 51.2T at radix 32, you go

4:58 to 800G and 1.6T; at a radix of 128, you go from 100G

5:04 to 200G and 400G. As you can well imagine, we have the whole breadth of interconnects out there, deployed

5:11 differently under different architectures, all the way from 100G to 400G, and 800G is coming, trust me. I can't

5:19 say who is deploying and who isn't; that isn't the focus of the talk. But we are getting there.
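A simplified sketch of the speeds quoted above, assuming every port of the switch ASIC runs at the same rate (capacity divided by radix) and taking the third generation as 51.2 Tb/s; real deployments mix port speeds and breakouts. The dictionary and function names are hypothetical.

```python
# Switch ASIC capacity per generation, in Gb/s (assumed nominal values).
SWITCH_CAPACITY_GBPS = {"12.8T": 12_800, "25.6T": 25_600, "51.2T": 51_200}


def port_speed_gbps(capacity_gbps: int, radix: int) -> float:
    """Per-port speed when the ASIC is split into `radix` equal ports."""
    return capacity_gbps / radix


for name, capacity in SWITCH_CAPACITY_GBPS.items():
    speeds = {radix: port_speed_gbps(capacity, radix) for radix in (32, 128)}
    print(name, speeds)
```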

5:26 So we want to go 2x in speed. What do we do? Let's start with 400G.

5:32 Today 400G comes in two flavors. One is for interconnects between data centers:

5:38 60 GBd 16QAM on one lambda. The other is 53 GBd PAM4

5:45 on four lambdas. This is what a PAM signal looks like; if we take this and put it on two axes, this is what a

5:51 16QAM signal looks like. The easiest way to scale

5:57 is to just increase the number of wavelengths, 2x the number of lambdas, and that is the

6:04 path at 800G: eight lambdas. The first implementation of 800G inside the data center follows that path.

6:10 The issue, usually, is power, size, and cost, and it's not really scalable. So what do you

6:16 do after eight? Even eight is a bit of a challenge. The next axis is to just increase

6:24 the baud rate by 2x, and the challenge there is analog bandwidth, but it looks like we have a

6:31 handle on analog bandwidth, because the next axis is even more

6:36 complicated: for a given bandwidth, can we just increase the

6:42 modulation format complexity? The problem is SNR. You don't really want to be dealing with

6:48 PAM16, which is what it takes, starting from PAM4, to get the equivalent of doubling the baud

6:53 rate, or 256QAM. So let's look at some

6:58 engineering trade-offs: 64QAM at 90 GBd, or PAM6

7:03 at 90 GBd. The consensus that's building is that 800G single lambda would most

7:10 likely be 120 GBd

7:16 16QAM, and about 112 GBd PAM4. We'll get into the

7:22 details in a moment. All right: technology choices in data center interconnects.

7:28 At 100G inside the data center: 802.3,

7:34 PSM4, and CWDM4; for 2 to 10 kilometers, LR4, also on CWDM

7:41 wavelengths. For 10 to 100 kilometers there were two options: PAM4, which Inphi deployed

7:47 together with Microsoft, and coherent. Between 100G and 600G, coherent DWDM

7:53 with a variety of coherent formats goes all the way up to 10,000 kilometers.

7:58 At 400G there was a real opportunity, which has been exploited, to collapse

8:06 a lot of these into a common format, although I wouldn't claim that you can go up to 10,000 kilometers in commercial deployments.

8:13 But ZR and ZR+ are making an impact. At really long distances

8:20 there are still custom-made deployments, but ZR and ZR+

8:26 would cover things all the way up to about a thousand kilometers. Two things happened: one is the

8:33 collapse of the coherent formats into one, and the other is that DSP-type processing moved

8:41 inside the data center. At 200G and 400G it is essentially PAM4: 400G is 100G per lambda

8:50 and 200G is 50G per lambda, in DR4 and FR4. And at

8:58 800G, looking to the future, we think it's going to be 200G per

9:05 lambda. We'll make a case for it: in terms of power consumption and in terms of complexity,

9:10 200G per lambda makes a whole lot more sense for 800G than 100G per lambda and eight

9:16 wavelengths. When you get to 200G per lambda, you are probably going to be

9:23 dispersion-limited even at 1310 nm, so it might be limited to

9:28 two kilometers, and you'll probably have coherent moving closer and closer to the data center. We don't think coherent is

9:35 going to move inside the data center anytime soon. And just

9:41 to get some discussion going, there is a possibility that we may actually have a CWDM

9:47 LR coherent with a distance-optimized, lower-power DSP.
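The 400G and 800G lane configurations discussed so far can be compared by raw line rate: lanes × baud × bits per symbol × polarizations. This sketch assumes the nominal values 53.125 GBd for the "53 GBd" PAM4 lanes and 59.84375 GBd for the "~60 GBd" 400ZR signal, and uses the approximate 112 GBd and 120 GBd figures from the talk. The raw rates include FEC and framing overhead, which is why they exceed the nominal payload. The `LaneConfig` class is illustrative, not from any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneConfig:
    name: str
    wavelengths: int        # optical lanes (lambdas)
    gbaud: float            # symbol rate per lane
    bits_per_symbol: int    # 2 for PAM4, 4 for 16QAM (per polarization)
    polarizations: int = 1  # 2 for dual-polarization coherent

    def raw_gbps(self) -> float:
        """Raw line rate, including FEC and framing overhead."""
        return self.wavelengths * self.gbaud * self.bits_per_symbol * self.polarizations


CONFIGS = [
    LaneConfig("400G 4-lambda PAM4", 4, 53.125, 2),
    LaneConfig("800G 8-lambda PAM4", 8, 53.125, 2),
    LaneConfig("800G 4-lambda PAM4", 4, 112.0, 2),
    LaneConfig("400ZR DP-16QAM", 1, 59.84375, 4, 2),
    LaneConfig("800G 1-lambda DP-16QAM", 1, 120.0, 4, 2),
]

for cfg in CONFIGS:
    print(f"{cfg.name:24s} {cfg.raw_gbps():7.2f} Gb/s")
```

Doubling any one factor (lambdas, baud, or bits per symbol) doubles the rate, which is the three-axis trade-off described above.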

9:55 So: 400G four-lambda, 800G eight-lambda, 800G four-lambda. There is a variety of

10:03 trade-offs here. Going from 50G PAM to 100G PAM, the number of host lanes remains the

10:10 same; that's important. Going from 100G to 200G, why do we think this transition would happen?

10:16 It's got to do with DSP area and DSP power. Although the

10:24 analog bandwidth requirements are going to go up, from a power consumption perspective

10:31 I think this transition will happen: we would go from 100G PAM

10:39 to 200G PAM on the line side, and we could potentially accommodate all of these

10:45 in a QSFP-DD or OSFP form factor. The question that always

10:51 comes up, we'll get to shortly. Another point: moving to 800G

10:56 four-lambda, you need 200G per lambda, and that is also what gets you to 1.6T

11:01 modules, which I showed you in the slide on radix.

11:07 Why wouldn't you do 800G coherent, one lambda, inside the data center? There are a number of issues here as to why

11:15 coherent may not, or will not, move inside the data center. At 800G, the power is still going

11:22 to be high for single-lambda coherent. Backward compatibility with PAM4 is very

11:29 important: the established infrastructure right now inside the data center is PAM4,

11:34 and backward compatibility will matter, as will synergy with copper applications.

11:41 There are some challenges for PAM4. I said reach extension beyond 10 kilometers; I would say reach extension

11:47 beyond two kilometers is a challenge, but a variety of other solutions can be

11:53 brought to bear on this. We think it's PAM inside the data center at 800G, and 16QAM

12:00 outside. All right, for those of you in the audience who do not deal with

12:06 pluggable modules, here is a primer.

12:12 The PAM4 100G module that we deployed for DCI between data centers is what we

12:17 call a QSFP28 module, and the 400G ZRs come in either a

12:24 QSFP-DD or an OSFP. Compared with QSFP28, they're slightly wider for OSFP and slightly longer for

12:32 QSFP-DD. In one RU, which is one and

12:39 three-quarter inches in height, in a 19-inch-wide rack, both of these formats will accommodate

12:44 32 modules, which fits neatly into a radix-32 data center architecture. So

12:50 they're slightly different in physical dimensions, but both of these formats will work.
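A quick check of the faceplate arithmetic, assuming 32 pluggable ports per 1RU as stated above: 32 × 400G matches a 12.8T switch at radix 32, and 32 × 800G matches a 25.6T switch. The constant and helper names are hypothetical.

```python
PORTS_PER_1RU = 32  # QSFP-DD or OSFP cages across a 19-inch, 1RU faceplate


def faceplate_tbps(module_gbps: int, ports: int = PORTS_PER_1RU) -> float:
    """Aggregate front-panel capacity in Tb/s."""
    return ports * module_gbps / 1000


for module in (400, 800):
    print(f"{module}G modules -> {faceplate_tbps(module)} Tb/s")
```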

12:59 We have been focused on silicon photonics; we have been developing silicon

13:05 photonics for the 400G ZR and 400G DR4 modules.

13:11 This is typical performance of the Mach-Zehnder modulators, with about 40 GHz of bandwidth,

13:18 and of the high-speed photodetectors. These first two are prerequisites for 400G inside the data center,

13:24 and outside the data center you really have to have polarization

13:29 maintaining components as well: polarization beam splitters and polarization combiners with low loss and high

13:35 extinction ratio. Before I pass on to my colleague, I'd like to show you some of the work that

13:41 we've done and deployed, something that Jim referred to earlier on. We announced, about a year

13:49 back, 400G ZR modules in a QSFP-DD form factor, which is a similar form

13:55 factor to DR4 and FR4 inside the data center, using an advanced 7 nm node for

14:01 the DSP and an advanced silicon photonics node from a CMOS foundry.

14:06 The input is 8 × 26 GBd PAM4 in the standard 802.3bs format, and the

14:13 output is one channel, one lambda, of roughly 60 GBd 16QAM, for a nominal 80 to

14:21 120 kilometer span reach. We have a DSP and a silicon photonics engine inside the module, and we've

14:29 shown this data earlier: with 50 krad/s polarization scrambling, there is less than half a dB of penalty, and the spec

14:36 is 26 dB for ZR. And this is a typical 400G switch that you can buy on

14:44 the market, with a fully loaded spectrum. You can

14:49 plug these modules directly into a router or a switch and convert it into a line

14:56 system, and that is the beauty of pluggable modules, either

15:02 for 400G ZR or 400G DR4/FR4.
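The host-side figure quoted for the 400ZR module, 8 × 26 GBd PAM4, can be reproduced from the 400GbE coding stack. This sketch assumes the IEEE 802.3bs chain of 256b/257b transcoding followed by RS(544,514) "KP4" FEC (which corrects up to 15 ten-bit symbols per codeword); the function names are illustrative.

```python
from fractions import Fraction

TRANSCODING = Fraction(257, 256)    # 256b/257b transcoding
KP4_OVERHEAD = Fraction(544, 514)   # RS(544,514) "KP4" FEC, t = 15 symbols


def host_lane_gbps(mac_gbps: int, lanes: int) -> Fraction:
    """Electrical lane rate after transcoding and KP4 FEC overhead."""
    return Fraction(mac_gbps) * TRANSCODING * KP4_OVERHEAD / lanes


def pam4_gbaud(lane_gbps: Fraction) -> Fraction:
    return lane_gbps / 2            # PAM4 carries 2 bits per symbol


lane = host_lane_gbps(400, 8)
print(float(lane), "Gb/s per lane,", float(pam4_gbaud(lane)), "GBd PAM4")
```

Exact fractions are used so that the 425 Gb/s aggregate (400 × 257/256 × 544/514) comes out without rounding error.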

15:08 The same trend would follow at 800G,

15:14 where the modules would be switch-pluggable. Of course there's a challenge

15:20 for cooling, but that would be the same trend that

15:25 would follow for 800G as well. I am going to pass on to Ilya

15:34 to look at the details of 200G per lambda: modulation, equalization, and FEC.

15:40 Ilya? Great, thank you, Radha. Great setup and background for the rest of

15:46 the presentation, where we'll focus on 200G-per-lambda PAM technology,

15:52 and specifically I'll try to give you a flavor of some of the important trade-offs in modulation

15:58 formats, equalization techniques, and forward error correction.

16:03 Radha, if we can go to the next slide.

16:12 Okay, great. I'd like to start by giving a little bit of background on

16:17 the existing 400G standards, because I think there are some interesting trends there that perhaps

16:23 could give us some insight into 800G. So here I've

16:28 organized the IEEE and MSA standards according to

16:33 generation and reach. At the top I have the 400G

16:38 standards based on eight lanes of 50G PAM4, and you can see that these standards

16:46 pretty much cover all the key data center applications: short-reach multimode at 100 meters

16:52 based on parallel multimode fiber; FR8, eight lanes of

16:58 single-mode LWDM going up to two kilometers; LR8 going out to 10 kilometers; and

17:05 even ER8 going out to 40 kilometers. The current generation of standards

17:11 is based on 100G per lane, so four lanes to give a 400G

17:16 rate, and as Radha pointed out, one of the important trends is to reduce

17:22 the number of optical lanes to reduce the optics cost and the power of the modules.

17:28 So that's the current generation, based on four lanes of 100G-per-lane PAM technology, and again we're

17:33 able to cover most of the key data center applications: short-reach multimode SR4, DR4 at 500 meters,

17:42 FR4 going out to two kilometers, and LR4. One interesting trend

17:49 here, though, is that we're starting to see some of the limitations of going to higher baud rates using

17:55 intensity modulation and direct detection, especially the impact of fiber

18:00 chromatic dispersion, which is starting to have an impact on reach. For example, the

18:06 IEEE decided to limit the LR4 reach from the traditional 10 kilometers to six

18:11 kilometers to mitigate some of the dispersion penalty. And even though we have an MSA

18:18 that has extended this reach to 10 kilometers, essentially leveraging industry advances in

18:24 DSP technology and equalization that can mitigate this dispersion penalty, we're still

18:30 starting to see the impact of fiber impairments on scaling the

18:36 baud rate, and I'll talk a lot more about that in the following slides in the context of 200G per lambda.
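The dispersion trend described here can be made concrete with the standard dispersion length L_D = T²/|β₂|, where T = 1/baud and β₂ = -Dλ²/(2πc). L_D falls as 1/baud², consistent with the quadratic scaling of the dispersion penalty that the talk returns to. This is a scaling sketch, not a reach model: the value D = -5 ps/(nm·km) near 1271 nm is an assumption for standard single-mode fiber at an outer O-band lane, and IM/DD effects such as power fading are not modeled.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def beta2(d_ps_nm_km: float, wavelength_nm: float) -> float:
    """Group-velocity dispersion beta2 in s^2/m from the dispersion parameter D."""
    d = d_ps_nm_km * 1e-6          # ps/(nm*km) -> s/m^2
    lam = wavelength_nm * 1e-9     # nm -> m
    return -d * lam**2 / (2 * math.pi * C)


def dispersion_length_km(gbaud: float, d_ps_nm_km: float, wavelength_nm: float) -> float:
    """L_D = T^2 / |beta2| with T = 1/baud; shrinks as 1/baud^2."""
    t = 1.0 / (gbaud * 1e9)
    return t**2 / abs(beta2(d_ps_nm_km, wavelength_nm)) / 1e3


D_ASSUMED = -5.0  # ps/(nm*km) near 1271 nm (assumption, not from the talk)
for baud in (26.5625, 53.125, 106.25):
    print(baud, "GBd ->", round(dispersion_length_km(baud, D_ASSUMED, 1271.0), 1), "km")
```

Doubling the baud rate cuts L_D by a factor of four, which is why 200G-per-lambda PAM4 feels dispersion far more than 100G per lambda on the same fiber.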

18:42 Now, the IEEE recently started a new project, Beyond 400 Gb/s Ethernet,

18:50 and although this project is just starting off and we're about to start working on the objectives,

18:55 I can speculate here that most likely we will have an 800G objective, and very

19:01 likely we will look at PAM technology at 200G per lane,

19:07 going out to one to two kilometers, FR4-type distances. And

19:13 I believe we will need a stronger FEC to deal with going to higher

19:19 baud rates, and most likely we'll need to leverage DSP technology to

19:25 mitigate some of the impairments that we're going to have to deal with when we scale to higher baud

19:31 rates. So in the next slide I'd like to go over the key

19:36 impairments in an optical 200G-per-lambda system.

19:43 Here I show a little cartoon describing the key components in

19:49 a PAM4 optical interconnect. The key components are the DAC at the transmitter,

19:55 the driver, and the laser modulator; we of course have the fiber link; and at the receiver, the photodiode, the

20:02 TIA, and the AFE and ADC. Some of the key impairments that we have to consider

20:10 are the transmitter analog bandwidth limitations and electrical reflections. As an example, I borrowed a

20:17 picture from an 800G Pluggable MSA white paper, shown below here,

20:22 which shows two designs for a 200G-per-lambda transmitter

20:28 based on EML technology. You can see in the frequency response that the

20:35 analog bandwidth is around 50 GHz, and there are lots of ripples in the frequency response,

20:40 perhaps due to reflections in these interconnects and components. So that's something that's very important to consider in

20:47 our designs, and I'll focus more on that in the following slides.

20:52 In the optics we have to carefully consider the laser RIN. It's a

20:58 level-dependent noise, which has a very important impact on higher-order modulation, and

21:03 in particular it would impact PAM6 more than PAM4. I'll show you some of the ramifications of this in the

21:10 following slides. For fiber propagation, fiber chromatic dispersion, as I mentioned,

21:15 is a key impairment, and we're already seeing that at 100G per lane. The fiber chromatic dispersion penalty

21:22 actually scales quadratically with baud rate, so we expect this to be even more of a limiter for 200G per

21:28 lambda, and I'll have a separate slide where I focus on the chromatic dispersion impact. There are other

21:36 optical propagation effects like PMD. At the receiver, the key component is the TIA,

21:44 and we have to carefully consider its specifications: TIA noise, linearity, and analog bandwidth.

21:50 I'll provide a slide which gives an analysis of TIA performance as well.

21:58 Okay, so I talked about the impairments. What are the tools that we have in our toolbox to deal

22:05 with these impairments? Here I show a simplified receiver DSP architecture

22:12 and try to highlight some of the key components and tools that we have. First of all, in the analog part, the

22:17 AFE, the most important component is the ADC; this is really the component that enables the DSP

22:24 architecture. Today we have ADC technology that can go beyond

22:29 100 gigasamples per second, with analog bandwidth of 50 GHz or better.

22:35 In the DSP domain we have some very powerful tools, starting with linear equalization based

22:42 on the FFE. Today we can implement

22:47 FFEs with 10 to 30 taps or more, and the FFE is extremely useful for

22:53 mitigating analog bandwidth limitations as well as reflections and all kinds of

22:58 imperfections in the frequency response. We can implement a one-tap DFE, which, as

23:04 I'll show, is very useful for mitigating the bandwidth limitations, and

23:10 it also helps a little with chromatic dispersion. And when we're really stressed by a

23:16 very bad channel, the most powerful equalization is based on what's called maximum likelihood sequence detection (MLSD).
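To illustrate the linear equalizer just listed, here is a minimal sketch of a symbol-spaced FFE adapted with the LMS algorithm. It assumes a known training sequence, a hypothetical 3-tap post-cursor channel (1, 0.5, 0.2), and additive Gaussian noise; the 15-tap length sits in the 10 to 30 tap range mentioned. This is a textbook LMS equalizer, not Inphi's DSP implementation.

```python
import numpy as np

LEVELS = np.array([-3.0, -1.0, 1.0, 3.0])  # PAM4 symbol levels


def slice_pam4(y: np.ndarray) -> np.ndarray:
    """Nearest-level hard decision for each sample."""
    idx = np.argmin(np.abs(y[:, None] - LEVELS[None, :]), axis=1)
    return LEVELS[idx]


def train_ffe_lms(rx, ref, num_taps=15, delay=7, mu=2e-3):
    """Symbol-spaced FFE adapted with LMS against known reference symbols.

    Output sample n is an estimate of ref[n - delay]."""
    w = np.zeros(num_taps)
    w[delay] = 1.0                                   # start as a pure delay
    padded = np.concatenate([np.zeros(num_taps - 1), rx])
    out = np.zeros(len(rx))
    for n in range(len(rx)):
        x = padded[n:n + num_taps][::-1]             # x[k] = rx[n - k]
        out[n] = w @ x
        if n >= delay:
            err = ref[n - delay] - out[n]
            w += mu * err * x                        # LMS tap update
    return w, out


rng = np.random.default_rng(0)
symbols = rng.choice(LEVELS, size=20_000)
channel = np.array([1.0, 0.5, 0.2])                  # hypothetical post-cursor ISI
rx = np.convolve(symbols, channel)[: len(symbols)]
rx = rx + rng.normal(0.0, 0.1, len(symbols))

delay = 7
taps, eq = train_ffe_lms(rx, symbols, delay=delay)
half = len(symbols) // 2                             # score the converged second half
ser_raw = np.mean(slice_pam4(rx[half:]) != symbols[half:])
ser_ffe = np.mean(slice_pam4(eq[half:]) != symbols[half - delay:len(symbols) - delay])
print(f"SER without FFE: {ser_raw:.3f}, with FFE: {ser_ffe:.5f}")
```

The step size is kept well below the usual LMS stability bound (roughly 2 divided by the total input power across the taps), trading convergence speed for low excess noise.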

23:23 We've implemented a simplified, low-power MLSD, and I'll show you some

23:30 results on MLSD as well. And finally, a very important tool is forward error correction.

23:38 In the existing standards, KP4 FEC is the workhorse

23:45 that we use for both 50G and 100G per lane PAM technology, and it's really important for enabling

23:53 the reaches and also low-cost optics. As we scale to higher baud rates,

24:00 I believe we're going to need stronger FEC, but at the same time we have to keep that FEC low power, low

24:05 complexity, and low latency. One of the advantages of the DSP architecture

24:11 that I show here is that we have available in the FEC module not only the hard decisions but also the soft

24:16 information, the reliability information, and we can exploit that information to

24:22 design stronger FECs while keeping the complexity fairly low. So what I'd like to do in the

24:28 following slides is discuss FEC a little, and then I'll move on to the equalization and modulation

24:34 trade-offs. Here I show an FEC architecture based on

24:39 so-called concatenated codes. This is actually the architecture that we standardized in the 400ZR

24:46 standard. It's a very flexible and powerful architecture because it allows you to start with

24:52 an existing FEC. In this case we can start with the KP4 FEC that already exists in the switch host

24:58 ASIC, and we can build on it by adding a simpler code inside the DSP of the optical

25:05 module. We can also, as I mentioned previously, exploit the soft information

25:11 in the DSP of the optical module, which is very close to the channel. By combining these two FECs

25:17 we can get substantial improvements in coding gain while keeping the FEC fairly simple

25:23 in the optical module, to keep the power low and the latency fairly low.
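The simplest inner code in a concatenated scheme like this is a single parity check, and its soft-decision decoder is short enough to show. This sketch uses Wagner decoding (hard-decide every bit, and if parity fails, flip the least reliable one), the classic maximum-likelihood decoder for a single parity check code on an AWGN channel. The LLR sign convention and the hand-made received values are assumptions for illustration; this is not the 400ZR inner code.

```python
import numpy as np


def spc_encode(bits) -> np.ndarray:
    """Append one even-parity bit to a block of data bits."""
    bits = np.asarray(bits, dtype=np.int64)
    return np.append(bits, bits.sum() % 2)


def spc_soft_decode(llr) -> np.ndarray:
    """Wagner (soft-decision) decoding of a single parity check code.

    Convention (assumed): LLR > 0 favours bit 0. Hard-decide every bit; if the
    parity check fails, flip the least reliable bit (smallest |LLR|)."""
    llr = np.asarray(llr, dtype=float)
    hard = (llr < 0).astype(np.int64)
    if hard.sum() % 2 == 1:
        hard[np.argmin(np.abs(llr))] ^= 1
    return hard[:-1]                                 # drop the parity bit


data = [1, 0, 1, 1, 0, 0, 1]
codeword = spc_encode(data)                          # even parity -> 8 bits
llr = 2.0 * (1 - 2 * codeword)                       # bit 0 -> +2, bit 1 -> -2
llr[2] = 0.3                                         # a weak, wrong-sign sample on bit 2
hard_only = (llr[:-1] < 0).astype(int).tolist()      # hard decisions without the code
decoded = spc_soft_decode(llr).tolist()
print("hard decisions: ", hard_only)
print("SPC soft decode:", decoded)
```

A hard-decision decoder could only detect this error; the soft information tells the decoder which bit to flip, which is the advantage of decoding the inner code inside the module DSP.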

25:28 So this is the architecture we've been working on, and on the next slide I'd like to show

25:34 you some simulation results on the performance of these concatenated codes.

25:40 These curves show several designs with KP4 FEC as the outer code and

25:47 different levels of complexity for the inner code, starting with

25:52 the purple curve, which is just KP4 FEC by itself. Then in the yellow curve we're adding

25:57 the simplest FEC you can think of, just a single parity check code, inside

26:03 the optical module DSP, and with soft-decision decoding we already get about one

26:08 to one and a half dB of additional coding gain. Then in the red curve we're using a more sophisticated

26:14 Hamming code, an extended Hamming code, and we get an additional 1 dB of improvement, and we can keep going, using

26:20 more sophisticated inner codes, like the BCH two-error-correcting code in the

26:25 blue curve. What we find is that as we use more and more sophisticated and

26:31 complicated inner codes, we get diminishing returns in terms of the trade-off between complexity, power,

26:38 and coding gain. We found that a good trade-off is this red curve, which uses a Hamming code for the inner

26:46 code, and interestingly this is also the design we used for the 400ZR FEC

26:52 standard. With this code we have an overall coding gain of about 9.4 dB

26:58 while keeping the complexity and the latency fairly low. So I'm going to assume in the following

27:03 slides that we'll have one of these stronger FECs available to us. Next I'd like to focus more on the

27:08 modulation and equalization trade-offs. Here I show

27:14 some system simulation results on the bit error rate as a function of the analog component

27:20 bandwidth. The analog component bandwidth is a very important parameter we have to consider,

27:26 and here I'm sweeping the bandwidth of all the components: the driver, the DAC, the modulator, the TIA, and the

27:34 ADC. As we sweep the analog component bandwidth, we're simulating the bit error rate

27:40 for both a PAM4 system at 113 GBd and a PAM6 system at 90 GBd.
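The two operating points simulated here carry essentially the same bit rate, which this sketch checks, along with the textbook eye-height penalty of M-level PAM relative to NRZ at equal peak-to-peak swing, 20·log10(M-1) in electrical SNR. Assumptions: PAM6 carries 2.5 bit/symbol (for example, 5 bits mapped onto a pair of symbols), and the PAM16 row at half the PAM4 baud rate is a hypothetical added for comparison with the earlier remark that you don't want to be dealing with PAM16. Level-dependent noise such as RIN, which the talk notes hurts PAM6 more, is not modeled.

```python
import math


def bit_rate_gbps(gbaud: float, bits_per_symbol: float) -> float:
    return gbaud * bits_per_symbol


def eye_penalty_db(levels: int) -> float:
    """Electrical SNR penalty of M-level PAM relative to NRZ at equal
    peak-to-peak swing: each of the M-1 eyes is 1/(M-1) as tall."""
    return 20 * math.log10(levels - 1)


# PAM6 is assumed to carry 2.5 bit/symbol (e.g. 5 bits on 2 symbols), not log2(6).
CASES = {"PAM4": (113.0, 2.0, 4), "PAM6": (90.0, 2.5, 6), "PAM16": (56.5, 4.0, 16)}
for fmt, (baud, bps, m) in CASES.items():
    print(f"{fmt}: {bit_rate_gbps(baud, bps):.1f} Gb/s at {baud} GBd, "
          f"eye penalty {eye_penalty_db(m):.1f} dB vs NRZ")
```

PAM6 trades about 4.4 dB of eye penalty for a 20% lower baud rate, which is why it only wins when analog bandwidth is very scarce.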

27:47 The horizontal dashed curve is the FEC limit for one of the more advanced FECs that I

27:53 just discussed, and you can see some interesting trends here. For example, with FFE only

28:00 (the blue curve versus the black curve), we see that there's a

28:06 crossover point: when you go beyond 45 GHz,

28:12 PAM4 gives better performance, while for really low analog bandwidth

28:18 PAM6 gives better performance due to its lower baud rate. When we add the DFE, which

28:24 is shown in the red curve and the green curve, that crossover point shifts to lower bandwidths, and we

28:30 see that PAM4 performs better at 40 GHz and beyond. And when

28:36 we add MLSD equalization, shown in the pink dashed curve, PAM4 shows very good

28:44 performance: much more robust to analog component bandwidth limitations, with a good bit error rate. So based

28:50 on these results, we feel that PAM4 is a good choice of modulation format

28:56 for future 200G interconnects. This slide just confirms the

29:02 analysis that I just showed; here we're focusing more on the TIA and looking at

29:07 the impact of TIA noise and TIA bandwidth, sweeping out that parameter space and comparing PAM4 to PAM6. And

29:14 you can see here that red is bad: it's below the FEC threshold. We want to be in the green and blue region,

29:20 and this slide shows that PAM4 has a much wider good region of operation, which

29:25 shows it's more robust and a good choice for modulation. So if you go to the next slide, Radha, here I

29:33 just want to finish the technical discussion by focusing on chromatic dispersion. As I mentioned, that's the key

29:39 optical impairment as we go to 200G per lambda. Here I'm focusing

29:44 on PAM4 technology and looking at the impact of equalization on performance, and I'm plotting the

29:51 simulated optical power penalty as a function of chromatic dispersion. What we can see here is that

29:57 with just linear equalization, the red curve, we really don't have enough equalization

30:03 power to deal with a two-kilometer FR4 spec. We need to go to at least an FFE-DFE

30:09 architecture, which should work: the dispersion penalty would be 1 dB or lower. With MLSD we really

30:17 are doing very well; the dispersion penalty is below half a dB, and we could potentially even go to

30:22 longer distances, beyond two kilometers. I'm running out of time, so I'll just go to the conclusion slide.
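The DFE and MLSD equalizers compared in these results can be sketched on a toy channel. This example assumes a hypothetical PAM4 channel y[n] = s[n] + α·s[n-1] + noise with α = 0.6, known at the receiver. It contrasts a 1-tap DFE with a full-state Viterbi MLSD (four states, one per previous symbol); the talk's "simplified low-power MLSD" is not described in detail, so this textbook version is only a stand-in.

```python
import numpy as np

LEVELS = np.array([-3.0, -1.0, 1.0, 3.0])  # PAM4 levels


def slice_one(v: float) -> float:
    return float(LEVELS[np.argmin(np.abs(v - LEVELS))])


def dfe_one_tap(rx, alpha):
    """1-tap DFE: subtract alpha times the previous decision, then slice."""
    out = np.empty(len(rx))
    prev = 0.0                               # channel starts from an idle (zero) symbol
    for n, y in enumerate(rx):
        out[n] = slice_one(y - alpha * prev)
        prev = out[n]
    return out


def mlsd_viterbi(rx, alpha):
    """Full-state Viterbi MLSD for y[n] = s[n] + alpha*s[n-1] + noise.
    State = index of the previous symbol (4 states for PAM4)."""
    m = len(LEVELS)
    expected = LEVELS[None, :] + alpha * LEVELS[:, None]   # [prev, cur]
    cost = (rx[0] - LEVELS) ** 2                           # s[-1] = 0
    back = np.zeros((len(rx), m), dtype=int)
    for n in range(1, len(rx)):
        total = cost[:, None] + (rx[n] - expected) ** 2
        back[n] = np.argmin(total, axis=0)                 # best predecessor per state
        cost = total[back[n], np.arange(m)]
    path = np.empty(len(rx), dtype=int)
    path[-1] = int(np.argmin(cost))
    for n in range(len(rx) - 1, 0, -1):                    # trace back survivors
        path[n - 1] = back[n, path[n]]
    return LEVELS[path]


alpha = 0.6                                   # hypothetical post-cursor
rng = np.random.default_rng(1)
symbols = rng.choice(LEVELS, size=5_000)
clean = np.convolve(symbols, [1.0, alpha])[: len(symbols)]
noisy = clean + rng.normal(0.0, 0.45, len(symbols))

for name, detect in (("1-tap DFE", dfe_one_tap), ("MLSD", mlsd_viterbi)):
    errors = int(np.sum(detect(noisy, alpha) != symbols))
    print(f"{name}: {errors} symbol errors out of {len(symbols)}")
```

The DFE decides one symbol at a time and can propagate its own errors; the Viterbi search keeps a survivor path per state and decides on the whole sequence, at the cost of more computation per symbol.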

30:29 So in conclusion, next-generation 800G data center optical interconnects should be designed considering the unique application

30:36 requirements of data center operators. The choice of modulation format, DSP

30:41 architecture, and FEC requires careful analysis of the engineering trade-offs, analog component technology, and optical

30:47 channel specs. Our optical system simulations show that PAM4

30:52 is a good choice for 200G per lambda: it's a feasible technology for 800G FR4 and DR4,

30:58 and synergistic with low-latency AI, breakout, and copper. Coherent technology, on the

31:05 other hand, may be most useful for longer-reach applications such as 40 kilometers, and maybe even 10 kilometers,

31:12 where coherent's dispersion tolerance and the lower fiber loss at 1550 nm are the key

31:17 advantages. Radha, do you want to add anything? No, this is great, Ilya. We'll yield the floor to Mark

31:24 and then take questions in another session. Okay, thank you. So, thanks for

31:32 inviting me to speak here today, and Radha and Ilya, thanks for the interesting presentation.

31:40 I wanted to come around, with all the talk about the race to

31:46 800G and next-gen solutions being just on the horizon, and

31:54 hedge that with a reality check for, I think, at least where we are at

32:01 Microsoft. It may not be indicative of plans for the other hyperscalers

32:07 and end users of these devices, but it at least gives one counterpoint

32:14 as to where we see things going in our data

32:20 center ecosystem. And so,

32:25 like with anything, whenever we start talking about something more than six

32:33 months out in a public forum, it's probably a good idea to caveat it with a disclaimer like the one

32:40 shown here. It's meant to be a little more tongue-in-cheek than specific

32:46 legal language, but all that is to say that, at least at Microsoft, plans can change

32:53 quickly, but at the same time change is slow to happen; it's a big shift. So

33:00 take what I say here, when I'm projecting forward, with a grain of salt.

33:06 First, I want to start out with the question: is there demand for 800G?

33:13 I think that's the premise of the webinar, and Radha and Ilya would have you believe that there is. The answer is pretty

33:20 straightforward, at least as far as I can tell: yes, there is demand. I participate in

33:26 the OIF, and I'm on the board there, and back in the first part of last year we issued

33:33 a survey to network operators, over 25 of them or so,

33:38 and had them answer questions about beyond 400G. Particularly in scope there

33:45 was beyond 400ZR, the 400G coherent applications, but the answers to

33:51 the questions shed some light on what would be happening inside the data center as well.

33:58 One of the relevant questions, which I have in the top right, is: when are you expecting to need a standard coherent

34:03 line-side interface with capacity larger than 400G per lambda? If you check out the answers,

34:11 over 70 percent of the respondents came back with 2023 or before,

34:18 and another 25 percent or so said sometime after

34:24 2025. But the big takeaway there is that people are expecting

34:33 something higher than 400G, and they're expecting it pretty soon; that's two years away. The next question: what router port

34:40 speed do you plan to deploy after 400G? The largest group here,

34:45 almost 50 percent, said 800G. Some others said

34:50 1T or 1.6T. I think it's pretty unclear what

34:55 the next MAC rate would look like beyond 800G, perhaps. And then some

35:02 answered "other"; I'm not really sure what they had in mind. One of the interesting things that came back

35:08 in this survey is how much power people expect these modules to consume, and as you

35:15 can see there, the power figures people are giving are about where 400ZR is today. As we know from

35:22 history and from early engineering projections of what people are looking at for

35:28 what might be an 800ZR application, that's going to be quite

35:34 a tall order. So this tells me that people are already pretty power-constrained

35:40 and they want to have their cake and eat it too, but we'll just have to see how these things play out.

35:46 So all of that is backdrop for what I'm about to show you, which is the very Microsoft-centric view, I

35:54 would say. I think it's helpful first, and I apologize if you've seen this

36:01 in other forms before; we've been showing the next few slides in a few different

36:06 forums like OFC and ECOC for the last couple of years, but I always feel it's helpful to level

36:12 set with the audience on how we build things today, since that better informs our use cases

36:18 and requirements for the future. So, looking at what we do at 100G

36:24 and 400G: how we build our data centers today.

36:29 My slides will advance... there we go. We start with the notion

36:36 of a rack, which is made up of a bunch of servers, tens of servers,

36:42 and those servers are connected into the network by a

36:48 top-of-rack (ToR) switch. The connections are made with DAC cables, direct-attached copper,

36:56 that are about two meters in length, and in our 100G ecosystem they're running off of 50G interfaces

37:02 from the servers. There's typically some oversubscription at the ToR device, which is usually a single-switch-ASIC

37:09 device; it has some oversubscription

37:14 as it goes to the next tier of switches, and those are connected in a Clos

37:21 fabric across multiple redundant switches with AOC cables,

37:30 typically about 30 meters or less in length. The AOCs run at 100G and dissipate

37:37 around 2 to 2.5 watts per end.

37:42 Tens of these racks are chained together across these tier

37:49 one switching devices, and that makes up what we call a row, or a pod set. This is

37:55 one of the main building blocks that you'd see in our physical

38:00 data centers. The other thing here is that the tier one and the ToR devices are typically

38:08 both single-switch devices, the 1RU pizza-box type of commodity switch you

38:15 might have seen on the market. Going to how we then build our data centers,

38:23 connect our data centers together... sorry, I'm jumping ahead of myself. How we

38:30 connect the pod sets together within a single data center facility:

38:35 we have what we call our tier two routers, which

38:42 are connected to our tier one devices across the different pod sets with parallel

38:49 single-mode fiber. That fiber is actually a permanent part of the infrastructure; it's amortized over the

38:54 lifetime of the data center to mitigate the cost, but also because we

38:59 know we will use these fibers across generations. Those links are about a kilometer in length,

39:07 maximum. And the tier twos, you'll note, are a different color

39:13 here; that's to denote that they're multi-chip modular switches that

39:19 have fabric cards and separate line cards for northbound- and

39:25 southbound-connected ports. The optics that connect into those

39:31 devices on either end are typically PSM4, although in some

39:37 legacy data centers we might use CWDM4 to cover a longer distance where we don't have

39:42 that parallel infrastructure. My note about these being modular switches is significant, because

39:49 it allows us to mix and match clusters of different generations, a 100G cluster or a 40G cluster,

39:55 and we do the "impedance matching," the term we like to use, between those different generations using the

40:02 line cards in those tier two devices. Everything you see here is what

40:07 makes up what we call a data center. Now, the way we connect our

40:12 data centers together within a metropolitan area is

40:18 maybe a little unique today, but I think people will see more of this type of

40:23 approach going forward, with 400ZR coming onto the market. What we have is

40:29 two hubs within a region, which we identify as RNGs, regional

40:36 network gateways, and the data centers are connected,

40:42 again, in a Clos fabric across the metropolitan area

40:47 over DWDM line systems using PAM4 technology, which Radha referred to

40:52 earlier in his deck.

40:58 The distances that we're talking about here are typically

41:04 latency-constrained. We say 100 kilometers on average; it's actually

41:09 a bit shorter than that, but it's to ensure that the round-trip time from any

41:15 server in any one data center to another data center is bounded by a certain amount, on the

41:21 order of microseconds, so that at the application layer, to the user,

41:27 if you had storage served out of one data center and

41:32 compute out of another for the same application, it should feel seamless, as if it's coming out of one mega

41:38 data center facility. That's where this distance comes from. Again, the PAM4 modules are

41:46 directly plugged into the routers here and dissipate about what

41:52 gray optics of that generation do, around 4.5 watts. The capacity that we would have

41:59 is a single-digit percentage of the total server capacity for a given region. For our largest regions

42:06 today, this is petabits per second of cross-sectional bandwidth going across the metro. So, just summarizing what this

42:15 looks like for the 100G ecosystem: at 100G, down at the bottom, it's the DACs

42:21 connecting the servers to the ToRs at two meters; from the ToRs to the tier ones, it's 100G

42:27 AOCs at 30 meters; tier one to tier two, 100G PSM4s

42:32 at a kilometer; and then 100G DWDM PAM4 connecting

42:39 the data centers to the regional hubs, and the regional hubs to each other. We egress the regional hubs

42:47 going toward the capital-I Internet and toward our private backbone to connect

42:53 to other data centers across the world, using more traditional

42:59 long-haul types of solutions there. So this is what it looks like at 100G.
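
Because these metro spans are set by a round-trip-time bound rather than by optical reach, it helps to see how distance converts to fiber delay. A minimal sketch, assuming a fiber group index of about 1.468 (a typical value for standard single-mode fiber, not a figure from the talk) and ignoring switching, FEC, and queuing delays:

```python
# Fiber-only propagation delay for metro data center interconnect links.
# Assumption: group index ~1.468 for standard single-mode fiber; switch,
# FEC, serialization, and queuing delays are deliberately ignored.

C_VACUUM_M_PER_S = 299_792_458.0
GROUP_INDEX = 1.468  # assumed, typical for SMF


def one_way_delay_us(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """One-way propagation delay in microseconds over distance_km of fiber."""
    speed_m_per_s = C_VACUUM_M_PER_S / group_index
    return distance_km * 1_000.0 / speed_m_per_s * 1e6


def round_trip_us(distance_km: float, group_index: float = GROUP_INDEX) -> float:
    """Fiber-only round-trip time in microseconds."""
    return 2.0 * one_way_delay_us(distance_km, group_index)


if __name__ == "__main__":
    for km in (10, 50, 100):
        print(f"{km:>4} km: one-way {one_way_delay_us(km):6.1f} us, "
              f"round trip {round_trip_us(km):6.1f} us")
```

Under these assumptions each kilometer adds roughly 4.9 µs in each direction, so a 100 km span spends close to 1,000 µs of round-trip time in the fiber alone; any RTT bound therefore translates directly into a distance cap.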

43:04 Going to 400G, you have to watch closely here... boom, 400G.

43:12 If you'll notice, basically nothing changed topologically. The only thing that

43:17 changed was that we scaled up the bandwidth of all of these links interconnecting

43:24 the switching tiers by a factor of four. This is noteworthy because

43:33 of the point I'll get to on my next slide. The elephant in the room here,

43:40 something that hasn't been a major consideration up to this point in time, and by this point in time I mean the 400G

43:46 ecosystem, is power. Network equipment power consumption

43:53 is already problematic for the 400G ecosystem, and the charts at the right are

43:59 highlighting this phenomenon. Switches in the 400G time frame are projected to be around 3x the power

44:06 of the 100G switches, and the optics are coming in around 3 to 4x

44:12 the 100G optics, and this challenges the total power envelopes available in the data center facilities.
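
The ratios above can be turned into a quick energy-per-bit check. The 4x bandwidth step and the ~3x (switches) and 3-4x (optics) power multipliers come from the talk; normalizing the 100G generation to 1.0 is a simplifying assumption of this sketch.

```python
# Generation-over-generation power scaling, normalized to the 100G ecosystem.
# Inputs are the ratios quoted in the talk; everything else is arithmetic.

BANDWIDTH_FACTOR = 4.0  # every link scaled by 4x going from 100G to 400G


def step(power_factor: float, bandwidth_factor: float = BANDWIDTH_FACTOR) -> tuple[float, float]:
    """Return (absolute power multiplier, energy-per-bit multiplier)."""
    energy_per_bit_factor = power_factor / bandwidth_factor
    return power_factor, energy_per_bit_factor


if __name__ == "__main__":
    for label, power_factor in [("switches", 3.0), ("optics, low", 3.0), ("optics, high", 4.0)]:
        total, per_bit = step(power_factor)
        print(f"{label:<13} total power x{total:.1f}, energy per bit x{per_bit:.2f}")
```

Energy per bit improves by at most 25% (and not at all for optics at 4x), while absolute power triples or quadruples; against a fixed facility power envelope, that is the squeeze described here.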

44:18 The plots on the right: the one on the bottom we generated based on some internal

44:23 data, showing, over the generations of server link bandwidth, or, you

44:28 can think of it, really the MAC rates, that the

44:34 relative power of the networking devices versus the servers is ever encroaching. Once you

44:42 scale to 800G, it's up around 20% of the total

44:48 data center power being consumed just by moving bits, not by the revenue-generating servers.

44:55 Likewise, the plot above it is Facebook's data, without calling out

45:01 the specific generations, but showing the same phenomenon: as you go to

45:06 the "next generation," the network power is a big slice of that pie, and that's bad

45:13 news when your service is cloud, right?

45:18 As I mentioned, it's using power that could be generating revenue; it's

45:23 capacity that is lost to the servers. And

45:30 it costs money, obviously; it's just burning power on work that could otherwise be done more efficiently. And it's not

45:36 green; it doesn't support our own internal sustainability initiatives

45:42 to build things this way. So, looking at it like that,

45:47 it makes a transition to anything beyond 400G, doing things the same way we

45:55 are now up here, all but impossible, or at least very inefficient. So, looking at possible solutions:

46:05 how do we really start to chip away at this problem? One is photonic innovation, and a big

46:12 piece of that, we believe at Microsoft, is co-packaged optics (CPO).

46:18 Co-packaged optics, if you're not aware, is just the notion of packaging together

46:25 the switch silicon, or really any type of system-on-chip, with photonic engines that are

46:33 primarily based on silicon photonics, on a common substrate. This is talked about a

46:39 lot in the industry, but by moving the optical engines closer to the

46:45 ASIC itself, you can reduce the trace length and the SerDes power and really

46:51 drive power efficiencies. What we're seeing, compared to state-of-the-art pluggable

46:57 technologies, when you look at link efficiency: pluggables average a power efficiency of about 25

47:02 picojoules per bit, and going on-board,

47:08 with a COBO-like approach, improves on that by moving the optics closer and eliminating retimers. But really

47:14 you see some great efficiency gains by co-packaging: almost a 30 to

47:21 50% reduction in the power that's dissipated by the optics,

47:26 and, with an eye toward second-gen co-packaged optics, where some additional optimizations could perhaps be made,

47:33 really driving that down into the sub-5 picojoule-per-bit range. So we see that as a big area.
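
A convenient identity for these figures: 1 pJ/bit at 1 Tb/s is exactly 1 W. The sketch below applies the quoted energy-per-bit numbers (about 25 pJ/bit for pluggables, a 30 to 50% reduction with CPO, and a sub-5 pJ/bit second-gen target) to a hypothetical 51.2 Tb/s switch whose full capacity runs through optics; the 51.2 Tb/s example is our assumption, chosen because 51.2T switches come up later in the talk.

```python
# Optics power for a switch at a given energy-per-bit figure.
# Assumption: all of the (hypothetical) switch capacity is carried optically.

PICO = 1e-12


def optics_power_w(capacity_tbps: float, pj_per_bit: float) -> float:
    """Power in watts = (bits per second) * (joules per bit)."""
    bits_per_second = capacity_tbps * 1e12
    return bits_per_second * pj_per_bit * PICO


if __name__ == "__main__":
    capacity = 51.2   # Tb/s, hypothetical switch
    pluggable = 25.0  # pJ/bit, pluggable average quoted in the talk
    cases = {
        "pluggable": pluggable,
        "CPO, 30% lower": pluggable * (1 - 0.30),
        "CPO, 50% lower": pluggable * (1 - 0.50),
        "2nd-gen CPO target": 5.0,
    }
    for name, pj in cases.items():
        print(f"{name:<20} {pj:5.1f} pJ/bit -> {optics_power_w(capacity, pj):7.1f} W")
```

At 51.2 Tb/s this works out to about 1.28 kW of optics with pluggables, roughly 640 to 900 W with first-gen CPO, and about 256 W at 5 pJ/bit.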

47:41 But we also have to attack it in other ways: for example, collapsing tiers, as Radha

47:49 touched on earlier, by using multi-homed NICs on our servers, fanning out

47:55 horizontally and bypassing ToRs; I'll have a little more detail on that later.

48:00 Having simplified forwarding requirements on our switching ASICs: less FIB and less buffer equals

48:08 less work and less power. Additional integration, things like

48:13 integrating the encryption directly on the switching ASIC. And lastly, some types of alternate

48:18 cooling. I showed liquid cooling, specifically immersion cooling, there, but we're actually studying several

48:25 different approaches to that at Microsoft. The big takeaway is that we can't

48:30 just keep scaling link bandwidths like what I showed going from 100G to 400G.

48:36 That's the last time we can do it like that and continue building things

48:41 the same way. Next-gen systems are going to require all of the above.

48:46 Additionally, I wanted to provide this retrospective. If you look at the rollout of what

48:53 I'll call our 40G ecosystem and our 100G ecosystem, and then project forward into

48:58 400G, and you overlay those with GA dates for, I'll just say, optical modules

49:06 at 100G, 200G, 400G, and 800G, you can see that we tend to move pretty

49:12 slowly. We're not at the bleeding edge, deploying things right when they become available,

49:17 and once we deploy an ecosystem, we sit in that ecosystem for a good

49:23 several years before we start ramping it down and ramping up a new technology.

49:29 Just looking at the turnover and churn in our networks, we skipped

49:34 any type of 200G solution entirely. We're just starting to look at

49:41 rolling out and deploying our first 400G ports in the early part of 2021, the first half,

49:47 but won't really be deploying 400G in earnest until 2022, and we expect that to take some

49:53 time to reach full maturity in our own internal data center ecosystems.

49:58 So by the time an 800G solution is ready, as others have called out, we won't be ready at Microsoft. So

50:06 again, it's just helpful historical context to frame

50:13 how real the need for 800G is, at least for us, especially in light of the

50:20 power challenges we mentioned before. So I want to revisit Radha's slide here, which

50:27 I guess he stole from Facebook, but then he added this table down here, and I'll

50:32 just say that he's spot on with the radix argument, but I would

50:39 argue he didn't quite take it far enough. When we project out to 51.2T

50:45 switches, if you look at the radix there and consider that the lane speed

50:50 of those is natively 100G, that gives you a radix of 512 on

50:57 that switch, and that allows you to build a network that serves over 100,000 servers in a

51:04 data center by leveraging the 100G building

51:12 blocks. 100G as the native electrical lane speed really gives you the simplest network that you can build:

51:20 one MAC equals one electrical lane equals one optical lane. The idea here is that you

51:28 fan out these 100G interfaces horizontally so that you're able to build

51:36 a highly power-efficient and very flat network, which is what Radha was getting at. And

51:43 100,000 servers is nothing to shake a stick at; that's effectively a 100-megawatt data center.

51:49 That's just massive capacity. And so I want to show here

51:55 just how that looks, at least for this first tier of the network. Again, this is revisiting what we do today with the

52:01 DAC cables, going to the tier one devices using AOCs, with a ToR in between.

52:07 What we envision doing, when we're enabled by

52:14 advances in photonics, including CPO, and

52:19 by 400G availability for the servers, is to have here, at the server, a 400G

52:27 DR4 interface, and that would fan out across these tier one switches,

52:35 providing the horizontal scaling that I was referring

52:40 to, and in that way allow you to completely

52:45 eliminate the ToR at the top of rack and just have some type of patch panel or shuffle panel as a demarc

52:51 at the top of rack. Now, when you go and compare

52:57 the efficiencies of the old way of doing things, on the left, with what we're

53:04 calling ToR bypass, with 100G lanes, you can see

53:09 there's a host of advantages. One is the failure domain: a ToR is a single point of

53:15 failure for an entire rack. Again, if you're serving content, maybe that's not a big deal, but if

53:20 you're providing compute as a service, that could be huge, right?

53:28 Whereas if you're multi-homing your NIC, you have four-way resiliency, or I would say

53:34 N-way resiliency, depending on the number of interfaces or planes on your server.

53:42 Looking at the switch ASIC count, it's 4 to 8x fewer in this case, compared to the

53:48 number of switch ASICs it would take to build this network here. Space and power:

53:54 again, reduced space, obviously, saving the space of the ToR, and then a third the power, primarily

54:01 by reducing the number of switch ASICs, but also by running more efficient

54:06 co-packaged optics on the tier one devices. In terms of switch radix,

54:14 especially when you get to these high-radix switches that are coming up in the future,

54:19 you can't fully leverage the higher radix; you leave stranded ports on your ToRs here, because again we're

54:26 just a few tens of servers per rack, but some of these

54:31 switch chips are 64 or 128 radix. Whereas here, by fanning out

54:39 again and leveraging the full 100G lanes on these switches, you can use the full radix of

54:47 all the switch chips in the tier ones. Again, I mentioned before

54:54 that we typically run some oversubscription going from the ToR to the tier one, whereas in this scenario it's

55:02 fully non-blocking, or I guess non-oversubscribed. And then lastly,

55:08 the reach limitations of DAC cables and AOCs are looming, whereas a common

55:14 optical solution like a DR4-based interface would give you

55:19 up to 2 kilometers or more of reach, so that limitation goes away.

55:25 Apologies if I went a little bit over time, but it's a bit of a nuanced

55:31 discussion, so I wanted to try and be sure I covered it well.

55:36 In summary, power is the main limiter for

55:41 beyond-400G data centers, as we see it. We can't continue to simply scale the

55:49 link bandwidths while building the networks exactly as we do today. Additionally, the historical

55:56 ecosystem life cycles would indicate that we won't be ready for 800G when the industry is,

56:04 and really a 32 × 100G CPO will suit our needs better. 100G electrical lanes will be a

56:12 foundational building block for power-efficient data center designs for the foreseeable future,

56:18 per the idea I was showing with the ToR bypass. And lastly, future data center

56:25 networks are going to require a combination of photonic innovation, which we lump CPO

56:30 into, optimized network architectures, and advanced hardware implementations.

56:37 And I think that's it, so thanks for your time.

56:42 All right, thank you, Mark. We really appreciate that, especially

56:47 the going-green imperative; I think many people across the industry

56:53 are really working hard on that problem. I also wanted to say that these

57:01 slides will be available to everyone; we'll send them out in an email to all the registered

57:06 attendees, probably within a couple of days. At this point, we're going to take questions for about 15 minutes. We have a

57:13 lot of great questions that have come in, and we'll try to answer as many of them as we can get to.

57:19 If we don't get to them, we'll follow up on those as well. So, first, Radha:

57:26 the question of power in DSPs; that's the first one. "We're hearing some considerations for PAM5 or

57:33 PAM6. Are there inefficiencies expected in DSPs when

57:38 PAM signaling goes up to PAM8 or PAM16?"

57:45 This is a two-part answer; I'll get Ilya to chime in as well.

57:51 When you go to a higher-complexity modulation format,

57:58 you're working against two things. One is that your SNR requirement goes up exponentially:

58:03 good old Shannon. And secondly, your linearity requirements go up

58:11 just as badly. So yes, if you go to a higher-order

58:19 modulation format, let's call it for what it is, you suffer in SNR, the game you're

58:24 trying to beat, and you consume a lot more power in the TIA and driver trying to linearize them. That's

58:32 partly one of the reasons why we think PAM4 is

58:39 a good compromise. Ilya? Yeah, I think you summarized it really nicely.

58:45 Just to make it more quantitative: when you go to PAM6 versus

58:50 PAM4, the eye opening for your PAM6 is decreased by a factor of five

58:57 thirds, so that's already an SNR penalty of about 4 dB or so.

59:04 Also, higher-order modulation is more sensitive to many impairments, like, for example, RIN, which I mentioned earlier.
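
The 5/3 factor follows from holding the peak-to-peak swing fixed: PAM-M stacks M-1 eyes in that swing, so each eye is 1/(M-1) of it. A sketch of the resulting penalty, assuming it is expressed as 20·log10 of the eye-height (amplitude) ratio, as a peak-limited link would see it:

```python
import math


def eye_height(levels: int) -> float:
    """Relative eye height of PAM-`levels` for a fixed peak-to-peak swing."""
    return 1.0 / (levels - 1)


def eye_penalty_db(levels: int, reference_levels: int = 4) -> float:
    """SNR penalty (dB) of PAM-`levels` relative to the reference format."""
    ratio = eye_height(reference_levels) / eye_height(levels)
    return 20.0 * math.log10(ratio)


if __name__ == "__main__":
    for m in (5, 6, 8, 16):
        print(f"PAM{m} vs PAM4: eye x{eye_height(m) / eye_height(4):.3f}, "
              f"penalty {eye_penalty_db(m):.2f} dB")
```

PAM6 comes out at about 4.4 dB, in line with the "about 4 dB" above; by the same measure PAM8 and PAM16 lose about 7.4 dB and 14.0 dB, before counting RIN or linearity effects.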

59:10 RIN is a very important impairment in lasers, and it impacts higher-order

59:17 modulation more because it's a level-dependent noise. So PAM6 suffers more from RIN, and you

59:23 actually saw that in my plots, where PAM6 exhibited an error

59:28 floor at higher analog bandwidths. And other impairments: ISI;

59:34 it's more sensitive to ISI. So there are a lot of advantages if you can get a high enough

59:39 analog bandwidth for PAM4; there's definitely a big advantage there in robustness,

59:45 and for implementation purposes, since PAM6 brings more complexity. Many advantages, and also backward

59:51 compatibility, of course. All right, great. Another question for

59:57 Radha, on the symbol rate: "Is it still capped around 25 gigabaud, owing to

1:00:02 limitations on direct modulation of lasers?" Okay, this is an interesting question. For

1:00:08 DMLs, it is probably capped at about 25 gigabaud, although there are

1:00:16 announcements from several vendors of DMLs operating at the 53-gigabaud

1:00:25 rate. But largely, at 53 gigabaud, or 100G per lane,

1:00:30 DR4 and FR4 implementations have mostly been EML

1:00:38 and silicon photonics. So yes, there seems to be a natural stop at DMLs. I wouldn't count

1:00:45 them out, but there seems to be. Okay. Ilya, what kind of specs are

1:00:50 required for the ADC to enable the DSP for the PAM4 receiver?

1:00:56 Yeah, that's a great question. The ADC is a key component of the system.

1:01:04 I went over the requirements on analog bandwidth, and I think those are valid for the ADC:

1:01:09 you need an analog bandwidth of better than 45 GHz for PAM4,

1:01:15 ideally better than 50 GHz. And of course there are many other specs,

1:01:20 like specs on ENOB and various

1:01:27 distortions, and that would probably require a separate presentation and discussion on its own.
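
To relate these analog-bandwidth numbers to the signal, recall that symbol rate is line rate divided by bits per symbol, and the Nyquist frequency is half the symbol rate. The sketch assumes a hypothetical 226.7 Gb/s line rate for a 200G-class lane and 2.5 bits per symbol for PAM6 (5 bits carried on a pair of symbols; the raw ceiling is log2 6 ≈ 2.585), which reproduces the 90.7 GBd PAM6 figure raised later in the Q&A; actual lane rates depend on FEC and framing.

```python
# Symbol rate and Nyquist frequency for a given lane rate and modulation.
# Assumption: 226.7 Gb/s line rate (hypothetical); PAM6 mapped at 2.5 bits/symbol.


def symbol_rate_gbd(line_rate_gbps: float, bits_per_symbol: float) -> float:
    """Symbol rate (GBd) = line rate (Gb/s) / bits per symbol."""
    return line_rate_gbps / bits_per_symbol


def nyquist_ghz(symbol_rate: float) -> float:
    """Nyquist frequency (GHz) is half the symbol rate (GBd)."""
    return symbol_rate / 2.0


if __name__ == "__main__":
    line_rate = 226.7
    for name, bits in (("PAM4", 2.0), ("PAM6", 2.5)):
        baud = symbol_rate_gbd(line_rate, bits)
        print(f"{name}: {baud:6.2f} GBd, Nyquist {nyquist_ghz(baud):5.2f} GHz")
```

Under these assumptions PAM4 has a Nyquist frequency of about 57 GHz, so a 45 to 50 GHz front end sits below it and the DSP's equalizers must absorb the resulting ISI, while PAM6's Nyquist frequency of about 45 GHz is already close to that bandwidth.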

1:01:33 But I think the key spec is analog bandwidth; I would say around 50 GHz. Okay, could you also talk a little bit

1:01:40 about latency? "Is a latency of 300 nanoseconds too large for 800G in a 2 km

1:01:48 application?" And I guess the latency of the FEC in general here. Yeah,

1:01:55 that's another very good question; it has a lot of implications and trade-offs. When

1:02:00 designing FECs, there's kind of a fundamental trade-off between

1:02:06 coding gain, FEC overhead, and FEC latency. It's very

1:02:12 difficult to have a very large coding gain, very low overhead, and very low latency;

1:02:18 you have to make some trade-offs. To get the kind of coding gain we need,

1:02:25 we try to optimize that parameter

1:02:31 space as much as we can: keeping the overhead at around 6%, not more than 6%, and keeping

1:02:37 the latency less than 300 nanoseconds. 300 nanoseconds, I think, is a

1:02:43 reasonable number. If you think about a two-kilometer link, just

1:02:48 the propagation delay of that link is microseconds, so I think that FEC latency is still much less

1:02:55 than the propagation delay. But it really depends a lot on the application. If we're talking about an AI application, where

1:03:02 the links are very short, maybe within the AI cluster, then you might

1:03:08 need even lower latency, and there are ways to design these FECs, like the concatenated FECs I talked about, to have even lower

1:03:14 latency. So, in general, do you think these FECs are going to continue to evolve, and that we're going to see them

1:03:20 in the next generation and the generation after that? I think so, yeah. I think FEC is a

1:03:27 critical technology to enable higher speeds,

1:03:32 to enable lower-cost optics, and maybe even lower power; I think

1:03:38 it's a consideration there as well. So I think it's going to

1:03:43 evolve. We're going to have a discussion on FEC for sure in the IEEE when we talk about 800G.
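
The latency comparison made above can be checked with a few lines of arithmetic. The sketch compares a 300 ns FEC latency budget with fiber propagation over 2 km, and shows what a 6% overhead does to the line rate; the ~1.468 fiber group index and the 200 Gb/s payload are illustrative assumptions, and overhead is taken as redundancy relative to payload.

```python
# FEC latency vs. fiber propagation, and line rate for a given FEC overhead.
# Assumptions: group index ~1.468; overhead defined as (line - payload) / payload.

C_VACUUM_M_PER_S = 299_792_458.0
GROUP_INDEX = 1.468


def propagation_ns(distance_m: float, group_index: float = GROUP_INDEX) -> float:
    """One-way fiber propagation delay in nanoseconds."""
    return distance_m / (C_VACUUM_M_PER_S / group_index) * 1e9


def line_rate_gbps(payload_gbps: float, overhead: float) -> float:
    """Line rate needed to carry the payload plus FEC redundancy."""
    return payload_gbps * (1.0 + overhead)


if __name__ == "__main__":
    fec_latency_ns = 300.0
    link_ns = propagation_ns(2_000.0)
    print(f"2 km propagation: {link_ns:,.0f} ns")
    print(f"FEC latency is {fec_latency_ns / link_ns:.1%} of that")
    print(f"200G payload at 6% overhead -> {line_rate_gbps(200.0, 0.06):.1f} Gb/s on the line")
```

That is roughly 9.8 µs of propagation against 0.3 µs of decoding, about 3%, which is why 300 ns is reasonable at 2 km; on very short AI-cluster links the fiber delay shrinks toward the FEC budget and the trade-off shifts.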

1:03:49 So yeah, it's going to continue to evolve, and it's a very interesting design space,

1:03:54 somewhat different from long haul, where we're just trying to push the FEC to the Shannon limit.

1:04:00 In the data center, there are interesting trade-offs with latency and power, so

1:04:06 it's an exciting field, actually, for FEC designers. Okay,

1:04:12 a lot of these questions, I think, have to do with engineering trade-offs, and this next one is

1:04:18 looking at PAM6: "Is 90.7 gigabaud compatible with 75

1:04:23 GHz DWDM spacing?" Well, in data center WDM, the channel

1:04:30 spacing is actually much wider than in the DWDM

1:04:36 systems that are used for long haul and metro. Inside data centers we typically use what's called CWDM,

1:04:43 where the channel spacing is, I think, around four nanometers, so it's really not an issue

1:04:50 for the spectral bandwidth of PAM6.

1:04:57 So I think it's not an issue for the type of channel spacings we use inside the data center.
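
Converting wavelength spacing to frequency makes the point concrete: near a center wavelength λ, a spacing Δλ corresponds to Δf ≈ c·Δλ/λ². The sketch assumes an O-band center of 1310 nm and compares the ~4 nm spacing quoted above, and the 20 nm ITU-T G.694.2 CWDM grid, against a 75 GHz DWDM slot and a 90.7 GBd signal; treating the signal's optical width as roughly its symbol rate is a rule-of-thumb assumption.

```python
# Convert WDM channel spacing from nanometers to gigahertz.
# Assumptions: 1310 nm center wavelength; first-order approximation df = c * dl / l^2.

C_VACUUM_M_PER_S = 299_792_458.0


def spacing_ghz(spacing_nm: float, center_nm: float = 1310.0) -> float:
    """Approximate channel spacing in GHz for a spacing given in nm."""
    spacing_m = spacing_nm * 1e-9
    center_m = center_nm * 1e-9
    return C_VACUUM_M_PER_S * spacing_m / center_m**2 / 1e9


if __name__ == "__main__":
    signal_width_ghz = 90.7  # rough optical width of a 90.7 GBd signal (assumption)
    print("75 GHz DWDM slot fits the signal:", 75.0 >= signal_width_ghz)
    for nm in (4.0, 20.0):
        ghz = spacing_ghz(nm)
        print(f"{nm:4.1f} nm at 1310 nm ~ {ghz:6.0f} GHz, fits: {ghz >= signal_width_ghz}")
```

At roughly 700 GHz per 4 nm, the data center grid leaves several times the signal width, whereas a 75 GHz DWDM slot would be narrower than the signal itself.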

1:05:04 Okay, another one for you, Ilya, and that's on MLSD: "How many taps

1:05:12 are you assuming in your MLSD, and can you comment on the complexity and power?"

1:05:19 I don't want to talk too much about the implementation of the MLSD, except to say that we are designing

1:05:26 what I would call innovative MLSD architectures for very low power and

1:05:33 low complexity, and it's possible to do that. There are a lot of trade-offs; for example, PAM6 versus

1:05:40 PAM4: PAM6 would require a more complicated MLSD, in the so-called

1:05:46 trellis architecture, and there are a lot of implications.
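
The MLSD design itself was not disclosed, but the generic scaling is easy to show. A Viterbi-style MLSD for PAM-M over a channel with L symbols of memory has M^L trellis states and M^(L+1) branch metrics per symbol. The sketch counts those for illustrative memory lengths (our assumption) and normalizes by bits per symbol (2 for PAM4, and an assumed 2.5 for a PAM6 mapping).

```python
# Generic Viterbi trellis size for PAM-M with L symbols of channel memory.
# Illustrative only; memory lengths are assumptions, not a disclosed design.


def trellis_size(levels: int, memory: int) -> tuple[int, int]:
    """Return (states, branch metrics per symbol) = (M**L, M**(L+1))."""
    states = levels**memory
    branches = levels ** (memory + 1)
    return states, branches


if __name__ == "__main__":
    bits_per_symbol = {4: 2.0, 6: 2.5}
    for memory in (1, 2):
        for levels, bits in bits_per_symbol.items():
            states, branches = trellis_size(levels, memory)
            print(f"PAM{levels}, L={memory}: {states:3d} states, "
                  f"{branches:4d} branches/symbol, {branches / bits:6.1f} branches/bit")
```

With two symbols of memory, PAM6 needs 216 branch metrics per symbol against 64 for PAM4, about 2.7 times more work per bit even after crediting PAM6's extra bits per symbol.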

1:05:51 So I probably don't want to get too deep into it, but just to say that the MLSDs that we design

1:05:57 are low-power MLSDs for data center applications. Okay: "Does the additional DFE

1:06:04 tap provide improvements because of equalization without noise amplification, or are there other reasons?" Yeah, so

1:06:12 to think about the DFE, first consider just the FFE by itself. The FFE by itself

1:06:19 can in principle invert the channel and completely correct for the bandwidth limitation, but by

1:06:24 inverting the channel, the FFE will also enhance noise, and that's where the FFE runs out of

1:06:30 juice when you have a very severe bandwidth limitation. The DFE helps by canceling the

1:06:37 post-cursor ISI without noise enhancement, so it makes the job of the FFE easier: less noise enhancement and hence

1:06:44 better tolerance to bandwidth limitations, and we saw that in the simulations that I showed.
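
A toy model illustrates the FFE/DFE distinction. For a channel with a single post-cursor tap h1 (|h1| < 1), a zero-forcing FFE that inverts 1 + h1·z⁻¹ has taps (-h1)^k, so white-noise power is multiplied by Σ h1^(2k) = 1/(1 - h1²). A DFE instead subtracts h1 times its previous decision, removing the ISI without filtering the noise, provided its decisions are correct. The one-tap channel, PAM4 levels, and noise-free check are simplifying assumptions, not the simulation setup from the talk.

```python
import random

PAM4_LEVELS = (-3, -1, 1, 3)


def slicer(x: float) -> int:
    """Nearest PAM4 level."""
    return min(PAM4_LEVELS, key=lambda level: abs(x - level))


def isi_channel(symbols: list[int], h1: float) -> list[float]:
    """y[n] = x[n] + h1 * x[n-1]: one post-cursor tap, no noise."""
    out, prev = [], 0.0
    for s in symbols:
        out.append(s + h1 * prev)
        prev = s
    return out


def dfe(received: list[float], h1: float) -> list[int]:
    """One-tap DFE: subtract the post-cursor ISI of the previous decision."""
    decisions, prev = [], 0.0
    for r in received:
        d = slicer(r - h1 * prev)
        decisions.append(d)
        prev = d
    return decisions


def zf_ffe_noise_gain(h1: float) -> float:
    """Noise power gain of the ideal inverse filter 1 / (1 + h1 z^-1)."""
    return 1.0 / (1.0 - h1 * h1)


if __name__ == "__main__":
    rng = random.Random(0)
    tx = [rng.choice(PAM4_LEVELS) for _ in range(1_000)]
    rx = isi_channel(tx, h1=0.6)
    print("DFE recovers all symbols:", dfe(rx, h1=0.6) == tx)
    print(f"ZF-FFE noise enhancement at h1=0.6: {zf_ffe_noise_gain(0.6):.4f}x")
```

With h1 = 0.6 the inverse FFE would raise the noise power by about 1.56x (roughly 1.9 dB), while the DFE cancels the same ISI with no noise gain. In practice a DFE only handles post-cursor ISI and can propagate decision errors, so it complements the FFE rather than replacing it.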

1:06:50 When we added the DFE, both PAM6 and PAM4, but especially PAM4 because of its higher baud rate,

1:06:56 benefited a lot in terms of robustness to bandwidth limitations. Okay, one more clarification, sort

1:07:04 of along that line: "Is there any particular reason why you didn't investigate PAM6 with FFE

1:07:10 and MLSD?" I think I touched on that already.

1:07:17 There are several reasons. First of all, PAM6, because of its lower baud rate, doesn't really benefit much

1:07:22 from MLSD, as long as the analog bandwidth is, say, greater than 40 or 45 GHz;

1:07:29 you don't get much benefit for PAM6. But on the other hand, the complexity of the MLSD scales up very rapidly

1:07:37 for PAM6. So these are the reasons why it's not really useful, I think,

1:07:43 for PAM6. Okay, a question for Mark: "Considering power and thermal performance,

1:07:51 would there be some case to adopt CPO at 800G somewhere in your topology, at least to

1:07:58 test it out?" Yeah, I guess

1:08:05 CPO chiplets based on 800G denominations is kind of what's

1:08:10 in scope there. Like I mentioned, an 800G MAC itself may not be a good fit

1:08:16 for what we're looking to do anytime soon, but as a test vehicle, or

1:08:22 for proof of concept and prototyping, we could see

1:08:29 leveraging some of the 800G photonic developments in a CPO

1:08:34 application, just to get some hands-on time with the technologies. Okay, and how about COBO

1:08:42 in general? "Could you be testing that as an opportunity for reducing power and cost?" Yeah,

1:08:50 I was just typing a response to that one. As of now, there are some

1:08:57 tented programs inside Microsoft that are evaluating COBO for some of

1:09:04 those applications, but for our mainstream networking

1:09:10 deployments there is not currently a plan to roll out COBO. We will be

1:09:17 focused on transitioning to co-packaged optics in the 2023-2024

1:09:24 time frame. There was also a question on the timelines, so that answers that one. Okay, you had another question about

1:09:31 how two servers in the same rack can communicate with each other in the case of a ToR bypass scheme.

1:09:39 Yeah, they just go through the tier one switch now. So

1:09:44 there's not a ToR anymore, but the switch, the tier one, would be able to talk to

1:09:50 all the servers in the same row, in the same rack.

1:09:56 Okay, let's see. I guess there's still kind

1:10:01 of a wider question here that I'm going to give back to Radha and Ilya, and

1:10:06 that's to see if you, together or individually, want to give an answer to Mark

1:10:14 to basically address those power

1:10:20 concerns. Okay. As Mark said, power is the

1:10:27 elephant in the room; there is no denying it. If you go to higher and higher baud

1:10:33 rates, we are simply consuming more power,

1:10:39 and Mark has very elegantly pointed out why co-packaging, essentially

1:10:46 reducing the SerDes reach between the switch and the package, would reduce

1:10:54 it, and in this case he is also advocating for a lower data rate. On our side,

1:11:00 we have a multi-pronged approach. I'll let Ilya comment as well, and Ilya has mentioned a number of times

1:11:07 low-power FEC and low-power MLSD. So the first approach is just

1:11:13 architecture. There are several architectures, unfortunately a lot of which are

1:11:20 proprietary, that lead to lower, more optimal power consumption. Secondly,

1:11:28 there's this never-ending march, hopefully it never ends, toward

1:11:34 smaller CMOS nodes: our current generation of CMOS ASICs is 7 nanometer, going to 5,

1:11:43 and going to 5 it's not so much about density; the DSP areas

1:11:49 don't scale so much, but the power goes down. I'd have to go back and take a look at

1:11:56 how much power reduction we're getting between 7 and 5, but we are designing

1:12:02 chips at the 5 nanometer node. So those will be

1:12:09 the two approaches that we are taking to bring power down. And then Mark focused on power dissipation, which is the

1:12:16 flip side of power consumption: if we didn't consume so much, you wouldn't have to dissipate or cool as much. Ilya?

1:12:26 Yes, I agree with you, Radha. For us in the DSP and architecture team at Inphi, low power is really kind of a

1:12:34 passion. With every generation of technology, some of the key innovations that we work

1:12:39 on are lowering the power of the DSP architecture and the FEC. I

1:12:44 think that is actually one of the big differences between, say, long haul and data center:

1:12:50 in data center DSP, we really focus a lot on low-power architectures,

1:12:57 and there's a lot that we've done that we cannot publish because it's proprietary implementations,

1:13:03 but there are things you can read about in the literature, various approaches to low-power

1:13:09 architectures, that you can think about. Now, the other thing is that with

1:13:17 each generation we can also reduce power by going to a smaller

1:13:24 CMOS node. That also helps, especially for DSP-heavy architectures;

1:13:29 they scale well to lower power as we go to a smaller node, so that

1:13:35 should help as well. Okay, all right, well, fantastic, and with that we're just about out of time here, so we're not

1:13:42 going to be able to take any more of these great questions that have come in, but we will do our best to answer them offline.
