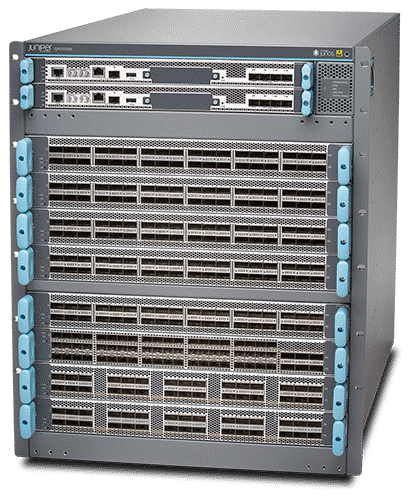

QFX10008 and QFX10016 Switches

QFX10008 and QFX10016 switches offer a highly scalable network foundation to support demanding data center, campus, and routing environments. Using our custom silicon Q5 ASICs, the switches include deep buffers and 1GbE/10GbE/40GbE/100GbE/200GbE interface options. With up to 96 Tbps throughput and an extensive set of line cards that support rich Layer 2 and Layer 3 services, they provide long-term investment protection.

Architectural flexibility means you’ll never be locked into a proprietary solution. The switches operate in diverse network environments, including spine-and-leaf fabrics and EVPN-VXLAN overlay architectures.

Manage your QFX10008 and QFX10016 with turnkey Juniper Apstra intent-based networking software, which automates the entire network lifecycle to simplify design, deployment, and operations and provides closed-loop assurance.

Key Features

Use Case: Data Center Fabric Spine

Chassis Options:

- QFX10008: 13 U chassis, up to 8 line cards

- QFX10016: 21 U chassis, up to 16 line cards

Port Density:

- QFX10000-30C-M: 30 x 100/40GbE, MACsec-enabled

- QFX10000-30C: 30 x 100/40GbE

- QFX10000-36Q: 36 x 40GbE or 12 x 100GbE

- QFX10000-60S-6Q: 60 x 1/10GbE with 6 x 40GbE or 2 x 100GbE

- QFX10K-12C-DWDM: 6 x 200Gbps

Throughput: Up to 96 Tbps (bidirectional)

Deep Buffers: 100 ms per port

Precision Time Protocol (PTP)

Features + Benefits

High Throughput

The switches deliver up to 96 Tbps bidirectional wire-speed switching with low latency and jitter, along with full Layer 2 and Layer 3 performance.

Architectural Flexibility

Support for IP fabrics and EVPN-VXLAN overlays for Layer 2 and Layer 3 networks delivers the flexibility you need to quickly deploy and support new services.

MACsec Protection

MACsec capability at speeds up to 100GbE provides a high-performance method of encrypting traffic in the data center.

Deep Buffers

100 ms per-port buffers absorb network traffic spikes to ensure performance at scale. The switches support up to 12GB of total packet buffer.
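To put the 100 ms figure in perspective, the sketch below converts a buffering time into the amount of data that arrives at line rate during that window. It is plain rate-times-time arithmetic; the function name `buffer_bytes` is hypothetical, and nothing here describes how the switch actually allocates its packet memory.

```python
def buffer_bytes(line_rate_gbps: int, duration_ms: int) -> float:
    """Bytes that arrive at line rate over the given duration.

    Gbps * 1e9 bits/s * (ms / 1e3) s simplifies to Gbps * ms * 1e6 bits.
    """
    bits = line_rate_gbps * duration_ms * 1_000_000
    return bits / 8


# Illustrative port speeds taken from the interface options listed above.
for rate in (10, 40, 100):
    megabytes = buffer_bytes(rate, 100) / 1e6
    print(f"{rate}GbE for 100 ms -> {megabytes:.0f} MB")
```

Under these assumptions, 100 ms of traffic on a single 100GbE port corresponds to 1.25 GB of data.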

Fabric Management

Intent-based Juniper Apstra software provides full Day 0 through Day 2+ capabilities for IP/EVPN fabrics with closed-loop assurance in the data center.

CUSTOMER SUCCESS

Canadian Startups Push the Limits in CENGN’s Cloud

Accelerating innovation is key to a strong economy and national competitiveness, and in Canada, that mission falls to CENGN, the Centre of Excellence in Next Generation Networks. CENGN provides small and medium-sized businesses with cloud-based infrastructure to validate their technology and accelerate the commercialization of their products.

QFX10008 and QFX10016

QFX10008 and QFX10016 switches support the most demanding data center, campus, and routing environments. With our custom silicon Q5 ASICs, deep buffers, and up to 96 Tbps throughput, these switches deliver flexibility and capacity for long-term investment protection.

Technical Features

Use Case: Data Center Fabric Spine

Chassis Options:

Port Density:

Throughput: Up to 96 Tbps (bidirectional)

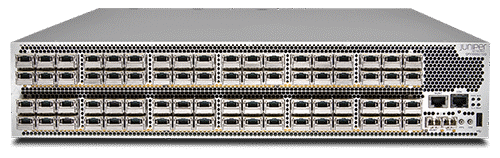

QFX10002

QFX10002 fixed-configuration switches are very dense, highly scalable platforms designed for today’s most demanding data center, campus, and routing environments. They’re loaded with power and functionality, all housed in a compact, 2 U form factor.

Technical Features

Use Case: Data Center Fabric Leaf/Spine

Port Density:

Throughput: Up to 2.88/5.76/12 Tbps (bidirectional)

Deep Buffers: 100 ms per port

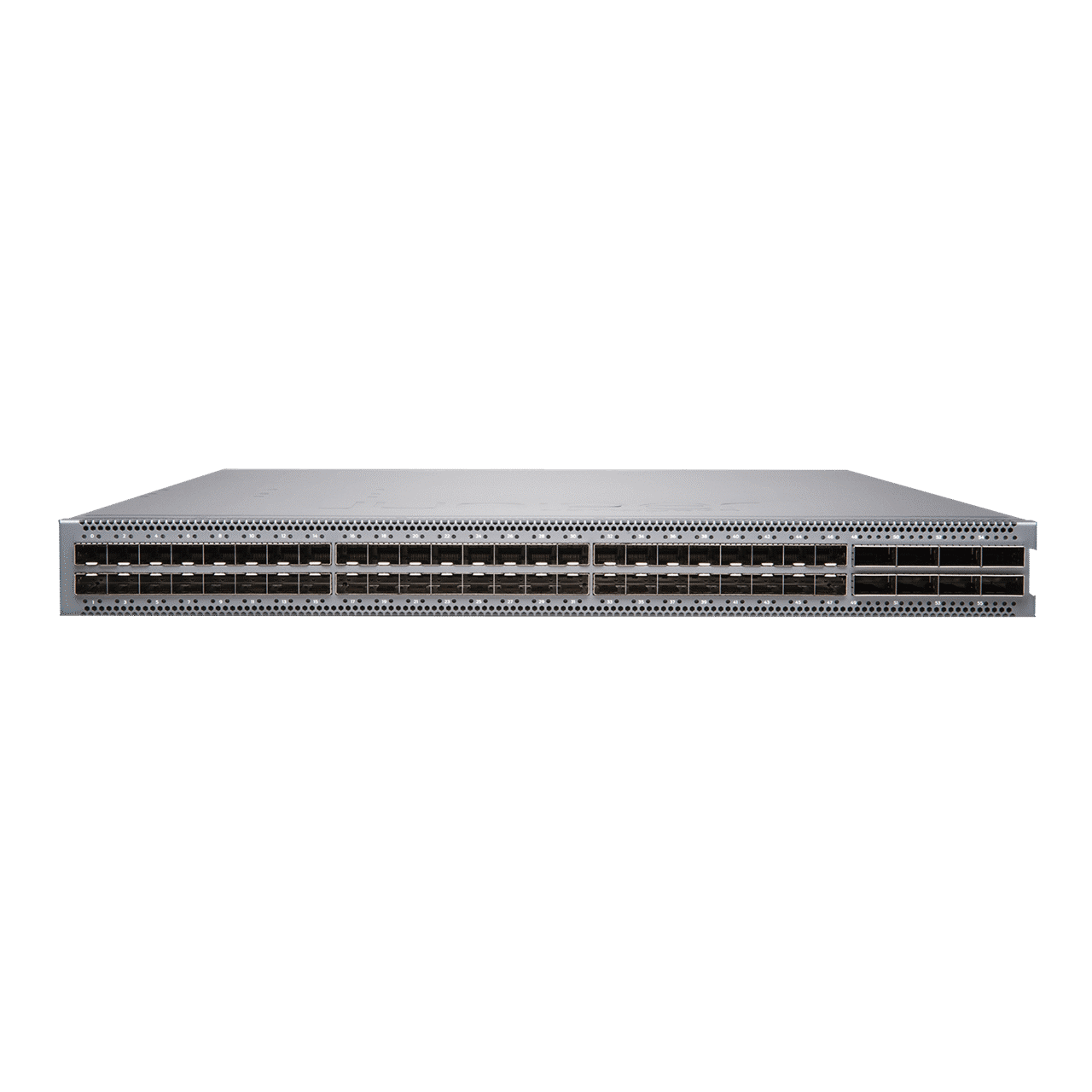

QFX5120

The QFX5120 line offers 1/10/25/40/100GbE switches designed for data center, data center edge, data center interconnect and campus deployments with requirements for low-latency Layer 2/Layer 3 features and advanced EVPN-VXLAN capabilities.

Technical Features

Use Case: Data Center Fabric Leaf/Spine, Campus Distribution/Core, applications requiring MACsec

Port Density:

Throughput: Up to 2.16/4/6.4 Tbps (bidirectional)

MACsec: AES-256 encryption on all ports (QFX5120-48YM)

Find the QFX10008 and QFX10016 in these solutions

Data center networks

Simplify operations and ensure reliability with the modern, automated data center. Juniper helps you automate and continuously validate the entire network life cycle to ease design, deployment, and operations.

QFX10000 Series FAQs

What are the port densities, speeds, and configuration options of the QFX10008 and QFX10016 switches?

As members of the Juniper QFX10000 line of switches, the QFX10008 and QFX10016 modular chassis can accommodate any combination of the following QFX10000 line cards:

- QFX10000-36Q: 36-port 40GbE QSFP+ or 12-port 100GbE QSFP28

- QFX10000-30C: 30-port 100GbE QSFP28/40GbE QSFP+

- QFX10000-60S-6Q: 60-port 1GbE/10GbE SFP/SFP+ with 6-port 40GbE QSFP+ / 2-port 100GbE QSFP28

- QFX10000-30C-M: 30-port 100GbE QSFP28/40GbE QSFP+ with MACsec

- QFX10K-12C-DWDM: 6-port 200GbE Coherent DWDM with MACsec

What are the main benefits of the QFX10008 and QFX10016 switches?

The QFX10008 and QFX10016 high-performance modular core and spine switches deliver industry-leading scalability, density, and flexibility, helping cloud and data center operators build automated data center networks that provide superior long-term investment protection.

What is the throughput of the QFX10008 and QFX10016?

The QFX10008 and QFX10016 can deliver up to 48 Tbps and 96 Tbps of bidirectional throughput, respectively.
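These figures are consistent with a chassis fully populated with 30-port 100GbE line cards, counting traffic in both directions. The sketch below reproduces that arithmetic using the slot counts and the QFX10000-30C port density listed above. Treating the headline number as derived this way is an assumption made for illustration, and the names `SLOTS` and `bidirectional_tbps` are hypothetical.

```python
PORTS_PER_CARD = 30      # QFX10000-30C: 30 x 100GbE
PORT_SPEED_GBPS = 100
SLOTS = {"QFX10008": 8, "QFX10016": 16}  # line card slots per chassis


def bidirectional_tbps(slots: int) -> float:
    """Aggregate throughput of a fully populated chassis, both directions."""
    ports = slots * PORTS_PER_CARD
    one_way_gbps = ports * PORT_SPEED_GBPS
    return 2 * one_way_gbps / 1000


for model, slots in SLOTS.items():
    ports = slots * PORTS_PER_CARD
    print(f"{model}: {ports} x 100GbE ports, "
          f"{bidirectional_tbps(slots)} Tbps bidirectional")
```

With these inputs, the QFX10008 works out to 240 ports and 48 Tbps, and the QFX10016 to 480 ports and 96 Tbps.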

What are the top features of the QFX10008 and QFX10016?

Three key features are described below.

- Virtual output queue (VOQ): The switches’ VOQ-based architecture is designed for very large deployments. During congestion, packets are queued and, if necessary, dropped at ingress, which avoids head-of-line blocking.

- Automation: The switches support a number of network automation features, including operations and event scripts, automatic rollback, zero-touch provisioning (ZTP), and Python scripting.

- High availability: The modular spine and core switches deliver a number of HA features, such as an extra slot for a redundant routing engine (RE) module, to ensure uninterrupted, carrier-class performance.
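The head-of-line blocking that VOQ avoids can be illustrated with a toy model. The sketch below compares a single ingress FIFO with one queue per egress port when one egress is congested. It is a conceptual illustration only: the port names `"A"` and `"B"` and both functions are hypothetical, drops are not modeled, and it does not represent the Q5 ASIC's actual scheduler.

```python
from collections import deque


def fifo_step(queue: deque, ready: set) -> list:
    """Single ingress FIFO: only the packet at the head may be sent."""
    sent = []
    if queue and queue[0] in ready:
        sent.append(queue.popleft())
    return sent


def voq_step(voqs: dict, ready: set) -> list:
    """One queue per egress port: every ready egress can be served."""
    sent = []
    for egress, queue in voqs.items():
        if queue and egress in ready:
            sent.append(queue.popleft())
    return sent


# Each packet is represented by the name of its egress port.
arrivals = ["A", "B", "B"]
ready = {"B"}  # egress A is congested; egress B can accept traffic

fifo = deque(arrivals)
voqs = {"A": deque(), "B": deque()}
for dest in arrivals:
    voqs[dest].append(dest)

print("FIFO sent:", fifo_step(fifo, ready))
print("VOQ sent:", voq_step(voqs, ready))
```

In the FIFO case the packet for congested egress A sits at the head and holds back the packets for B, so nothing is sent. With per-egress queues, traffic for B proceeds while traffic for A waits in its own queue.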

Who should use the QFX10008 and QFX10016?

Network operators challenged to meet rapid and ongoing traffic growth in data center and campus network deployments will benefit from the switches, which deliver up to 96 Tbps of system throughput, scalable to over 200 Tbps in the future. Their industry-leading scale and density redefine per-slot economics, enabling operators to do more with less while simplifying network design and reducing OpEx.