vSRX Virtual Firewall Cluster Staging and Provisioning for KVM

You can provision the vSRX Virtual Firewall VMs and virtual networks to configure chassis clustering.

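The staging and provisioning of the vSRX Virtual Firewall chassis cluster includes the following tasks:

Chassis Cluster Provisioning on vSRX Virtual Firewall

Creating the Chassis Cluster Virtual Networks with virt-manager

Creating the Chassis Cluster Virtual Networks with virsh

Configuring the Control and Fabric Interfaces with virt-manager

Configuring the Control and Fabric Interfaces with virsh

Configuring Chassis Cluster Fabric Ports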

Chassis Cluster Provisioning on vSRX Virtual Firewall

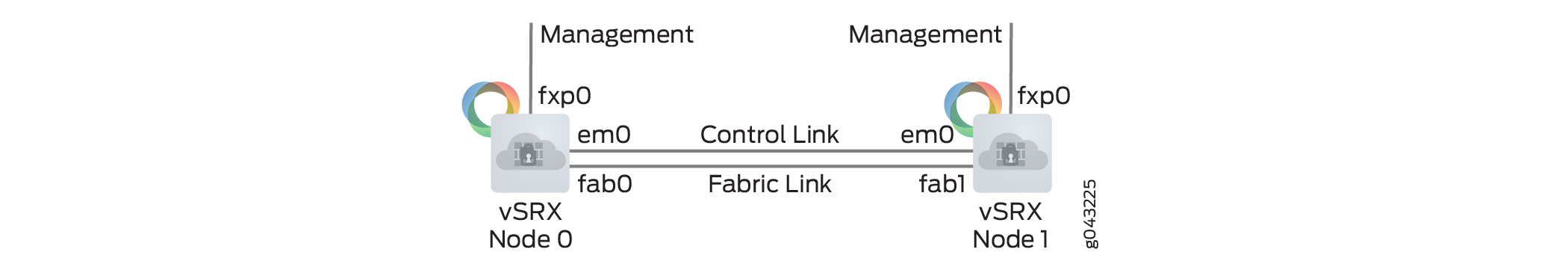

Chassis cluster requires the following direct connections between the two vSRX Virtual Firewall instances:

Control link (a virtual network), which operates in active/passive mode and carries control plane traffic between the two vSRX Virtual Firewall instances

Fabric link (a virtual network), which operates in active/active mode and carries data traffic between the two vSRX Virtual Firewall instances

Note: You can optionally create two fabric links for more redundancy.

The vSRX Virtual Firewall cluster uses the following interfaces:

Out-of-band management interface (fxp0)

Cluster control interface (em0)

Cluster fabric interface (fab0 on node0, fab1 on node1)

The control interface must be the second vNIC.

vSRX Virtual Firewall supports chassis cluster using the virtio driver and interfaces, with the following considerations:

When you enable chassis cluster, you must also enable jumbo frames (MTU of 9000) to support the fabric link on virtio network interfaces.

If you configure a chassis cluster across two physical hosts, disable IGMP snooping on the Linux bridge attached to each host physical interface that the vSRX Virtual Firewall control link uses. This ensures that both nodes in the chassis cluster receive the control link heartbeat.

hostOS# echo 0 > /sys/devices/virtual/net/<bridge-name>/bridge/multicast_snooping

After you enable chassis cluster, the vSRX Virtual Firewall instance maps the second vNIC to the control link, em0. You can map any other vNICs to the fabric link.

Virtio interfaces do not support link status updates; their link status is always reported as Up. As a result, a vSRX Virtual Firewall instance that uses virtio interfaces in a chassis cluster cannot receive link-up or link-down messages from those interfaces.

The virtual network MAC aging time determines the amount of time that an entry remains in the MAC table. We recommend that you reduce the MAC aging time on the virtual networks to minimize the downtime during failover.

For example, the brctl setageing <bridge-name> 1 command sets the MAC aging time of a Linux bridge to 1 second.
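The host-side recommendations above (jumbo frames, IGMP snooping, and MAC aging) can be gathered into one helper. The following is a minimal POSIX shell sketch, not a Juniper-provided script: the function name tune_cluster_bridge, the optional SYSFS_NET override, and the bridge and port names you pass in are assumptions. It must run as root on each host and uses brctl from bridge-utils, as in the example above. For simplicity it applies all three settings to whichever bridge you give it; drop the lines a given bridge does not need.

```shell
#!/bin/sh
# Sketch only: apply the host-side chassis cluster recommendations to one
# Linux bridge. Run as root. tune_cluster_bridge and SYSFS_NET are
# hypothetical names, not part of any Juniper tool.
#
# Usage: tune_cluster_bridge <bridge-name> [member-port ...]

tune_cluster_bridge() {
    br=$1
    shift

    # Jumbo frames for the fabric link: raise the MTU on each member
    # port (for example, the physical NIC) first, then on the bridge.
    for port in "$@"; do
        ip link set dev "$port" mtu 9000 || return 1
    done
    ip link set dev "$br" mtu 9000 || return 1

    # Disable multicast (IGMP) snooping so that the control link
    # heartbeat reaches both nodes when the cluster spans two hosts.
    echo 0 > "${SYSFS_NET:-/sys/devices/virtual/net}/$br/bridge/multicast_snooping" || return 1

    # Age out MAC table entries after 1 second to shorten failover.
    brctl setageing "$br" 1
}
```

For example, run tune_cluster_bridge br-ctl eth1 on each host, where br-ctl and eth1 are hypothetical names for the control bridge and its physical port. These settings do not persist across host reboots.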

You configure the virtual networks for the control and fabric links, then create and connect the control interface to the control virtual network and the fabric interface to the fabric virtual network.

Creating the Chassis Cluster Virtual Networks with virt-manager

In KVM, you create two virtual networks (control and fabric) to which you can connect each vSRX Virtual Firewall instance for chassis clustering.

To create a virtual network with virt-manager:

- Launch virt-manager and select Edit > Connection Details. The Connection details dialog box appears.

- Select Virtual Networks. The list of existing virtual networks appears.

- Click + to create a new virtual network for the control link. The Create a new virtual network wizard appears.

- Set the subnet for this virtual network and click Forward.

- Select Enable DHCP and click Forward.

- Select Isolated virtual network and click Forward.

- Verify the settings and click Finish to create the virtual network.

- Repeat these steps to create the virtual network for the fabric link.

Creating the Chassis Cluster Virtual Networks with virsh

In KVM, you create two virtual networks (control and fabric) to which you can connect each vSRX Virtual Firewall instance for chassis clustering.

To create the control network with virsh:
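The following is a minimal sketch of one way to create both networks, assuming libvirt 3.1.0 or later (required for the <mtu> element in network definitions). The network names (vsrx-control, vsrx-fabric), bridge names (virbr-ctl, virbr-fab), and subnets are hypothetical. The script writes a network definition for each link and then uses the standard virsh subcommands net-define, net-start, and net-autostart. Leaving out the <forward> element makes each network isolated, and the <ip> and <dhcp> elements mirror the subnet and DHCP choices in the virt-manager steps.

```shell
#!/bin/sh
# Sketch only: create the control and fabric virtual networks with virsh.
# Network names, bridge names, and subnets below are hypothetical.

# write_net_xml <network-name> <bridge-name> <first-three-octets>
write_net_xml() {
    # No <forward> element, so libvirt creates an isolated network.
    # <mtu size='9000'/> enables jumbo frames (libvirt 3.1.0 or later).
    cat > "$1.xml" <<EOF
<network>
  <name>$1</name>
  <bridge name='$2' stp='off'/>
  <mtu size='9000'/>
  <ip address='$3.1' netmask='255.255.255.0'>
    <dhcp>
      <range start='$3.2' end='$3.254'/>
    </dhcp>
  </ip>
</network>
EOF
}

write_net_xml vsrx-control virbr-ctl 192.168.100
write_net_xml vsrx-fabric virbr-fab 192.168.101

# Register, start, and autostart both networks (skipped if virsh is absent).
if command -v virsh >/dev/null 2>&1; then
    for net in vsrx-control vsrx-fabric; do
        virsh net-define "$net.xml" &&
            virsh net-start "$net" &&
            virsh net-autostart "$net"
    done
fi
```

You can check a result afterward with virsh net-list --all and virsh net-dumpxml vsrx-control.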

Configuring the Control and Fabric Interfaces with virt-manager

To configure the control and fabric interfaces for chassis clustering with virt-manager:

Configuring the Control and Fabric Interfaces with virsh

To configure the control and fabric interfaces on a vSRX Virtual Firewall VM with virsh:
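As a minimal sketch, assuming two libvirt networks named vsrx-control and vsrx-fabric already exist and the VMs are named vsrx-node0 and vsrx-node1 (all hypothetical names), the following function adds a virtio control vNIC and then a virtio fabric vNIC to one VM with virsh attach-interface. The --config flag changes the persistent VM definition, so run it with the VM shut off; the new vNICs appear at the next boot. Because the control interface must be the second vNIC, the VM should have only its management vNIC (fxp0) before you run it.

```shell
#!/bin/sh
# Sketch only: add the control and fabric vNICs to one vSRX VM with virsh.
# VM and network names are hypothetical. Run with the VM shut off; --config
# changes the persistent definition, which takes effect at the next boot.
#
# Usage: attach_cluster_nics <vm-name>

attach_cluster_nics() {
    vm=$1

    # Control link first: it must become the second vNIC (em0), so the VM
    # should have only its management vNIC (fxp0) at this point.
    virsh attach-interface --domain "$vm" --type network \
        --source vsrx-control --model virtio --config || return 1

    # Fabric link; any vNIC other than the control vNIC can map to the fabric.
    virsh attach-interface --domain "$vm" --type network \
        --source vsrx-fabric --model virtio --config || return 1

    # List interfaces in domain-definition order to check the control vNIC's position.
    virsh domiflist "$vm"
}
```

For example, run attach_cluster_nics vsrx-node0 and then attach_cluster_nics vsrx-node1.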

Configuring Chassis Cluster Fabric Ports

After the chassis cluster is formed, you must configure the interfaces that make up the fabric (data) ports.

Before you begin, ensure that you have:

Set the chassis cluster IDs on both vSRX Virtual Firewall instances and rebooted the instances.

Configured the control and fabric links.