AWS Elastic Load Balancing and Elastic Network Adapter

This section provides an overview of the AWS ELB and ENA features and also describes how these features are deployed on vSRX Virtual Firewall instances.

Overview of AWS Elastic Load Balancing

This section provides information about AWS ELB.

Elastic Load Balancing (ELB) is a load-balancing service for Amazon Web Services (AWS) deployments.

ELB distributes incoming application or network traffic across multiple targets, such as Amazon EC2 instances, containers, and IP addresses, in one or more availability zones. ELB scales your load balancer as traffic to your application changes over time, and can automatically scale to the vast majority of workloads.

AWS ELB with application load balancers enables automation by using the following AWS services:

Amazon Simple Notification Service—For more information, see https://docs.aws.amazon.com/sns/latest/dg/welcome.html.

AWS Lambda—For more information, see https://docs.aws.amazon.com/lambda/latest/dg/welcome.html.

AWS Auto Scaling group—For more information, see https://docs.aws.amazon.com/autoscaling/ec2/userguide/AutoScalingGroup.html.

Benefits of AWS Elastic Load Balancing

Provides elastic load balancing within an availability zone by automatically distributing incoming traffic.

Provides flexibility to virtualize your application targets by allowing you to host more applications on the same instance and to centrally manage Transport Layer Security (TLS) settings and offload CPU-intensive workloads from your applications.

Provides robust security features such as integrated certificate management, user authentication, and SSL/TLS decryption.

Supports automatically scaling to a sufficient number of application instances to meet varying levels of application load without manual intervention.

Enables you to monitor your applications and their performance in real time with Amazon CloudWatch metrics, logging, and request tracing.

Offers load balancing across AWS and on-premises resources using the same load balancer.

AWS Elastic Load Balancing Components

AWS Elastic Load Balancing (ELB) components include:

Load balancers—A load balancer serves as the single point of contact for clients. The load balancer distributes incoming application traffic across multiple targets, such as EC2 instances, in multiple availability zones (AZs), thereby increasing the availability of your application. You add one or more listeners to your load balancer.

Listeners or vSRX Virtual Firewall instances—A listener is a process that checks for connection requests using the protocol and port that you configure. vSRX Virtual Firewall instances acting as listeners check for connection requests from clients, using the protocol and port that you configure, and forward requests to one or more target groups based on the rules that you define. Each rule specifies a target group, a condition, and a priority. When the condition is met, the traffic is forwarded to the target group. You must define a default rule for each vSRX Virtual Firewall instance, and you can add rules that specify different target groups based on the content of the request (also known as content-based routing).

Target groups or vSRX Virtual Firewall application workloads—Each vSRX Virtual Firewall application, acting as a target group, routes requests to one or more registered targets. When you create a listener rule for each vSRX Virtual Firewall instance, you specify a vSRX Virtual Firewall application and conditions. When a rule condition is met, traffic is forwarded to the corresponding vSRX Virtual Firewall application. You can create different vSRX Virtual Firewall applications for different types of requests. For example, create one vSRX Virtual Firewall application for general requests and other vSRX Virtual Firewall applications for requests to the microservices of your application.
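The rule evaluation described above can be sketched in Python. This is a minimal model, not the AWS implementation: the request is a plain dictionary, conditions are Python callables, and the target group names (vsrx-general, vsrx-microservices, vsrx-shop) are hypothetical. Rules are checked from the lowest priority number upward, and the default rule is used only when no other rule matches.

```python
from dataclasses import dataclass
from typing import Callable, Mapping, Sequence

# Hypothetical request model: attributes such as "host" and "path".
Request = Mapping[str, str]


@dataclass(frozen=True)
class ListenerRule:
    priority: int                         # lower number is evaluated first
    condition: Callable[[Request], bool]  # content-based match on the request
    target_group: str                     # vSRX application acting as target group


def route(request: Request, rules: Sequence[ListenerRule], default_group: str) -> str:
    """Return the target group of the first matching rule, else the default rule's group."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.condition(request):
            return rule.target_group
    # The default rule applies when no other rule's condition is met.
    return default_group


rules = [
    ListenerRule(10, lambda r: r.get("path", "").startswith("/api/"), "vsrx-microservices"),
    ListenerRule(20, lambda r: r.get("host") == "shop.example.com", "vsrx-shop"),
]
print(route({"path": "/api/orders", "host": "shop.example.com"}, rules, "vsrx-general"))
# prints vsrx-microservices (priority 10 is checked before priority 20)
```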

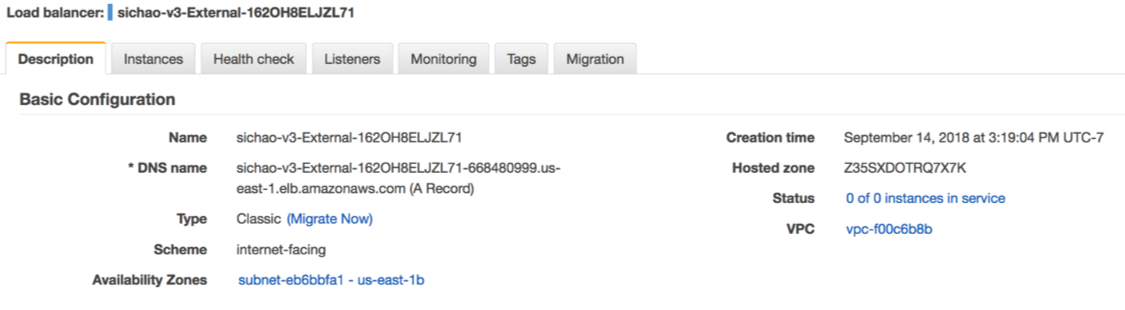

AWS ELB supports three types of load balancers: application load balancers, network load balancers, and classic load balancers. You can select a load balancer based on your application needs. For more information about the types of AWS ELB load balancers, see AWS Elastic Load Balancing.

Overview of Application Load Balancer

Starting in Junos OS Release 18.4R1, vSRX Virtual Firewall instances support AWS Elastic Load Balancing (ELB) using the application load balancer to provide scalable security for Internet-facing traffic by using native AWS services. An application load balancer automatically distributes incoming application traffic and scales resources to meet traffic demands.

You can also configure health checks to monitor the health of the registered targets so that the load balancer can send requests only to the healthy targets.
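A minimal sketch of how threshold-based health checks decide which targets receive requests. The consecutive-success and consecutive-failure thresholds (3 and 2 here), the target names, and the choice to treat new targets as unhealthy until they pass are assumptions for illustration; the actual values come from the target group's health check settings.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class HealthTracker:
    """Threshold-based health tracking; threshold values are illustrative assumptions."""
    healthy_threshold: int = 3    # consecutive passes needed to become healthy
    unhealthy_threshold: int = 2  # consecutive failures needed to become unhealthy
    state: Dict[str, bool] = field(default_factory=dict)
    streak: Dict[str, int] = field(default_factory=dict)

    def register(self, target: str) -> None:
        # A newly registered target receives no requests until it passes checks.
        self.state[target] = False
        self.streak[target] = 0

    def record(self, target: str, passed: bool) -> None:
        if passed == self.state[target]:
            # Result agrees with the current state, so any opposing streak resets.
            self.streak[target] = 0
            return
        self.streak[target] += 1
        needed = self.healthy_threshold if passed else self.unhealthy_threshold
        if self.streak[target] >= needed:
            self.state[target] = passed
            self.streak[target] = 0

    def healthy_targets(self) -> List[str]:
        # Only these targets are eligible to receive requests.
        return [t for t, ok in self.state.items() if ok]


tracker = HealthTracker()
for t in ("vsrx-a", "vsrx-b"):
    tracker.register(t)
for _ in range(3):
    tracker.record("vsrx-a", True)
    tracker.record("vsrx-b", True)
tracker.record("vsrx-b", False)
tracker.record("vsrx-b", False)
print(tracker.healthy_targets())  # ['vsrx-a']
```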

The key features of an application load balancer are:

Layer-7 load balancing

HTTPS support

High availability

Security features

Containerized application support

HTTP/2 support

WebSockets support

Native IPv6 support

Sticky sessions

Health checks with operational monitoring, logging, and request tracing

Web Application Firewall (WAF)

When the application load balancer receives a request, it evaluates the rules of the vSRX Virtual Firewall instance in order of priority to determine which rule to apply, and then selects a target from the vSRX Virtual Firewall application for the rule action. You can configure a vSRX Virtual Firewall instance rule to route requests to different target groups based on the content of the application traffic. Routing is performed independently for each target group, even when a target is registered with multiple target groups.
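To illustrate that routing is performed independently for each target group, the following sketch keeps a separate round-robin position per group. Round robin is assumed here as the selection algorithm, and the group and target names are hypothetical. vsrx-b is registered in both groups, yet picking from one group does not advance the other.

```python
from itertools import cycle
from typing import Dict, Iterator, List


class TargetGroups:
    """Round-robin target selection kept separately per target group (illustrative)."""

    def __init__(self, groups: Dict[str, List[str]]) -> None:
        # One independent iterator per group: a target registered in two
        # groups shares no selection state between them.
        self._iters: Dict[str, Iterator[str]] = {
            name: cycle(targets) for name, targets in groups.items()
        }

    def pick(self, group: str) -> str:
        return next(self._iters[group])


tg = TargetGroups({
    "general": ["vsrx-a", "vsrx-b"],
    "microservices": ["vsrx-b", "vsrx-c"],  # vsrx-b is in both groups
})
picks = [tg.pick("general"), tg.pick("microservices"),
         tg.pick("general"), tg.pick("microservices")]
print(picks)  # ['vsrx-a', 'vsrx-b', 'vsrx-b', 'vsrx-c']
```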

You can add and remove targets from your load balancer as your needs change, without disrupting the overall flow of requests to your application. ELB scales your load balancer as traffic to your application changes over time and can scale the majority of workloads automatically.

You can view the launch sequence and the current screen of a vSRX Virtual Firewall instance deployed with the application load balancer from the instance properties. When vSRX Virtual Firewall runs as an AWS instance, logging in to the instance through SSH starts a Junos OS session, and you can use standard Junos OS CLI commands to monitor the health and statistics of the instance. If the #load_balancer=true tag is sent in user data, the boot-up messages indicate that the vSRX Virtual Firewall interfaces are configured for ELB and auto-scaling support, and interfaces eth0 and eth1 are swapped.

If an unsupported Junos OS configuration is sent to the vSRX Virtual Firewall instance in user data, then the vSRX Virtual Firewall instance reverts to its factory-default configuration. If the #load_balancer=true tag is missing, then interfaces are not swapped.
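The tag handling described above can be modeled with a small parser. This is a hypothetical sketch that only shows the decision logic (tag present means the interfaces are swapped, tag absent means they are not); it is not the vSRX Virtual Firewall boot code, and the UserDataFlags and parse_user_data names are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserDataFlags:
    load_balancer: bool  # True when the #load_balancer=true tag is present
    junos_config: str    # text after the #junos-config tag ("" if absent)


def parse_user_data(user_data: str) -> UserDataFlags:
    """Hypothetical parser; the real vSRX boot scripts may behave differently."""
    load_balancer = False
    in_config = False
    config_lines = []
    for line in user_data.splitlines():
        stripped = line.strip()
        if stripped == "#load_balancer=true":
            load_balancer = True  # interfaces eth0 and eth1 will be swapped
        elif stripped == "#junos-config":
            in_config = True      # everything after this tag is configuration
        elif in_config:
            config_lines.append(line)
    return UserDataFlags(load_balancer, "\n".join(config_lines))


flags = parse_user_data("#load_balancer=true\n#junos-config\nsystem {\n}\n")
print(flags.load_balancer)  # True
```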

Deployment of AWS Application Load Balancer

AWS ELB application load balancer can be deployed in two ways:

vSRX Virtual Firewall behind AWS ELB application load balancer

ELB sandwich


vSRX Virtual Firewall Behind AWS ELB Application Load Balancer Deployment

In this type of deployment, the vSRX Virtual Firewall instances are attached to the application load balancer, in one or more availability zones (AZs), and the application workloads are behind the vSRX Virtual Firewall instances. The application load balancer sends traffic only to the primary interface of the instance. For a vSRX Virtual Firewall instance, the primary interface is the management interface fxp0.

To enable ELB in this deployment, you must swap the management interface and the first revenue interface.
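The effect of the swap can be shown as a mapping from the instance's network interfaces to Junos OS interface names. The sketch assumes that, without the swap, eth0 (the primary interface) maps to fxp0 and each later interface maps to the next ge-0/0/N revenue interface; interface_map is a hypothetical helper, not part of Junos OS.

```python
from typing import Dict


def interface_map(num_enis: int, load_balancer: bool) -> Dict[str, str]:
    """Map eth0, eth1, ... to Junos OS interface names (assumed default ordering)."""
    junos_names = ["fxp0"] + [f"ge-0/0/{i}" for i in range(num_enis - 1)]
    if load_balancer and num_enis >= 2:
        # Swap management and first revenue interface so that the primary
        # interface, the only one the load balancer sends traffic to, is ge-0/0/0.
        junos_names[0], junos_names[1] = junos_names[1], junos_names[0]
    return {f"eth{i}": name for i, name in enumerate(junos_names)}


print(interface_map(3, load_balancer=True))
# {'eth0': 'ge-0/0/0', 'eth1': 'fxp0', 'eth2': 'ge-0/0/1'}
```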

Figure 1 illustrates the vSRX Virtual Firewall behind AWS ELB application load balancer deployment.

Enabling AWS ELB with vSRX Virtual Firewall Behind AWS ELB Application Load Balancer Deployment

The following are the prerequisites for enabling AWS ELB with the vSRX Virtual Firewall behind AWS ELB application load balancer type of deployment:

All incoming and outgoing ELB traffic is monitored from the ge-0/0/0 interface associated with the vSRX Virtual Firewall instance.

At launch, the vSRX Virtual Firewall instance has two interfaces, and the subnets containing these interfaces are connected to the internet gateway (IGW). This two-interface limit is imposed by the AWS Auto Scaling group deployment. At least one interface must be in the same subnet as the AWS ELB. Additional interfaces can be attached by the Lambda function.

Source or destination check is disabled on the eth1 interface of the vSRX Virtual Firewall instance.

For deploying an AWS ELB application load balancer using the vSRX Virtual Firewall behind AWS ELB application load balancer method:

The vSRX Virtual Firewall instance contains:

Cloud initialization (cloud-init) user data with ELB tag as #load_balancer=true.

User data configuration with the #junos-config tag that configures fxp0 and ge-0/0/0 with DHCP (both interfaces must use DHCP), plus any security group that it needs to define.

Amazon CloudWatch triggers an Amazon Simple Notification Service (SNS) notification, which in turn triggers a Lambda function that creates an Elastic Network Interface (ENI) with an Elastic IP address (EIP) and attaches it to the vSRX Virtual Firewall instance. Up to eight new ENIs can be attached to this instance.

The vSRX Virtual Firewall instance must then be rebooted. A reboot is also required every subsequent time the vSRX Virtual Firewall instance launches with swapped interfaces.

Note: Chassis cluster support for swapping ENIs between instances and for IP monitoring does not work.
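The following is a minimal sketch of the Lambda step, written against the boto3 EC2 client calls create_network_interface, attach_network_interface, allocate_address, and associate_address. It is not the contents of add_eni.zip. Assumptions: the SNS message is JSON with an EC2InstanceId field, new interfaces start at device index 2 because indexes 0 and 1 are used at launch, and the client is passed in as a parameter so the logic can be tested without AWS access. In a deployed function you would create the client with boto3.client("ec2") inside a handler(event, context) entry point.

```python
import json
from typing import Any, Dict, List

MAX_EXTRA_ENIS = 8  # maximum number of new ENIs stated in this section


def attach_eni_with_eip(ec2: Any, instance_id: str, subnet_id: str,
                        security_group_id: str, device_index: int) -> Dict[str, str]:
    """Create an ENI, attach it to the instance, and associate a new Elastic IP."""
    eni = ec2.create_network_interface(SubnetId=subnet_id, Groups=[security_group_id])
    eni_id = eni["NetworkInterface"]["NetworkInterfaceId"]
    ec2.attach_network_interface(NetworkInterfaceId=eni_id, InstanceId=instance_id,
                                 DeviceIndex=device_index)
    eip = ec2.allocate_address(Domain="vpc")
    ec2.associate_address(AllocationId=eip["AllocationId"], NetworkInterfaceId=eni_id)
    return {"eni": eni_id, "allocation": eip["AllocationId"]}


def handler(event: Dict[str, Any], ec2: Any, subnet_id: str,
            security_group_id: str, count: int = 1) -> List[Dict[str, str]]:
    """SNS-triggered entry point (signature simplified for testing)."""
    if count > MAX_EXTRA_ENIS:
        raise ValueError(f"at most {MAX_EXTRA_ENIS} new ENIs can be attached")
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    instance_id = message["EC2InstanceId"]  # assumed field in the notification
    # Device indexes 0 and 1 are assumed to be taken by the launch-time interfaces.
    return [attach_eni_with_eip(ec2, instance_id, subnet_id, security_group_id, 2 + i)
            for i in range(count)]
```

After the interfaces are attached, the instance still has to be rebooted as described above.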

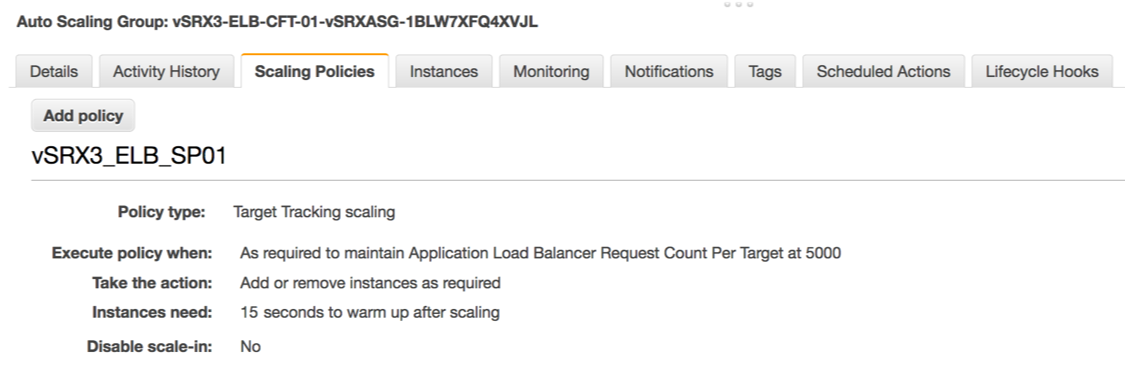

You can also launch the vSRX Virtual Firewall instance in an Auto Scaling group (ASG). This launch can be automated by using an AWS CloudFormation template (CFT).

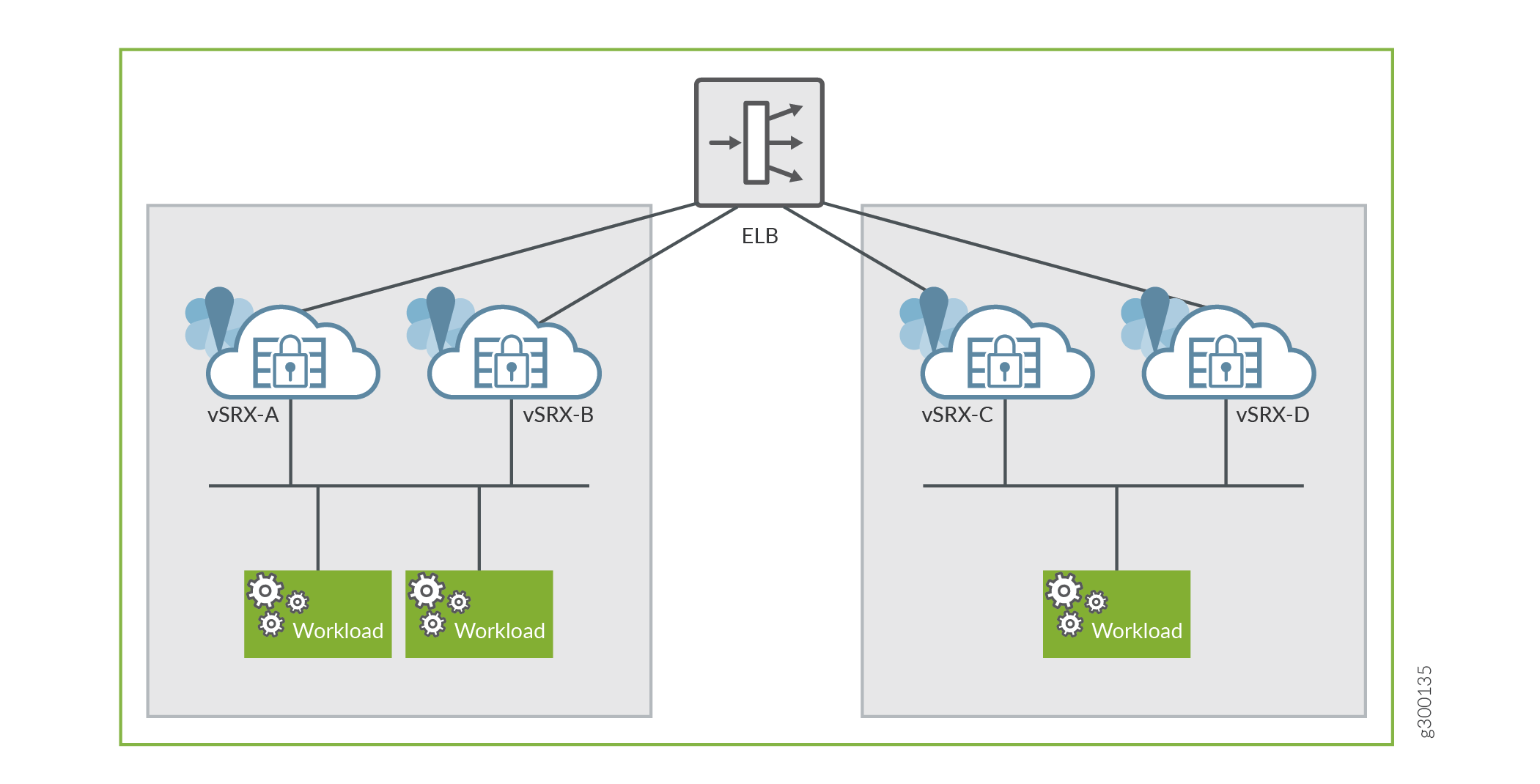

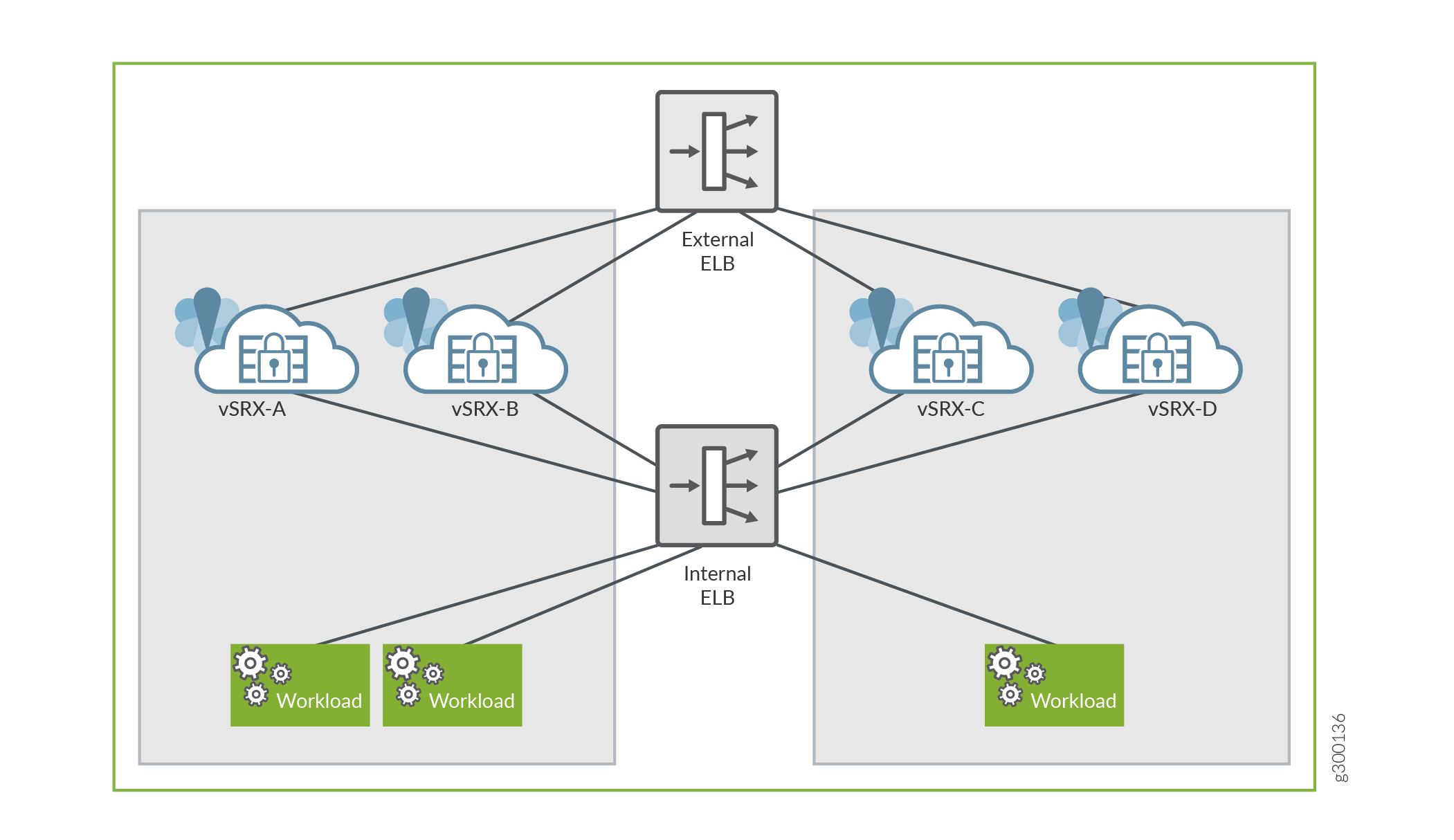

Sandwich Deployment of AWS ELB Application Load Balancer

In this deployment model, you can scale both security and applications. The vSRX Virtual Firewall instances and the applications are in different ASGs, and each ASG is attached to a different application load balancer. This type of ELB deployment is an elegant and simplified way to manually scale vSRX Virtual Firewall deployments to address planned or projected traffic increases while also delivering multi-AZ high availability. The deployment ensures inbound high availability and scaling for AWS deployments.

Because the load balancer scales dynamically, its virtual IP address (VIP) is exposed as a fully qualified domain name (FQDN). This FQDN resolves to multiple IP addresses depending on the availability zone. Therefore, the vSRX Virtual Firewall instance must be able to send and receive traffic to and from the FQDN (that is, the multiple addresses that it resolves to).

You configure this FQDN by using the set security zones security-zone ELB-TRAFFIC address-book address ELB dns-name FQDN_OF_ELB command.
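The reason the address book entry uses dns-name rather than a fixed address can be seen by resolving a name and collecting every address it returns. This sketch uses Python's standard socket.getaddrinfo; in practice you would pass the load balancer's DNS name, and the returned set can change between lookups as the load balancer scales. The example resolves localhost only so that it runs without network access.

```python
import socket
from typing import List


def resolve_all(fqdn: str) -> List[str]:
    """Return every distinct IPv4 address that the name currently resolves to."""
    infos = socket.getaddrinfo(fqdn, None, family=socket.AF_INET, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


print(resolve_all("localhost"))
```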

Figure 2 illustrates the AWS ELB application load balancer sandwich deployment for vSRX Virtual Firewall.

Enabling Sandwich Deployment of AWS Application Load Balancer for vSRX Virtual Firewall

For AWS ELB application load balancer sandwich deployment for vSRX Virtual Firewall:

vSRX Virtual Firewall receives the #load_balancer=true tag in cloud-init user data.

In Junos OS, the initial boot process scans the mounted disk for the presence of the flag file in the setup_vsrx file. If the file is present, it indicates that two interfaces must be configured with DHCP in two different virtual references. When the flag file is present, this scan and configuration update is performed on the default configuration and on top of the user data.

Note: If user data is present, the boot time after the second or third mgd process commit increases.

You must reboot the vSRX Virtual Firewall instance. A reboot is also required every subsequent time the vSRX Virtual Firewall instance is launched with swapped interfaces.

Note: Chassis cluster support for swapping Elastic Network Interfaces (ENIs) between instances and for IP monitoring does not work.

You can also launch the vSRX Virtual Firewall instance in an ASG and automate the deployment by using an AWS CloudFormation template (CFT).

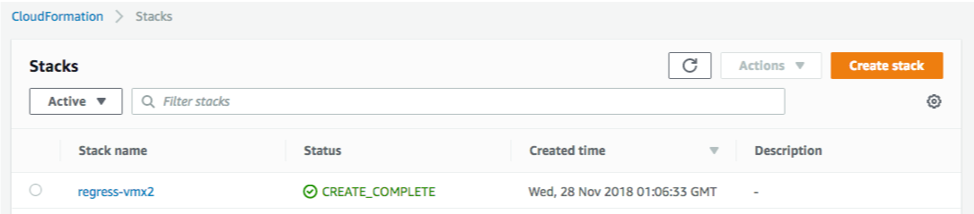

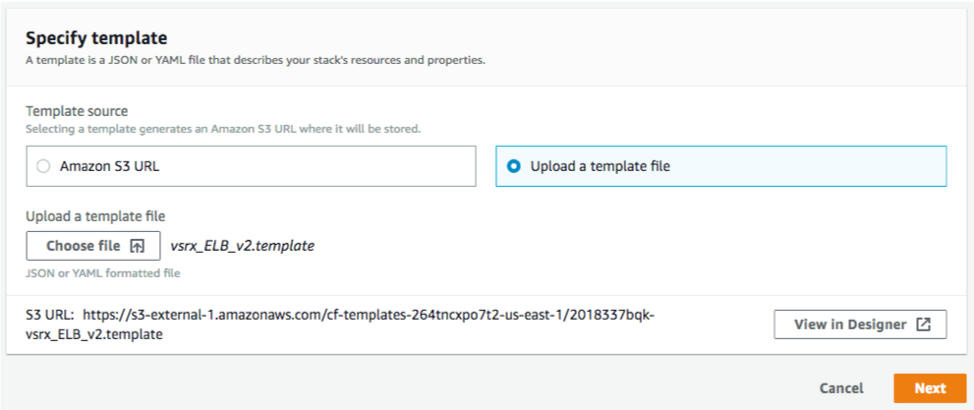

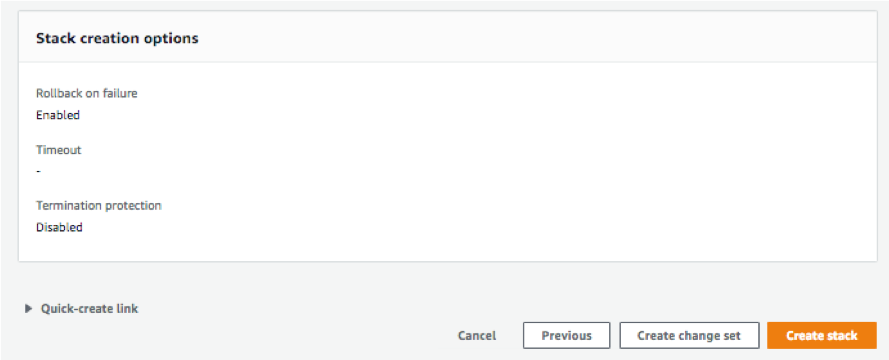

Invoking Cloud Formation Template (CFT) Stack Creation for vSRX Virtual Firewall Behind AWS Application Load Balancer Deployment

This topic provides details on how to invoke AWS CloudFormation template (CFT) stack creation for the non-sandwich deployment (vSRX Virtual Firewall behind the AWS application load balancer), which contains only one load balancer.

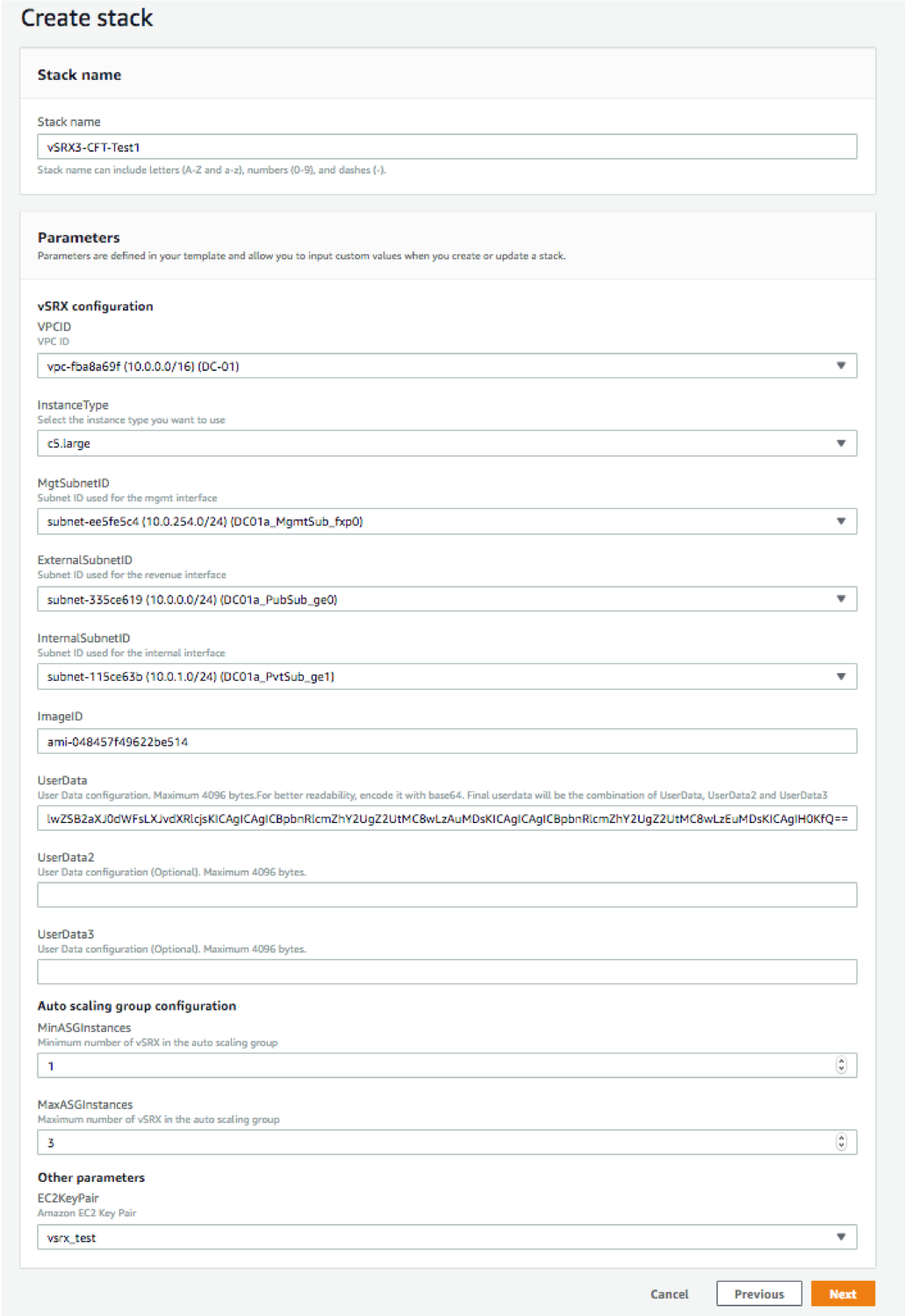

Before you invoke the CFT stack creation, ensure that you have the following already available within your AWS environment:

A VPC, created and ready to use.

A management subnet

An external subnet (subnet for vSRX Virtual Firewall interface receiving traffic from the ELB).

An internal subnet (subnet for vSRX Virtual Firewall interface sending traffic to the workload).

An AMI ID of the vSRX Virtual Firewall instance that you want to launch.

User data (the vSRX Virtual Firewall configuration that must be committed before traffic is forwarded to the workload). The user data is Base64-encoded, and each field can be at most 4096 characters long; if the data exceeds 4096 characters, you can use up to three user data fields.

EC2 key file.

Download the Lambda function file add_eni.zip from the Juniper vSRX Virtual Firewall GitHub repository and upload it to your S3 bucket. Use this location in the Lambda S3 Location field of the template.

Your AWS account should have permissions to create Lambda functions on various resources in your region.
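A sketch of preparing user data that respects the 4096-character field limit. It assumes that each field is Base64-encoded independently and that the decoded fields are concatenated in order; check the template's documentation to confirm how the fields are combined. Because 3072 bytes encode to exactly 4096 Base64 characters, the raw configuration is split into 3072-byte pieces before encoding. encode_user_data is a hypothetical helper name.

```python
import base64
from typing import List

FIELD_LIMIT = 4096  # maximum characters per user data field
MAX_FIELDS = 3      # maximum number of user data fields


def encode_user_data(config: str) -> List[str]:
    """Base64-encode config into at most three fields of <= 4096 characters each.

    Assumes ASCII configuration text, so byte boundaries never split a character.
    """
    raw = config.encode("utf-8")
    chunk = FIELD_LIMIT // 4 * 3  # 3072 raw bytes -> 4096 Base64 characters
    pieces = [raw[i:i + chunk] for i in range(0, len(raw), chunk)] or [b""]
    if len(pieces) > MAX_FIELDS:
        raise ValueError("configuration too large for three user data fields")
    return [base64.b64encode(p).decode("ascii") for p in pieces]
```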

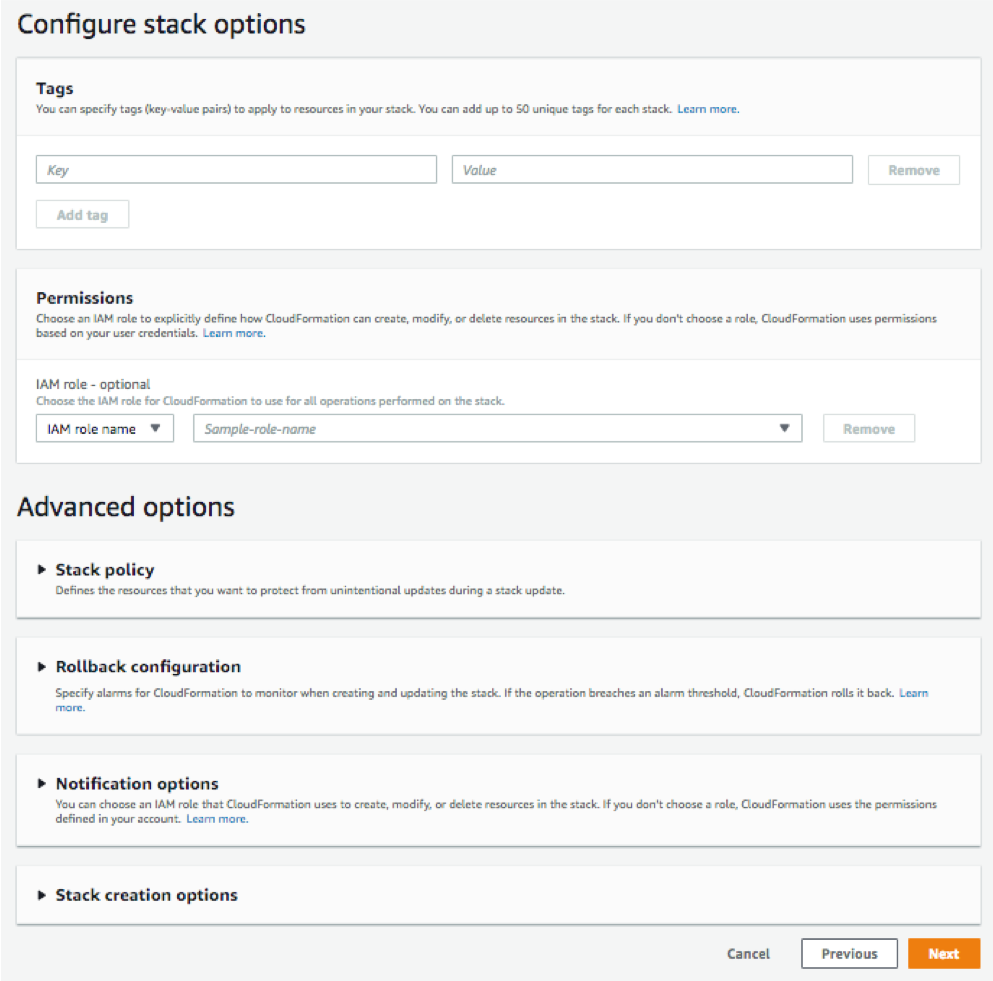

Follow these steps to invoke CFT stack creation for AWS ELB with the vSRX Virtual Firewall behind AWS ELB application load balancer deployment.
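If you prefer to start the stack from a script instead of the AWS console, the boto3 CloudFormation create_stack call can be used. This is a sketch under assumptions: the template URL and parameter keys (such as AmiId) are hypothetical and must match the parameters defined in the actual Juniper template, and CAPABILITY_IAM is included on the assumption that the template creates IAM roles for its Lambda functions. The client is passed in so the function can be tested without AWS access.

```python
from typing import Any, Dict


def create_vsrx_elb_stack(cfn: Any, stack_name: str, template_url: str,
                          params: Dict[str, str]) -> str:
    """Start stack creation and return the stack ID.

    cfn is a CloudFormation client, for example boto3.client("cloudformation").
    """
    response = cfn.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        # Parameter keys are hypothetical; use the names the template defines.
        Parameters=[{"ParameterKey": k, "ParameterValue": v} for k, v in params.items()],
        # Assumed: the template creates IAM roles for its Lambda functions.
        Capabilities=["CAPABILITY_IAM"],
    )
    return response["StackId"]
```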

Sample Configuration of AWS Elastic Load Balancer with vSRX Virtual Firewall instance for HTTP Traffic

You need your DNS server IP address and your web server IP address. If your web server is behind a load balancer, use that load balancer's IP address instead of the web server IP address in the configuration below.

After substituting your IP addresses into the configuration below, convert the configuration to Base64 format (for example, by using https://www.base64encode.org/) and paste the converted configuration into the UserData field. During the stack creation process, this configuration is then applied on top of the existing default configuration of the vSRX Virtual Firewall instance launched in AWS.

```
#load_balancer=true
#junos-config
system {
    name-server {
        <Your DNS Server IP>;
    }
    syslog {
        file messages {
            any any;
        }
    }
}
security {
    address-book {
        global {
            address websrv <Your Web Server IP>/32;
        }
    }
    nat {
        source {
            rule-set src-nat {
                from interface ge-0/0/0.0;
                to zone trust;
                rule rule1 {
                    match {
                        source-address 0.0.0.0/0;
                        destination-port {
                            80;
                        }
                    }
                    then {
                        source-nat {
                            interface;
                        }
                    }
                }
            }
        }
        destination {
            pool pool1 {
                address <Your Web Server IP>/32;
            }
            rule-set dst-nat {
                from interface ge-0/0/0.0;
                rule rule1 {
                    match {
                        destination-address 0.0.0.0/0;
                        destination-port {
                            80;
                        }
                    }
                    then {
                        destination-nat {
                            pool {
                                pool1;
                            }
                        }
                    }
                }
            }
        }
    }
    policies {
        from-zone untrust to-zone trust {
            policy mypol {
                match {
                    source-address any;
                    destination-address any;
                    application any;
                }
                then {
                    permit;
                }
            }
        }
    }
    zones {
        security-zone trust {
            host-inbound-traffic {
                system-services {
                    any-service;
                }
                protocols {
                    all;
                }
            }
            interfaces {
                ge-0/0/1.0;
            }
        }
        security-zone untrust {
            host-inbound-traffic {
                system-services {
                    any-service;
                }
                protocols {
                    all;
                }
            }
            interfaces {
                ge-0/0/0.0;
            }
        }
    }
}
interfaces {
    ge-0/0/0 {
        unit 0 {
            family inet {
                dhcp;
            }
        }
    }
    ge-0/0/1 {
        unit 0 {
            family inet {
                dhcp;
            }
        }
    }
}
routing-instances {
    ELB_RI {
        instance-type virtual-router;
        interface ge-0/0/0.0;
        interface ge-0/0/1.0;
    }
}
```
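The substitution and Base64 conversion can also be done locally instead of through a website. This minimal sketch assumes that the configuration above is available as a string (for example, read from a file) and that the placeholders appear exactly as <Your DNS Server IP> and <Your Web Server IP>; render_user_data is a hypothetical helper name, and the example uses a shortened template fragment.

```python
import base64


def render_user_data(template: str, dns_ip: str, web_ip: str) -> str:
    """Fill in the placeholders and return the Base64 text for the UserData field."""
    filled = (template
              .replace("<Your DNS Server IP>", dns_ip)
              .replace("<Your Web Server IP>", web_ip))
    encoded = base64.b64encode(filled.encode("utf-8")).decode("ascii")
    if len(encoded) > 4096:
        raise ValueError("encoded user data exceeds 4096 characters; split it across fields")
    return encoded


template = "#load_balancer=true\n#junos-config\naddress websrv <Your Web Server IP>/32;\n"
print(base64.b64decode(render_user_data(template, "10.0.0.2", "10.0.1.10")).decode())
```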

Overview of AWS Elastic Network Adapter (ENA) for vSRX Virtual Firewall Instances

Amazon Elastic Compute Cloud (EC2) provides enhanced networking capabilities through the Elastic Network Adapter (ENA), the next-generation network interface and accompanying drivers that provide enhanced networking on EC2 vSRX Virtual Firewall instances.

Benefits

Supports multiqueue device interfaces. ENA makes use of multiple transmit and receive queues to reduce internal overhead and to increase scalability. The presence of multiple queues simplifies and accelerates the process of mapping incoming and outgoing packets to a particular vCPU.

The ENA driver supports industry-standard TCP/IP offload features such as checksum offload and TCP transmit segmentation offload (TSO).

Supports receive-side scaling (RSS), a network driver technology that efficiently distributes network receive processing across multiple CPUs in multiprocessor systems for multicore scaling. Some ENA devices support a working mode called low-latency queue (LLQ), which saves several microseconds of latency.
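Receive-side scaling typically hashes each flow's addresses and ports so that all packets of a flow land on the same receive queue. The sketch below implements the Toeplitz hash, a common RSS hash function, with the widely published 40-byte default key from Microsoft's RSS documentation. Whether ENA uses this hash function or this key is not stated here, and real implementations usually index an indirection table with the low-order hash bits, so the modulo step is a simplification. rx_queue is a hypothetical helper name.

```python
import ipaddress

# Widely published 40-byte RSS key (Microsoft RSS verification key).
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0d0ca2bcbae7b30b477cb2da38030f20c"
    "6a42b73bbeac01fa"
)


def toeplitz_hash(data: bytes, key: bytes = RSS_KEY) -> int:
    """32-bit Toeplitz hash: XOR a sliding 32-bit key window for every set input bit."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    result = 0
    for i in range(len(data) * 8):
        byte, bit = divmod(i, 8)
        if data[byte] & (0x80 >> bit):
            # Window of key bits i .. i+31, counted from the most significant bit.
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def rx_queue(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
             num_queues: int) -> int:
    """Pick a receive queue for an IPv4/TCP flow (simplified: hash modulo queue count)."""
    data = (ipaddress.IPv4Address(src_ip).packed + ipaddress.IPv4Address(dst_ip).packed
            + src_port.to_bytes(2, "big") + dst_port.to_bytes(2, "big"))
    return toeplitz_hash(data) % num_queues
```

Because the hash depends only on the flow's addresses and ports, every packet of a flow maps to the same queue, and therefore the same vCPU, while different flows spread across queues.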

Understanding AWS Elastic Network Adapter

Enhanced networking uses single-root I/O virtualization (SR-IOV) to provide high-performance networking capabilities on supported instance types. SR-IOV is a method of device virtualization that provides higher I/O performance and lower CPU utilization when compared to traditional virtualized network interfaces. Enhanced networking provides higher bandwidth, higher packets per second (PPS) performance, and consistently lower inter-instance latencies. There is no additional charge for using enhanced networking.

ENA is a custom network interface optimized to deliver high throughput and PPS performance, and consistently low latencies on EC2 vSRX Virtual Firewall instances. Using ENA for vSRX Virtual Firewall C5.large instances (with 2 vCPUs and 4 GB of memory), you can utilize up to 20 Gbps of network bandwidth. ENA-based enhanced networking is supported on vSRX Virtual Firewall instances.

The ENA driver exposes a lightweight management interface with a minimal set of memory-mapped registers and an extendable command set through an admin queue. The driver supports a wide range of ENA adapters, is link-speed independent (that is, the same driver is used for 10 Gbps, 25 Gbps, 40 Gbps, and so on), and negotiates and supports various features. The ENA enables high-speed and low-overhead Ethernet traffic processing by providing a dedicated Tx/Rx queue pair per CPU core.

The DPDK drivers for ENA are available at https://github.com/amzn/amzn-drivers/tree/master/userspace/dpdk.

When AWS ELB application load balancers are used, the eth0 (first) and eth1 (second) interfaces are swapped for the vSRX Virtual Firewall instance. The AWS ENA detects the swapped interfaces and rebinds each one to its corresponding kernel driver.