High Availability Overview

High Availability (HA) on NorthStar Controller is an active/standby solution. That means that there is only one active node at a time, with all other nodes in the cluster serving as standby nodes. All of the nodes in a cluster must be on the same subnet for HA to support virtual IP (VIP). On the active node, all processes are running. On the standby nodes, the processes required to maintain connectivity are running, but NorthStar processes are in a stopped state. If the active node experiences a hardware- or software-related connectivity failure, the NorthStar ha_agent process elects a new active node from among the standby nodes. Complete failover is achieved within five minutes. One of the factors in the selection of the new active node is the user-configured priorities of the candidate nodes.

All processes are started on the new active node, and the node configures the virtual IP address based on the user configuration (via net_setup.py). The virtual IP can be used for client-facing interfaces as well as for PCEP sessions.

Throughout your use of NorthStar Controller HA, be aware that you must replicate any changes you make to northstar.cfg to all cluster nodes so the configuration is uniform across the cluster. cMGD configuration changes, on the other hand, are replicated across the cluster nodes automatically.
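One way to confirm that northstar.cfg is uniform across the cluster is to compare a digest of each node's copy. The following is a minimal sketch, not a NorthStar utility: the node names and the `nodes_out_of_sync` helper are hypothetical, and the sketch assumes you have already retrieved each node's file contents by some means of your own.

```python
import hashlib
from collections import Counter


def config_digest(content: bytes) -> str:
    """Return the SHA-256 hex digest of one node's northstar.cfg contents."""
    return hashlib.sha256(content).hexdigest()


def nodes_out_of_sync(configs: dict[str, bytes]) -> list[str]:
    """Return the nodes whose file differs from the most common version.

    `configs` maps a node name (hypothetical) to the raw bytes of that
    node's northstar.cfg, collected beforehand by the operator.
    """
    if not configs:
        return []
    digests = {node: config_digest(data) for node, data in configs.items()}
    # Treat the version held by the most nodes as the reference copy.
    reference, _ = Counter(digests.values()).most_common(1)[0]
    return sorted(node for node, d in digests.items() if d != reference)


# Example with made-up contents: node3 was not updated.
example = {
    "node1": b"setting=1\n",
    "node2": b"setting=1\n",
    "node3": b"setting=2\n",
}
print(nodes_out_of_sync(example))
```

The sketch only reports which copies differ; it does not decide which version is correct, so review the flagged nodes manually before copying the file.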

Failure Scenarios

NorthStar Controller HA protects the network from the following failure scenarios:

- Hardware failures (server power outage, server network-facing interfaces, or network-facing Ethernet cable failure)
- Operating system failures (server operating system reboot, server operating system not responding)
- Software failures (failure of any process running on the active server when it is unable to recover locally)

Failover and the NorthStar Controller User Interfaces

If failover occurs while you are working in the NorthStar Controller Java Planner client, the client is disconnected and you must re-launch NorthStar Controller using the client-facing interface virtual IP address.

If the server has only one interface or if you only want to use one interface, the network-facing interface is then also the client-facing interface.

The Web UI also loses connectivity upon failover, requiring you to log in again.

Support for Multiple Network-Facing Interfaces

Up to five network-facing interfaces are supported for High Availability (HA) deployments, one of which you designate as the cluster communication (Zookeeper) interface. The net_setup.py utility allows configuration of the monitored interfaces in both the host configuration (Host interfaces 1 through 5) and the JunosVM configuration (JunosVM interfaces 1 through 5). In HA Setup, net_setup.py enables configuration of all the interfaces on each of the nodes in the HA cluster.

The ha_agent sends probes using ICMP packets (ping) to remote cluster endpoints (including the Zookeeper interface) to monitor the connectivity of the interfaces. If the packet is not received within the timeout period, the neighbor is declared unreachable. The ha_agent updates Zookeeper on any interface status changes and propagates that information across the cluster. You can configure the interval and timeout values for the cluster in the HA setup script. Default values are 10 seconds and 30 seconds, respectively.
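The relationship between the probe interval and the timeout can be illustrated with a small simulation. This is a sketch under stated assumptions, not the ha_agent implementation: it assumes one probe per interval, assumes the timeout is measured from the last reply received, and assumes the neighbor was reachable at time zero. The `simulate_reachability` name is hypothetical.

```python
def simulate_reachability(replies, interval=10.0, timeout=30.0):
    """Return the neighbor's reachability state after each probe.

    `replies` holds one boolean per probe (True if a reply came back).
    Probes are assumed to be sent every `interval` seconds, and the
    neighbor is treated as unreachable once `timeout` seconds have
    elapsed since the last reply. Defaults mirror the documented
    10-second interval and 30-second timeout.
    """
    last_reply = 0.0  # assumption: neighbor was reachable at start
    states = []
    for probe_number, got_reply in enumerate(replies, start=1):
        now = probe_number * interval
        if got_reply:
            last_reply = now
        states.append(now - last_reply < timeout)
    return states


# One reply, three consecutive losses, then recovery.
print(simulate_reachability([True, False, False, False, True]))
# -> [True, True, True, False, True]
```

Under these assumptions and the default values, a neighbor is declared unreachable after three consecutive lost probes, and a single reply restores it.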

The HA setup utility also includes an option to configure, for each interface, whether switchover is allowed.

For nested VM configurations, you may need to modify supervisord-junos.sh to support the additional interfaces for JunosVM.

LSP Discrepancy Report

During an HA switchover, the PCS server performs LSP reconciliation. The reconciliation produces the LSP discrepancy report, which identifies LSPs that the PCS server has discovered might require re-provisioning.

Only PCC-initiated and PCC-delegated LSPs are included in the report.

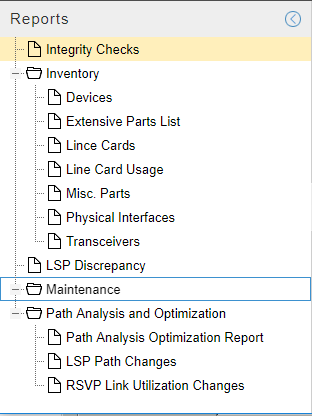

Access the report by navigating to Applications > Reports. Figure 1 shows a list of available reports, including the LSP Discrepancy report.

Cluster Configuration

The NorthStar implementation of HA requires that the cluster have a quorum, or majority, of voters. This is to prevent “split brain” when the nodes are partitioned due to failure. In a five-node cluster, HA can tolerate two node failures because the remaining three nodes can still form a simple majority. The minimum number of nodes in a cluster is three.
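The quorum arithmetic above can be checked with a few lines of Python. This is an illustrative sketch of simple-majority math only; the function names are hypothetical and are not part of NorthStar.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of voters that forms a simple majority."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """Number of nodes that can fail while a majority still remains."""
    return cluster_size - majority(cluster_size)


def has_quorum(cluster_size: int, reachable_nodes: int) -> bool:
    """True if the reachable nodes still form a simple majority."""
    return reachable_nodes >= majority(cluster_size)


for size in (3, 5):
    print(size, majority(size), tolerated_failures(size))
# 3 2 1
# 5 3 2
```

Note that an even-sized cluster tolerates no more failures than the next smaller odd size (four nodes still tolerate only one failure), which is one reason odd cluster sizes are the usual choice for majority-based quorums.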

There is an option within the NorthStar Controller setup utility for configuring an HA cluster. First, configure the standalone servers; then configure the cluster.

See Configuring a NorthStar Cluster for High Availability in the NorthStar Controller Getting Started Guide for step-by-step cluster installation and configuration instructions.

Ports that Must be Allowed by External Firewalls

Some of the ports used by NorthStar must be allowed by external firewalls so that NorthStar Controller servers can communicate. See NorthStar Controller System Requirements in the NorthStar Controller Getting Started Guide for the list of these ports. The ports with the word cluster in their purpose descriptions pertain specifically to HA configuration.