Solution Architecture

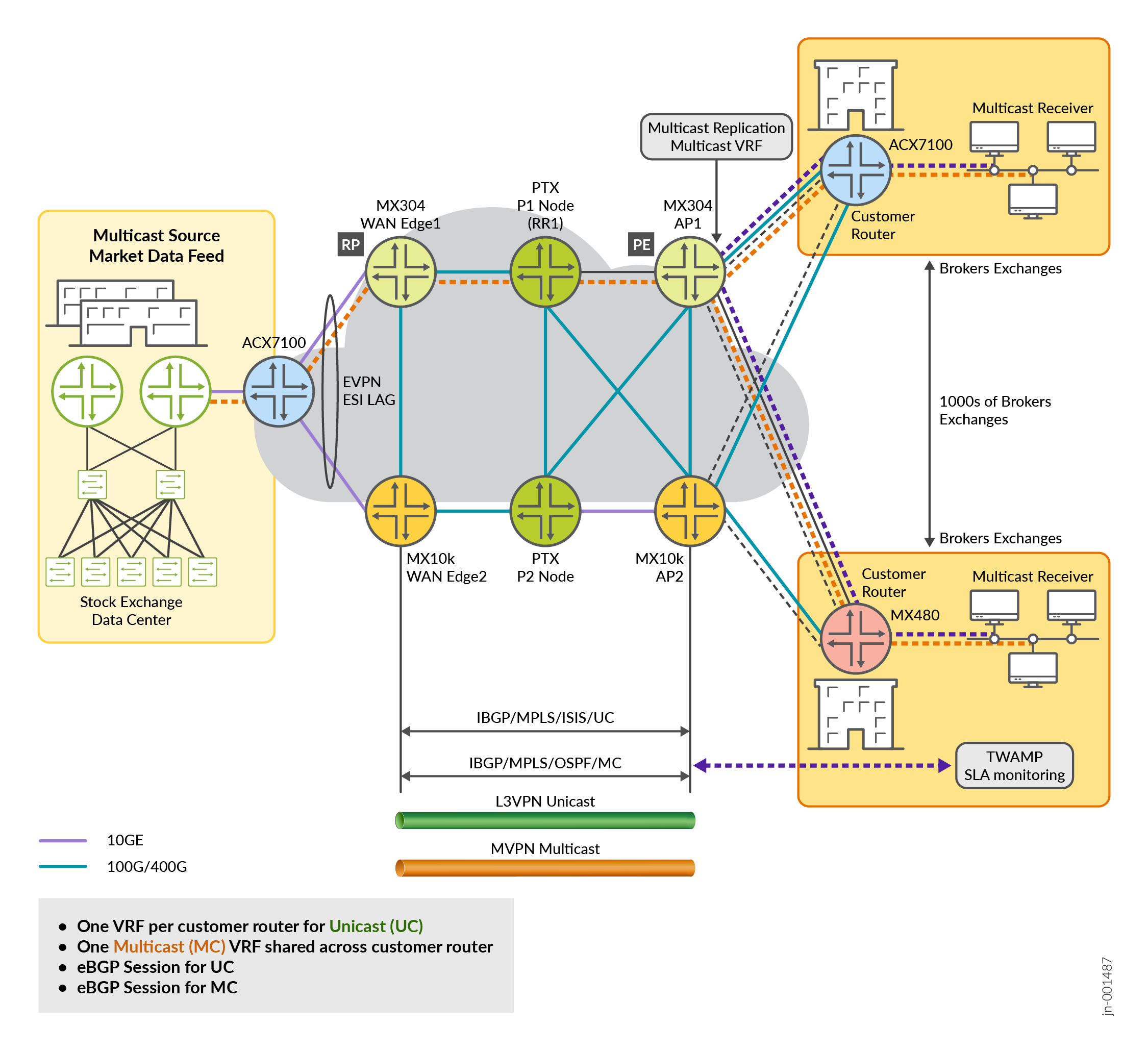

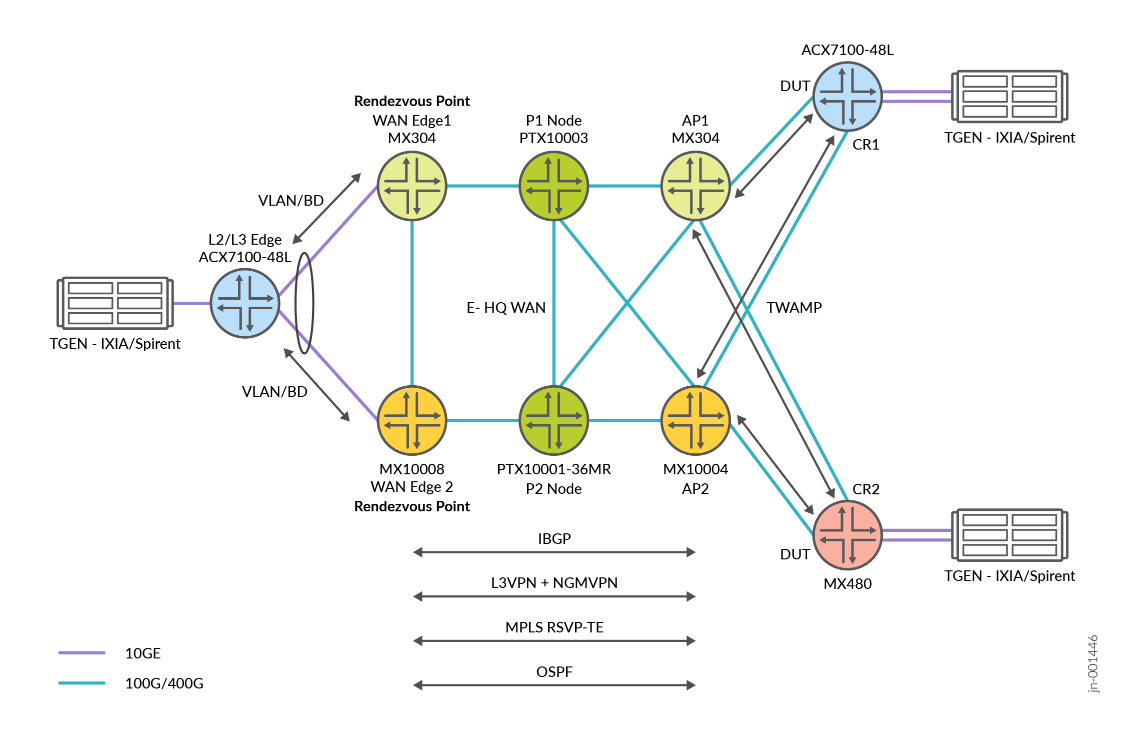

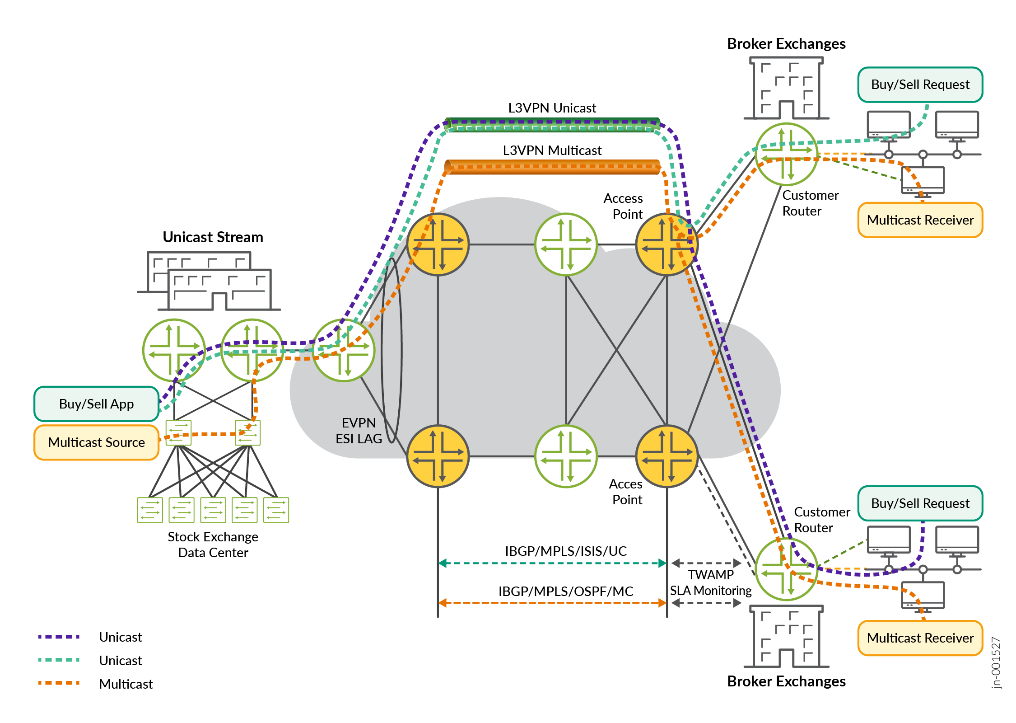

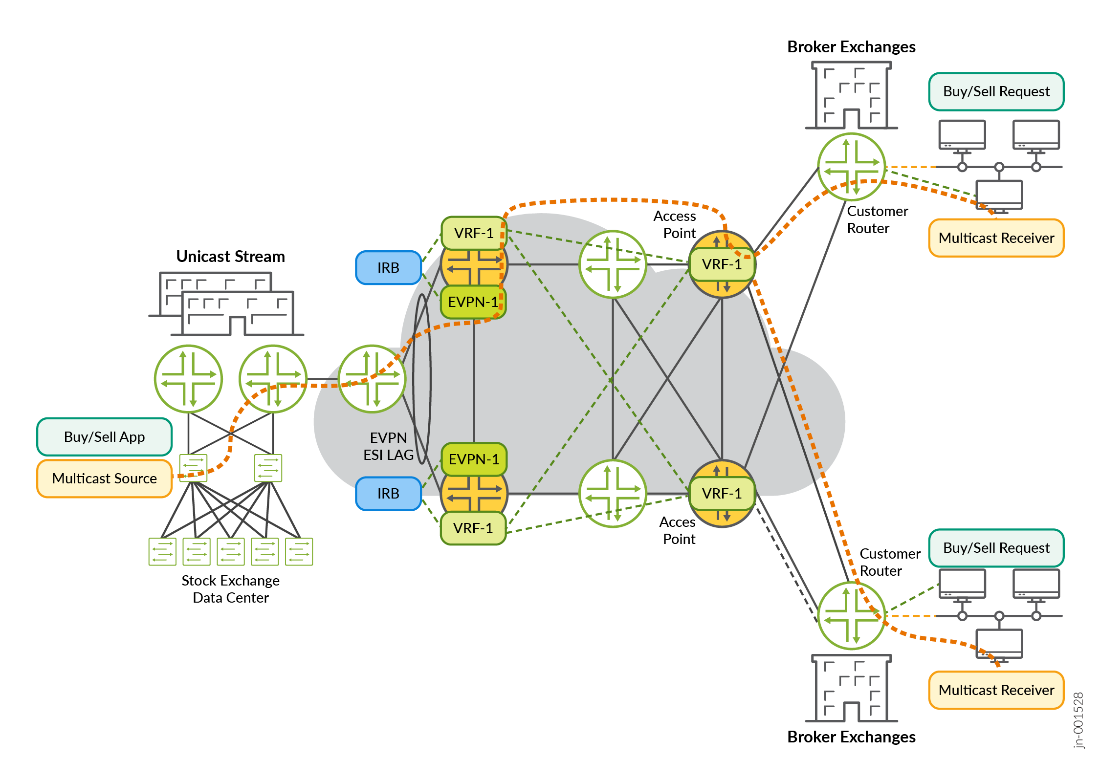

The architecture is designed to support latency-sensitive, deterministic, and ultra-fast trade execution. EVPN with L3VPN-NGMVPN is implemented to handle multicast at scale and to meet the performance requirements of the finance and stock exchange WAN. The topology of this WAN is shown in Figure 4. The stock exchange server is connected to the WAN edges through an L2 switch. This L2 switch acts as a bridge to the core SP network, whose devices in the PE role serve as Access Points (APs) and connect to the Customer Router (CR). The CR is in turn connected to the multicast subscribers (also called listeners or interested receivers). For more information, see Figure 1. The CRs act as the default gateway for the end-user Personal Computers (PCs), from which users access the finance and stock exchange application to place buy and sell orders.

This JVD for the Enterprise WAN of the stock exchange network has the following major components:

Next-Generation Multicast VPN (NGMVPN) with MPLS and RSVP-TE

Traditional multicast mechanisms struggle with operational complexity and scalability when serving thousands of flows across geographically dispersed sites. NGMVPN, which uses MPLS as its data plane, provides a more efficient, scalable, and resilient way to transport multicast market data over MPLS backbones. It enables exchanges to replicate multicast streams closer to the client edge, reducing core bandwidth consumption while ensuring deterministic delivery. The following are the significant roles of NGMVPN in this solution:

- NGMVPN distributes multicast traffic

- MPLS provides a label-switched network, creating dedicated and predictable paths

- Resource Reservation Protocol - Traffic Engineering (RSVP-TE) acts as a traffic transport enabler to reserve network resources in advance:

- Ensures consistent performance for critical applications

- Allows precise control over bandwidth and network path
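The bandwidth benefit of replicating closer to the client edge can be illustrated with a small sketch. It assumes a point-to-multipoint tree that replicates only at branch points. The topology, node names, and both functions are hypothetical and are not taken from the validated design. The sketch counts how many copies of one multicast packet cross each link when the ingress PE sends a separate copy per egress PE, and when a tree replicates where paths diverge.

```python
from collections import Counter

# Hypothetical paths from the ingress PE to each egress PE (illustrative names).
PATHS = {
    "PE2": ["PE1", "P1", "P2", "PE2"],
    "PE3": ["PE1", "P1", "P2", "PE3"],
    "PE4": ["PE1", "P1", "P3", "PE4"],
}

def links(path):
    """Return the directed links (hop pairs) along a path."""
    return list(zip(path, path[1:]))

def ingress_replication_load(paths):
    """One copy per receiver leaves the ingress PE, so shared links carry duplicates."""
    load = Counter()
    for path in paths.values():
        for link in links(path):
            load[link] += 1
    return load

def p2mp_tree_load(paths):
    """A tree carries one copy per link and replicates only where paths diverge."""
    load = Counter()
    for path in paths.values():
        for link in links(path):
            load[link] = 1
    return load

ingress = ingress_replication_load(PATHS)
tree = p2mp_tree_load(PATHS)
print("PE1->P1 copies with ingress replication:", ingress[("PE1", "P1")])
print("PE1->P1 copies with a P2MP tree:", tree[("PE1", "P1")])
print("total link copies:", sum(ingress.values()), "vs", sum(tree.values()))
```

In this toy topology the first core link carries three copies with ingress replication and one copy with the tree, which is the core bandwidth saving described above.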

Rendezvous Point (RP) Redundancy

The implementation of a Rendezvous Point (RP) in an environment with ESI-LAG is designed to ensure redundancy and seamless multicast delivery. Instead of binding the RP to a physical ESI-LAG interface, the RP is always placed on a loopback address, which both PE devices in the dual-homed pair advertise into the routing domain. This allows the RP to remain consistently reachable regardless of which PE is active. For high availability and load balancing, Juniper typically uses an Anycast RP model, in which both PEs are configured with the same RP address. In some EVPN deployments, the EVPN control plane is also relied on to propagate state.

Anycast Rendezvous Point (RP)

On Juniper Networks devices, Anycast RP is implemented to provide redundancy and load sharing in multicast environments that use PIM-SM. Instead of relying on a single Rendezvous Point (RP), multiple routers are configured with the same loopback IP address, which serves as the Anycast RP address for the domain. Each router in the PIM domain is then pointed to this common address as its RP. To ensure that all RPs are aware of active multicast sources, the RPs exchange source information so that receivers can join streams regardless of which physical RP they reach. This design removes the single point of failure associated with a traditional RP, balances multicast load across multiple routers, and provides seamless failover if one RP goes down, all while remaining fully standards-based and interoperable with other vendors.
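The way a shared RP address yields both load sharing and failover can be sketched as follows. This is only an illustration: the address, device names, and IGP costs are hypothetical, and the selection logic stands in for ordinary unicast routing toward the anycast loopback, not for any Junos OS implementation detail.

```python
ANYCAST_RP = "10.0.0.100"  # hypothetical loopback address shared by both RPs

# Hypothetical IGP cost from each PIM router to each device advertising ANYCAST_RP.
IGP_COST = {
    "CR1": {"PE1": 10, "PE2": 30},
    "CR2": {"PE1": 40, "PE2": 20},
}

def nearest_rp(router, alive):
    """Return the reachable RP instance with the lowest IGP cost, or None if all are down."""
    candidates = {rp: cost for rp, cost in IGP_COST[router].items() if rp in alive}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)

alive = {"PE1", "PE2"}
before = {router: nearest_rp(router, alive) for router in IGP_COST}

alive.discard("PE1")  # simulate the failure of one RP
after = {router: nearest_rp(router, alive) for router in IGP_COST}

print("RP", ANYCAST_RP, "before failure:", before)
print("RP", ANYCAST_RP, "after failure: ", after)
```

Before the failure the two routers reach different physical RPs, which is the load-sharing effect. After the failure both reach the surviving RP without any change to the RP address they are configured with.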

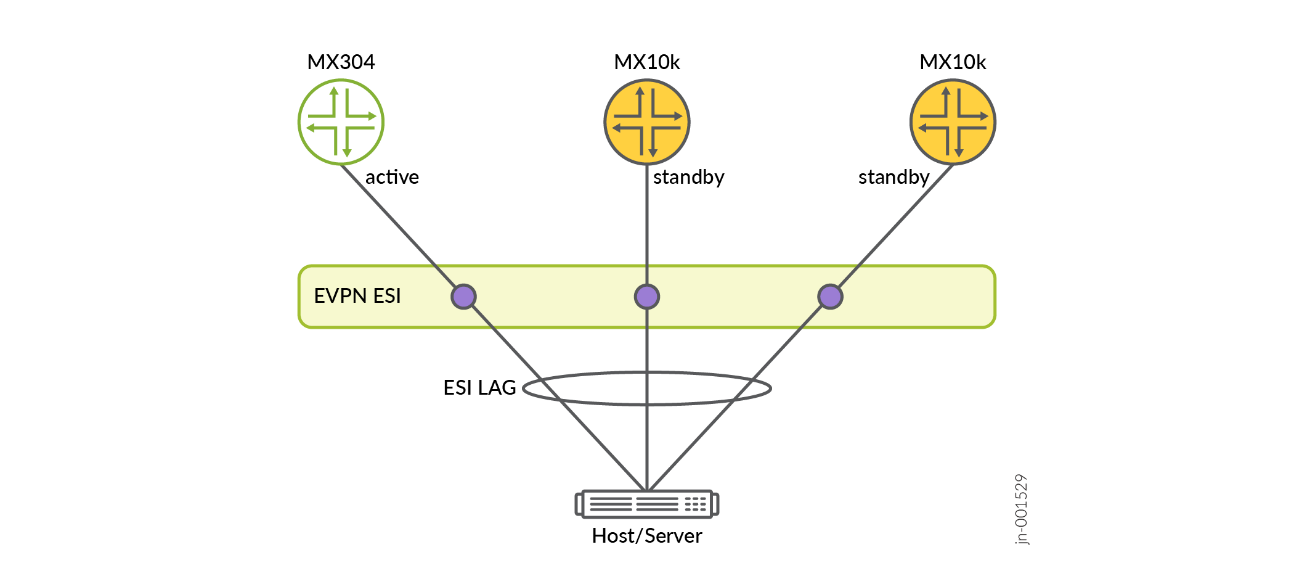

EVPN (Ethernet Virtual Private Network) with Single-Active

Imagine the stock exchange network as a critical highway system for financial transactions. EVPN acts as an intelligent traffic management system that can instantly reroute the traffic if one route is blocked.

In this implementation:

- The Single-Active ESI model ensures immediate failover

- One network path remains actively processing traffic

- A standby path is constantly ready to take over instantaneously

The Single-Active model provides more predictable packet forwarding in this solution. Active-active models are avoided here because they can reorder packets and make latency less consistent. Application-level redundancy helps the network maintain high performance.

If the primary network path experiences an interruption (like a hardware failure), the standby path becomes active instantaneously, ensuring uninterrupted network connectivity for critical financial transactions.
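One way to picture how a single active path is chosen and replaced is the default designated forwarder election described in RFC 7432, where the PEs attached to a segment are ordered by IP address and the VLAN ID modulo the number of PEs selects the forwarder. The sketch below assumes that default procedure; the source does not state which election method this design uses, and the addresses and VLAN ID are hypothetical.

```python
import ipaddress

def elect_df(pe_addresses, vlan_id):
    """Sort PEs by numeric IP address and pick the one at index (vlan_id mod N)."""
    ordered = sorted(pe_addresses, key=lambda addr: int(ipaddress.ip_address(addr)))
    return ordered[vlan_id % len(ordered)] if ordered else None

# Hypothetical dual-homed pair attached to the same Ethernet segment.
pes = ["192.0.2.2", "192.0.2.1"]
vlan_id = 100

active = elect_df(pes, vlan_id)

# Simulate the failure of the active PE and re-run the election among survivors.
survivors = [pe for pe in pes if pe != active]
new_active = elect_df(survivors, vlan_id)

print("active PE:", active)
print("active PE after failure:", new_active)
```

Only one PE forwards for the VLAN at any time, so packets from a flow always take the same path until a failure triggers a new election.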

The following are some of the benefits of using EVPN in this solution.

EVPN Efficient MAC Address Management

- Prevents MAC address flapping

- Provides consistent MAC address learning across network devices

- Supports large-scale network environments

- Enables efficient handling of MAC address mobility

EVPN Improved Convergence Times - In critical environments like financial trading or emergency communications, fast convergence is essential. EVPN provides:

- Extremely fast failover times (milliseconds)

- Minimal network disruption during topology changes

- Predictable and consistent recovery behavior

- Reduction in potential service interruptions

EVPN Protocol Flexibility

- Supports multiple underlying transport mechanisms

- Works with MPLS, IP, and other transport protocols

- Provides vendor-neutral implementation options

- Enables gradual network evolution

- Supports mixed-vendor network environments

Layer 3 Virtual Private Network

Layer 3 Virtual Private Network (L3VPN) in this solution provides connectivity from a brokerage customer to the stock exchange server for placing orders to buy and sell securities such as stocks, options, and bonds. In a stock exchange, a transaction can be viewed as shown below: buy and sell orders are carried as unicast traffic, while updates and current stock prices are sent as multicast traffic.

Stock Transaction: unicast (sell) → multicast (advertise) → unicast (buy) → updated multicast (sold)

Figure 4 shows the flow of unicast and multicast traffic designed into this solution. Figure 5 provides a more detailed view of the finance and stock exchange data center side, where EVPN instances are used for all L2 traffic. With IRB, Layer 3 traffic is then steered to L3VPNs for unicast and multicast.

Two-Way Active Measurement Protocol (TWAMP) SLA Monitoring

TWAMP SLA monitoring is like a network health inspector constantly checking every critical network path. It:

- Measures network performance in real time

- Tracks metrics such as:

- Latency

- Packet loss

- Jitter

- Sends immediate alerts for performance degradation
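The metrics listed above can be derived from a series of probe results, as in the following sketch. The sample values, the threshold defaults, and the function names are assumptions made for illustration. Jitter is computed here as the mean absolute difference between consecutive received round-trip times, which is one common simplification and not necessarily the formula a given TWAMP implementation reports.

```python
from statistics import mean

def summarize(rtts_ms):
    """Summarize probe results; None marks a probe that received no reply."""
    if not rtts_ms:
        raise ValueError("at least one probe result is required")
    received = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(received)) / len(rtts_ms)
    latency = mean(received) if received else None
    # Differences are taken between consecutive *received* samples.
    deltas = [abs(b - a) for a, b in zip(received, received[1:])]
    jitter = mean(deltas) if deltas else 0.0
    return {"latency_ms": latency, "jitter_ms": jitter, "loss_pct": loss_pct}

def alerts(summary, max_latency_ms=5.0, max_jitter_ms=1.0, max_loss_pct=0.0):
    """Return the names of metrics that exceed their (hypothetical) thresholds."""
    raised = []
    if summary["latency_ms"] is not None and summary["latency_ms"] > max_latency_ms:
        raised.append("latency")
    if summary["jitter_ms"] > max_jitter_ms:
        raised.append("jitter")
    if summary["loss_pct"] > max_loss_pct:
        raised.append("loss")
    return raised

samples = [2.0, 2.5, None, 2.0, 3.5]  # hypothetical round-trip times in ms
summary = summarize(samples)
raised = alerts(summary)
print(summary)
print("alerts:", raised)
```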

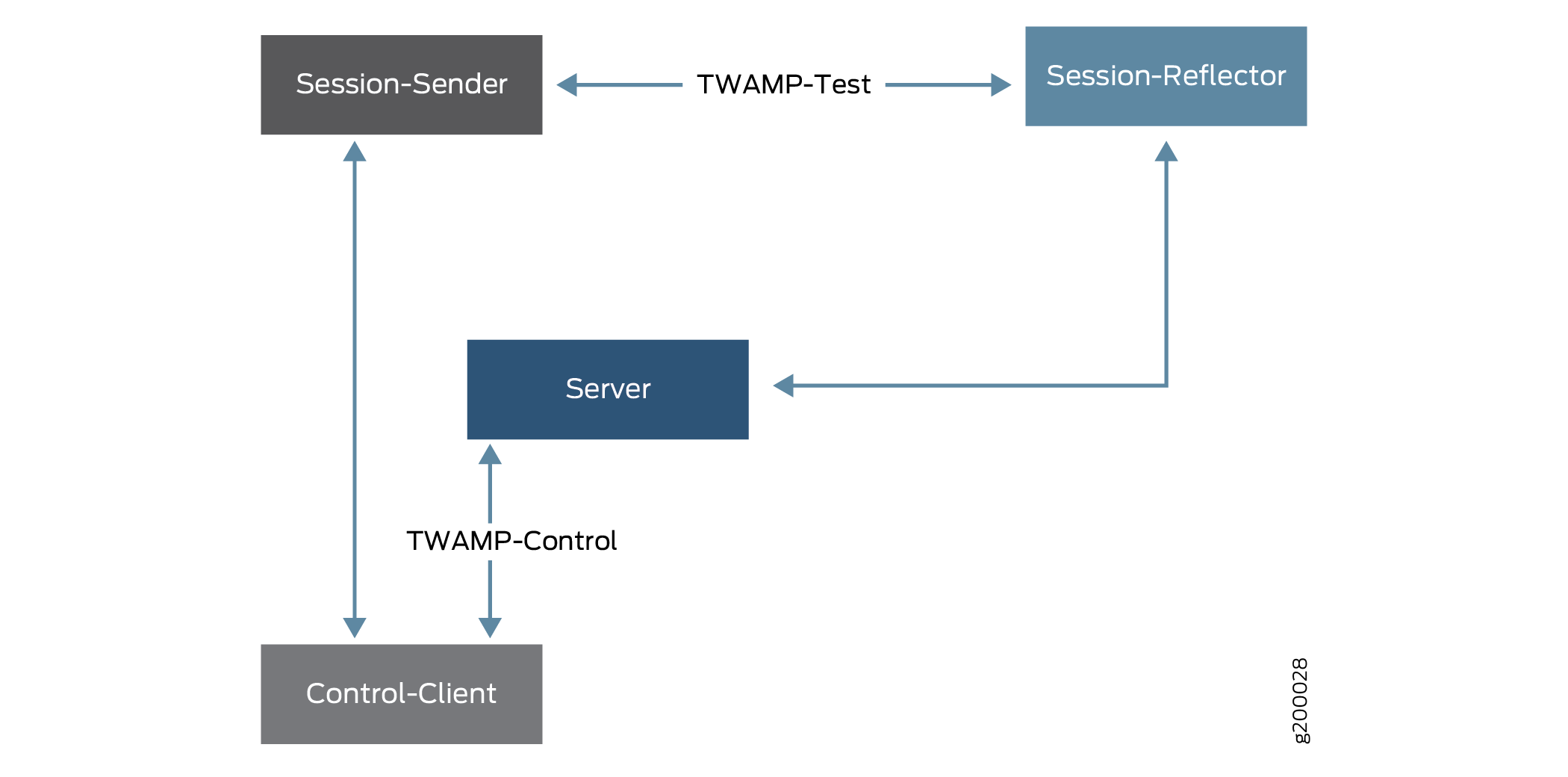

Usually, TWAMP operates between interfaces on two devices playing specific roles. TWAMP is often used to check Service Level Agreement (SLA) compliance, and the TWAMP feature is often presented in that context. TWAMP uses two related protocols, running between several defined elements:

- TWAMP-Control—Initiates, starts, and ends test sessions. The TWAMP-Control protocol runs between a Control-Client element and a Server element.

- TWAMP-Test—Exchanges test packets between two TWAMP elements. The TWAMP-Test protocol runs between a Session-Sender element and a Session-Reflector element.

Figure 6 shows these four elements.

The TWAMP client-server architecture is implemented as follows:

TWAMP client

- Control-Client sets up, starts and stops the TWAMP test sessions.

- Session-Sender creates TWAMP test packets that are sent to the Session-Reflector in the TWAMP server.

TWAMP server

- Session-Reflector sends back a measurement packet when a test packet is received but does not maintain a record of such information.

- Server manages one or more sessions with the TWAMP client and listens for control messages on a TCP port.
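The exchange between the Session-Sender and the Session-Reflector can be sketched with four timestamps per test packet. The class and function names below are hypothetical and the timestamps are plain integers in microseconds; real TWAMP-Test packets carry more fields than this. The round-trip delay is the total elapsed time seen by the sender minus the time the packet spent inside the reflector.

```python
from dataclasses import dataclass

@dataclass
class ProbePacket:
    seq: int
    t1_sent: int            # Session-Sender transmit time
    t2_received: int = 0    # Session-Reflector receive time
    t3_reflected: int = 0   # Session-Reflector transmit time

def reflect(packet, receive_time, processing_delay):
    """Session-Reflector: stamp the packet and return it without keeping a record."""
    packet.t2_received = receive_time
    packet.t3_reflected = receive_time + processing_delay
    return packet

def round_trip_delay(packet, t4_returned):
    """Session-Sender: elapsed time minus the time spent inside the reflector."""
    return (t4_returned - packet.t1_sent) - (packet.t3_reflected - packet.t2_received)

# Hypothetical timeline in microseconds: 150 out, 20 in the reflector, 150 back.
probe = ProbePacket(seq=1, t1_sent=1000)
probe = reflect(probe, receive_time=1150, processing_delay=20)
rtt_us = round_trip_delay(probe, t4_returned=1320)
print("round-trip delay (us):", rtt_us)
```

Each subtraction uses two timestamps taken on the same device, so the round-trip figure does not depend on the two clocks being synchronized.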

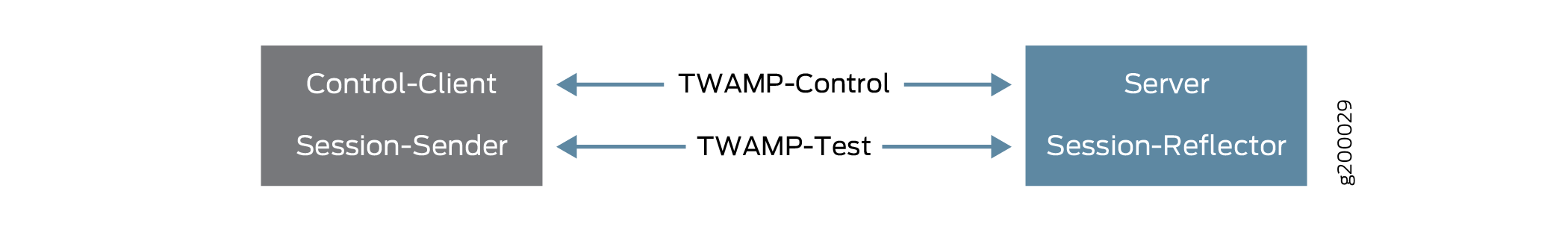

Figure 7 shows how these elements are packaged into the TWAMP client and TWAMP server processes.

Stock Exchange Significance: Ensures that trading platforms maintain the highest possible performance standards, which is critical for millisecond-sensitive financial transactions.

Class of Service with Multifield Classifiers

The Class of Service (CoS) framework provides a powerful mechanism to classify, prioritize, and manage network traffic based on application or service requirements. It ensures predictable performance during congestion by categorizing packets into multiple forwarding classes and queues, each governed by defined scheduling and drop policies. Within this framework, the multifield (MF) classifier plays a crucial role by enabling granular classification decisions that go beyond simple DSCP or EXP bit inspection. It examines multiple packet header fields, such as source and destination IP addresses, TCP/UDP ports, protocol types, VLAN tags, or ingress interfaces. This allows Juniper routers to identify traffic patterns accurately and assign them to the appropriate forwarding classes.

For example, the MF classifier can recognize real-time traffic like voice and video streams based on UDP port ranges and classify them into high-priority queues such as expedited-forwarding or assured-forwarding, while default data flows are directed to best-effort classes. Once packets are classified, they are processed through schedulers, shapers, and drop profiles that determine transmission priority and bandwidth allocation per queue. This ensures that delay-sensitive traffic, such as VoIP or video conferencing, receives strict priority treatment, while data or research traffic is handled fairly using weighted queuing.
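The matching logic described above can be sketched as an ordered list of terms, where the first term whose fields all match decides the forwarding class. This is a simplified model, not Junos OS configuration: the term names, prefixes, and UDP port ranges are hypothetical, and only three header fields are checked.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

@dataclass
class Packet:
    src: str
    dst: str
    protocol: str
    dst_port: int

# Hypothetical terms, evaluated in order; the first full match wins.
TERMS = [
    {"name": "voice", "protocol": "udp", "dst_net": "10.2.0.0/16",
     "ports": range(16384, 16484), "forwarding_class": "expedited-forwarding"},
    {"name": "video", "protocol": "udp", "dst_net": "10.2.0.0/16",
     "ports": range(20000, 20100), "forwarding_class": "assured-forwarding"},
]

def classify(packet):
    """Return the forwarding class of the first matching term, else best-effort."""
    for term in TERMS:
        if (packet.protocol == term["protocol"]
                and ip_address(packet.dst) in ip_network(term["dst_net"])
                and packet.dst_port in term["ports"]):
            return term["forwarding_class"]
    return "best-effort"

voice = classify(Packet("10.1.1.1", "10.2.2.2", "udp", 16400))
video = classify(Packet("10.1.1.1", "10.2.2.2", "udp", 20050))
web = classify(Packet("10.1.1.1", "10.2.2.2", "tcp", 443))
print(voice, video, web)
```

A packet must satisfy every field in a term to match, which is what distinguishes this from a classifier that looks at a single code point.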

The multifield classifier is the most granular and flexible classification mechanism in Junos OS class of service. Unlike simple code-point classifiers (based only on DSCP or EXP bits), an MF classifier examines multiple fields in the packet header simultaneously.

Class of Service (CoS) in Junos OS is designed to manage and prioritize network traffic, ensuring predictable performance even during congestion. It provides differentiated handling of packets based on user-defined policies aligning with business-critical priorities such as real-time applications, voice/video, or control-plane traffic.

The CoS process typically follows this logical sequence:

Classifier → Rewrite → Scheduler → Drop Profile → Queuing → Transmission

Class of Service in Junos OS performs the following functions:

- Identifies and prioritizes network traffic based on multiple parameters:

- Source IP address

- Destination IP address

- Protocol type

- Application type

- Ensures mission-critical traffic (like trading transactions) gets the highest priority

- Allocates less bandwidth to less critical traffic
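The combination of strict priority for mission-critical traffic and a smaller share for everything else can be sketched as a simple bandwidth allocation. The class names, demands, weights, and link speed are hypothetical. The function makes a single pass and does not hand unused share to other classes, which a real scheduler typically would.

```python
def allocate(link_mbps, demand, strict, weights):
    """Serve the strict-priority class first, then split the remainder by weight."""
    grant = {}
    grant[strict] = min(demand[strict], link_mbps)
    remaining = link_mbps - grant[strict]
    total_weight = sum(weights.values())
    for forwarding_class, weight in weights.items():
        share = remaining * weight / total_weight
        # A class never receives more than it asks for; leftover share is not reused.
        grant[forwarding_class] = min(demand[forwarding_class], share)
    return grant

# Hypothetical offered load in Mbps on a 1000 Mbps link.
demand = {"trading": 300, "video": 500, "bulk": 900}
grant = allocate(1000, demand, strict="trading", weights={"video": 3, "bulk": 1})
print(grant)
```

In this example the trading class receives its full demand before any other class is served, and the bulk class is limited to its weighted share of what remains.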