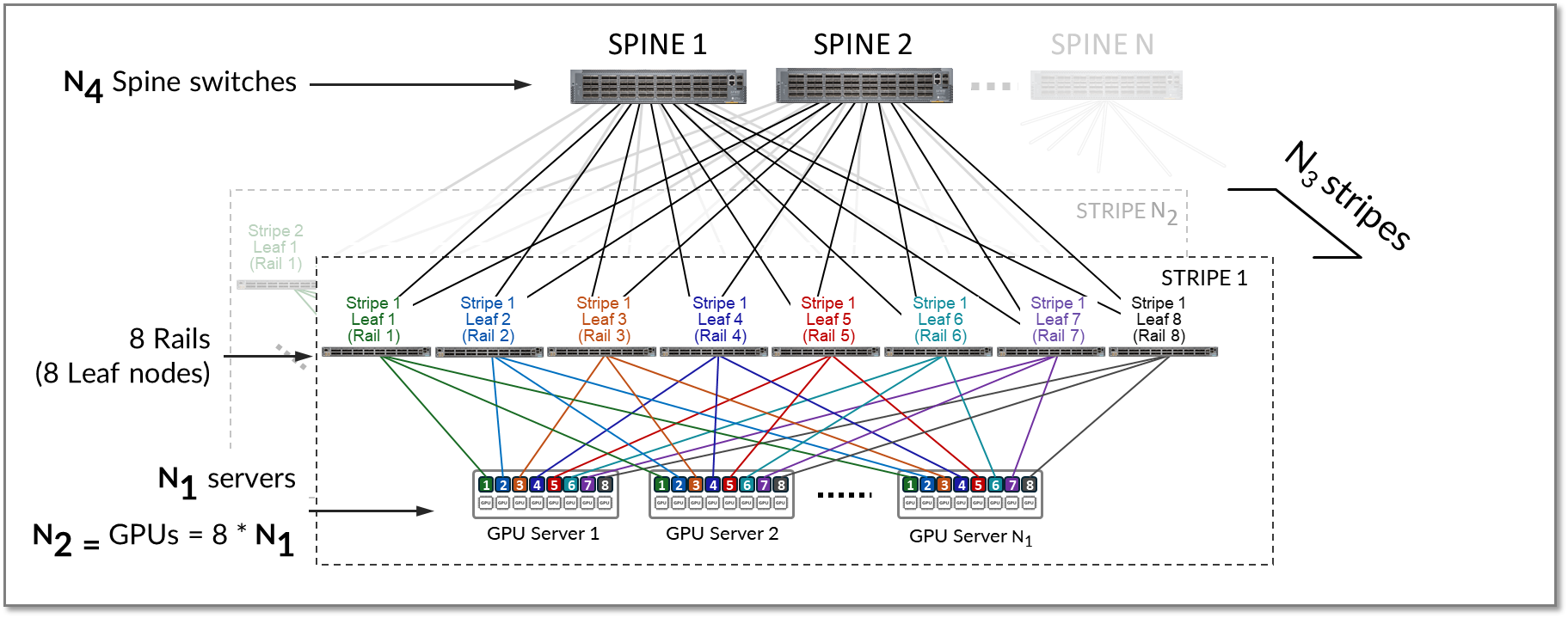

Backend GPU Rail Optimized Stripe Architecture

As previously described, a Rail Optimized Stripe Architecture provides efficient data transfer between GPUs, especially during computationally intensive tasks such as AI Large Language Model (LLM) training workloads, where seamless data transfer is necessary to complete the tasks within a reasonable timeframe. A Rail Optimized topology aims to maximize performance by minimizing bandwidth contention, latency, and network interference, so that data can be transmitted efficiently and reliably across the network.

In a Rail Optimized Stripe Architecture there are two important concepts: rail and stripe.

The GPUs on a server are numbered 1-8, where the number represents the GPU’s position in the server, as shown in Figure 6. This number is sometimes called the rank: more specifically, the "local rank" when it is relative to the other GPUs in the same server, or the "global rank" when it is relative to all the GPUs (across multiple servers) assigned to a single job.

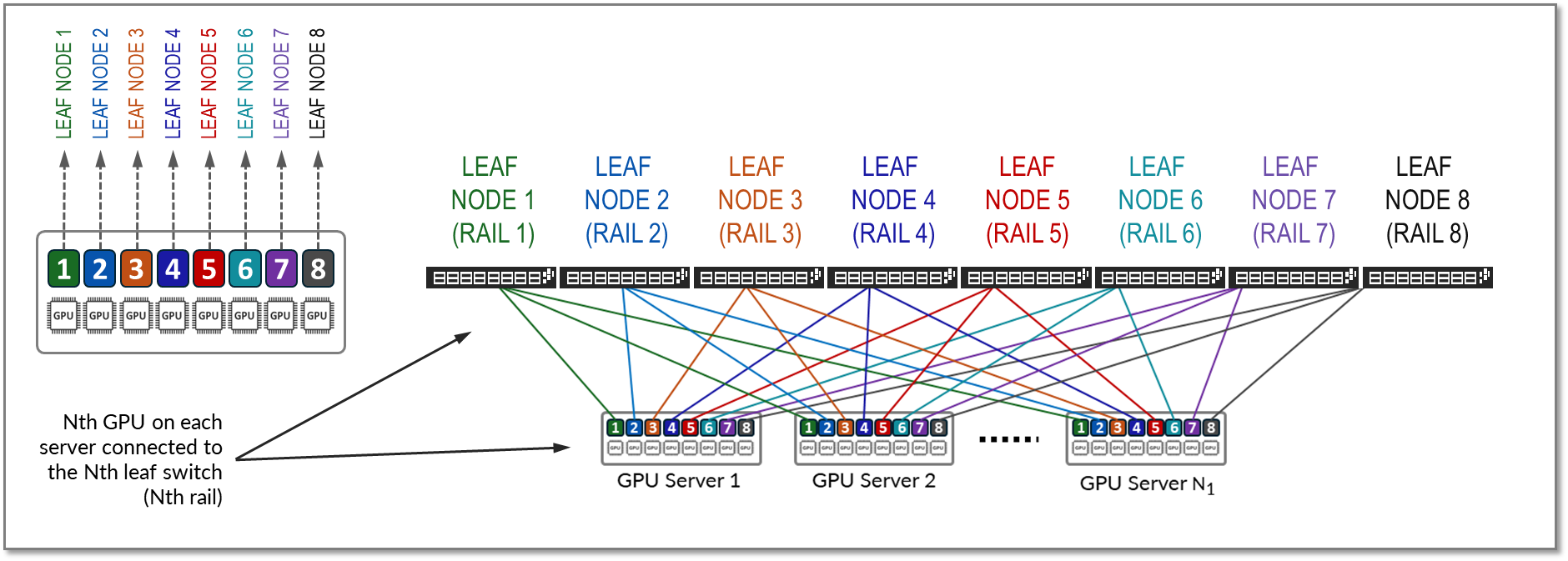
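The relationship between local and global rank can be sketched in a few lines of Python. This is a minimal illustration, not part of the design itself: the 1-based numbering follows the text above, while the 0-based `server_index` and the server-by-server assignment of global ranks are assumptions made for the example, and the function names are hypothetical.

```python
GPUS_PER_SERVER = 8  # from the text: GPUs on a server are numbered 1-8


def global_rank(server_index: int, local_rank: int) -> int:
    """Return a 1-based global rank for a GPU within a job.

    Assumption: servers are indexed from 0 in job order and global ranks
    are handed out server by server. A real job scheduler may differ.
    """
    if not 1 <= local_rank <= GPUS_PER_SERVER:
        raise ValueError("local_rank must be between 1 and 8")
    if server_index < 0:
        raise ValueError("server_index must be non-negative")
    return server_index * GPUS_PER_SERVER + local_rank


def local_rank_of(global_rank_value: int) -> int:
    """Recover the 1-based local rank (position in the server)."""
    if global_rank_value < 1:
        raise ValueError("global rank must be 1 or greater")
    return (global_rank_value - 1) % GPUS_PER_SERVER + 1


if __name__ == "__main__":
    # GPU in position 3 of the second server (server_index 1) of a job.
    rank = global_rank(server_index=1, local_rank=3)
    print(rank, local_rank_of(rank))
```

Under these assumptions, the local rank is also the number of the rail the GPU attaches to.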

A rail connects GPUs of the same order across one of the leaf nodes in the fabric; that is, rail N connects the GPUs in position N on all the servers to leaf node N, as shown in Figure 9.

Figure 9: Rails in a Rail Optimized Architecture

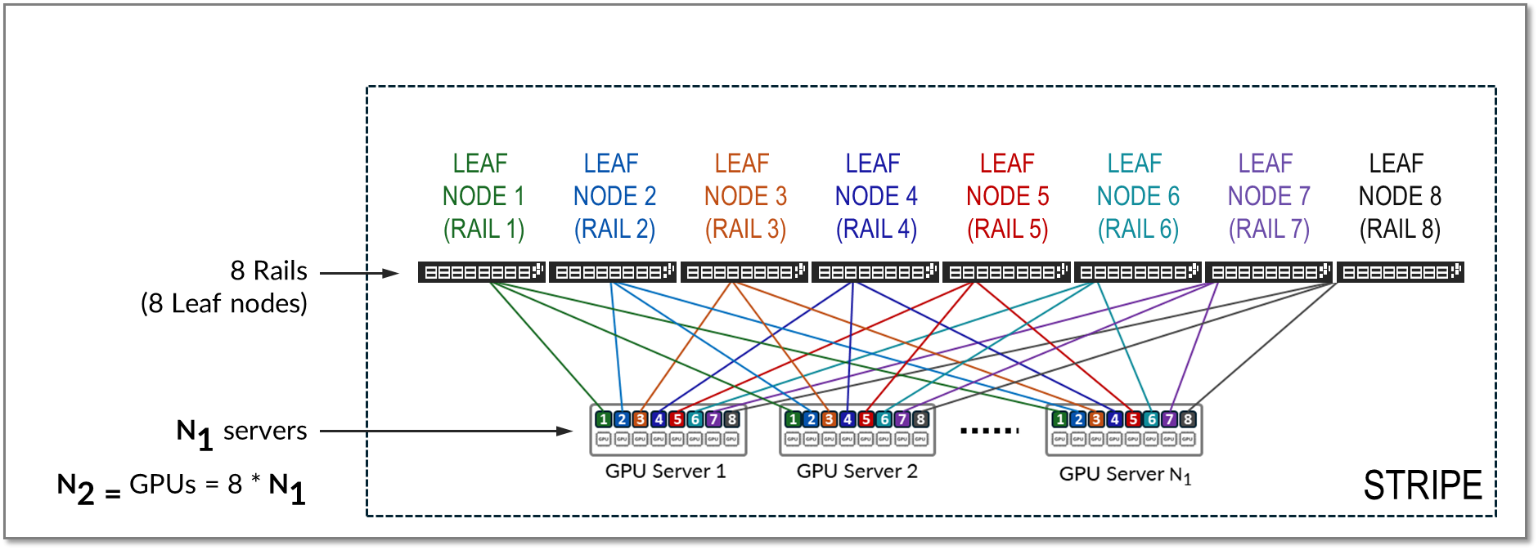
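As a rough sketch of the rail definition, the following Python groups GPUs by position so that each rail number maps to the GPUs attached to the leaf node of the same number. The server names and the `build_rails` helper are hypothetical and only illustrate the mapping within a single stripe.

```python
from collections import defaultdict

GPUS_PER_SERVER = 8  # from the text: GPU positions 1-8 in each server


def build_rails(server_names):
    """Map each rail number to the (server, gpu_position) pairs on it.

    Rail N, and therefore leaf node N, collects the GPU in position N
    of every server. Server names are illustrative placeholders.
    """
    rails = defaultdict(list)
    for server in server_names:
        for position in range(1, GPUS_PER_SERVER + 1):
            rails[position].append((server, position))
    return dict(rails)


if __name__ == "__main__":
    rails = build_rails(["server-1", "server-2", "server-3"])
    for rail, members in rails.items():
        print(f"rail {rail} -> leaf {rail}: {members}")
```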

A stripe is a design module or building block composed of multiple rails; it includes a number of Leaf nodes and GPU servers.

Figure 10: Stripes in a Rail Optimized Architecture

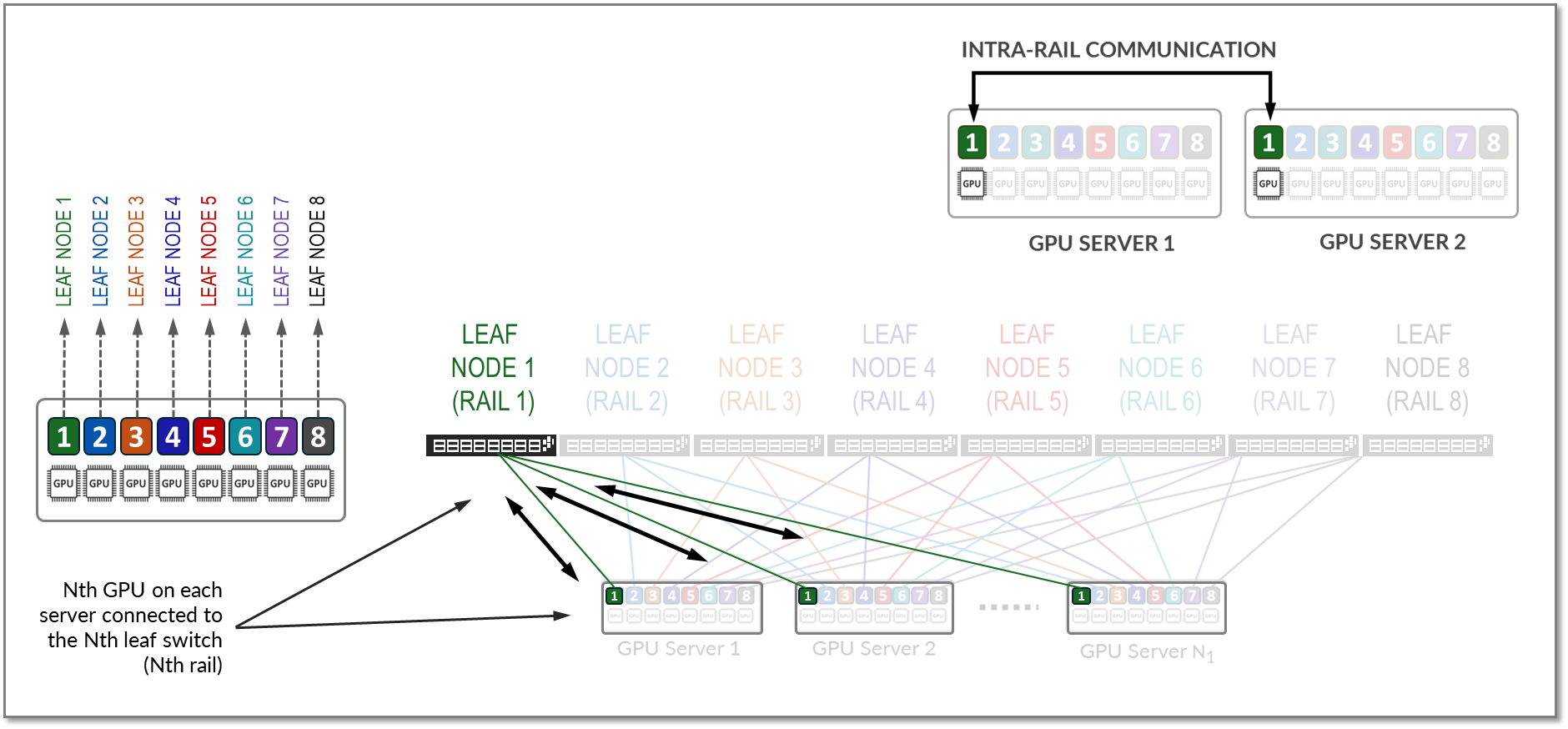

All traffic between GPUs of the same rank (intra-rail traffic) is forwarded at the leaf node level, as shown in Figure 11.

Figure 11: Intra-rail GPU to GPU traffic example.

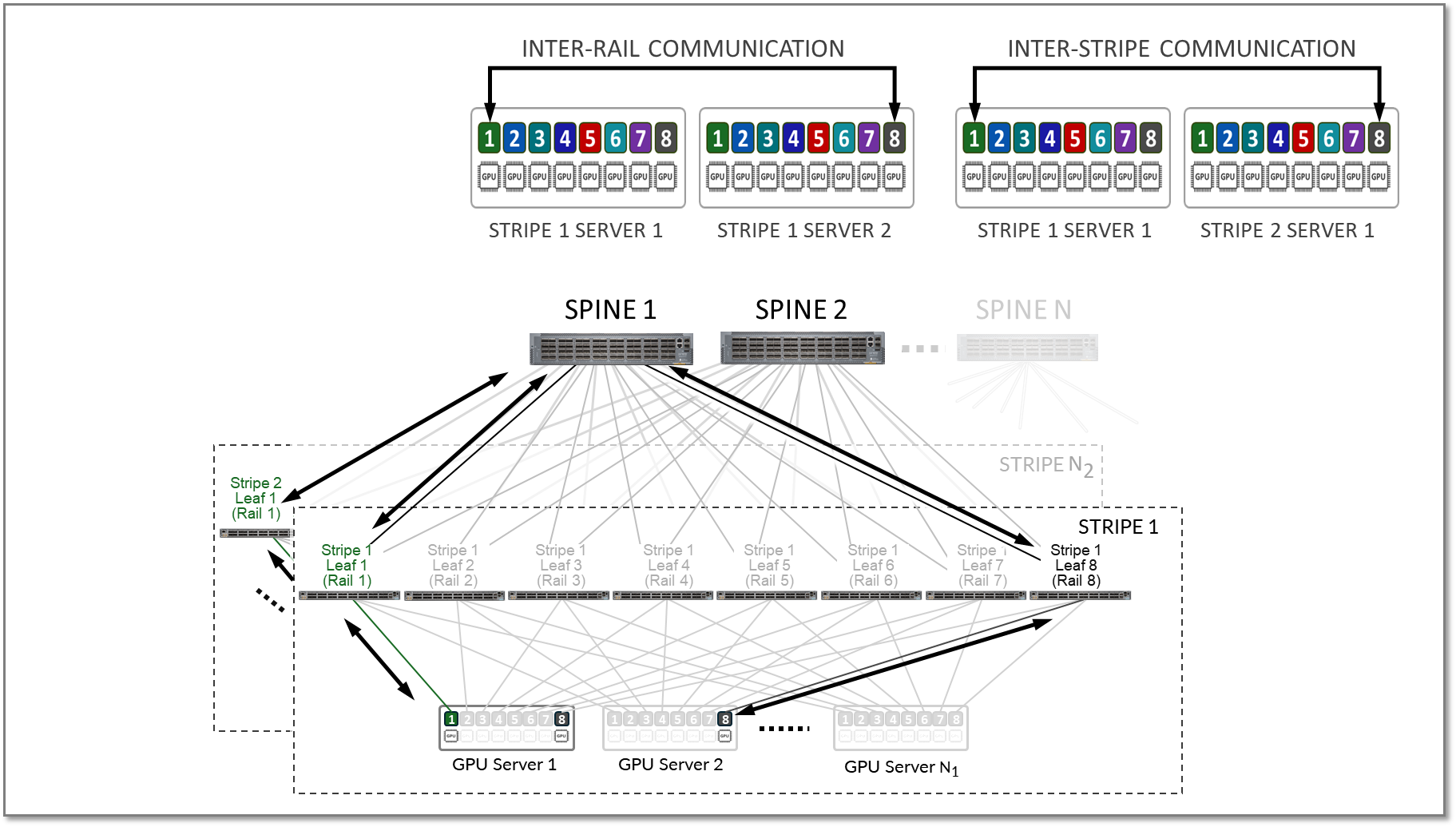

A stripe can be replicated to scale up the number of servers (N1) and GPUs (N2) in an AI cluster. Multiple stripes (N3) are then connected across Spine switches as shown in Figure 12.

Figure 12: Multiple stripes connected via Spine nodes
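The scaling arithmetic can be sketched as follows. The function assumes every stripe is identical, that each server holds 8 GPUs, and that a stripe has one leaf node per rail (8 leaves); `servers_per_stripe` is a free parameter here because the text does not state a value, and the names `ClusterSize` and `cluster_size` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterSize:
    servers: int  # N1 in the text
    gpus: int     # N2 in the text
    leaves: int   # assumes one leaf node per rail in each stripe


def cluster_size(stripes: int, servers_per_stripe: int,
                 gpus_per_server: int = 8) -> ClusterSize:
    """Count servers, GPUs, and leaf nodes for `stripes` identical stripes.

    `stripes` corresponds to N3 in the text. Spine nodes are not counted
    because their number depends on details not given here.
    """
    if stripes < 1 or servers_per_stripe < 1 or gpus_per_server < 1:
        raise ValueError("all arguments must be positive")
    servers = stripes * servers_per_stripe
    return ClusterSize(
        servers=servers,
        gpus=servers * gpus_per_server,
        leaves=stripes * gpus_per_server,
    )


if __name__ == "__main__":
    # Illustrative values only: 2 stripes of 16 servers each.
    print(cluster_size(stripes=2, servers_per_stripe=16))
```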

Both inter-rail and inter-stripe traffic is forwarded across the spine nodes, as shown in Figure 13.


Figure 13: Inter-rail and inter-stripe GPU to GPU traffic example.
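The forwarding rules described in this section can be summarized in a small Python sketch: traffic between GPUs on the same rail of the same stripe stays at the leaf level, and all other fabric traffic crosses the spines. The `Gpu` tuple and `fabric_path` function are hypothetical, and the sketch only models traffic that enters the backend fabric as described above.

```python
from typing import List, NamedTuple


class Gpu(NamedTuple):
    stripe: int  # stripe the GPU's server belongs to
    server: int  # server index within the stripe
    rail: int    # GPU position in the server, 1-8; also its rail number


def fabric_path(src: Gpu, dst: Gpu) -> List[str]:
    """Return the node tiers traversed between two GPUs in the fabric.

    Intra-rail traffic (same stripe, same rail) is forwarded by a single
    leaf node. Inter-rail and inter-stripe traffic goes leaf-spine-leaf.
    """
    if src == dst:
        raise ValueError("source and destination are the same GPU")
    if src.stripe == dst.stripe and src.rail == dst.rail:
        return ["leaf"]
    return ["leaf", "spine", "leaf"]


if __name__ == "__main__":
    a = Gpu(stripe=1, server=1, rail=3)
    print(fabric_path(a, Gpu(stripe=1, server=2, rail=3)))  # intra-rail
    print(fabric_path(a, Gpu(stripe=1, server=2, rail=5)))  # inter-rail
    print(fabric_path(a, Gpu(stripe=2, server=1, rail=3)))  # inter-stripe
```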