Overview of Multicast Forwarding with IGMP Snooping or MLD Snooping in an EVPN-VXLAN Environment

Internet Group Management Protocol (IGMP) snooping and Multicast Listener Discovery (MLD) snooping constrain multicast traffic in a broadcast domain to interested receivers and multicast devices. In an environment with a significant volume of multicast traffic, using IGMP or MLD snooping preserves bandwidth because multicast traffic is forwarded only on those interfaces where there are multicast listeners. IGMP snooping optimizes IPv4 multicast traffic flow. MLD snooping optimizes IPv6 multicast traffic flow.

Starting in Junos OS Release 21.2R1, we support optimized inter-subnet multicast (OISM) routing and forwarding in EVPN-VXLAN edge-routed bridging (ERB) overlay networks. See Optimized Inter-Subnet Multicast in EVPN Networks for full details on OISM configuration and operation. You configure IGMP snooping (or, if supported, MLD snooping) on the fabric leaf devices as part of OISM configuration. OISM enables efficient multicast routing and forwarding for both internal and external multicast sources and receivers in ERB overlay fabrics.

Starting with Junos OS Release 22.3R1, EX4400 switches support IPv4 and IPv6 multicast traffic in an IPv6 EVPN-VXLAN overlay with an IPv6 underlay.

Starting with Junos OS Release 17.2R1, QFX10000 switches support IGMP snooping in an Ethernet VPN (EVPN)-Virtual Extensible LAN (VXLAN) ERB overlay (EVPN-VXLAN topology with a collapsed IP fabric).

Starting with Junos OS Release 17.3R1, QFX10000 switches support exchanging traffic between multicast sources and receivers in an EVPN-VXLAN ERB overlay using IGMP. These switches also support multicast traffic flow from and to sources and receivers in an external Protocol Independent Multicast (PIM) domain. A Layer 2 multicast VLAN (MVLAN) and associated IRB interfaces enable the exchange of multicast traffic between these two domains.

IGMP snooping support in an EVPN-VXLAN network is available on the following switches in the QFX5000 line, as described below. In releases before Junos OS Releases 18.4R2 and 19.1R2, with IGMP snooping enabled, these switches constrain flooding only for multicast traffic coming in on the VXLAN tunnel network ports; they still flood multicast traffic coming in from an access interface to all other access and network interfaces:

- Starting with Junos OS Release 18.1R1, QFX5110 switches support IGMP snooping as leaf devices in an EVPN-VXLAN centrally-routed bridging (CRB) overlay (EVPN-VXLAN topology with a two-layer IP fabric) for forwarding multicast traffic within VLANs. You can’t configure IRB interfaces on a VXLAN with IGMP snooping for forwarding multicast traffic between VLANs. (You can only configure and use IRB interfaces for unicast traffic.)

- Starting with Junos OS Release 18.4R2 (but not Junos OS Releases 19.1R1 and 19.2R1), QFX5120-48Y switches support IGMP snooping as leaf devices in an EVPN-VXLAN CRB overlay.

- Starting with Junos OS Release 19.1R1, QFX5120-32C switches support IGMP snooping as leaf devices in an EVPN-VXLAN CRB overlay.

- Starting in Junos OS Releases 18.4R2 and 19.1R2, selective multicast forwarding is enabled by default on QFX5110 and QFX5120 switches when you configure IGMP snooping in EVPN-VXLAN networks, further constraining multicast traffic flooding. With IGMP snooping and selective multicast forwarding, these switches send the multicast traffic only to interested receivers in both the EVPN core and on the access side for multicast traffic coming in either from an access interface or an EVPN network interface.

- Starting with Junos OS Release 20.2R1, QFX5120-48T switches support IGMP snooping as leaf devices in multihomed EVPN-VXLAN CRB overlay fabrics.

- Starting with Junos OS Release 20.4R1, QFX5120-48YM switches support IGMP snooping as leaf devices in multihomed EVPN-VXLAN CRB overlay fabrics.

Starting with Junos OS Release 19.3R1, EX9200 switches, MX Series routers, and vMX virtual routers support IGMP version 2 (IGMPv2) and IGMP version 3 (IGMPv3), IGMP snooping, selective multicast forwarding, external PIM gateways, and external multicast routers with an EVPN-VXLAN CRB overlay.

Starting in Junos OS Release 20.4R1, in EVPN-VXLAN CRB overlay fabrics, QFX5110 and QFX5120 switches and the QFX10000 line of switches support IGMPv3 with IGMP snooping for IPv4 multicast traffic, and MLD version 1 (MLDv1) and MLD version 2 (MLDv2) with MLD snooping for IPv6 multicast traffic. You can configure these switches to process IGMPv3 and MLDv2 source-specific multicast (SSM) reports, but these devices can’t process both SSM reports and any-source multicast (ASM) reports at the same time. When you configure them to operate in SSM mode, these devices drop any ASM reports. When they aren’t configured to operate in SSM mode (the default setting), these devices process ASM reports but drop IGMPv3 and MLDv2 SSM reports.

Unless called out explicitly, the information in this topic applies to IGMPv2, IGMPv3, MLDv1, and MLDv2 on the devices that support these protocols in the following IP fabric architectures:

- EVPN-VXLAN ERB overlay

- EVPN-VXLAN CRB overlay

On a Juniper Networks switching device, for example, a QFX10000 switch, you can configure a VLAN. On a Juniper Networks routing device, for example, an MX480 router, you can configure the same entity, which is called a bridge domain. To keep things simple, this topic uses the term VLAN when referring to the same entity configured on both Juniper Networks switching and routing devices.

Benefits of Multicast Forwarding with IGMP Snooping or MLD Snooping in an EVPN-VXLAN Environment

In an environment with a significant volume of multicast traffic, using IGMP snooping or MLD snooping constrains multicast traffic in a VLAN to interested receivers and multicast devices, which conserves network bandwidth.

Synchronizing the IGMP or MLD state among all EVPN devices for multihomed receivers ensures that all subscribed listeners receive multicast traffic, even in cases such as the following:

IGMP or MLD membership reports for a multicast group might arrive on an EVPN device that is not the Ethernet segment’s designated forwarder (DF).

An IGMP or MLD message to leave a multicast group arrives at a different EVPN device than the EVPN device where the corresponding join message for the group was received.

Selective multicast forwarding conserves bandwidth usage in the EVPN core and reduces the load on egress EVPN devices that do not have listeners.

Support for external PIM gateways enables the exchange of multicast traffic between sources and listeners in an EVPN-VXLAN network and sources and listeners in an external PIM domain. Without this support, the sources and listeners in these two domains would not be able to communicate.

Supported IGMP or MLD Versions and Group Membership Report Modes

Table 1 outlines the supported IGMP and MLD versions and the membership report modes supported for each version.

| IGMP or MLD Version | Any-Source Multicast (ASM) (*,G) Only | Source-Specific Multicast (SSM) (S,G) Only | ASM (*,G) + SSM (S,G) |
|---|---|---|---|
| IGMPv2 | Yes (default) | No | No |
| IGMPv3 | Yes (default) | Yes (if configured) | No |
| MLDv1 | Yes (default) | No | No |
| MLDv2 | Yes (default) | Yes (if configured) | No |

To explicitly configure EVPN devices to process only SSM (S,G) membership reports for IGMPv3 or MLDv2, set the evpn-ssm-reports-only configuration option at the [edit protocols igmp-snooping vlan vlan-name] hierarchy level.

You can enable SSM-only processing for one or more VLANs in an EVPN routing instance (EVI). When you enable this option for a routing instance of type virtual-switch, the behavior applies to all VLANs in the virtual switch instance. With this option enabled, the device doesn’t process ASM reports; it drops them.

If you don’t configure the evpn-ssm-reports-only option, by default, EVPN devices process IGMPv2, IGMPv3, MLDv1, or MLDv2 ASM reports and drop IGMPv3 or MLDv2 SSM reports.
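The report-mode rules in Table 1 and the evpn-ssm-reports-only option reduce to a small decision: a device handles either ASM (*,G) or SSM (S,G) reports for a VLAN, never both. The following Python sketch models that decision as a toy, not as device code. The MembershipReport type and the accept_report function are hypothetical names, and the group and source addresses come from documentation ranges.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MembershipReport:
    protocol: str           # "IGMPv2", "IGMPv3", "MLDv1", or "MLDv2"
    group: str
    source: Optional[str]   # None for an ASM (*,G) report; a source address for SSM (S,G)


def accept_report(report: MembershipReport, ssm_reports_only: bool) -> bool:
    """Return True if the device processes the report, False if it drops it."""
    is_ssm = report.source is not None
    if is_ssm and report.protocol not in ("IGMPv3", "MLDv2"):
        # Only IGMPv3 and MLDv2 reports can name specific sources.
        raise ValueError(f"{report.protocol} cannot carry an (S,G) report")
    if ssm_reports_only:
        # evpn-ssm-reports-only is set: keep (S,G) reports, drop (*,G) reports.
        return is_ssm
    # Default: keep (*,G) reports from any version, drop IGMPv3/MLDv2 (S,G) reports.
    return not is_ssm


asm = MembershipReport("IGMPv2", "233.252.0.1", None)
ssm = MembershipReport("IGMPv3", "232.1.1.1", "198.51.100.10")
print(accept_report(asm, ssm_reports_only=False))  # True
print(accept_report(ssm, ssm_reports_only=False))  # False
print(accept_report(ssm, ssm_reports_only=True))   # True
print(accept_report(asm, ssm_reports_only=True))   # False
```

The sketch treats the option as a single per-VLAN flag; on a device, the scope depends on where you configure it, as described above.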

Summary of Multicast Traffic Forwarding and Routing Use Cases

Table 2 provides a summary of the multicast traffic forwarding and routing use cases that we support in EVPN-VXLAN networks and our recommendation for when you should apply a use case to your EVPN-VXLAN network.

| Use Case Number | Use Case Name | Summary | Recommended Usage |
|---|---|---|---|
| 1 | Intra-VLAN multicast traffic forwarding | Forwarding of multicast traffic to hosts within the same VLAN. | We recommend implementing this basic use case in all EVPN-VXLAN networks. |
| 2 | Inter-VLAN multicast routing and forwarding—IRB interfaces with PIM | IRB interfaces using PIM on Layer 3 EVPN devices. These interfaces route multicast traffic between source and receiver VLANs. | We recommend implementing this basic use case in all EVPN-VXLAN networks except when you prefer to use an external multicast router to handle inter-VLAN routing (see use case 5). |
| 3 | Inter-VLAN multicast routing and forwarding—PIM gateway with Layer 2 connectivity | A Layer 2 mechanism for a data center, which uses IGMP and PIM, to exchange multicast traffic with an external PIM domain. | We recommend this use case in either EVPN-VXLAN ERB overlays or EVPN-VXLAN CRB overlays. |
| 4 | Inter-VLAN multicast routing and forwarding—PIM gateway with Layer 3 connectivity | A Layer 3 mechanism for a data center, which uses IGMP (or MLD) and PIM, to exchange multicast traffic with an external PIM domain. | We recommend this use case in EVPN-VXLAN CRB overlays only. |
| 5 | Inter-VLAN multicast routing and forwarding—external multicast router | Instead of IRB interfaces on Layer 3 EVPN devices, an external multicast router handles inter-VLAN routing. | We recommend this use case when you prefer to use an external multicast router instead of IRB interfaces on Layer 3 EVPN devices to handle inter-VLAN routing. |

For example, in a typical EVPN-VXLAN ERB overlay, you can implement use case 1 for intra-VLAN forwarding and use case 2 for inter-VLAN routing and forwarding. Or, if you want an external multicast router to handle inter-VLAN routing in your EVPN-VXLAN network instead of EVPN devices with IRB interfaces running PIM, you can implement use case 5 instead of use case 2. If there are hosts in an existing external PIM domain that you want hosts in your EVPN-VXLAN network to communicate with, you can also implement use case 3.

When implementing any of the use cases in an EVPN-VXLAN CRB overlay, you can use a mix of spine devices, for example, MX Series routers, EX9200 switches, and QFX10000 switches. However, if you do this, keep in mind that the limitations of each spine device constrain the functionality of all the spine devices. For example, QFX10000 switches support a single routing instance of type virtual-switch. Although MX Series routers and EX9200 switches support multiple routing instances of type evpn or virtual-switch, on each of these devices you would have to configure a single routing instance of type virtual-switch to interoperate with the QFX10000 switches.

Use Case 1: Intra-VLAN Multicast Traffic Forwarding

We recommend this basic use case for all EVPN-VXLAN networks.

This use case supports the forwarding of multicast traffic to hosts within the same VLAN and includes the following key features:

- Hosts that are single-homed to an EVPN device or multihomed to more than one EVPN device in all-active mode.

  Note: EVPN-VXLAN multicast uses special IGMP and MLD group leave processing to handle multihomed sources and receivers, so we don’t support the immediate-leave configuration option in the [edit protocols igmp-snooping] or [edit protocols mld-snooping] hierarchies in EVPN-VXLAN networks.

- Routing instances:

  - (QFX Series switches) A single routing instance of type virtual-switch.

  - (MX Series routers, vMX virtual routers, and EX9200 switches) Multiple routing instances of type evpn or virtual-switch.

  - EVI route target extended community attributes associated with multihomed EVIs. BGP EVPN Type 7 (Join Sync Route) and Type 8 (Leave Sync Route) routes carry these attributes to enable the simultaneous support of multiple EVPN routing instances.

    For information about another supported extended community, see the “EVPN Multicast Flags Extended Community” section.

- IGMPv2, IGMPv3, MLDv1, or MLDv2. For information about the membership report modes supported for each IGMP or MLD version, see Table 1. For information about IGMP or MLD route synchronization between multihomed EVPN devices, see Overview of Multicast Forwarding with IGMP or MLD Snooping in an EVPN-MPLS Environment.

- IGMP snooping or MLD snooping. Hosts in a network send IGMP reports (for IPv4 traffic) or MLD reports (for IPv6 traffic) expressing interest in particular multicast groups from multicast sources. EVPN devices with IGMP snooping or MLD snooping enabled listen to these reports and use the snooped information on the access side to establish multicast routes that forward traffic for a multicast group only to interested receivers.

  IGMP snooping or MLD snooping supports multicast sources and receivers in the same or different sites. A site can have receivers only, sources only, or both sources and receivers attached to it.

- Selective multicast forwarding (advertising EVPN Type 6 Selective Multicast Ethernet Tag (SMET) routes for forwarding only to interested receivers). This feature enables EVPN devices to selectively forward multicast traffic only to the devices in the EVPN core that have expressed interest in that multicast group.

  Note: We support selective multicast forwarding to devices in the EVPN core only in EVPN-VXLAN CRB overlays.

  When you enable IGMP snooping or MLD snooping, selective multicast forwarding is enabled by default.

- Interoperability with EVPN devices that do not support IGMP snooping, MLD snooping, or selective multicast forwarding.
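On the access side, snooping amounts to keeping a per-VLAN table that maps each multicast group to the interfaces where listeners reported interest, and forwarding only to those interfaces. The following Python sketch shows that idea in isolation. It is a simplified model under stated assumptions: the SnoopingTable class and the interface names are hypothetical, and it omits multicast router interfaces, query processing, and membership timeouts that a real device also handles.

```python
from collections import defaultdict


class SnoopingTable:
    """Toy per-VLAN snooping state: multicast group -> access interfaces with listeners."""

    def __init__(self) -> None:
        self._members: dict[str, set[str]] = defaultdict(set)

    def report(self, group: str, interface: str) -> None:
        # A host on `interface` sent an IGMP or MLD membership report for `group`.
        self._members[group].add(interface)

    def leave(self, group: str, interface: str) -> None:
        # Remove the interface; forget the group once no listeners remain.
        listeners = self._members.get(group)
        if listeners is None:
            return
        listeners.discard(interface)
        if not listeners:
            del self._members[group]

    def egress_interfaces(self, group: str, ingress: str) -> set[str]:
        # Forward only to interested access interfaces, never back out the ingress port.
        return self._members.get(group, set()) - {ingress}


table = SnoopingTable()
table.report("233.252.0.1", "xe-0/0/1")
table.report("233.252.0.1", "xe-0/0/2")
print(sorted(table.egress_interfaces("233.252.0.1", ingress="xe-0/0/1")))  # ['xe-0/0/2']
print(table.egress_interfaces("233.252.0.2", ingress="xe-0/0/1"))          # set()
```

Without snooping, the same traffic would be flooded to every interface in the VLAN; the table is what lets the device skip interfaces with no listeners.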

Although you can implement this use case in an EVPN single-homed environment, this use case is particularly effective in an EVPN multihomed environment with a high volume of multicast traffic.

All multihomed interfaces must have the same configuration, and all multihomed peer EVPN devices must be in active mode (not standby or passive mode).

An EVPN device that initially receives traffic from a multicast source is known as the ingress device. The ingress device handles the forwarding of intra-VLAN multicast traffic as follows:

With IGMP snooping or MLD snooping enabled (which also enables selective multicast forwarding on supporting devices):

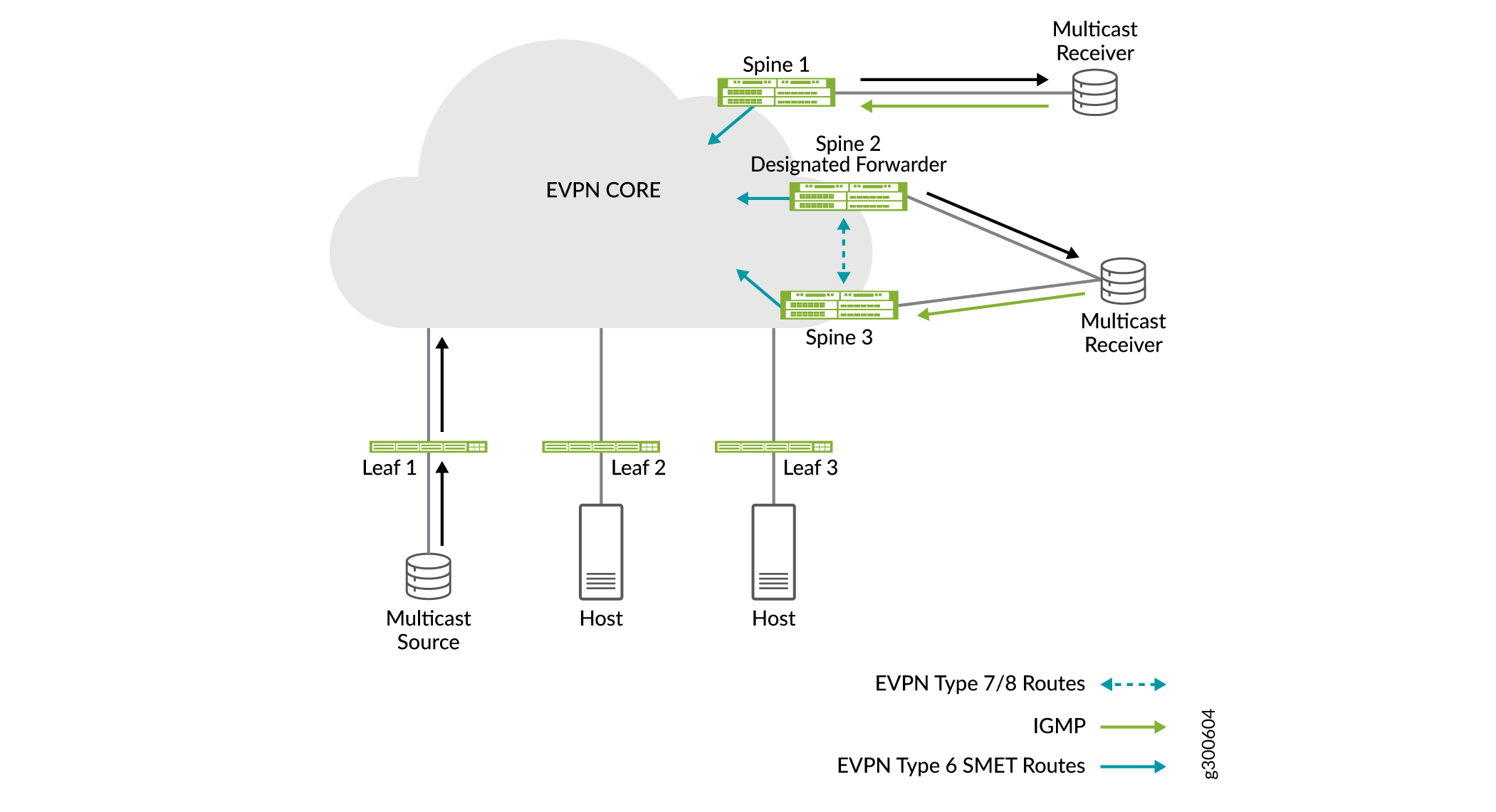

As shown in Figure 1, the ingress device (leaf 1) selectively forwards the traffic to other EVPN devices with access interfaces where there are interested receivers for the same multicast group.

The traffic is then selectively forwarded to egress devices in the EVPN core that have advertised the EVPN Type 6 SMET routes.

If any EVPN devices do not support IGMP snooping or MLD snooping, or the ability to originate EVPN Type 6 SMET routes, the ingress device floods multicast traffic to these devices.

If a host is multihomed to more than one EVPN device, the EVPN devices exchange EVPN Type 7 and Type 8 routes as shown in Figure 1. This exchange synchronizes the IGMP or MLD membership state learned on multihomed interfaces, so that the EVPN devices stay consistent when join and leave messages arrive on different devices or when one of the devices fails.

Note: The EVPN Type 7 and Type 8 routes carry EVI route target extended community attributes to ensure that the right EVPN instance gets the IGMP state information on devices with multiple routing instances. QFX Series switches support IGMP snooping only in the default EVPN routing instance (default-switch). In Junos OS releases before 17.4R2, 17.3R3, or 18.1R1, these switches did not include EVI route target extended community attributes in Type 7 and Type 8 routes, so they don’t properly synchronize the IGMP state if you also have other routing instances configured. Starting in Junos OS Releases 17.4R2, 17.3R3, and 18.1R1, QFX10000 switches include the EVI route target extended community attributes that identify the target routing instance, and can synchronize IGMP state when IGMP snooping is enabled in the default EVPN routing instance and other routing instances are configured.

In releases that support MLD and MLD snooping in EVPN-VXLAN fabrics with multihoming, the same behavior applies to synchronizing the MLD state.
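The Type 7 and Type 8 exchange is easiest to see with a leave that arrives on a different device than the original join. The following Python sketch is a simplified model of that synchronization for one Ethernet segment. The MultihomedSegment class, the ESI label, and the device names are hypothetical. It also assumes that a leave removes state immediately on every device; real devices additionally run query and timeout processing before removing state, and they use the EVI route target to pick the routing instance, which this sketch omits.

```python
class MultihomedSegment:
    """Toy model of IGMP/MLD state sync across EVPN devices sharing one Ethernet segment."""

    def __init__(self, esi: str, peers: list[str]) -> None:
        self.esi = esi
        self.state: dict[str, set[str]] = {peer: set() for peer in peers}
        self.routes: list[tuple[str, str, str]] = []  # (route type, advertiser, group)

    def receive_join(self, peer: str, group: str) -> None:
        # The device that snooped the report advertises a Type 7 (Join Sync) route;
        # every device on the segment installs the same membership state.
        self._check(peer)
        self.routes.append(("Type 7", peer, group))
        for groups in self.state.values():
            groups.add(group)

    def receive_leave(self, peer: str, group: str) -> None:
        # A Type 8 (Leave Sync) route removes the state on every device,
        # even when the join originally arrived on a different device.
        self._check(peer)
        self.routes.append(("Type 8", peer, group))
        for groups in self.state.values():
            groups.discard(group)

    def _check(self, peer: str) -> None:
        if peer not in self.state:
            raise KeyError(f"{peer} is not attached to {self.esi}")


segment = MultihomedSegment("esi-1", ["leaf1", "leaf2"])
segment.receive_join("leaf1", "233.252.0.1")   # report hashed to leaf1 (assume leaf2 is the DF)
print(segment.state["leaf2"])                   # {'233.252.0.1'}
segment.receive_leave("leaf2", "233.252.0.1")  # leave hashed to leaf2
print(segment.state["leaf1"])                   # set()
```

Because leaf2 learned the join through the synchronized state, it can forward to the receiver as DF even though it never saw the original report.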

If you have configured IRB interfaces with PIM on one or more of the Layer 3 devices in your EVPN-VXLAN network (use case 2), note that the ingress device also forwards the multicast traffic to these Layer 3 devices. The ingress device takes this action so that the multicast source can be registered with the Layer 3 device that acts as the PIM rendezvous point (RP).
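The ingress behavior described in this section can be summarized as a per-peer decision: send a copy to a remote EVPN device if it advertised a Type 6 SMET route for the group, if it cannot advertise SMET routes at all (flooding), or if it is a Layer 3 device with PIM that must see all traffic. The following Python sketch models that decision under those assumptions only. RemotePeer, core_targets, and the device names are hypothetical, and the sketch ignores access-side forwarding and DF rules.

```python
from dataclasses import dataclass, field


@dataclass
class RemotePeer:
    name: str
    supports_smet: bool                                   # snooping + Type 6 SMET origination
    smet_groups: set[str] = field(default_factory=set)   # groups it sent Type 6 routes for
    is_pim_l3_device: bool = False                        # hosts IRB interfaces with PIM (use case 2)


def core_targets(group: str, peers: list[RemotePeer]) -> list[str]:
    """Pick which EVPN core peers receive a copy of ingress traffic for `group`."""
    targets = []
    for peer in peers:
        if not peer.supports_smet:
            targets.append(peer.name)   # cannot express interest, so flood to it
        elif peer.is_pim_l3_device:
            targets.append(peer.name)   # needs the traffic for source registration and routing
        elif group in peer.smet_groups:
            targets.append(peer.name)   # advertised a Type 6 SMET route for this group
    return targets


peers = [
    RemotePeer("leaf2", True, {"233.252.0.1"}),
    RemotePeer("leaf3", True),                       # supports SMET, no listeners
    RemotePeer("leaf4", False),                      # no snooping or SMET support
    RemotePeer("spine1", True, is_pim_l3_device=True),
]
print(core_targets("233.252.0.1", peers))  # ['leaf2', 'leaf4', 'spine1']
```

Only leaf3 is skipped: it supports selective forwarding and has not asked for the group.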

Use Case 2: Inter-VLAN Multicast Routing and Forwarding—IRB Interfaces with PIM

We recommend this basic use case for all EVPN-VXLAN networks except when you prefer to use an external multicast router to handle inter-VLAN routing (see Use Case 5: Inter-VLAN Multicast Routing and Forwarding—External Multicast Router).

For this use case, IRB interfaces using Protocol Independent Multicast (PIM) route multicast traffic between source and receiver VLANs. The EVPN devices on which the IRB interfaces reside then forward the routed traffic using these key features:

Inclusive multicast forwarding with ingress replication

IGMP snooping or MLD snooping (if supported)

Selective multicast forwarding

The default behavior of inclusive multicast forwarding is to replicate multicast traffic and flood the traffic to all devices. For this use case, however, we support inclusive multicast forwarding coupled with IGMP snooping (or MLD snooping) and selective multicast forwarding. As a result, the multicast traffic is replicated but selectively forwarded to access interfaces and devices in the EVPN core that have interested receivers.

For information about the EVPN multicast flags extended community, which Juniper Networks devices that support EVPN and IGMP snooping (or MLD snooping) include in EVPN Type 3 (Inclusive Multicast Ethernet Tag) routes, see the “EVPN Multicast Flags Extended Community” section.

In an EVPN-VXLAN CRB overlay, you can configure the spine devices so that some of them perform inter-VLAN routing and forwarding of multicast traffic and some do not. At a minimum, we recommend that you configure two spine devices to perform inter-VLAN routing and forwarding.

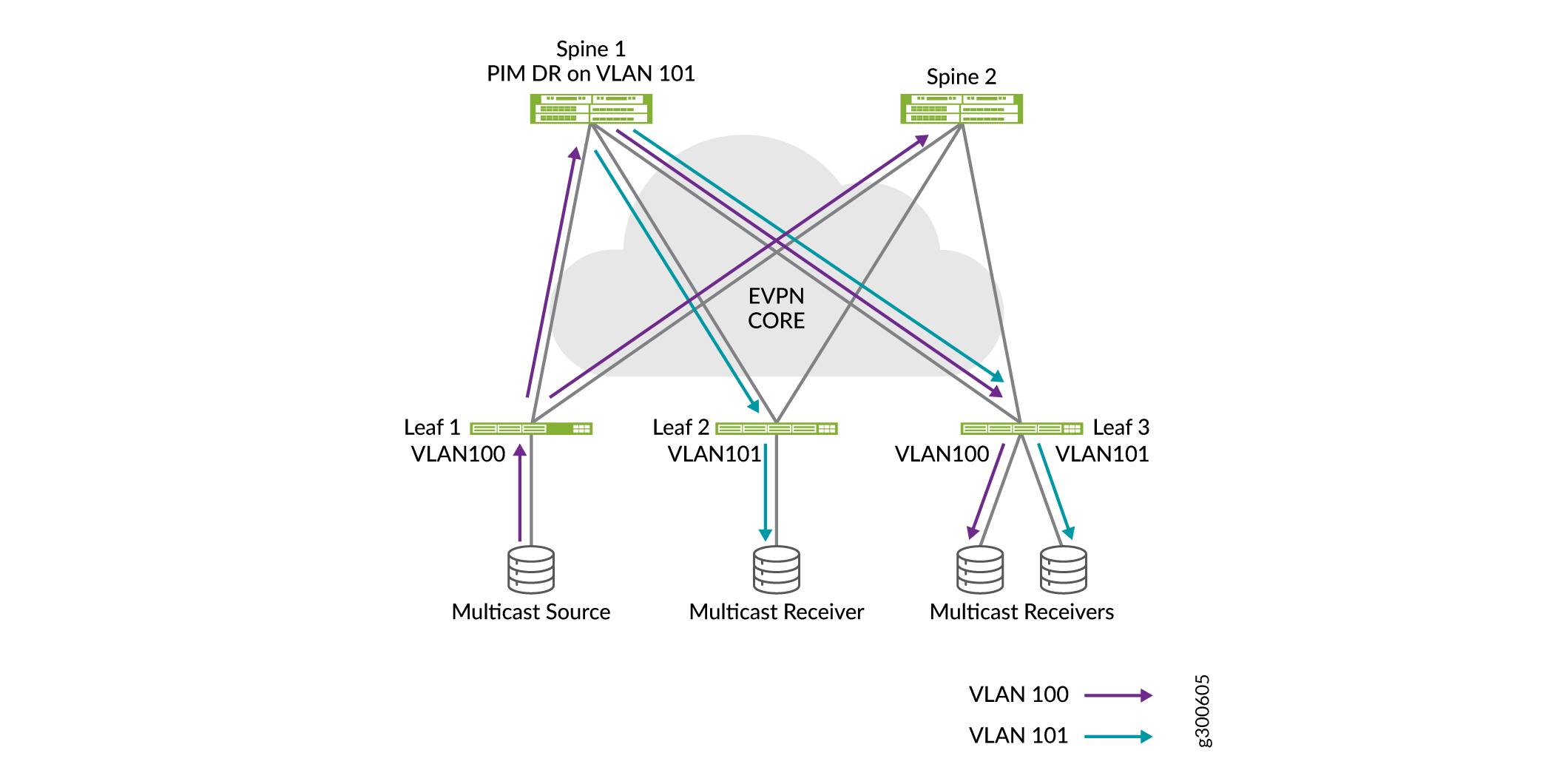

When there are multiple devices that can perform the inter-VLAN routing and forwarding of multicast traffic, one device is elected as the designated router (DR) for each VLAN.

In the sample EVPN-VXLAN CRB overlay shown in Figure 2, assume that multicast traffic needs to be routed from source VLAN 100 to receiver VLAN 101. Receiver VLAN 101 is configured on spine 1, which is designated as the DR for that VLAN.

After the inter-VLAN routing occurs, the EVPN device forwards the routed traffic to:

Access interfaces that have multicast listeners (IGMP snooping or MLD snooping).

Egress devices in the EVPN core that have sent EVPN Type 6 SMET routes for the multicast group members in receiver VLAN 101 (selective multicast forwarding).

To understand how IGMP snooping (or MLD snooping) and selective multicast forwarding reduce the impact of the replicating and flooding behavior of inclusive multicast forwarding, assume that an EVPN-VXLAN CRB overlay includes the following elements:

100 IRB interfaces using PIM starting with irb.1 and going up to irb.100

100 VLANs

20 EVPN devices

For the sample EVPN-VXLAN CRB overlay, m represents the number of VLANs, and n represents the number of EVPN devices. Assuming that IGMP snooping (or MLD snooping) and selective multicast forwarding are disabled, when multicast traffic arrives on irb.1, the EVPN device replicates each packet m * n times, or 100 * 20 = 2,000 times. If the incoming traffic rate for a particular multicast group is 100 packets per second (pps), the EVPN device would have to replicate 200,000 pps for that multicast group.

If IGMP snooping (or MLD snooping) and selective multicast forwarding are enabled in the sample EVPN-VXLAN CRB overlay, assume that there are interested receivers for a particular multicast group on only 4 VLANs and 3 EVPN devices. In this case, the EVPN device replicates each packet only m * n = 4 * 3 = 12 times, so at 100 pps it replicates 100 * 12 = 1,200 pps. Note the significant reduction in the replication rate and the amount of traffic that must be forwarded.
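The replication arithmetic above can be checked with a few lines of Python. This sketch only multiplies the ingress rate by m and n as the text describes; replicated_pps is a hypothetical helper name, and the model ignores packet size and any hardware replication limits.

```python
def replicated_pps(ingress_pps: int, vlans: int, devices: int) -> int:
    """Copies per second an ingress EVPN device generates: ingress pps * m * n."""
    return ingress_pps * vlans * devices


flood = replicated_pps(100, vlans=100, devices=20)   # snooping and selective forwarding disabled
selective = replicated_pps(100, vlans=4, devices=3)  # only VLANs and devices with receivers
print(flood, selective, flood // selective)          # 200000 1200 166
```

In this example, enabling snooping and selective forwarding cuts the replication load by a factor of more than 160.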

When implementing this use case, keep in mind that there are important differences between EVPN-VXLAN CRB overlays and EVPN-VXLAN ERB overlays. Table 3 outlines these differences.

| EVPN-VXLAN IP Fabric Architecture | Supports Mix of Juniper Networks Devices? | All EVPN Devices Required to Host All VLANs in EVPN-VXLAN Network? | All EVPN Devices Required to Host All VLANs That Include Multicast Listeners? | Required PIM Configuration |
|---|---|---|---|---|
| EVPN-VXLAN ERB overlay | No. We support only QFX10000 switches for all EVPN devices. | Yes | Yes | Configure PIM distributed designated router (DDR) functionality on the IRB interfaces of the EVPN devices. |
| EVPN-VXLAN CRB overlay | Yes. Spine devices: We support a mix of MX Series routers, EX9200 switches, and QFX10000 switches. Leaf devices: We support a mix of MX Series routers and QFX5110 switches. Note: If you deploy a mix of spine devices, keep in mind that the limitations of each spine device constrain the functionality of all the spine devices (see the note on mixing spine devices in Summary of Multicast Traffic Forwarding and Routing Use Cases). | No | No. However, you must configure all VLANs that include multicast listeners on each spine device that performs inter-VLAN routing. You don’t need to configure all VLANs that include multicast listeners on each leaf device. | Do not configure DDR functionality on the IRB interfaces of the spine devices. When DDR is not enabled on an IRB interface, PIM remains in its default mode on the interface, which means that the interface acts as the designated router for the VLANs. |

In addition to the differences described in Table 3, a hair-pinning issue exists with an EVPN-VXLAN CRB overlay. Multicast traffic typically flows from a source host to a leaf device to a spine device, which handles the inter-VLAN routing. The spine device then replicates and forwards the traffic to VLANs and EVPN devices with multicast listeners. When forwarding the traffic in this type of EVPN-VXLAN overlay, the spine device also returns the traffic to the leaf device from which the traffic originated (hair-pinning). This issue is inherent in the design of the EVPN-VXLAN CRB overlay. When designing your EVPN-VXLAN overlay, keep this issue in mind, especially if you expect the volume of multicast traffic in your overlay to be high and the replication rate of traffic (m * n times) to be large.

Use Case 3: Inter-VLAN Multicast Routing and Forwarding—PIM Gateway with Layer 2 Connectivity

We recommend the PIM gateway with Layer 2 connectivity use case for both EVPN-VXLAN ERB overlays and EVPN-VXLAN CRB overlays.

For this use case, we assume the following:

You have deployed an EVPN-VXLAN network to support a data center.

In this network, you have already set up:

Intra-VLAN multicast traffic forwarding as described in use case 1.

Inter-VLAN multicast traffic routing and forwarding as described in use case 2.

There are multicast sources and receivers within the data center that you want to communicate with multicast sources and receivers in an external PIM domain.

We support this use case with both EVPN-VXLAN ERB overlays and EVPN-VXLAN CRB overlays.

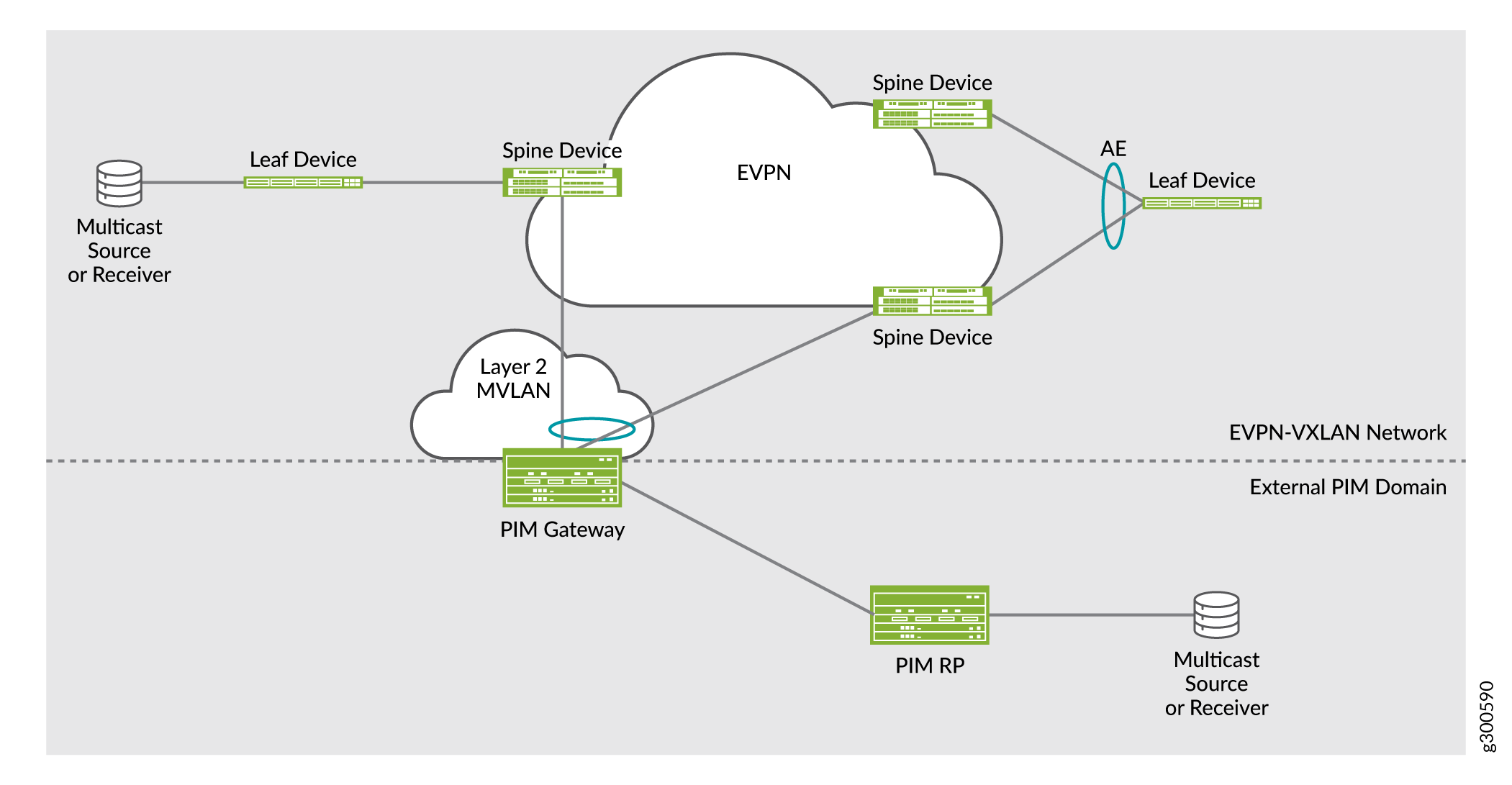

The use case provides a mechanism for the data center, which uses IGMP (or MLD) and PIM, to exchange multicast traffic with the external PIM domain. Using a Layer 2 multicast VLAN (MVLAN) and associated IRB interfaces on the EVPN devices in the data center to connect to the PIM domain, you can enable the forwarding of multicast traffic from:

An external multicast source to internal multicast destinations

An internal multicast source to external multicast destinations

Note: In this section, external refers to components in the PIM domain. Internal refers to components in your EVPN-VXLAN network that supports a data center.

Figure 3 shows the required key components for this use case in a sample EVPN-VXLAN CRB overlay.

Components in the PIM domain:

A PIM gateway that acts as an interface between an existing PIM domain and the EVPN-VXLAN network. The PIM gateway is a Juniper Networks or third-party Layer 3 device on which PIM and a routing protocol such as OSPF are configured. The PIM gateway does not run EVPN. You can connect the PIM gateway to one, some, or all EVPN devices.

A PIM rendezvous point (RP), which is a Juniper Networks or third-party Layer 3 device on which PIM and a routing protocol such as OSPF are configured. You must also configure the PIM RP to translate PIM join or prune messages into corresponding IGMP (or MLD) report or leave messages, and then forward the reports and messages to the PIM gateway.

Components in the EVPN-VXLAN network:

Note: These components are in addition to the components already configured for use cases 1 and 2.

EVPN devices. For redundancy, we recommend multihoming the EVPN devices to the PIM gateway through an aggregated Ethernet interface on which you configure an Ethernet segment identifier (ESI). On each EVPN device, you must also configure the following for this use case:

A Layer 2 multicast VLAN (MVLAN). The MVLAN is a VLAN that is used to connect the PIM gateway. In the MVLAN, PIM is enabled.

An MVLAN IRB interface on which you configure PIM, IGMP snooping (or MLD snooping), and a routing protocol such as OSPF. To reach the PIM gateway, the EVPN device forwards multicast traffic out of this interface.

To enable the EVPN devices to forward multicast traffic to the external PIM domain, configure:

- PIM-to-IGMP translation:

  For EVPN-VXLAN ERB overlays, configure PIM-to-IGMP translation by including the pim-to-igmp-proxy upstream-interface irb-interface-name configuration statements at the [edit routing-options multicast] hierarchy level. For the IRB interface parameter, specify the MVLAN IRB interface. You must also set IGMP passive mode by using the igmp interface irb-interface-name passive configuration statements at the [edit protocols] hierarchy level on the upstream interfaces where you set pim-to-igmp-proxy.

  For EVPN-VXLAN CRB overlays, you do not need to include the pim-to-igmp-proxy upstream-interface irb-interface-name or pim-to-mld-proxy upstream-interface irb-interface-name configuration statements. In this type of overlay, PIM handles the routing of multicast traffic from the PIM domain to the EVPN-VXLAN network and vice versa.

- Multicast router interface:

  Configure the multicast router interface by including the multicast-router-interface configuration statement at the [edit routing-instances routing-instance-name bridge-domains bridge-domain-name protocols (igmp-snooping | mld-snooping) interface interface-name] hierarchy level. For the interface name, specify the MVLAN IRB interface.

- PIM passive mode. For EVPN-VXLAN ERB overlays only, you must ensure that the PIM gateway views the data center as only a Layer 2 multicast domain. To do so, include the passive configuration statement at the [edit protocols pim] hierarchy level.
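Each statement in this list is given together with its hierarchy level. In Junos OS, you can enter the same configuration as a one-line set command by joining the hierarchy path (without the edit keyword) and the statement. The following Python helper is a minimal sketch of that mechanical conversion, applied to the ERB statements above. The helper name set_command and the MVLAN IRB interface name irb.100 are hypothetical; treat the generated lines as a starting point and verify them against the CLI on your platform and release.

```python
def set_command(hierarchy: str, statement: str) -> str:
    """Join an '[edit a b c]' hierarchy level and a statement into one `set` command."""
    path = hierarchy.strip()
    if not (path.startswith("[edit") and path.endswith("]")):
        raise ValueError(f"not an edit hierarchy: {hierarchy!r}")
    path = path[len("[edit"):-1].strip()   # drop "[edit" and the closing "]"
    return " ".join(part for part in ("set", path, statement) if part)


MVLAN_IRB = "irb.100"  # hypothetical MVLAN IRB interface name
for hierarchy, statement in [
    ("[edit routing-options multicast]", f"pim-to-igmp-proxy upstream-interface {MVLAN_IRB}"),
    ("[edit protocols]", f"igmp interface {MVLAN_IRB} passive"),
    ("[edit protocols pim]", "passive"),
]:
    print(set_command(hierarchy, statement))
```

The multicast-router-interface statement converts the same way once you substitute your own routing instance, bridge domain, and interface names into its hierarchy level.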

Use Case 4: Inter-VLAN Multicast Routing and Forwarding—PIM Gateway with Layer 3 Connectivity

We recommend the PIM gateway with Layer 3 connectivity use case for EVPN-VXLAN CRB overlays only.

For this use case, we assume the following:

You have deployed an EVPN-VXLAN network to support a data center.

In this network, you have already set up:

Intra-VLAN multicast traffic forwarding as described in use case 1.

Inter-VLAN multicast traffic routing and forwarding as described in use case 2.

There are multicast sources and receivers within the data center that you want to communicate with multicast sources and receivers in an external PIM domain.


This use case provides a mechanism for the data center, which uses IGMP (or MLD) and PIM, to exchange multicast traffic with the external PIM domain. Using Layer 3 interfaces on the EVPN devices in the data center to connect to the PIM domain, you can enable the forwarding of multicast traffic from:

An external multicast source to internal multicast destinations

An internal multicast source to external multicast destinations

Note: In this section, external refers to components in the PIM domain. Internal refers to components in your EVPN-VXLAN network that supports a data center.

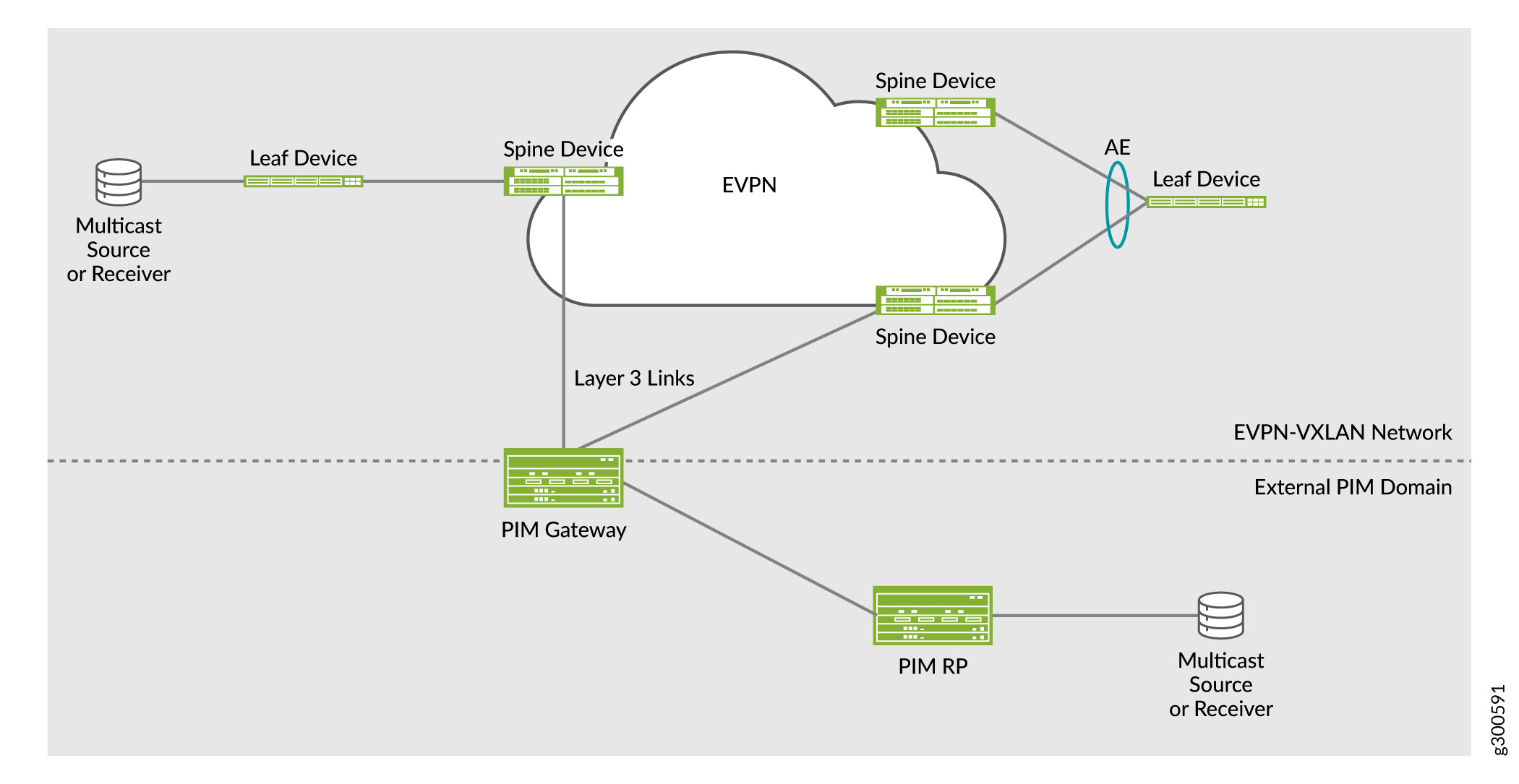

Figure 4 shows the required key components for this use case in a sample EVPN-VXLAN CRB overlay.

Components in the PIM domain:

A PIM gateway that acts as an interface between an existing PIM domain and the EVPN-VXLAN network. The PIM gateway is a Juniper Networks or third-party Layer 3 device on which PIM and a routing protocol such as OSPF are configured. The PIM gateway does not run EVPN. You can connect the PIM gateway to one, some, or all EVPN devices.

A PIM rendezvous point (RP), which is a Juniper Networks or third-party Layer 3 device on which PIM and a routing protocol such as OSPF are configured. You must also configure the PIM RP to translate PIM join or prune messages into corresponding IGMP or MLD report or leave messages, and then forward the reports and messages to the PIM gateway.

Components in the EVPN-VXLAN network:

Note: These components are in addition to the components already configured for use cases 1 and 2.

EVPN devices. You can connect one, some, or all EVPN devices to a PIM gateway. You must make each connection through a Layer 3 interface on which PIM is configured. Other than the Layer 3 interface with PIM, this use case does not require additional configuration on the EVPN devices.

Use Case 5: Inter-VLAN Multicast Routing and Forwarding—External Multicast Router

Starting with Junos OS Release 17.3R1, you can configure an EVPN device to perform inter-VLAN forwarding of multicast traffic without having to configure IRB interfaces on the EVPN device. In such a scenario, an external multicast router is used to send IGMP or MLD queries to solicit reports and to forward VLAN traffic through a Layer 3 multicast protocol such as PIM. IRB interfaces are not supported with the use of an external multicast router.

For this use case, you must include the igmp-snooping proxy or mld-snooping proxy configuration statements at the [edit routing-instances routing-instance-name protocols vlan vlan-name] hierarchy level.

EVPN Multicast Flags Extended Community

Juniper Networks devices that support EVPN-VXLAN and IGMP snooping also support the EVPN multicast flags extended community. When you have enabled IGMP snooping on one of these devices, the device adds the community to EVPN Type 3 (Inclusive Multicast Ethernet Tag) routes.

The absence of this community in an EVPN Type 3 route can indicate the following about the device that advertises the route:

The device does not support IGMP snooping.

The device does not have IGMP snooping enabled on it.

The device is running a Junos OS software release that doesn’t support the community.

The device does not support the advertising of EVPN Type 6 SMET routes.

The device has IGMP snooping and a Layer 3 interface with PIM enabled on it. Although the Layer 3 interface with PIM performs snooping on the access side and selective multicast forwarding on the EVPN core, the device needs to attract all traffic to perform source registration to the PIM RP and inter-VLAN routing.

The behavior described above also applies to devices that support EVPN-VXLAN with MLD and MLD snooping.

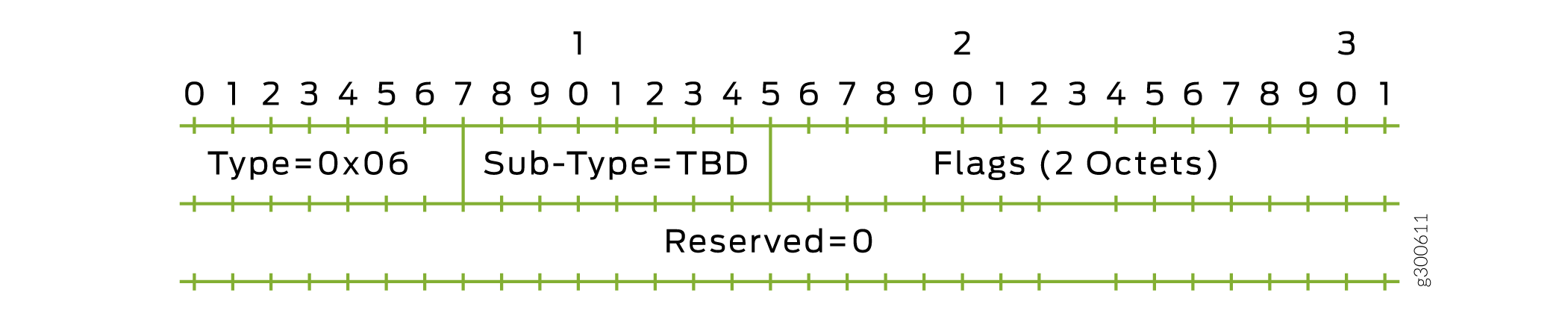

Figure 5 shows the EVPN multicast flags extended community, which has the following characteristics:

The community is encoded as an 8-octet (64-bit) value, like other BGP extended communities.

The Type field has a value of 6.

The IGMP Proxy Support flag is set to 1, which means that the device supports IGMP proxy.

The same applies to the MLD Proxy Support flag; if that flag is set to 1, the device supports MLD proxy. Either or both flags might be set.
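To show how a receiver might read the proxy-support flags, the following Python sketch decodes an 8-octet extended community. Only the 8-octet length and the Type value of 6 come from this topic. The sub-type value 0x09, the position of the 2-octet Flags field in the third and fourth octets, and the bit positions of the IGMP and MLD Proxy Support flags are assumptions based on our reading of RFC 9251; check them against the RFC before relying on them. The function and constant names are hypothetical.

```python
EVPN_TYPE = 0x06              # Type field value stated in this topic
MCAST_FLAGS_SUBTYPE = 0x09    # assumption: sub-type from RFC 9251, not stated in this topic
IGMP_PROXY_SUPPORT = 0x0001   # assumption: least significant bit of the 2-octet Flags field
MLD_PROXY_SUPPORT = 0x0002    # assumption: second least significant bit of the Flags field


def decode_multicast_flags(community: bytes) -> dict[str, bool]:
    """Decode the proxy-support flags from an EVPN multicast flags extended community."""
    if len(community) != 8:
        raise ValueError("a BGP extended community is 8 octets long")
    if community[0] != EVPN_TYPE or community[1] != MCAST_FLAGS_SUBTYPE:
        raise ValueError("not an EVPN multicast flags extended community")
    flags = int.from_bytes(community[2:4], "big")  # octets at index 2 and 3 (assumed layout)
    return {
        "igmp_proxy": bool(flags & IGMP_PROXY_SUPPORT),
        "mld_proxy": bool(flags & MLD_PROXY_SUPPORT),
    }


print(decode_multicast_flags(bytes([0x06, 0x09, 0x00, 0x01, 0, 0, 0, 0])))
# {'igmp_proxy': True, 'mld_proxy': False}
```

A device that finds no such community in a peer's Type 3 route cannot assume either flag, which is why the ingress device falls back to flooding for that peer.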