Install Single Cluster Shared Network CN2

SUMMARY This section provides examples of how to install CN2 on a single cluster in a deployment where Kubernetes traffic and CN2 traffic share the same network.

In a single cluster shared network deployment:

- CN2 is the networking platform and CNI plug-in for that cluster. The Contrail controller runs in the Kubernetes control plane, and the Contrail data plane components run on all nodes in the cluster.

- Kubernetes and CN2 traffic share a single network.

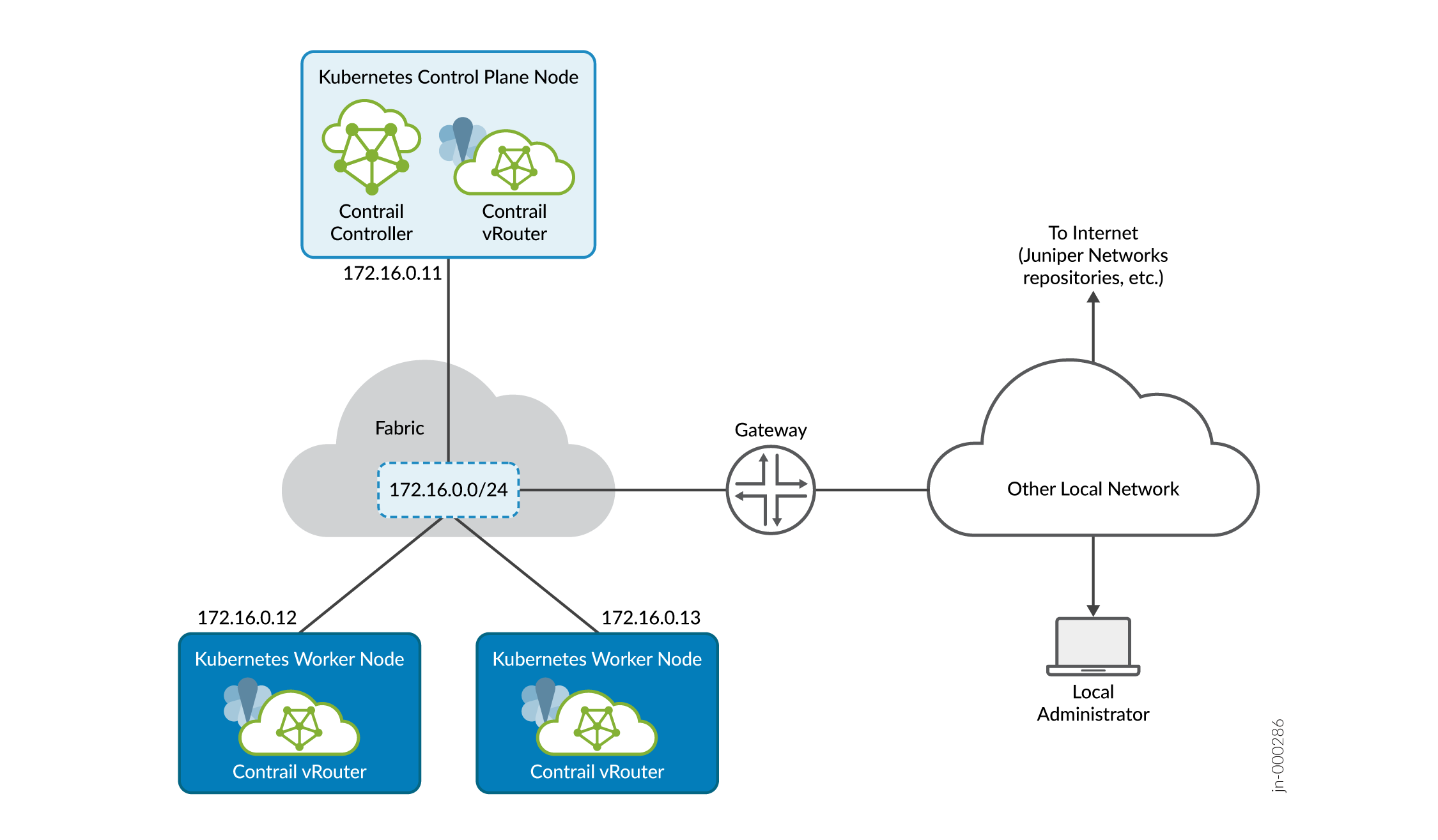

Figure 1 shows the cluster that you'll create if you follow the single cluster shared network example. The cluster consists of a single control plane node and two worker nodes.

All nodes shown can be VMs or bare metal servers.

All communication between nodes in the cluster and between nodes and external sites takes place over the single 172.16.0.0/24 fabric virtual network. The fabric network provides the underlay over which the cluster runs.
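Before installing, it can help to confirm that every node address falls inside the fabric subnet. The following is a minimal sketch using Python's standard `ipaddress` module. The node names and addresses are hypothetical placeholders, not values taken from Figure 1; substitute the addresses from your own cluster.

```python
import ipaddress

# Fabric subnet from the example deployment.
FABRIC = ipaddress.ip_network("172.16.0.0/24")

# Hypothetical node addresses (assumption for illustration only).
NODES = {
    "control-plane": "172.16.0.11",
    "worker-1": "172.16.0.12",
    "worker-2": "172.16.0.13",
}


def nodes_outside_fabric(nodes, fabric=FABRIC):
    """Return the names of nodes whose address is not in the fabric subnet."""
    return [
        name
        for name, addr in nodes.items()
        if ipaddress.ip_address(addr) not in fabric
    ]


if __name__ == "__main__":
    stray = nodes_outside_fabric(NODES)
    if stray:
        print("Nodes outside the fabric subnet:", ", ".join(stray))
    else:
        print("All nodes are on", FABRIC)
```

An empty list means all nodes share the single fabric network, as this deployment expects.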

The local administrator is shown attached to a separate network reachable through a gateway. This is typical of many installations where the local administrator manages the fabric and cluster from the corporate LAN. In the procedures that follow, we refer to the local administrator station as your local computer.

The data center fabric connects all cluster nodes and is shown in the example as a single subnet. In real installations, the data center fabric is a network of spine and leaf switches that provide the physical connectivity for the cluster.

In an Apstra-managed data center, this connectivity would be specified through the overlay virtual networks that you create across the underlying fabric switches.

The procedures in this section show basic examples of how you can use the provided manifests to create the specified CN2 deployment. You're not limited to the deployment described in this section, nor are you limited to using the provided manifests. CN2 supports a wide range of deployments that are too numerous to cover in detail. Use the provided examples as a starting point to create your own manifest tailored to your specific situation.

Install Single Cluster Shared Network CN2 Running Kernel Mode Data Plane

Use this procedure to install CN2 in a single cluster shared network deployment running a kernel mode data plane.

The manifest that you will use in this example procedure is single-cluster/single_cluster_deployer_example.yaml. The procedure assumes that you've placed this manifest into a manifests directory.
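As a rough illustration of where the manifest is expected to live, the sketch below builds the path to the manifest inside the manifests directory and assembles a `kubectl apply -f` command for it. Applying the manifest with `kubectl apply` is an assumption here, since the procedure steps themselves are not listed in this section, and the helper name `build_apply_command` is hypothetical.

```python
from pathlib import Path

# Manifest file name from this procedure.
MANIFEST_NAME = "single_cluster_deployer_example.yaml"


def build_apply_command(manifest_dir="manifests"):
    """Build a kubectl command that applies the deployer manifest.

    Assumes the manifest was copied into manifest_dir, as the
    procedure describes. The command is returned, not executed.
    """
    manifest = Path(manifest_dir) / MANIFEST_NAME
    return ["kubectl", "apply", "-f", manifest.as_posix()]


if __name__ == "__main__":
    # Print the command so you can review it before running it.
    print(" ".join(build_apply_command()))
```

To run the command rather than print it, you could pass the returned list to `subprocess.run(..., check=True)` on a machine where `kubectl` is configured for the target cluster.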

Install Single Cluster Shared Network CN2 Running DPDK Data Plane

Use this procedure to install CN2 in a single cluster shared network deployment running a DPDK data plane.

The manifest that you will use in this example procedure is single-cluster/single_cluster_deployer_example.yaml. The procedure assumes that you've placed this manifest into a manifests directory.