
NodePort Service Support in Cloud-Native Contrail Networking

Juniper Networks supports the Kubernetes NodePort service in Kubernetes-orchestrated environments running Cloud-Native Contrail® Networking™ Release 22.1 or later.

In Kubernetes, a service is an abstraction that defines a logical set of pods and the policy by which you can access them. The set of pods implementing a service is selected based on the LabelSelector object in the service definition. A NodePort service exposes a service on each node's IP address at a static port. It maps the static port on each node to a port of the application on the pod.

In Contrail Networking, Kubernetes NodePort service is implemented using the InstanceIP and FloatingIP resources, similar to the ClusterIP service.

Kubernetes provides a flat networking model in which all pods can talk to each other. Network policy is added to provide security between the pods. Contrail Networking integrated with Kubernetes adds networking functionality, including multi-tenancy, network isolation, micro-segmentation with network policies, and load balancing.

The following table lists the mapping between Kubernetes concepts and Contrail Networking resources.

| Kubernetes Concept | Contrail Networking Resource |
|---|---|
| Namespace | Shared or single project |
| Pod | Virtual Machine |
| Service | Equal-cost multipath (ECMP) LoadBalancer |
| Ingress | HAProxy LoadBalancer for URL |
| Network Policy | Contrail Security |

Contrail Networking Load Balancer Objects

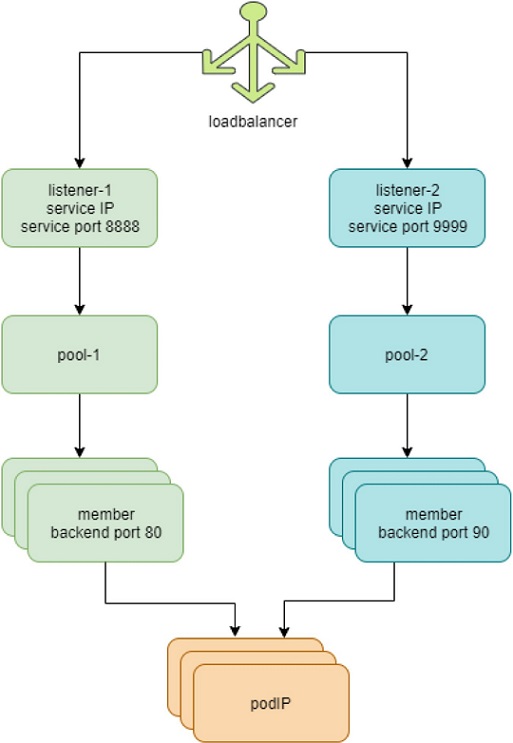

Figure 1 and the following list describe the load balancer objects in Contrail Networking.

- Each service in Contrail Networking is represented by a load balancer object.

- For each service port, a listener object is created for the same service load balancer.

- For each listener there is a pool object.

- The pool contains members. Depending on the number of backend pods, one pool might have multiple members.

- Each member object in the pool maps to one of the backend pods.

- The contrail-kube-manager listens to kube-apiserver for the Kubernetes service. When a service is created, a load balancer object with loadbalancer_provider type native is created.

- The load balancer has a virtual IP address (VIP), which is the same as the service IP address.

- The service IP/VIP address is linked to the interface of each backend pod. This is accomplished with an ECMP load balancer driver.

- The linkage from the service IP address to the interfaces of multiple backend pods creates an ECMP next hop in Contrail Networking. Traffic is load balanced from the source pod directly to one of the backend pods.

- The contrail-kube-manager continues to listen to kube-apiserver for any changes. Based on the pod list in endpoints, contrail-kube-manager knows the most current backend pods and updates members in the pool.
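
The object hierarchy in the list above can be sketched as a small data model. This is only an illustration, not the Contrail Networking schema: the class names, field names, the helper functions, and the second pod address 10.244.0.5 are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Member:
    pod_ip: str  # each member maps to one backend pod


@dataclass
class Pool:
    members: list[Member] = field(default_factory=list)


@dataclass
class Listener:
    port: int
    protocol: str
    pool: Pool = field(default_factory=Pool)  # one pool per listener


@dataclass
class LoadBalancer:
    name: str
    vip: str  # same address as the service IP
    provider: str = "native"
    listeners: list[Listener] = field(default_factory=list)


def build_load_balancer(name, service_ip, ports, endpoint_ips):
    """Create one listener (with its pool) per service port."""
    lb = LoadBalancer(name=name, vip=service_ip)
    for port, protocol in ports:
        pool = Pool([Member(ip) for ip in endpoint_ips])
        lb.listeners.append(Listener(port, protocol, pool))
    return lb


def sync_members(lb, endpoint_ips):
    """Replace pool members so they match the current endpoint list."""
    for listener in lb.listeners:
        listener.pool.members = [Member(ip) for ip in endpoint_ips]


lb = build_load_balancer(
    "apple-service", "10.105.135.144", [(5678, "TCP")], ["10.244.0.4"]
)
# Hypothetical change: a second backend pod appears in the endpoints.
sync_members(lb, ["10.244.0.4", "10.244.0.5"])
print([m.pod_ip for m in lb.listeners[0].pool.members])
# prints ['10.244.0.4', '10.244.0.5']
```

Replacing the member list wholesale keeps the sketch short; a real controller would more likely add and remove individual members as endpoint events arrive.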

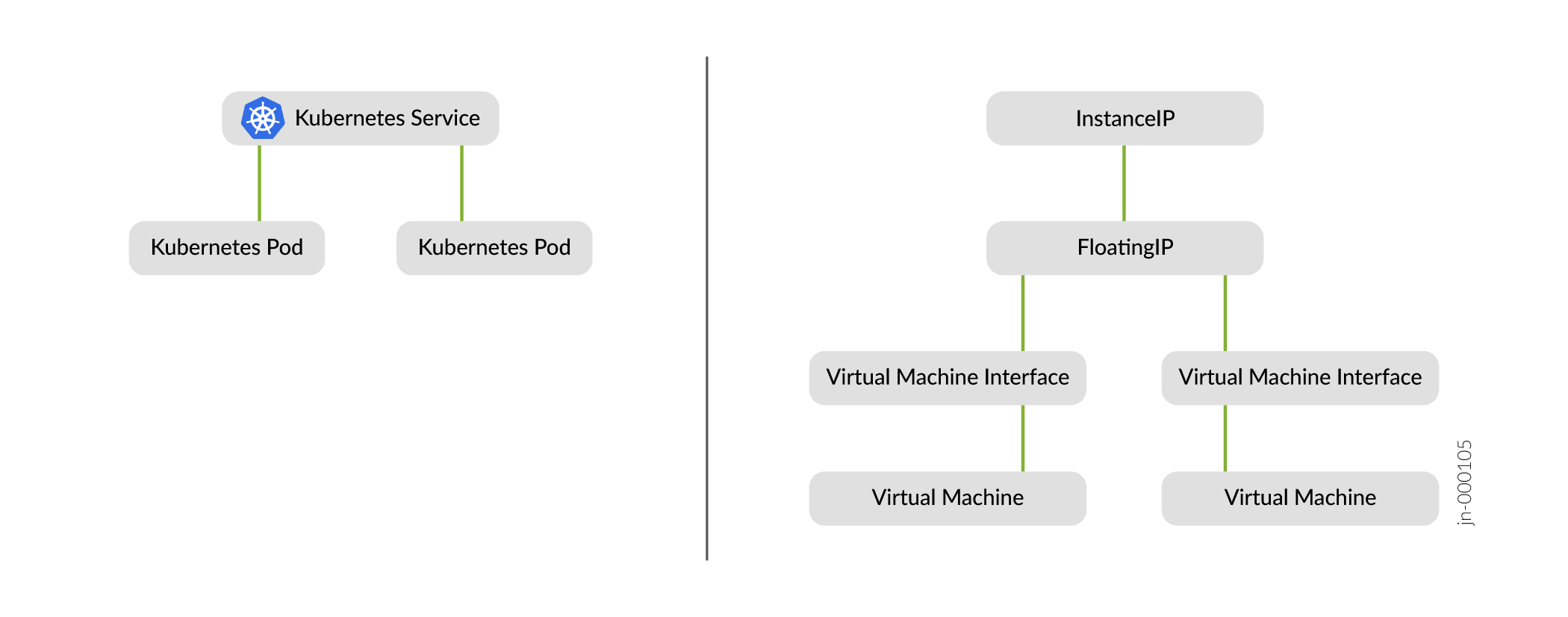

NodePort Service in Contrail Networking

A controller service is implemented in kube-manager. The kube-manager is the interface between Kubernetes core resources, such as service, and the extended Contrail resources, such as VirtualNetwork and RoutingInstance. This controller service watches events going through the resource endpoints. An endpoint receives an event for any change related to its service. The endpoint also receives an event when pods that match the service selector are created or deleted. The controller service creates the Contrail resources needed (see Figure 2):

- InstanceIP resource related to the ServiceNetwork

- FloatingIP resource and the associated VirtualMachineInterfaces

When a user creates a service, an associated endpoint is automatically created by Kubernetes, which allows the controller service to receive new requests.

Workflow of Creating NodePort Service

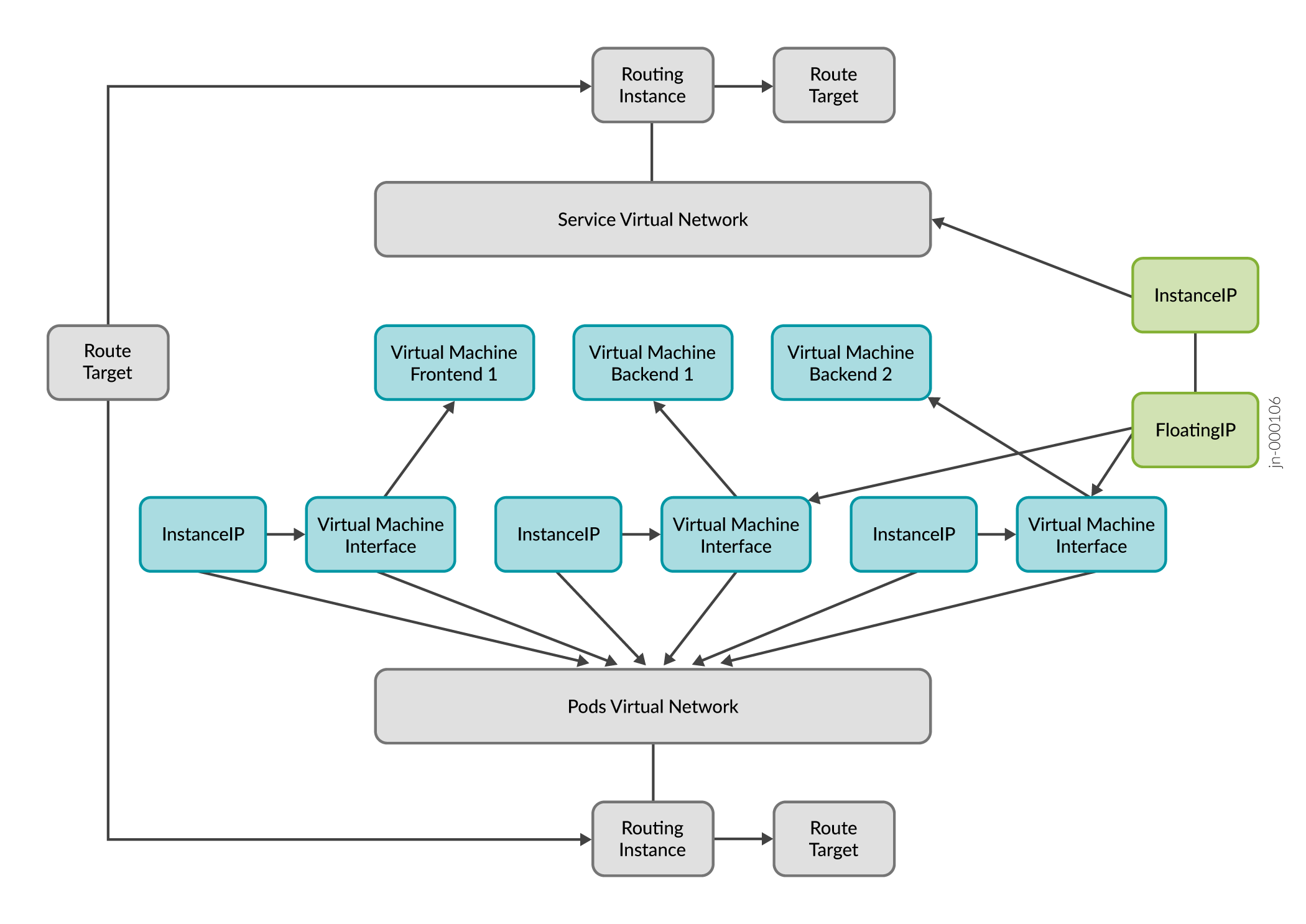

Figure 3 and the following steps detail the workflow when a NodePort service is created.

- When the NodePort service is created, InstanceIP (IIP) is created. The InstanceIP resource specifies a fixed IP address and its characteristics that belong to a subnet of a referred virtual network.

- Once the endpoint is connected to the NodePort service, the FloatingIP is created. The kube-manager watches for the creation of endpoints connected to a service.

- When a new endpoint is created, kube-manager then creates an InstanceIP in the ServiceVirtualNetwork subnet. The kube-manager then creates a FloatingIP using the InstanceIP as the parent.

- The FloatingIP resource specifies a special kind of IP address that does not belong to a specific VirtualMachineInterface (VMI). The FloatingIP is assigned from a separate VirtualNetwork subnet and can be associated with multiple VMIs. When associated with multiple VMIs, traffic destined to the FloatingIP is distributed using ECMP across all VMIs.
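
To make the ECMP distribution in the last step concrete, the following sketch picks one VMI per flow by hashing the flow's 5-tuple. It shows the general idea of hash-based ECMP only. The hash function, the VMI names, and the client address are assumptions for illustration; the hashing that Contrail Networking actually performs is not described here.

```python
import hashlib


def pick_vmi(flow, vmis):
    """Pick one VMI for a flow by hashing its 5-tuple (illustrative only)."""
    if not vmis:
        raise ValueError("FloatingIP has no associated VMIs")
    key = "|".join(str(part) for part in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(vmis)
    return vmis[index]


# Hypothetical VMIs associated with one FloatingIP.
vmis = ["vmi-pod-a", "vmi-pod-b", "vmi-pod-c"]

# (source IP, source port, destination IP, destination port, protocol)
flow = ("192.0.2.10", 40000, "10.105.135.144", 5678, "TCP")

chosen = pick_vmi(flow, vmis)
# Packets of the same flow always hash to the same VMI.
assert chosen == pick_vmi(flow, vmis)
print(chosen)
```

Hashing per flow rather than per packet keeps all packets of one connection on the same backend, which is why different flows spread across VMIs while a single flow does not.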

Notes about VMIs:

- VMIs are dynamically updated as pods and labels are added and deleted.

- A VMI represents an interface (port) into a virtual network and might or might not have a corresponding virtual machine.

- A VMI has at minimum a MAC address and an IP address.

Notes about VMs:

- A VM resource represents a compute container, for example, a VM, bare-metal server, pod, or container.

- Each VM can communicate with other VMs on the same tenant network, subject to policy restrictions.

- As tenant networks are isolated, VMs in one tenant cannot communicate with VMs in another tenant unless specifically allowed by policy.

Kubernetes Probes and Kubernetes NodePort Service

The kubelet, an agent that runs on each node, needs reachability to pods for liveness and readiness probes. To provide this reachability, a Contrail network policy is created between the IP fabric network and the pod network. Whenever a pod network is created, the network policy is attached to it. As a result, any process on the node can reach the pods.

Kubernetes NodePort service is based on node reachability to pods. Since Contrail Networking provides connectivity between nodes and pods through the Contrail network policy, NodePort is supported.

NodePort service supports two types of traffic:

- East-West

- Fabric to Pod

NodePort Service Port Mapping

The port mappings for Kubernetes NodePort service are located in the FloatingIP resource in the YAML file. In FloatingIP, the ports are added in "floatingIpPortMappings".

If targetPort is not specified in the service, the port value is used by default.

Example spec YAML file for NodePort service with port details:

    spec:
      clusterIP: 10.100.13.106
      clusterIPs:
      - 10.100.13.106
      ports:
      - port: 80
        protocol: TCP
        targetPort: 80
      selector:
        run: my-nginx
      sessionAffinity: None

For the above example spec YAML, "floatingIpPortMappings" are created in the FloatingIP resource:

Example "floatingIpPortMappings" YAML:

    "floatingIpPortMappings": {
        "portMappings": [
            {
                "srcPort": 80,
                "dstPort": 80,
                "protocol": "TCP"
            }
        ]
    }
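
The relationship between the service ports and the port mappings can be sketched as follows. The function name is hypothetical, and the field correspondence is an assumption inferred from the single example above, where port and targetPort are both 80: srcPort is taken from port, and dstPort from targetPort, falling back to port when targetPort is absent. Named (string) targetPort values are not handled.

```python
def floating_ip_port_mappings(service_ports):
    """Build a floatingIpPortMappings structure from a service's ports list.

    Assumption: srcPort <- port, dstPort <- targetPort (default: port).
    """
    mappings = []
    for entry in service_ports:
        port = entry["port"]
        mappings.append(
            {
                "srcPort": port,
                # targetPort defaults to the port value when it is omitted.
                "dstPort": entry.get("targetPort", port),
                # Kubernetes uses TCP when no protocol is given.
                "protocol": entry.get("protocol", "TCP"),
            }
        )
    return {"floatingIpPortMappings": {"portMappings": mappings}}


# Ports from the example spec above.
result = floating_ip_port_mappings(
    [{"port": 80, "protocol": "TCP", "targetPort": 80}]
)
print(result)

# A port entry without targetPort falls back to the port value.
fallback = floating_ip_port_mappings([{"port": 8080}])
print(fallback["floatingIpPortMappings"]["portMappings"][0]["dstPort"])
# prints 8080
```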

Example: NodePort Service Request Journey

Let's follow the journey of a NodePort service request from when the request gets to the node port until the service request reaches the backend pod.

NodePort service relies on kube-proxy. The Kubernetes network proxy (kube-proxy) is a daemon running on each node. It reflects the services defined in the cluster and manages the rules to load balance requests to a service's backend pods.

In the following example, the NodePort service apple-service is created and its endpoints are associated.

    user@domain ~ % kubectl describe svc apple-service
    Name:                     apple-service
    Namespace:                default
    Labels:                   <none>
    Annotations:              <none>
    Selector:                 app=apple
    Type:                     NodePort
    IP Families:              <none>
    IP:                       10.105.135.144
    IPs:                      10.105.135.144
    Port:                     <unset>  5678/TCP
    TargetPort:               5678/TCP
    NodePort:                 <unset>  31050/TCP
    Endpoints:                10.244.0.4:5678
    Session Affinity:         None
    External Traffic Policy:  Cluster
    Events:                   <none>

    user@domain ~ % kubectl get endpoints apple-service
    NAME            ENDPOINTS         AGE
    apple-service   10.244.0.4:5678   2d18h

Each time a service is created or deleted, or its endpoints are modified, kube-proxy updates the iptables rules on each node of the cluster. View the iptables chains to understand and follow the journey of the request.

First, the KUBE-NODEPORTS chain handles the packets arriving for services of type NodePort.

    $ sudo iptables -L KUBE-NODEPORTS -t nat
    Chain KUBE-NODEPORTS (1 references)
    target                     prot opt source    destination
    KUBE-MARK-MASQ             tcp  --  anywhere  anywhere     /* default/apple-service */ tcp dpt:31050
    KUBE-SVC-Y4TE457BRBWMNDKG  tcp  --  anywhere  anywhere     /* default/apple-service */ tcp dpt:31050

Each packet coming into port 31050 is first handled by the KUBE-MARK-MASQ chain, which tags the packet with a 0x4000 value.

Next, the packet is handled by the KUBE-SVC-Y4TE457BRBWMNDKG chain (referenced in the KUBE-NODEPORTS chain above). If we take a closer look at that one, we can see additional iptables chains:

    $ sudo iptables -L KUBE-SVC-Y4TE457BRBWMNDKG -t nat
    Chain KUBE-SVC-Y4TE457BRBWMNDKG (2 references)
    target                     prot opt source    destination
    KUBE-SEP-LCGKUEHRD52LOEFX  all  --  anywhere  anywhere     /* default/apple-service */

Inspect the KUBE-SEP-LCGKUEHRD52LOEFX chain to see that it defines the routing to the backend pod running the apple-service application.

    $ sudo iptables -L KUBE-SEP-LCGKUEHRD52LOEFX -t nat
    Chain KUBE-SEP-LCGKUEHRD52LOEFX (1 references)
    target          prot opt source      destination
    KUBE-MARK-MASQ  all  --  10.244.0.4  anywhere     /* default/apple-service */
    DNAT            tcp  --  anywhere    anywhere     /* default/apple-service */ tcp to:10.244.0.4:5678

This completes the journey of a NodePort service request from when the request gets to the node port until the service request reaches the backend pod.
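
The chain traversal above can be condensed into a short simulation. This is a simplified model of what the listed rules do to one packet, not an iptables implementation: the Packet class, the function name, and the node address 192.0.2.11 are assumptions for the example, and only the single endpoint from the output above is modeled.

```python
from dataclasses import dataclass

NODE_PORT = 31050               # NodePort of apple-service
MASQ_MARK = 0x4000              # value applied by KUBE-MARK-MASQ
BACKEND = ("10.244.0.4", 5678)  # the single endpoint of apple-service


@dataclass
class Packet:
    dst_ip: str
    dst_port: int
    protocol: str = "tcp"
    mark: int = 0


def traverse_nat_chains(packet):
    """Return the packet after the KUBE-NODEPORTS rules have been applied."""
    # KUBE-NODEPORTS: both rules match only tcp dpt:31050.
    if packet.protocol != "tcp" or packet.dst_port != NODE_PORT:
        return packet
    # Rule 1, KUBE-MARK-MASQ: tag the packet with the 0x4000 mark.
    packet.mark |= MASQ_MARK
    # Rule 2, KUBE-SVC-... then KUBE-SEP-...: DNAT to the backend pod.
    packet.dst_ip, packet.dst_port = BACKEND
    return packet


# 192.0.2.11 stands in for a node IP address (hypothetical).
request = traverse_nat_chains(Packet(dst_ip="192.0.2.11", dst_port=31050))
print(request.dst_ip, request.dst_port, hex(request.mark))
# prints 10.244.0.4 5678 0x4000
```

With more than one endpoint, the KUBE-SVC chain would list several KUBE-SEP targets and select among them; that selection is left out here because the example service has a single backend pod.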

Local Option Limitation in External Traffic Policy

NodePort service with externalTrafficPolicy set to Local is not supported in Contrail Networking Release 22.1.

The externalTrafficPolicy field specifies whether the service routes external traffic to node-local or cluster-wide endpoints.

- Local preserves the client source IP address and avoids a second hop for NodePort type services.

- Cluster obscures the client source IP address and might cause a second hop to another node.

Cluster is the default for externalTrafficPolicy.

Update or Delete a Service, or Remove a Pod from Service

- Update of service: Any modifiable field can be changed, excluding Name and Namespace. For example, a NodePort service can be changed to ClusterIP by changing the Type field in the service YAML definition.

- Deletion of service: A service, irrespective of Type, can be deleted with the command: kubectl delete service -n <name_space> <service_name>

- Removing pod from service: This can be achieved by changing the Labels and Selector on the service or pod.