Juniper Mist NAC Architecture

Watch the video to learn about the architecture of Juniper Mist Access Assurance. Learn more about microservices and how Juniper Mist uses them to provide high availability and scalability.

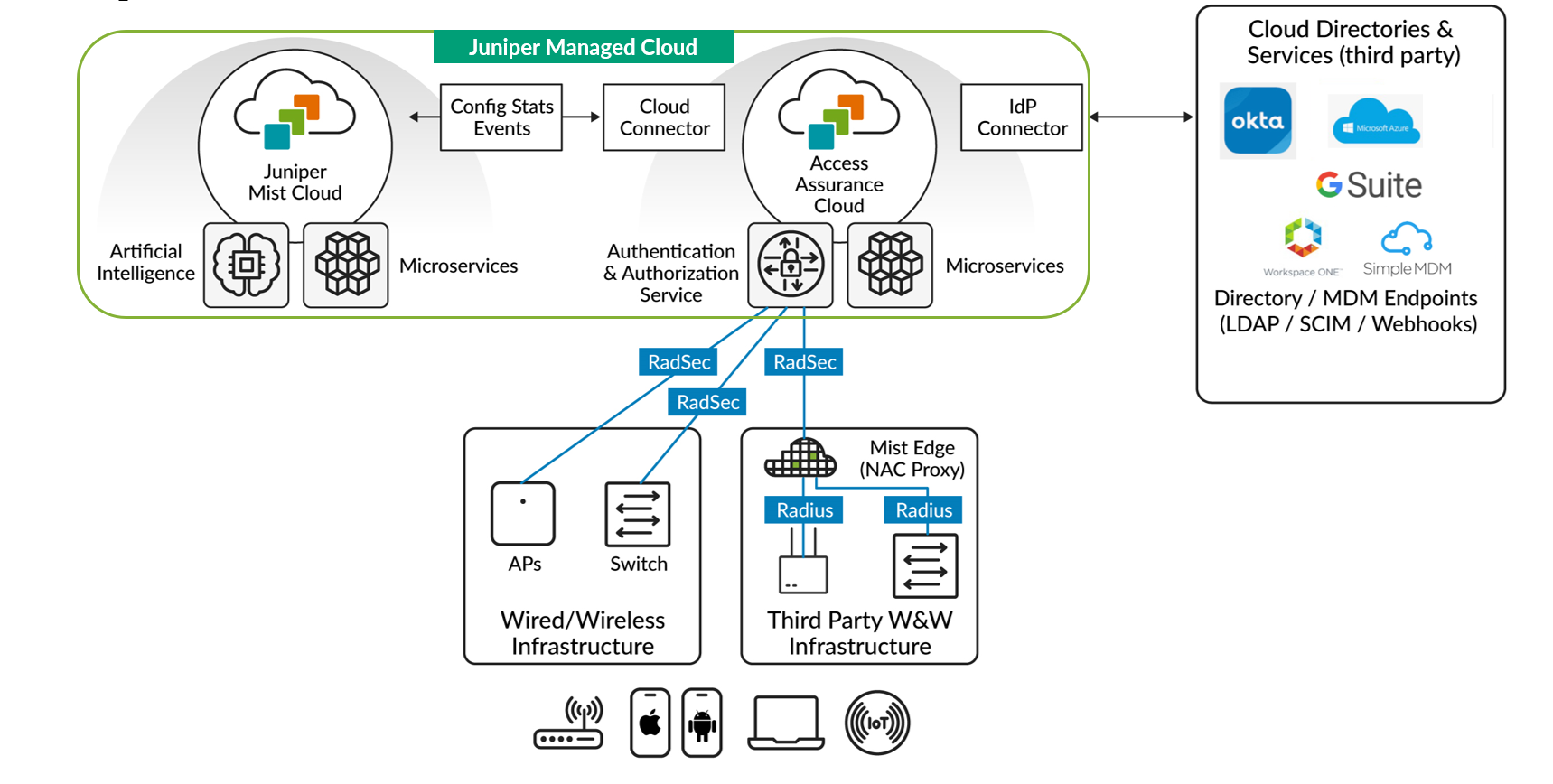

Juniper Mist Access Assurance uses a microservices architecture. This architecture prioritizes uptime, redundancy, and automatic scaling to support optimized network connectivity across wired, wireless, and wide area networks.

Watch the following video about the Mist Access Assurance architecture.

Architecture. So what we've done is we've separated the authentication service from the Mist cloud that you all know. The authentication service is now its own separate cloud, spread out around the globe in different PODs, or points of presence; we'll talk about that a little later on.

But what we have here is an authentication service cloud with its own set of microservices, where each and every feature, each and every component, has its own pool of microservices, whether it's responsible for enforcing policies, doing the actual user and device authentication, keeping session state, keeping the database of all endpoint records, or providing identity provider connectors or cloud connectors back to the Mist cloud.

All the authentication requests coming from Mist-managed infrastructure, whether from a Mist AP or a Juniper EX switch, are automatically wrapped in a secure, TLS-encrypted RadSec tunnel going back to our authentication service.

And from there, once the authentication has happened, the Mist authentication service has connections to third-party identity sources like Okta, Azure AD, or Ping Identity. Or it could be an MDM provider, such as Microsoft Intune or Jamf, just to get more context and more visibility into who is trying to connect and what type of device we're dealing with.

And what the Mist authentication service cloud will do is it will actually do all the heavy lifting, all the authentication. But it will send all the visibility metadata, all the session information, all the events, all the statistics back to the Mist cloud. So this is how you get all the visibility, all the end-to-end connection experience in one place, and you can manage everything from there.

In addition to that, say you're dealing with third-party network infrastructure: a Cisco wired switch, an Aruba controller, or a third-party vendor's AP or switch. The way we integrate there is by leveraging our Mist Edge application platform, which functions as the authentication proxy component.

What you can do is take your third-party infrastructure and point it via RADIUS to Mist Edge. From there, Mist Edge automatically converts it to secure RadSec and then performs the authentication. If you look at this architecture, we're really bringing the microservices architecture down to the authentication service.

All of that gives you the performance that is generally associated with microservices clouds. It also gives you the capability of getting feature updates on a biweekly basis and security patches as and when they're needed, without any downtime to your network or to the functionality that this brings.

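Juniper Mist Access Assurance strengthens its authentication service by integrating with external directory services (for example, Google Workspace, Microsoft Entra ID, and Okta Identity) and mobile device management (MDM) providers (for example, Jamf and Microsoft Intune). This integration helps identify users and devices accurately and strengthens security by granting network access only to verified, trusted identities.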

Figure 1 shows the framework of Mist Access Assurance NAC (Network Access Control).

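Separated from the Juniper Mist cloud, the Juniper Mist authentication service operates as a standalone cloud service. The authentication and authorization services are deployed globally across multiple points of presence (POPs) for improved performance and reliability.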

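The Juniper Mist authentication service uses a microservices approach: a dedicated group, or pool, of microservices handles the function of each service component, such as policy enforcement or user and device authentication. Likewise, separate microservices handle each additional task, such as session management, endpoint database maintenance, and connectivity to the Juniper Mist cloud.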
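To make the per-function pool idea concrete, here is a minimal Python sketch, assuming a simple in-process model: each function named above gets its own pool, and a pool can be resized without touching the others. The class `ServicePool`, the pool names and sizes, and round-robin selection are hypothetical illustrations, not the actual Mist implementation.

```python
from itertools import cycle


class ServicePool:
    """Interchangeable instances of ONE microservice (hypothetical model)."""

    def __init__(self, name: str, count: int) -> None:
        self.name = name
        self.scale_to(count)

    def scale_to(self, count: int) -> None:
        # Resize only this pool; every other pool is left untouched.
        self.instances = [f"{self.name}-{i}" for i in range(count)]
        self._next = cycle(self.instances)

    def pick(self) -> str:
        # Round-robin across this pool's instances.
        return next(self._next)


# One pool per function, mirroring the components named in the text.
# Pool names and initial sizes are illustrative assumptions.
POOLS = {
    name: ServicePool(name, 2)
    for name in ("policy", "authentication", "session-state",
                 "endpoint-db", "cloud-connector")
}


def dispatch(task: str) -> str:
    """Route a task to an instance of the pool responsible for it."""
    return POOLS[task].pick()
```

For example, `POOLS["authentication"].scale_to(4)` grows only the authentication pool while the policy pool keeps its two instances. Independent scaling of this kind is what lets one busy function grow without redeploying the rest.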

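Devices managed by the Juniper Mist cloud, such as Juniper® High-Performance Access Points or Juniper Networks® EX Series Switches, send authentication requests to the Juniper Mist authentication service. These requests are automatically encrypted using RadSec (RADIUS over TLS) and sent to the authentication service through a secure Transport Layer Security (TLS) tunnel.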
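RadSec carries ordinary RADIUS packets inside a TLS session over TCP (RFC 6614 registers TCP port 2083 for it). Because TCP is a byte stream, the receiver relies on the Length field in each RADIUS header to find packet boundaries. The following Python sketch encodes an Access-Request as defined in RFC 2865 and splits a received stream into whole packets. It omits the TLS handshake and is a generic protocol illustration, not Juniper's code.

```python
import struct

ACCESS_REQUEST = 1  # RADIUS Code for Access-Request (RFC 2865)
USER_NAME = 1       # RADIUS attribute type for User-Name (RFC 2865)
RADSEC_PORT = 2083  # IANA-registered TCP port for RADIUS over TLS (RFC 6614)


def encode_attribute(attr_type: int, value: bytes) -> bytes:
    """Encode one attribute as Type (1 byte), Length (1 byte), Value."""
    if len(value) > 253:  # Length is one byte and counts the 2-byte header
        raise ValueError("attribute value too long")
    return struct.pack("!BB", attr_type, len(value) + 2) + value


def encode_packet(code: int, identifier: int, authenticator: bytes,
                  attributes: bytes) -> bytes:
    """Prepend the 20-byte header: Code, Identifier, Length, Authenticator."""
    if len(authenticator) != 16:
        raise ValueError("authenticator must be 16 bytes")
    length = 20 + len(attributes)
    return struct.pack("!BBH16s", code, identifier, length,
                       authenticator) + attributes


def split_stream(buffer: bytes) -> tuple[list[bytes], bytes]:
    """Cut a TCP/TLS byte stream into whole RADIUS packets.

    RADIUS over TLS has no datagram boundaries, so the Length field of
    each header marks where a packet ends. Returns complete packets plus
    any trailing bytes belonging to a packet not yet fully received.
    """
    packets = []
    while len(buffer) >= 4:
        (length,) = struct.unpack("!H", buffer[2:4])
        if not 20 <= length <= 4096:  # RFC 2865 packet size limits
            raise ValueError(f"malformed RADIUS length {length}")
        if len(buffer) < length:
            break  # wait for more bytes from the TLS session
        packets.append(buffer[:length])
        buffer = buffer[length:]
    return packets, buffer
```

In a real client, the encoded bytes would be written to a socket wrapped with Python's `ssl` module; the ports and certificates that Mist-managed devices actually use are outside the scope of this sketch.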

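The Mist authentication service processes these requests and then connects to external directory services (such as Google Workspace, Microsoft Entra ID (formerly Azure AD), and Okta Identity) and to PKI and MDM providers (such as Jamf and Microsoft Intune). The purpose of these connections is to further authenticate, and provide context about, the devices and users trying to connect to the network.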

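In addition to performing authentication, the Juniper Mist authentication service relays key metadata, session information, and analytics back to the Juniper Mist cloud. This data sharing gives users end-to-end visibility and centralized management.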

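Juniper integrates third-party network infrastructure with Juniper Mist Access Assurance by using the Juniper Mist Edge platform as an authentication proxy. Third-party infrastructure communicates with the Juniper Mist Edge platform over RADIUS. The Juniper Mist Edge platform secures the communication with RadSec and then proceeds with authentication.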
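One reason a proxy is needed: some RADIUS attributes are protected with the shared secret between client and server. A RADIUS/UDP switch hides `User-Password` with its own configured secret, while RFC 6614 fixes the secret on a RadSec link to the literal string `radsec`, so a generic RADIUS-to-RadSec proxy has to decode and re-hide such attributes. The Python sketch below does this for `User-Password` using the RFC 2865 (section 5.2) algorithm. It assumes PAP-style passwords, ignores `Message-Authenticator` (which a real proxy must also recompute), and is not a description of Mist Edge internals; the function names are hypothetical.

```python
import hashlib

RADSEC_SECRET = b"radsec"  # shared secret mandated for RADIUS over TLS (RFC 6614)


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def hide_password(password: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """Hide User-Password as in RFC 2865, section 5.2."""
    # Pad with NULs to a multiple of 16 bytes (at least one block).
    size = max(16, -(-len(password) // 16) * 16)
    padded = password.ljust(size, b"\x00")
    hidden, prev = b"", authenticator
    for i in range(0, size, 16):
        block = _xor(padded[i:i + 16], hashlib.md5(secret + prev).digest())
        hidden += block
        prev = block  # chaining: the next key stream uses this ciphertext block
    return hidden


def unhide_password(hidden: bytes, secret: bytes, authenticator: bytes) -> bytes:
    """Reverse hide_password; trailing NUL padding is stripped."""
    plain, prev = b"", authenticator
    for i in range(0, len(hidden), 16):
        block = hidden[i:i + 16]
        plain += _xor(block, hashlib.md5(secret + prev).digest())
        prev = block
    return plain.rstrip(b"\x00")


def reencode_for_radsec(hidden: bytes, client_secret: bytes,
                        client_auth: bytes, upstream_auth: bytes) -> bytes:
    """Re-hide a password received over RADIUS/UDP for the RadSec leg."""
    password = unhide_password(hidden, client_secret, client_auth)
    return hide_password(password, RADSEC_SECRET, upstream_auth)
```

The proxy only ever holds the plaintext briefly in memory; on the upstream leg, confidentiality comes mainly from the TLS session rather than from the MD5-based hiding.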

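This cloud-native microservices architecture strengthens the authentication and authorization services and supports regular feature updates and necessary security patches while minimizing network downtime.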

Watch the following video about the Mist Access Assurance high availability architecture.

Design of the Mist Access Assurance service. What we've done is place various access assurance clouds that do the authentication in various regions around the globe, so that you have a POD on the West Coast, on the East Coast, in Europe, in Asia/Pac, et cetera.

The way high availability works, which is actually a very nice and tidy way of doing things, is by looking at the physical location of the site where you have your Mist APs or Juniper switches. Based on geo-affinity, that is, on the geolocation of that site, the authentication traffic (the RadSec channels these devices form) is automatically redirected to the nearest access assurance cloud available around the globe.

So if you have a site, as you can see in this example, on the West Coast in the United States, it will automatically be redirected to the Mist Access Assurance cloud on the West Coast as well. Similarly, if you have a site on the East Coast, that authentication traffic will be redirected to the East Coast POD as well.

This provides optimal latency no matter where your physical sites are, while they're all still managed from the same Mist dashboard.

In addition, this also serves as the redundancy mechanism. Should anything happen to one of the access assurance clouds, or to one of its components, resulting in a service disruption, all the authentication traffic is automatically redirected to the next nearest access assurance cloud available around the globe.
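The geo-affinity and failover behavior described above can be approximated as "send each site to the nearest healthy POD." This Python sketch assumes great-circle (haversine) distance as the meaning of "nearest" and uses hypothetical POD names and coordinates; Mist's actual selection logic and POD locations may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    lat: float
    lon: float
    healthy: bool = True


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometres."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def select_pod(site_lat: float, site_lon: float, pods: list[Pod]) -> Pod:
    """Return the nearest healthy POD; unhealthy PODs are skipped (failover)."""
    candidates = [p for p in pods if p.healthy]
    if not candidates:
        raise RuntimeError("no healthy POD available")
    return min(candidates,
               key=lambda p: haversine_km(site_lat, site_lon, p.lat, p.lon))


# Hypothetical POD locations, for illustration only.
PODS = [
    Pod("us-west", 37.3, -121.9),
    Pod("us-east", 38.9, -77.4),
    Pod("eu", 50.1, 8.7),
    Pod("apac", 1.35, 103.8),
]
```

With these values, a site in Seattle (47.6, -122.3) maps to `us-west`; after setting `PODS[0].healthy = False`, the same site fails over to `us-east`, the next nearest POD, which mirrors the redundancy behavior described in the video.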

Watch the following video about the Mist Access Assurance workflow.

Mist Access Assurance authentication service.

So what does it do?

First of all, it's there to identify what or who is trying to connect to our network. Whether it's a wired or wireless device, we want to understand the identity of that user or device: whether it uses secure, certificate-based authentication to identify itself, uses credentials to authenticate, or is just a headless IoT device with only a MAC address that we can look at.

We want to understand the security posture of the device. We want to understand where it belongs in the customer organization, or where it's physically located, which site it belongs to.

Once we have the user fingerprint, once we understand what type of user or device is trying to connect, we want to assign a policy. We want to say, OK, if it's an employee, a device that's managed by IT that's using a certificate to identify itself, it's a fairly secure device.

We want to assign a VLAN with fewer restrictions that provides full access to network resources. We can optionally assign a group-based policy tag, or assign a role to apply a network policy to that user.
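The fingerprint-then-policy flow described above resembles an ordered rule table in which the first matching rule decides the VLAN, group-based policy tag, and role. This Python sketch assumes first-match semantics with a default deny; the authentication-type labels, rule conditions, VLAN IDs, tag values, and role names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Fingerprint:
    auth_type: str    # assumed labels: "eap-tls", "eap-ttls", or "mab"
    it_managed: bool  # whether IT manages the device (e.g. via MDM)


@dataclass
class PolicyResult:
    allow: bool
    vlan: Optional[int] = None
    gbp_tag: Optional[int] = None  # group-based policy tag
    role: Optional[str] = None


# Ordered rules: the first match wins. All values are illustrative.
RULES: list[tuple[Callable[[Fingerprint], bool], PolicyResult]] = [
    # IT-managed device presenting a certificate: least restricted VLAN.
    (lambda f: f.auth_type == "eap-tls" and f.it_managed,
     PolicyResult(allow=True, vlan=10, gbp_tag=100, role="employee")),
    # Credential-based login: more restricted access.
    (lambda f: f.auth_type == "eap-ttls",
     PolicyResult(allow=True, vlan=20, role="byod")),
    # Headless device identified only by MAC address.
    (lambda f: f.auth_type == "mab",
     PolicyResult(allow=True, vlan=30, role="iot")),
]
DEFAULT = PolicyResult(allow=False)  # nothing matched: deny


def assign_policy(fp: Fingerprint) -> PolicyResult:
    """Return the result of the first rule whose condition matches."""
    for matches, result in RULES:
        if matches(fp):
            return result
    return DEFAULT
```

Keeping rules ordered and explicit also makes it easy to answer "which policy is assigned to this user?", since the matching rule can be reported alongside the result.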

Lastly, but most importantly, we want to validate the end-to-end connectivity experience across the full stack. We don't care just about the authentication and authorization process; we want to cover the whole connection process end to end.

So we want to answer the question: is the user or device able to authenticate, and if not, why exactly? Is it an expired certificate? Is it a disabled account?

Is it a policy that explicitly denies that specific device? We also want to answer the question: which policy is assigned to the user?

And after authentication, we want to confirm that the user is getting a good experience: that the device is able to associate with the AP or connect to the right port, get the right VLAN assigned, get an IP address through DHCP, resolve ARP and DNS, and finally get access to the network and move packets left and right.

We want to validate that whole path, not just some parts of it. This is where Marvis anomaly detection and the Marvis conversational interface help us with troubleshooting.
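Validating "the whole path" amounts to checking each connection stage in order and reporting the first one that did not succeed. This Python sketch assumes a simple dictionary of per-stage outcomes; the stage names are illustrative, and association (or wired port link-up) is placed before authentication because 802.1X runs after the link is established.

```python
from typing import Mapping, Optional

# Connection stages in order. "association" also stands in for wired
# port link-up; the names are illustrative assumptions.
STAGES = ("association", "authentication", "vlan", "dhcp", "arp", "dns")


def first_failure(results: Mapping[str, bool]) -> Optional[str]:
    """Return the first stage that failed or never completed, else None."""
    for stage in STAGES:
        # A stage with no recorded outcome is treated as not completed.
        if not results.get(stage, False):
            return stage
    return None
```

Reporting the first failing stage, rather than only "authentication succeeded," is what distinguishes a NAC problem from a DHCP or DNS problem further down the path.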

For more information about scaling the Mist Access Assurance architecture, watch the following video.

Now let's bring scale into the picture and take a look at a typical NAC deployment in a production environment.

When you're looking at any kind of scale, one box will obviously not be enough, not just from a redundancy perspective but even more so from a scaling perspective, because you'll need to distribute the load: you'll need to load balance your authentications, your endpoint databases, and things like that.

So you're typically looking at deploying a clustered solution, where you have your policy or authentication nodes, the brains of the solution, somewhere close to your NAS devices (your APs and switches), so that they authenticate clients and do the heavy lifting. At the top, you have a pair of management nodes. This is where you configure your NAC policy and where you have the visibility logs and things like that.

So that's the cluster deployment you're looking at. And obviously, in front of it, you need to put a load balancer to distribute the authentication requests, the RADIUS requests coming in from various devices, across all these policy nodes.
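One detail such a load balancer typically has to handle: an EAP authentication spans several Access-Request/Access-Challenge round trips, and the policy node keeps state for that conversation, so every request from one endpoint should reach the same node. A common approach is persistence keyed on the `Calling-Station-Id` attribute (usually the client MAC address). This Python sketch hashes that value to pick a node; the node names are hypothetical.

```python
import hashlib


def pick_node(calling_station_id: str, nodes: list[str]) -> str:
    """Pin every RADIUS request from one endpoint to the same policy node."""
    if not nodes:
        raise ValueError("no policy nodes available")
    # Normalize MAC formatting so "AA-BB-..." and "aa:bb:..." hash identically.
    key = calling_station_id.strip().lower().replace("-", ":").encode()
    digest = hashlib.sha256(key).digest()
    # Modulo hashing: simple, but remaps many endpoints when nodes change.
    return nodes[int.from_bytes(digest[:8], "big") % len(nodes)]
```

Plain modulo hashing remaps most endpoints whenever a node is added or removed; consistent hashing would reduce that churn. It is one more design decision that a customer-managed cluster leaves to the operator.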

And today, this whole deployment is customer managed. It's a customer problem to solve. You need to design for it. You need to scale for it. You need to make sure that you can change that when you need to add scale. You need to make sure that you will maintain this during software upgrades, maintenance windows, and things of that nature.

So that becomes a main challenge. These solutions are becoming complex very, very quickly.

And even then, these solutions still lack insight and end-to-end visibility, because we're still looking at a standalone NAC deployment that is an overlay on your current network infrastructure.

So even vendors who offer both the infrastructure and the NAC will not give you end-to-end user connectivity visibility in one place. You'll troubleshoot your NAC in one place, and your network (your controller, switch, AP, or whatever) in a totally different place.

And think about what happens when you need to do maintenance, or when you just want to get a new feature. How would you upgrade this kind of deployment? That requires a lot of investment on the customer side.

Now, just as an example, I'll put the reference here.

We'll take ClearPass as an example, but it's the same for other vendors.

ClearPass has a clustering tech note that is 50 pages long just on how to set up a cluster.

Nothing else, not about scaling, nothing else: it's just about clustering. Think about the complexity here.

For an overview of the microservices-based architecture, watch the following video.

What do we want from a NAC solution today, if we would do it from a clean sheet of paper?

First of all, the architecture has to be microservices based. Ideally, it should be a cloud NAC offering.

It should be managed by the vendor. It should be highly available. Feature upgrades should come periodically and automatically, without requiring any downtime whatsoever. Most importantly, the architecture should be API-based and allow for cross-platform integration.

Secondly, it has to be IT-friendly. We know existing solutions have been so complex that you needed an expert on the IT team dedicated just to NAC.

We want that new NAC product to be tightly integrated into network management and operations. So you want a single pane of glass that manages your whole full-stack network as well as all of your network access control rules, and that provides end-to-end visibility.

Lastly, with AI being there to help us solve network-specific problems, we want to extend that and make sure that we can capture that end-to-end user connectivity experience and answer questions. Will this affect my end user network experience?

Is it a client configuration problem? Is the network to blame, or a network service? Is my NAC policy causing users to have a bad experience and connectivity issues throughout the day?